
In This Year's College Entrance Examination English Test, CMU's reStructured Pre-training Scored 134, Far Surpassing GPT3

- PHPz (forwarded)

- 2023-04-09 10:21:05 (1798 views)

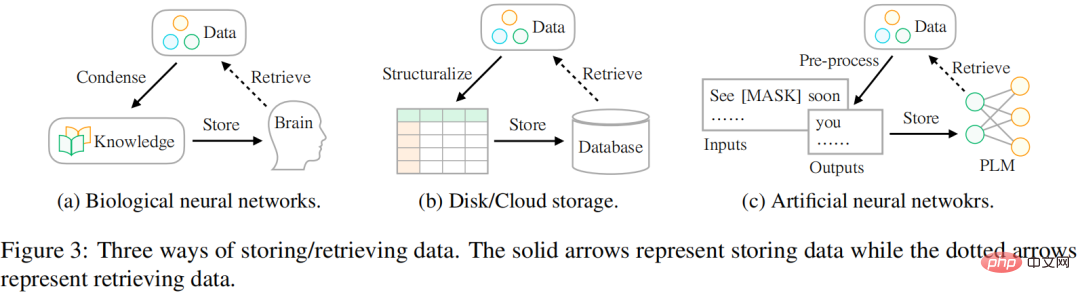

The way we store data is changing, from biological neural networks to artificial neural networks. The most familiar case is the brain itself storing data. As the amount of available data continues to grow, people have turned to external devices such as hard drives and cloud storage. With the rise of deep learning, another promising storage technology has emerged: artificial neural networks that store the information contained in data.

The researchers believe that the ultimate goal of data storage is to better serve human life, and that how data is accessed is as important as how it is stored. However, storage and access methods differ from one medium to another, and people have long worked to bridge this gap in order to make better use of the information that exists in the world. As shown in Figure 3:

- For biological neural networks (such as the human brain), humans receive curriculum-based (i.e., knowledge) education from a very young age, so that they can retrieve specific data to cope with a complex and changing life.

- For external device storage, people usually structure the data according to a certain pattern (such as a table), and then use a specialized language (such as SQL) to efficiently retrieve the required information from the database.

- For artificial neural network-based storage, researchers use self-supervised learning to store data from large corpora (i.e., pre-training) and then use the network for a variety of downstream tasks (e.g., sentiment classification).

Researchers from CMU have proposed a new way to access data. Data contains various types of information that can serve as pre-training signals to guide the optimization of model parameters, and the study organizes data in a structured manner, with the signal as the unit. This is similar to storing data in a database: the data is first structured into tables or JSON, so that exactly the required information can be retrieved through a specialized language such as SQL.

In addition, the study argues that valuable signals exist abundantly in all kinds of data in the world, not only in manually curated supervised datasets. What researchers need to do is (a) identify the data, (b) reorganize the data with a unified language, and (c) integrate and store it in a pre-trained language model. The study calls this learning paradigm reStructured Pre-training (RST). The researchers liken the process to a "treasure hunt in a mine." Different data sources such as Wikipedia are like mines rich in gems: they contain rich information, such as named entities from hyperlinks, that can provide signals for model pre-training. A good pre-trained language model (PLM) should clearly understand the composition of the various signals in the data in order to provide accurate information according to the different needs of downstream tasks.

Paper address: https://arxiv.org/pdf/2206.11147.pdf

Treasure Hunting for Pre-trained Language Models

This research proposes a new learning paradigm for natural language processing tasks, namely RST, which re-emphasizes the role of data and regards model pre-training and downstream fine-tuning as data storage and access processes. On this basis, the research follows a simple principle: a good storage mechanism should not only be able to cache large amounts of data, but should also make access convenient.

After overcoming some engineering challenges, the research achieved this by pre-training on restructured data (composed of various valuable signals rather than the original raw data). Experiments show that the RST model not only significantly outperforms the best existing systems (e.g., T0) on 52/55 popular datasets from various NLP tasks (e.g., classification, information extraction, fact retrieval, and text generation), but also does so without fine-tuning on the downstream tasks. It has also achieved excellent results on the English test of China's most authoritative college entrance examination (Gaokao), which is taken by millions of students every year.

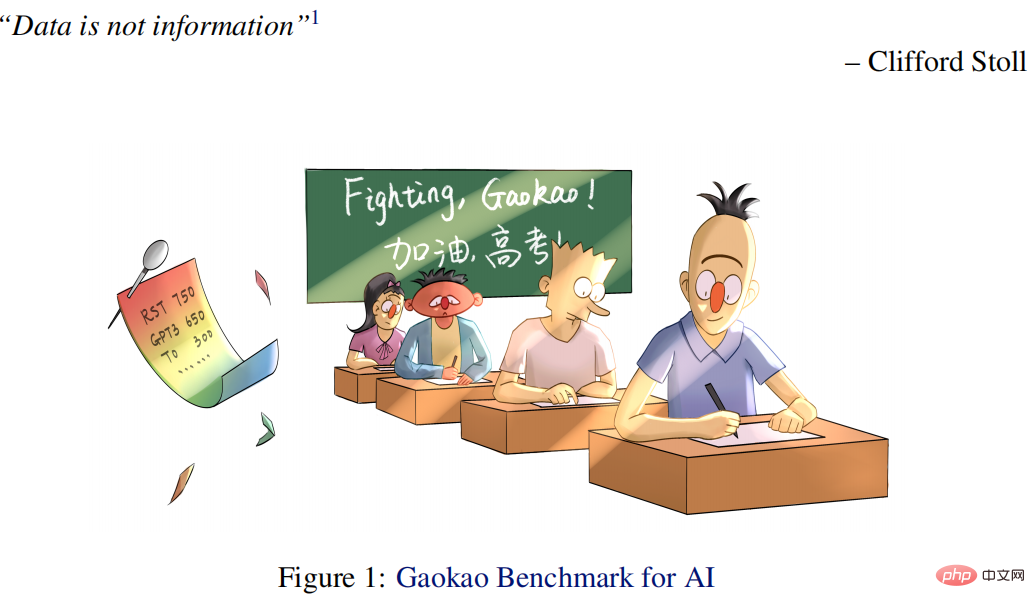

Specifically, the college entrance examination AI (Qin) proposed in the paper scores 40 points higher than the student average and 15 points higher than GPT3 while using 1/16 of GPT3's parameters. In particular, Qin scored 138.5 (out of 150) on the 2018 English test.

In addition, the study released the Gaokao Benchmark online submission platform, which contains 10 annotated English test papers from 2018 to 2021 (and will be expanded every year), allowing more AI models to take part in the college entrance examination. The research also establishes a relatively level test bed for competition between humans and AI, helping us better understand where we stand. Furthermore, on the 2022 College Entrance Examination English test held a few days earlier (2022.06.08), the AI system scored 134 points, while GPT3 scored only 108 points.

The main contributions of this study include:

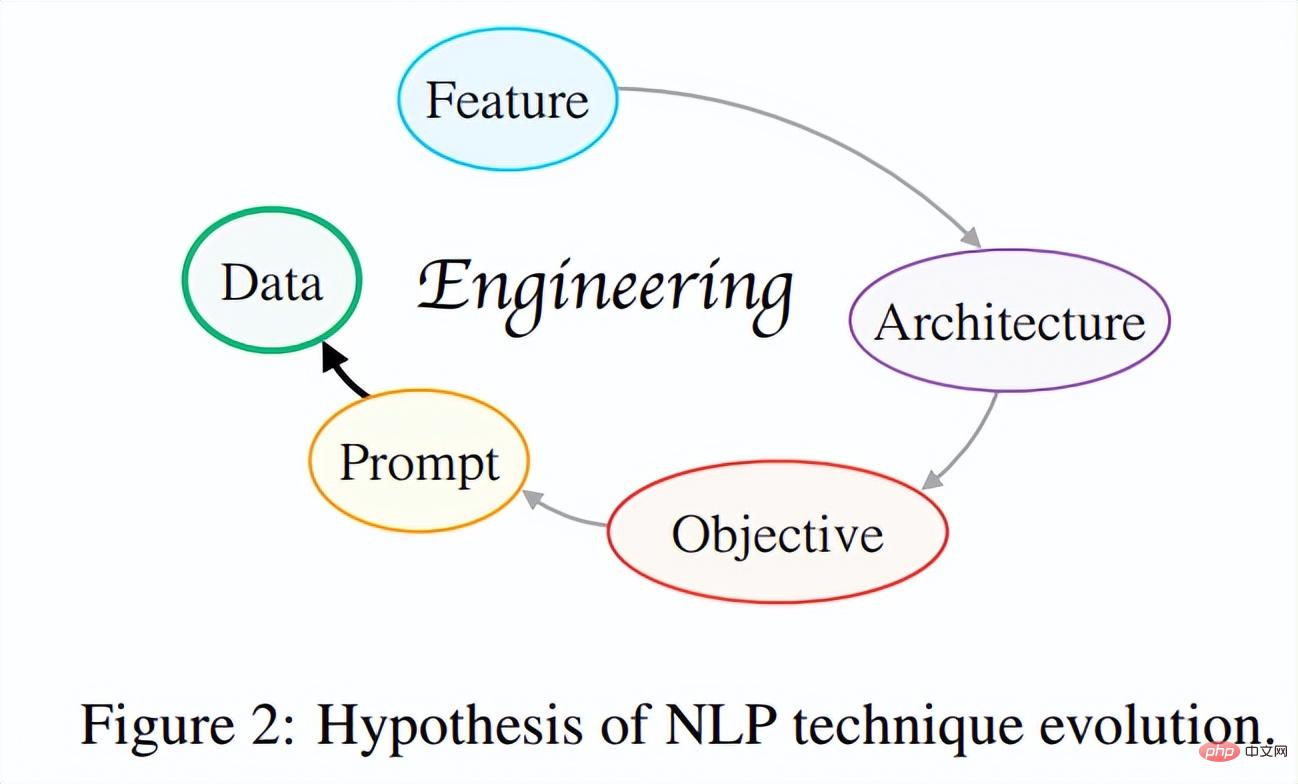

(1) A hypothesis on the evolution of NLP methods. This research attempts to establish an "NLP technology evolution hypothesis" from a global perspective by exploring the intrinsic connections between developments in modern NLP technology. In short, the core idea of the hypothesis is that technology always iterates in a direction where developers need to do less in order to build better and more versatile systems.

So far, the evolution of NLP technology has gone through multiple iterations, as shown in Figure 2: Feature Engineering → Architecture Engineering → Objective Engineering → Prompt Engineering, and it is now moving towards more practical and effective data-centric engineering. The researchers hope that more scientific researchers will be inspired to think critically about this issue, grasp the core driving force of technological progress, find a "gradient ascent" path for academic development, and do more scientifically meaningful work.

(2) A new paradigm based on the evolution hypothesis: reStructured Pre-training. This paradigm treats model pre-training/fine-tuning as a data storage/access process and claims that a good storage mechanism should make the intended data easily accessible. With this paradigm, the research was able to unify 26 different types of signals in the world (e.g., the entities in a sentence) from 10 data sources (e.g., Wikipedia). The general model trained on this basis achieved strong generalization on a variety of tasks, covering 55 NLP datasets.

(3) An AI for the college entrance examination. Based on the above paradigm, the study developed an AI system, Qin, specifically for the college entrance examination English test. According to the study, this is the world's first deep learning-based AI system for the college entrance examination English test. Qin has achieved outstanding results on college entrance examination papers from multiple years: 40 points higher than the student average, and 15 points higher than GPT-3 while using only 1/16 of GPT-3's parameters. In particular, on the 2018 English test Qin received a high score of 138.5 points (out of 150), with perfect scores in both listening and reading comprehension.

(4) Rich resources. (a) To track the progress of existing AI technology towards human-level intelligence, the study released a new benchmark, Gaokao Benchmark. It provides a comprehensive assessment across a variety of practical tasks and domains in real-world scenarios, and it also provides human performance scores so that AI systems can be directly compared with humans. (b) The study uses ExplainaBoard (Liu et al., 2021b) to set up an interactive leaderboard for Gaokao Benchmark, so that more AI systems can easily take part and obtain scores automatically. (c) All resources can be found on GitHub.

In addition, the success of AI in the college entrance examination English test task has provided researchers with many new ideas: AI technology can empower education and help solve a series of problems in education and teaching.

For example, it can (a) help teachers automate grading, (b) help students answer questions about assignments and explain them in detail, and (c) more importantly, promote educational equity by making educational services of the same quality accessible to most families. This work integrates 26 different signals from around the world in a unified way for the first time, and rather than trying to distinguish between supervised and unsupervised data, it is concerned with how much of the information nature gives us can be used, and how. The outstanding performance on more than 50 datasets from various NLP tasks shows the value of data-centric pre-training and should inspire more future exploration.

reStructured Pre-training

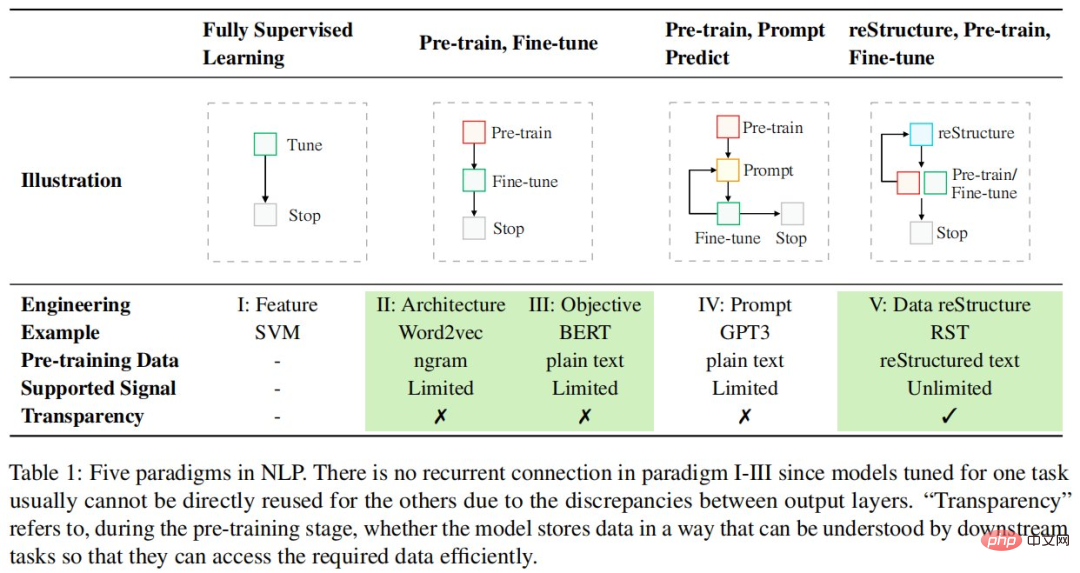

The paradigms for solving NLP tasks have been changing rapidly, and the change is still under way. The following table lists the five paradigms in NLP:

Unlike the existing model-centric design paradigm, this research thinks from the perspective of data in order to make maximum use of existing data. Specifically, the study adopts a data storage and access view, in which the pre-training stage is regarded as the data storage process, while downstream tasks (e.g., sentiment classification) based on the pre-trained model are regarded as the process of accessing data from the pre-trained model. It claims that a good data storage mechanism should make the stored data more accessible.

To achieve this goal, the study treats data as an object composed of different signals, and argues that a good pre-trained model should (1) cover as many signal types as possible and (2) provide precise access mechanisms to these signals when downstream tasks require them. Generally speaking, this new paradigm consists of three steps: restructuring, pre-training, and fine-tuning.

The new paradigm of restructuring, pre-training, and fine-tuning highlights the importance of data, and researchers need to invest more engineering effort in data processing.

Restructuring Engineering

Signal Definition

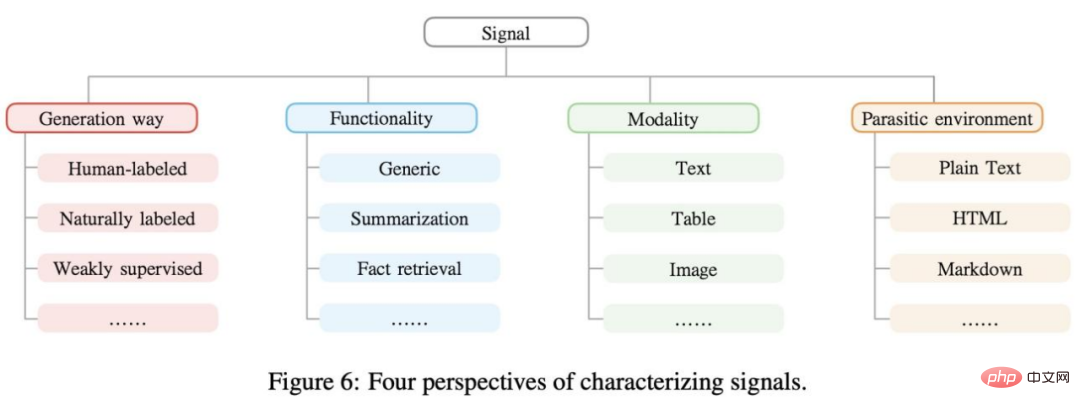

Signals are useful pieces of information present in data that can provide supervision for machine learning models, and they are expressed as n-tuples. For example, in the sentence "Mozart was born in Salzburg", "Mozart" and "Salzburg" can be considered signals for named entity recognition. Typically, signals can be clustered from different perspectives, as shown in Figure 6.
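The idea of a signal as an n-tuple can be sketched in a few lines of Python. This is only an illustration: the `Signal` class and its field names are hypothetical and are not the schema used in the paper.

```python
from typing import NamedTuple


class Signal(NamedTuple):
    """One supervision signal stored as an n-tuple (hypothetical schema)."""
    signal_type: str   # what kind of supervision this is
    text: str          # the source sentence the signal was found in
    target: tuple      # the information the signal exposes


sentence = "Mozart was born in Salzburg"
signal = Signal("named_entity", sentence, ("Mozart", "Salzburg"))

# Sanity check: every target span must be recoverable from the source text.
assert all(span in signal.text for span in signal.target)
print(signal)
```

Keeping the signal type next to the text and the target is one simple way to let many kinds of signals share a single storage format.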

Data Mines

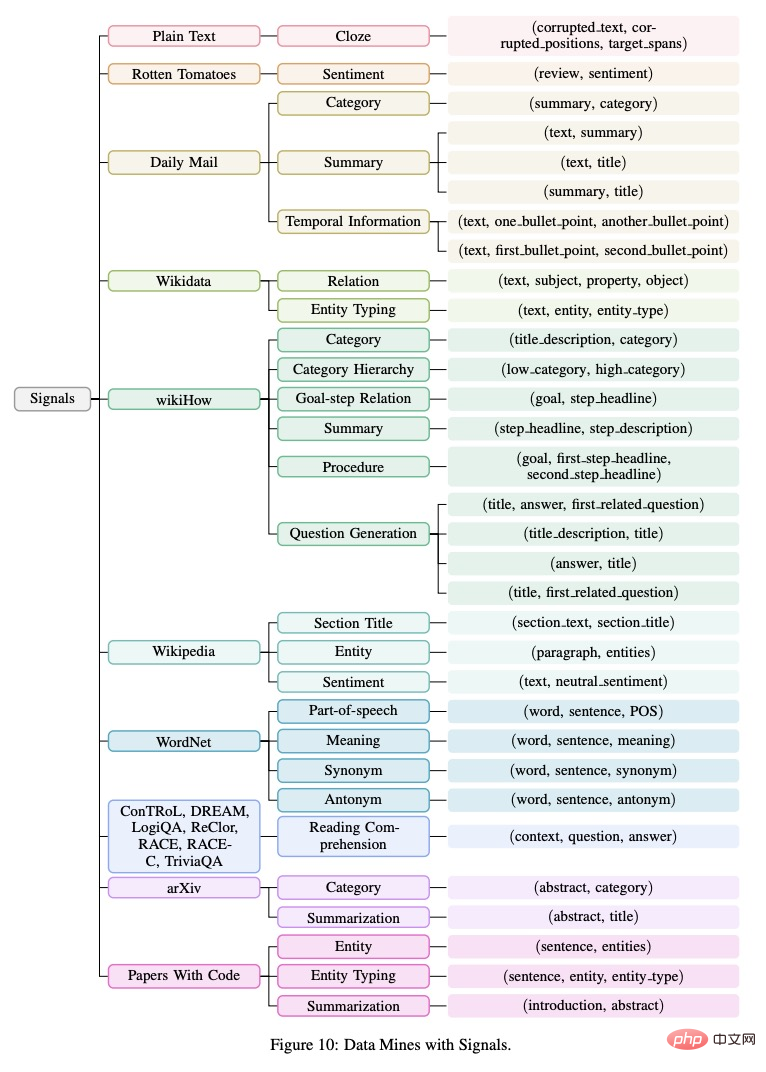

Real-world data contains many different types of signals, and restructured pre-training allows these signals to be fully exploited. The study organized the collected signals (n-tuples) in a tree diagram, as shown in Figure 10 below.

Signal Extraction

The next step is signal extraction and processing, which involves obtaining raw data from data mines of different modalities, data cleaning, and data normalization. Existing methods fall roughly into two types: (1) rule-based and (2) machine learning-based. In this work, the research mainly focuses on rule-based signal extraction strategies and leaves higher-coverage methods for future work.
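As a rough illustration of rule-based extraction, the sketch below pulls entity signals out of hyperlink markup with a regular expression and then applies a small normalization step. The `[[Target|anchor]]` markup format and the function name `extract_entity_signals` are assumptions made for this example, not the paper's actual pipeline.

```python
import re

# Matches [[Target]] or [[Target|anchor text]]; this markup is an assumption.
WIKI_LINK = re.compile(r"\[\[([^\]|]+)(?:\|([^\]]+))?\]\]")


def extract_entity_signals(raw: str) -> tuple[str, list[str]]:
    """Return (plain_text, entities) extracted from wiki-style markup."""
    # Rule: every link target is treated as an entity.
    entities = [m.group(1).strip() for m in WIKI_LINK.finditer(raw)]
    # Cleaning: replace each link with its visible text (anchor, else target).
    plain = WIKI_LINK.sub(lambda m: (m.group(2) or m.group(1)).strip(), raw)
    # Normalization: collapse repeated whitespace.
    plain = re.sub(r"\s+", " ", plain).strip()
    return plain, entities


raw = "[[Wolfgang Amadeus Mozart|Mozart]] was born in  [[Salzburg]]."
plain, entities = extract_entity_signals(raw)
print(plain)
print(entities)
```

A rule like this is cheap and precise, but it only finds entities that happen to be linked, which is the coverage limitation the text attributes to rule-based methods.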

Signal Restructuring

After different signals have been extracted from the various data mines, the next important step is to unify them into a fixed form so that all information is stored consistently in the model during pre-training. The prompt method (Brown et al., 2020; Liu et al., 2021d) can achieve this goal, and in principle, with appropriate prompt design, it can unify almost all types of signals into a language model style.
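A minimal sketch of this unification step: each signal tuple is rendered through a prompt template into a (source, target) text pair, so that very different signals end up in one text-to-text format. The templates and the `restructure` helper below are hypothetical and are not the prompts used in the paper.

```python
# Hypothetical prompt templates keyed by signal type (not the paper's prompts).
TEMPLATES = {
    "named_entity": ("{text} List the entities mentioned in the text.", "{answer}"),
    "sentiment": ("{text} Is this review positive or negative?", "{answer}"),
}


def restructure(signal_type: str, text: str, answer: str) -> dict:
    """Turn one signal tuple into a (source, target) text pair."""
    source_tpl, target_tpl = TEMPLATES[signal_type]
    return {
        "source": source_tpl.format(text=text, answer=answer),
        "target": target_tpl.format(text=text, answer=answer),
    }


pairs = [
    restructure("named_entity", "Mozart was born in Salzburg.", "Mozart, Salzburg"),
    restructure("sentiment", "The film was a delight.", "positive"),
]
for pair in pairs:
    print(pair["source"], "->", pair["target"])
```

Because every signal becomes plain text in and plain text out, a single sequence-to-sequence model can be pre-trained on all of them together.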

The study divided signals into two broad categories: general signals and task-related signals. The former contains basic language knowledge and can benefit all downstream tasks to a certain extent, while the latter can benefit some specific downstream tasks.

Experiments on 55 commonly used NLP datasets

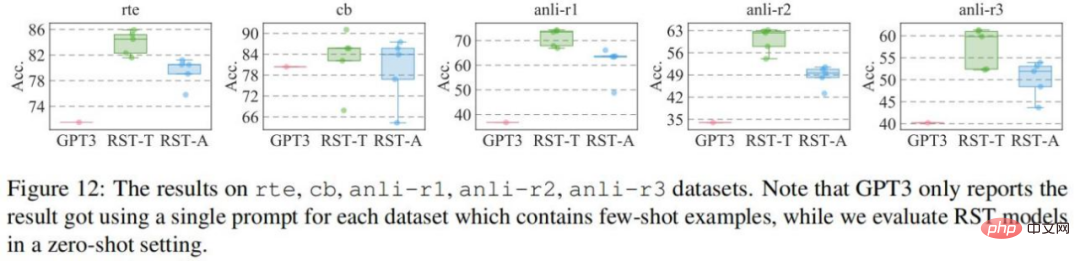

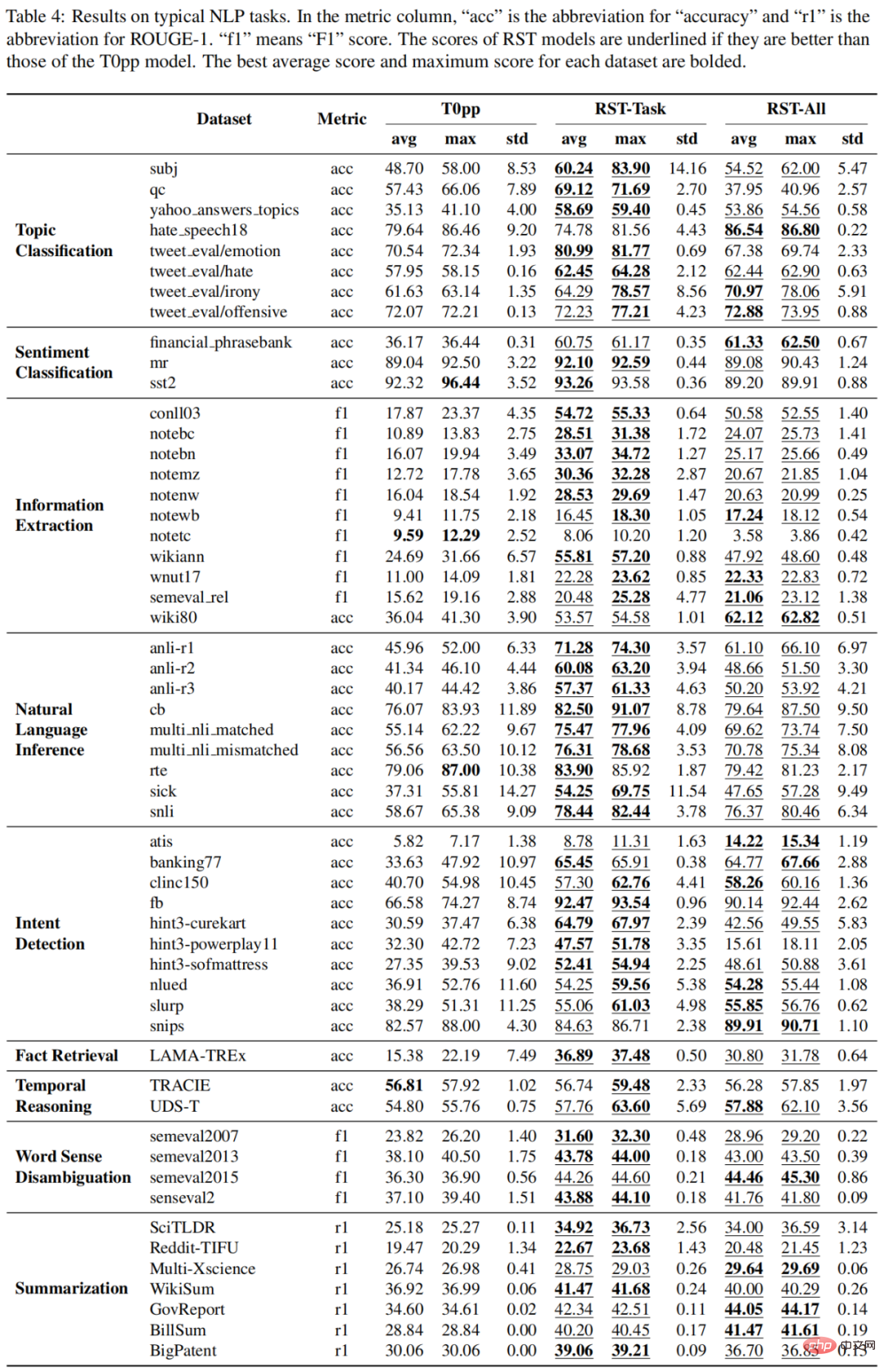

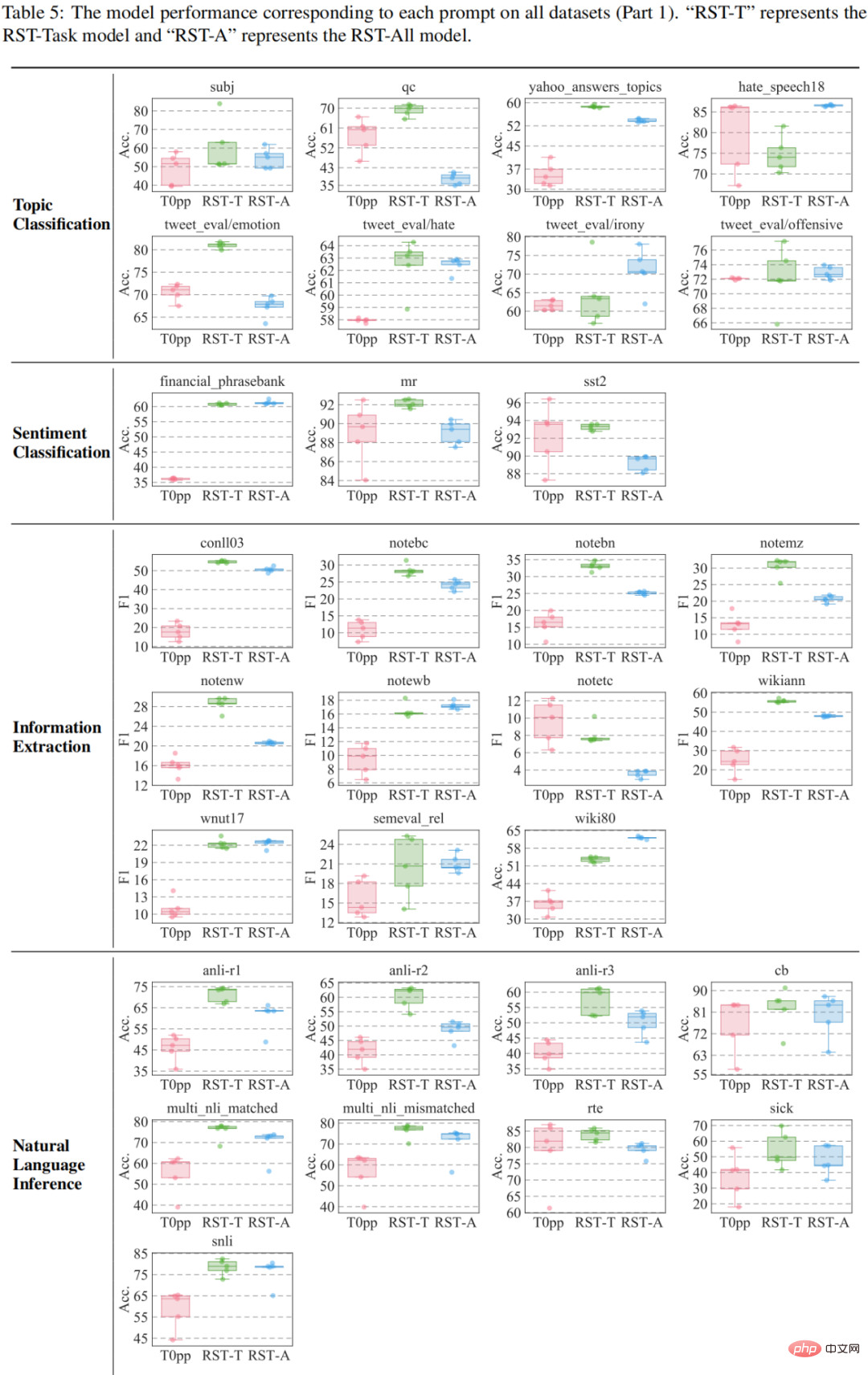

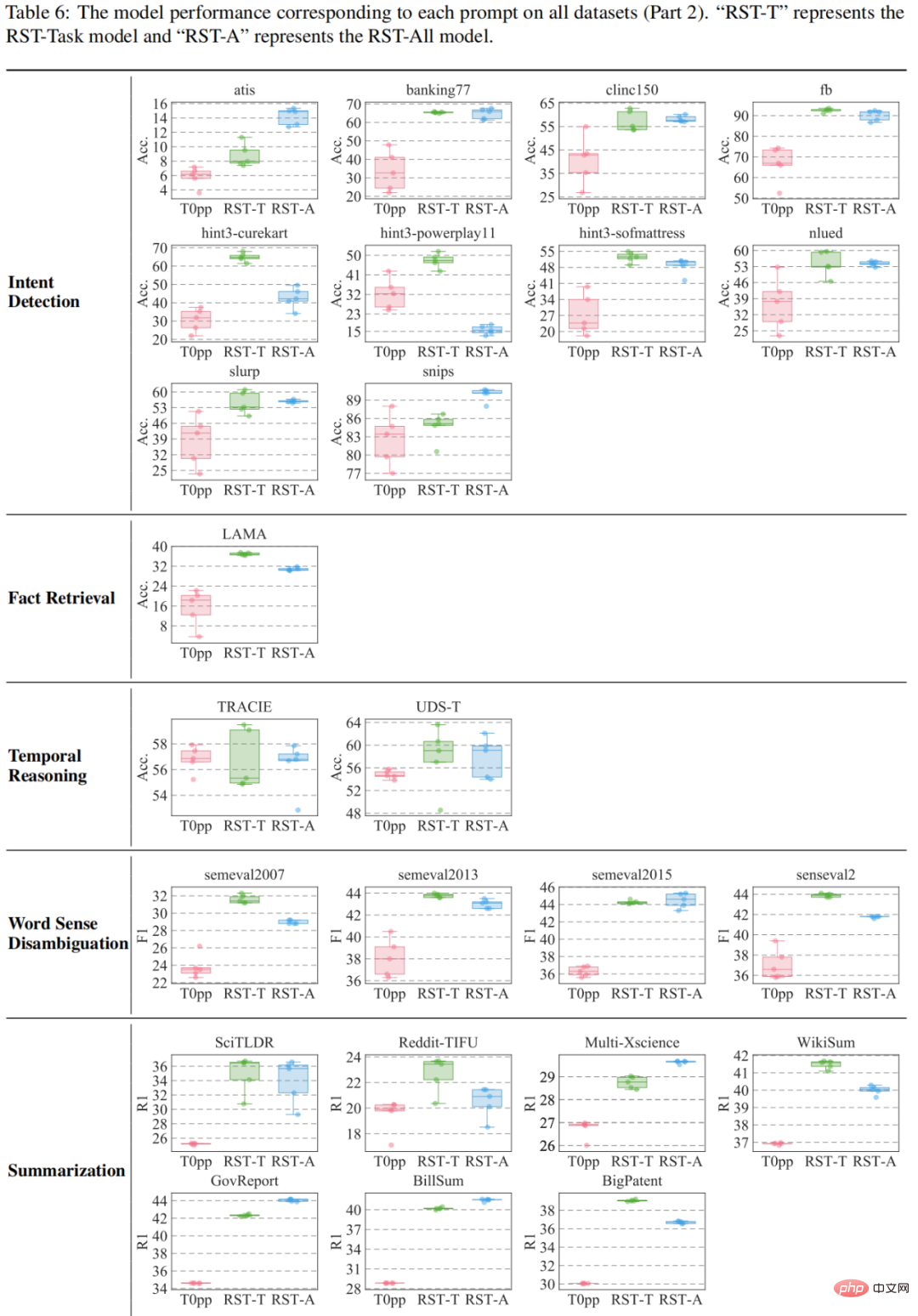

The study evaluates RST on 55 datasets and compares it with GPT3 and T0pp. The results of the comparison with GPT3 are shown in the figure: on the four datasets other than cb, both RST-All and RST-Task achieve better zero-shot performance than GPT3's few-shot learning. The cb dataset is the smallest of these datasets, with only 56 samples in its validation set, so the performance of different prompts on it fluctuates greatly.

The results of the comparison with T0pp are shown in Tables 4-6. In terms of average performance, RST-All beats T0pp on 49 of the 55 datasets, and in terms of maximum performance it wins on 47/55 datasets. Likewise, RST-Task outperforms T0pp on 52 datasets in terms of average performance and on 50/55 datasets in terms of maximum performance. This illustrates the advantage of restructured learning.

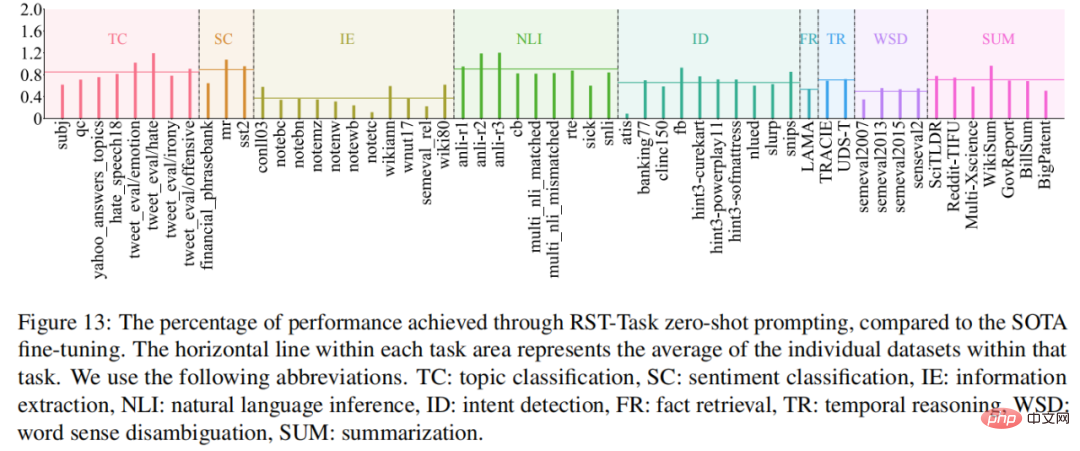

What tasks is the best-performing model, RST-Task, good at? To answer this question, the study compares the performance of RST-Task in the zero-shot setting with current SOTA models; the results are shown in Figure 13. RST-Task is good at topic classification, sentiment classification, and natural language inference tasks, but performs poorly on information extraction tasks.

College Entrance Examination Experiment: Towards Human-Level AI

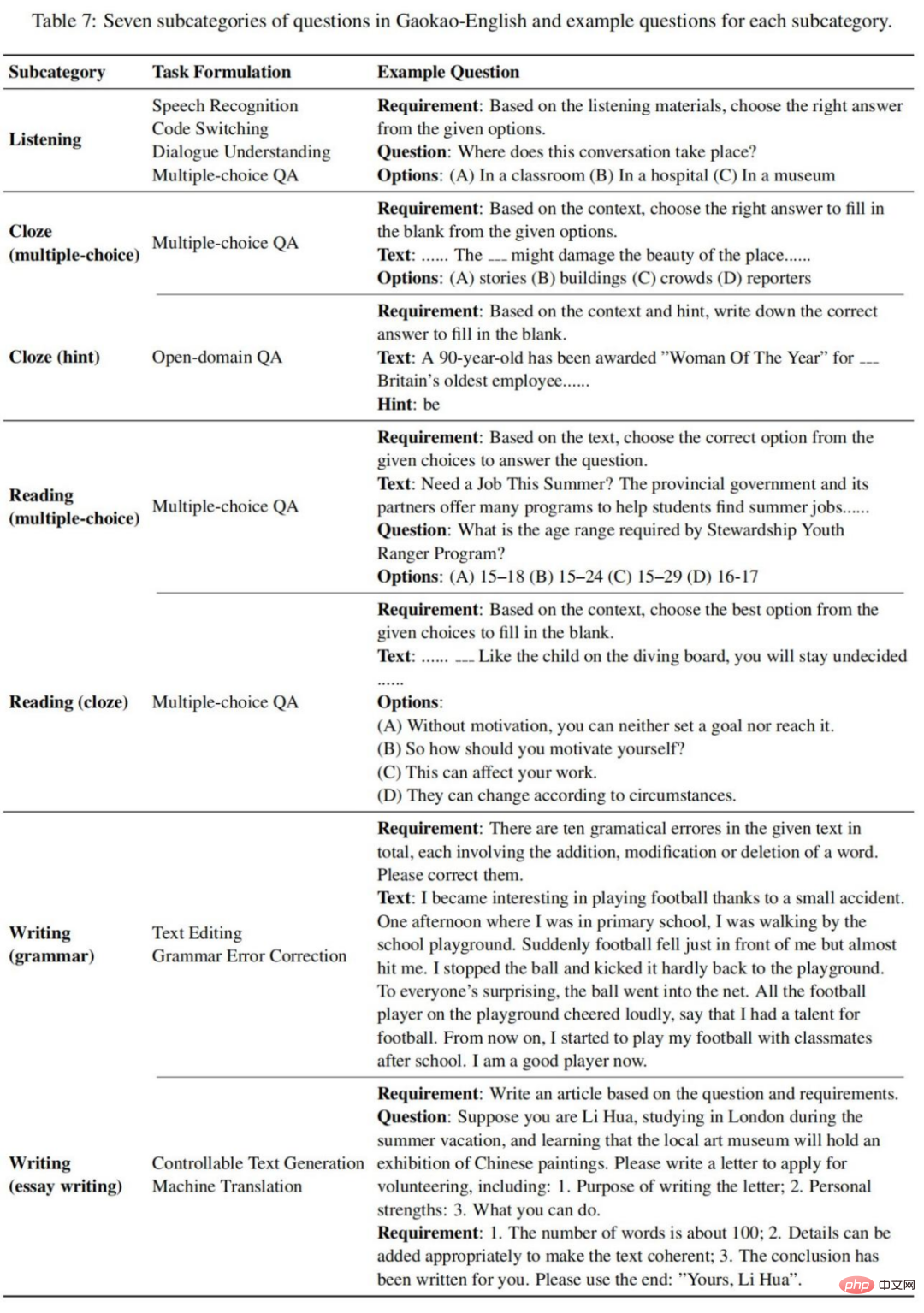

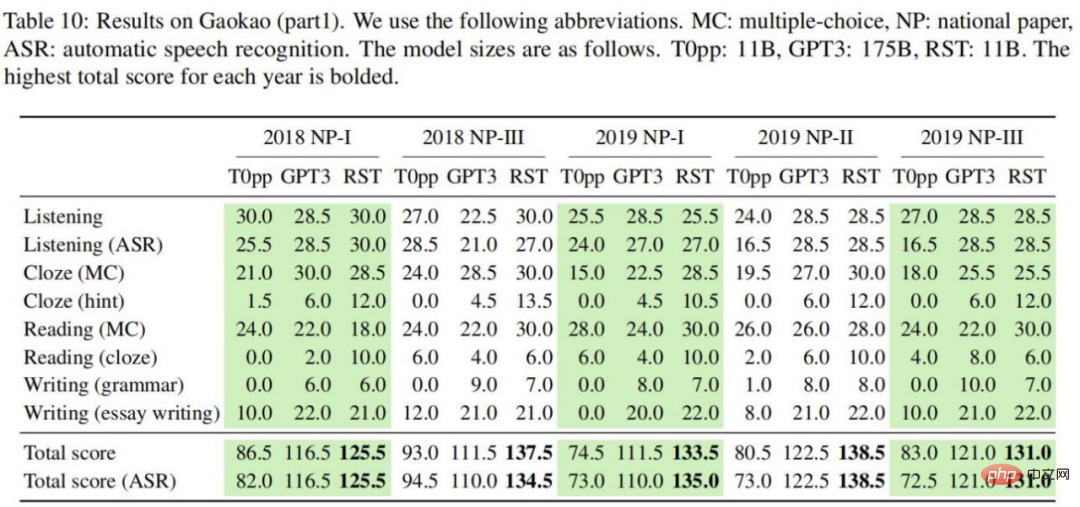

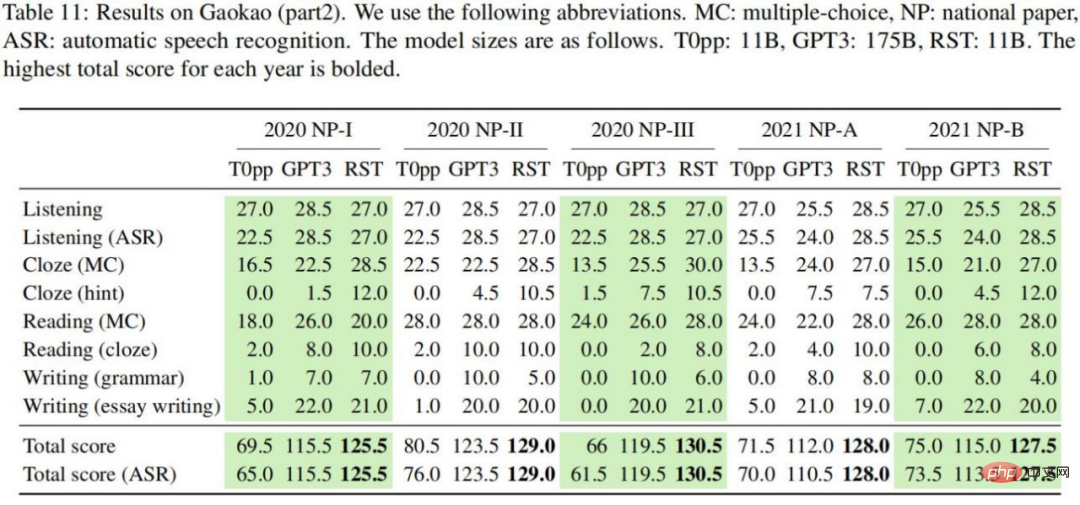

The study collected 10 college entrance examination English test papers: the 2018 National Papers I/III, the 2019 National Papers I/II/III, the 2020 National Papers I/II/III, and the 2021 National Papers A/B. These papers share the same question types, which the study divides into the following seven subcategories, as shown in Table 7:

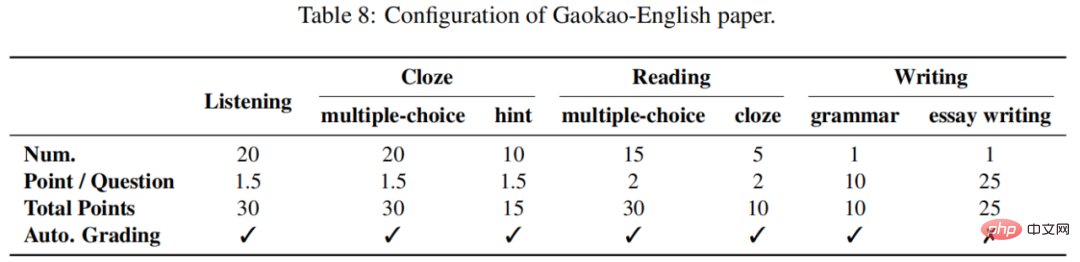

The full score of each college entrance examination English paper is 150 points. Listening, cloze, reading, and writing account for 30, 45, 40, and 35 points respectively. Typically, the writing section is subjective and requires human assessment, while the other sections are objective and can be scored automatically. As shown in Table 8:

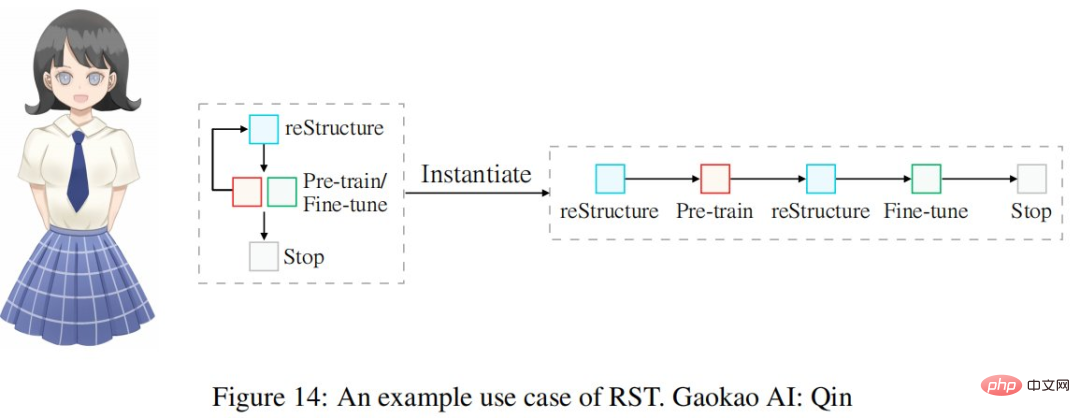
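The scoring scheme described above can be sketched as follows. Only the section maxima (30, 45, 40, 35) come from the text; the `total_score` helper and the example scores are made up for illustration.

```python
# Section maxima as stated in the text; together they make up one paper.
MAX_POINTS = {"listening": 30, "cloze": 45, "reading": 40, "writing": 35}
assert sum(MAX_POINTS.values()) == 150

# Objective sections that can be scored automatically.
OBJECTIVE = ("listening", "cloze", "reading")


def total_score(auto_scores: dict, writing_score: float) -> float:
    """Combine automatic section scores with a human-assigned writing score."""
    if set(auto_scores) != set(OBJECTIVE):
        raise ValueError("auto_scores must cover exactly the objective sections")
    scores = {**auto_scores, "writing": writing_score}
    for section, value in scores.items():
        if not 0 <= value <= MAX_POINTS[section]:
            raise ValueError(f"{section} score {value} is out of range")
    return sum(scores.values())


# Made-up scores, for illustration only.
example = total_score({"listening": 30, "cloze": 40.5, "reading": 38}, 28)
print(example)
```

Separating the objective sections from writing mirrors the split in the text: 115 of the 150 points can be checked automatically, and the remaining 35 depend on a human grader.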

The study uses the restructuring engineering cycle shown in Table 1 to build the college entrance examination English AI system, Qin. The whole process is shown in Figure 14:

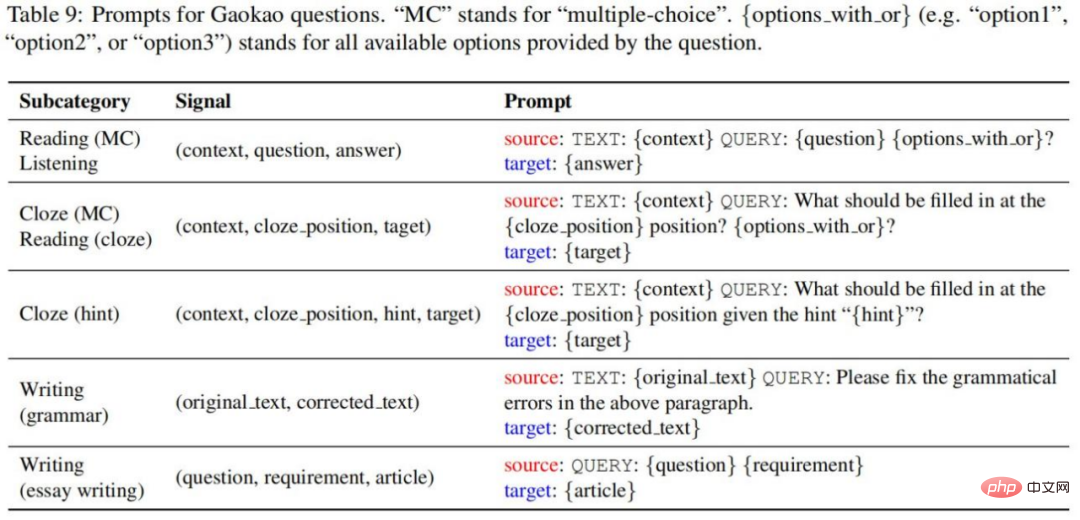

The study uses the following prompts to convert the original signal tuples into prompted samples, as shown in Table 9:

The experimental results are shown in Tables 10-11, from which the following conclusions can be drawn:

- On every English test paper, RST achieves the highest total score under both listening settings, with an average score of 130.6 points.

- Compared with T0pp, RST performs far better at the same model size. Across all settings, the total score obtained by RST is on average 54.5 points higher than that of T0pp, with a maximum gap of 69 points (46% of the total score).

- Compared with GPT3, RST achieves significantly better results with a model 16 times smaller. Across all settings considered, the total score obtained by RST is on average 14.0 points higher than that of GPT3, with a maximum gap of 26 points (17% of the total score).

- For T0pp, the listening scores obtained with gold transcripts and with speech-to-text transcripts differ noticeably, by 4.2 points on average. For GPT3 and RST the differences are 0.6 and 0.45 points respectively, indicating that T0pp's performance is sensitive to text quality.
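The percentages quoted above follow directly from the 150-point full score. A quick check, where `share_of_full_score` is a helper name introduced only for this example:

```python
FULL_SCORE = 150  # full marks for one paper, as stated in the text


def share_of_full_score(points: float) -> float:
    """Express a score gap as a percentage of the full paper score."""
    return 100 * points / FULL_SCORE


# The two largest gaps quoted in the text: 69 points and 26 points.
for gap in (69, 26):
    print(f"{gap} points = {share_of_full_score(gap):.0f}% of {FULL_SCORE}")
```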

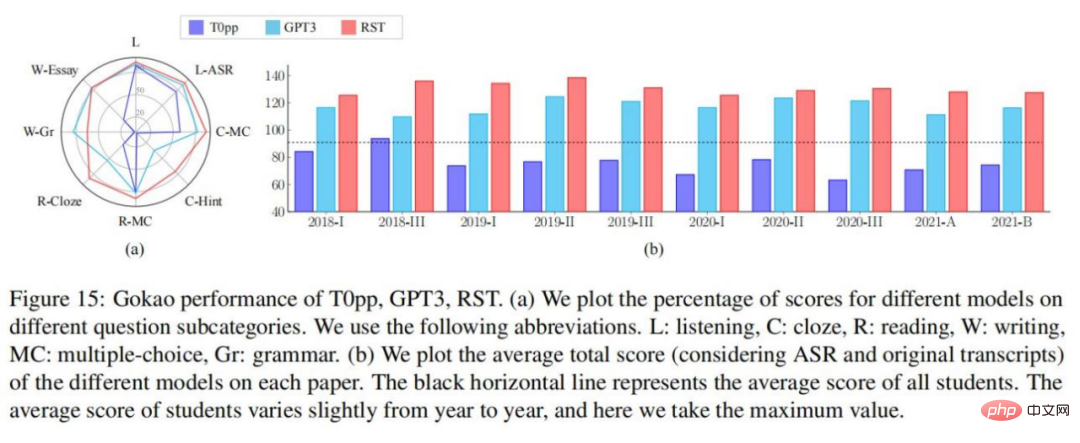

The study conducted a fine-grained analysis to understand the performance of different models on different problem subcategories. In Figure 15-(a), it is clear that RST and GPT3 outperform T0pp on every problem subcategory.

Figure 15-(b) shows the performance of the models alongside the average performance of students on national test papers in recent years. T0pp's total score is below the student average on 9 of the 10 papers, while RST and GPT3 exceed the student average. In particular, on five of the ten papers RST's total score is above 130 (often considered the target score for students to aim for).

The 2022 College Entrance Examination English test (2022.06.08) had just ended at the time of writing, which made it possible to see how the models perform on the most recent year's paper. The study ran experiments with GPT3 and RST. The results show that RST's total score reaches 134, far higher than the 108 achieved by GPT3.
