
Google uses artificial intelligence to highlight flaws in human cognition

- WBOY

- 2023-04-09 10:11:06

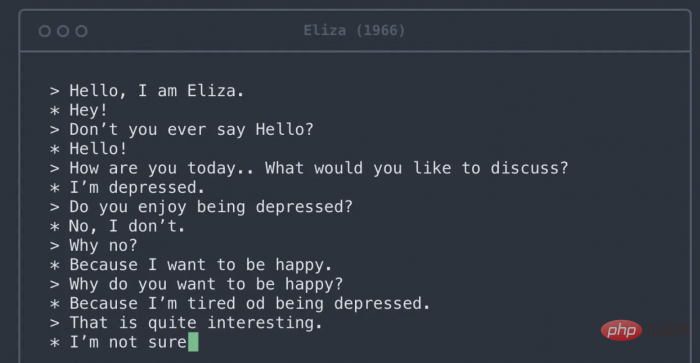

Today, some sentences that read as entirely human are actually generated by artificial intelligence systems trained on vast amounts of human-written text. People are so accustomed to assuming that fluent language comes from a thinking, feeling human that evidence to the contrary can be hard to grasp. They are also inclined to conclude that if an AI model can express itself fluently, it must think and feel like a human as well.

So it is perhaps not surprising that a former Google engineer recently claimed that Google's artificial intelligence system LaMDA is self-aware because it can eloquently generate text about its purported feelings. The incident and the media coverage that followed prompted a number of articles and posts casting doubt on the claim that computational models of human language are sentient.

Text generated by models such as Google's LaMDA can be difficult to distinguish from text written by humans. This impressive achievement is the result of a decades-long effort to build models that generate grammatical, meaningful language. Today's models, which are sets of data and rules that approximate human language, differ from those early attempts in several important ways. First, they are trained on essentially the entire Internet. Second, they can learn relationships between words that are far apart, not just between adjacent words. Third, they are tuned by so many internal parameters that even the engineers who design them struggle to explain why a model produces one sequence of words rather than another.

Large AI language models can carry on fluent conversations. However, they have no overall message to convey, so their phrasing tends to follow common literary tropes drawn from the texts they were trained on. The human brain, by contrast, is hardwired to infer the intentions behind words. Every time you engage in a conversation, your brain automatically constructs a mental model of your conversation partner and then uses what they say to fill in that model with the person's goals, feelings, and beliefs. The leap from words to mental model is seamless and is triggered every time you receive a complete sentence. This cognitive process saves a great deal of time and energy in daily life and greatly eases social interaction. With AI systems, however, it misfires, because it builds a mental model of a speaker out of thin air.

A sad irony is that the same cognitive biases that lead people to attribute humanity to large AI language models can also lead them to treat real humans inhumanely. Sociocultural linguistics research shows that assuming too strong a link between fluent expression and fluent thinking can produce bias against people who speak differently. For example, people with foreign accents are often perceived as less intelligent and are less likely to get jobs for which they are qualified. Similar biases exist against dialects that are not considered prestigious, such as Southern English in the United States; against deaf people who use sign language; and against people with speech disorders such as stuttering. These biases are deeply harmful, often feed racist and sexist assumptions, and have been shown time and again to be unfounded.
