Meta AI under LeCun bets on self-supervision

Is self-supervised learning really a key step towards AGI?

Even when Meta's chief AI scientist, Yann LeCun, talks about concrete steps to take right now, he keeps the long-term goal in sight. "We want to build intelligent machines that learn like animals and humans," he said in an interview.

In recent years, Meta has published a series of papers on self-supervised learning (SSL) for AI systems. LeCun firmly believes that SSL is a necessary prerequisite for such systems: it can help them build world models that give them human-like faculties such as reason, common sense, and the ability to transfer skills and knowledge from one context to another.

Their new work shows how a self-supervised system called a masked autoencoder (MAE) can learn to reconstruct images, video and even audio from very fragmentary, incomplete data. While MAEs are not a new idea, Meta has extended the work into new areas. LeCun said that by learning to predict missing data, whether in a still image or in a video or audio sequence, an MAE system is building a model of the world. "If it can predict what is about to happen in a video, it has to understand that the world is three-dimensional, that some objects are inanimate and don't move on their own, and that other objects are animate and harder to predict, all the way up to predicting the complex behavior of living beings," he said. Once an AI system has an accurate model of the world, it can use that model to plan actions.

LeCun said, “The essence of intelligence is learning to predict.” He did not claim that Meta's MAE system is close to artificial general intelligence, but he sees it as an important step toward it.

But not everyone agrees that Meta researchers are on the right path toward general artificial intelligence. Yoshua Bengio sometimes engages in friendly debates with LeCun about big ideas in AI. In an email to IEEE Spectrum, Bengio laid out some of the differences and similarities in their goals.

Bengio wrote: "I really don't think our current methods (whether self-supervised or not) are enough to bridge the gap between artificial and human intelligence levels." He said the field needs to make "qualitative progress" before it can truly push the technology closer to human-scale artificial intelligence.

Bengio agreed with LeCun's view that “the ability to reason about the world is the core element of intelligence.” However, rather than focusing on models that can predict, his team focuses on models that can present knowledge in the form of natural language. He noted that such models would let us combine pieces of knowledge to solve new problems, run counterfactual simulations, or explore possible futures. Bengio's team has developed a new neural network framework that is more modular than the end-to-end learning approach favored by LeCun.

The Hot Transformer

Meta's MAE is based on a neural network architecture called the Transformer. The architecture first became popular in natural language processing and has since spread to many other fields, including computer vision.

Of course, Meta is not the first team to use Transformers successfully for vision tasks. Ross Girshick, a researcher at Meta AI, said that Google's work on the Vision Transformer (ViT) inspired the Meta team: "Adopting the ViT architecture helped us get past some of the obstacles we ran into during our experiments."

Girshick is a co-author of Meta's first MAE paper, along with Kaiming He. The paper describes a very simple method: mask random patches of the input image and reconstruct the missing pixels.
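A minimal sketch of that masking step, assuming NumPy, a 224×224 RGB input and 16×16 patches (the sizes and the function names `patchify` and `random_patch_mask` are illustrative choices, not taken from Meta's code). It cuts the image into non-overlapping patches and hides a uniformly random subset of them; the hidden patches become the reconstruction targets.

```python
import numpy as np


def patchify(image: np.ndarray, patch_size: int) -> np.ndarray:
    """Split an (H, W, C) image into flattened, non-overlapping patches.

    Returns an array of shape (num_patches, patch_size * patch_size * C),
    ordered row by row across the patch grid.
    """
    h, w, c = image.shape
    if h % patch_size or w % patch_size:
        raise ValueError("image height and width must be multiples of patch_size")
    gh, gw = h // patch_size, w // patch_size
    # (gh, p, gw, p, c) -> (gh, gw, p, p, c) -> (gh * gw, p * p * c)
    grid = image.reshape(gh, patch_size, gw, patch_size, c).transpose(0, 2, 1, 3, 4)
    return grid.reshape(gh * gw, patch_size * patch_size * c)


def random_patch_mask(num_patches: int, mask_ratio: float, rng: np.random.Generator):
    """Choose a uniformly random subset of patches to hide.

    Returns (visible_idx, masked_idx), both sorted, which together cover every patch.
    """
    num_masked = int(round(num_patches * mask_ratio))
    perm = rng.permutation(num_patches)
    return np.sort(perm[num_masked:]), np.sort(perm[:num_masked])


rng = np.random.default_rng(0)
image = rng.random((224, 224, 3))           # stand-in for a real photo
patches = patchify(image, patch_size=16)     # 196 patches of 768 values each
visible_idx, masked_idx = random_patch_mask(len(patches), mask_ratio=0.75, rng=rng)
print(patches.shape, len(visible_idx), len(masked_idx))  # (196, 768) 49 147
```

Masking whole patches rather than scattered pixels matters: a lone missing pixel can be recovered almost perfectly from its immediate neighbors, while a missing 16×16 block cannot.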

The model is trained much like BERT and other Transformer-based language models. Researchers show those models huge text databases in which some words are missing, or "masked." The model has to predict the missing words on its own; the masked words are then revealed so the model can check its work and update its parameters. This process repeats over and over. To do something similar with images, Girshick explained, the team broke each image into patches, masked some of them, and asked the MAE system to predict the missing parts of the image.
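To make the language-model analogy concrete, here is a simplified sketch of masked-word training data in plain Python. It hides 15% of the tokens behind a placeholder and keeps the originals as an answer key for checking the model's guesses. Real BERT pre-training is more involved (of the selected positions, most become `[MASK]` but some are swapped for random tokens or left unchanged), and the names `mask_tokens` and `accuracy` are hypothetical.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, mask_ratio=0.15, seed=0):
    """Hide a random subset of tokens.

    Returns the masked sequence plus a {position: original_token} map that
    plays the role of the answer key revealed after the model guesses.
    """
    rng = random.Random(seed)
    num_masked = max(1, round(len(tokens) * mask_ratio))
    positions = sorted(rng.sample(range(len(tokens)), num_masked))
    masked = list(tokens)
    targets = {}
    for i in positions:
        targets[i] = masked[i]
        masked[i] = MASK
    return masked, targets


def accuracy(predictions, targets):
    """Reveal the hidden words and score the model's guesses for them only."""
    correct = sum(predictions.get(i) == word for i, word in targets.items())
    return correct / len(targets)


sentence = ("the cat sat on the mat while the dog slept by "
            "the warm fire in the quiet old house").split()
masked, targets = mask_tokens(sentence)
print(" ".join(masked))
# A model that guessed every hidden word correctly would score 1.0:
print(accuracy(dict(targets), targets))  # 1.0
```

Only the masked positions are scored, which mirrors how the training signal comes solely from the words the model could not see.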


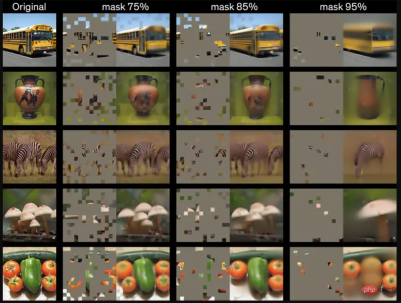

One of the team's breakthroughs was realizing that masking most of the image gave the best results, a key difference from language Transformers, which might mask only 15% of the words. "Language is an extremely dense and efficient system of communication, and every symbol carries a lot of meaning," Girshick said. "But images, these signals from the natural world, are not built to eliminate redundancy. That's why we can compress content so well when we create JPG images."
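A back-of-the-envelope calculation suggests why a language-style masking ratio leaves images too easy. Assume, as a simplification, that each patch is hidden independently with probability $r$, and consider an interior patch with four neighbors. The chance that a masked patch also has all four neighbors masked, so that it cannot simply be filled in from adjacent visible pixels, is roughly $r^4$:

$$
P(\text{all 4 neighbors masked}) \approx r^4, \qquad
r = 0.15:\; 0.15^4 \approx 0.0005, \qquad
r = 0.75:\; 0.75^4 \approx 0.32.
$$

Under this rough model, at 15% almost every hidden patch still has a visible neighbor to interpolate from, whereas at 75% about a third of hidden patches are surrounded by other hidden patches, so the model has to infer content rather than copy it.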

Researchers at Meta AI experimented with how much of the image needed to be masked to get the best results.

Girshick explained that by masking more than 75% of the patches in an image, they eliminated the redundancy that would otherwise make the task too trivial to be useful for training. Their two-part MAE system first uses an encoder to learn relationships between pixels across a training dataset; a decoder then does its best to reconstruct the original image from the masked version. Once this training scheme is complete, the encoder can be fine-tuned for vision tasks such as classification and object detection.
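The encoder/decoder split can be sketched as a single forward pass. This is a toy version under loudly stated assumptions: NumPy, one random linear layer standing in for each of the Transformer encoder and decoder, no positional embeddings, and hypothetical names such as `mae_forward`. What it keeps from the MAE design is the data flow: only visible patches go through the encoder, a shared mask token fills the hidden slots before decoding, and the reconstruction error is measured only on the masked patches.

```python
import numpy as np


def mae_forward(patches, mask_ratio, enc_w, dec_w, mask_token, rng):
    """Toy masked-autoencoder forward pass (linear stand-ins, not Transformers).

    patches:    (N, P) flattened image patches
    enc_w:      (P, D) stand-in "encoder" weights
    dec_w:      (D, P) stand-in "decoder" weights
    mask_token: (D,)   shared placeholder for every hidden patch
    Returns (reconstruction, loss, number_of_patches_encoded).
    """
    n = patches.shape[0]
    num_masked = int(round(n * mask_ratio))
    perm = rng.permutation(n)
    masked_idx, visible_idx = perm[:num_masked], perm[num_masked:]

    # Encoder: sees only the visible patches, which is where MAE saves compute.
    latent = np.tanh(patches[visible_idx] @ enc_w)

    # Decoder input: visible latents back in their original slots,
    # the shared mask token everywhere else.
    tokens = np.tile(mask_token, (n, 1))
    tokens[visible_idx] = latent
    recon = tokens @ dec_w

    # Loss: mean squared error on the masked patches only.
    loss = float(np.mean((recon[masked_idx] - patches[masked_idx]) ** 2))
    return recon, loss, len(visible_idx)


rng = np.random.default_rng(0)
patches = rng.random((196, 768))                  # 14 x 14 grid of 16x16x3 patches
enc_w = rng.normal(scale=0.02, size=(768, 64))
dec_w = rng.normal(scale=0.02, size=(64, 768))
mask_token = np.zeros(64)
recon, loss, encoded = mae_forward(patches, 0.75, enc_w, dec_w, mask_token, rng)
print(recon.shape, encoded)  # (196, 768) 49
```

A training step would backpropagate this loss into both sets of weights; that part is omitted here. After pre-training, the decoder is no longer needed and the encoder is what gets fine-tuned for downstream tasks.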

Girshick said, "What's ultimately exciting for us is the results we see from this model on downstream tasks." When the encoder is used for tasks such as object recognition, "the gains we see are very substantial." He pointed out that scaling up the model keeps improving performance, which is a promising direction for future models, because SSL "has the potential to use large amounts of data without manual annotation."

Going all out to learn from massive, unfiltered data sets may be Meta's strategy for improving SSL results, but it's also an increasingly controversial approach. AI ethics researchers like Timnit Gebru have called attention to the biases inherent in the uncurated data sets that large language models learn from, which can sometimes lead to disastrous results.

Self-supervised learning for video and audio

In the video MAE system, the masker hides 95% of each video frame, because the similarity between frames means video signals carry even more redundancy than still images. Meta researcher Christoph Feichtenhofer said that one big advantage of the MAE approach for video is efficiency: video is often computationally expensive to process, and by masking out up to 95% of each frame's content, MAE cuts computational costs by up to 95%. The clips used in these experiments were only a few seconds long, but Feichtenhofer said training AI systems on longer videos is a very active research topic. Imagine a virtual assistant that has video of your home and can tell you where you left your keys an hour ago.
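The compute argument can be checked with rough arithmetic. The sketch below assumes a 16-frame, 224×224 clip cut into 2×16×16 space-time patches (illustrative sizes, not figures from the article) and counts how many tokens the encoder actually processes at a 95% masking ratio. As a simplification, per-token work such as the feed-forward layers is taken to scale with the number of visible tokens and self-attention with its square; the decoder, which still sees every position, is left out of this estimate. The function names are hypothetical.

```python
def video_tokens(frames, height, width, patch=16, tubelet=2):
    """Number of space-time patches for a clip cut into tubelet x patch x patch cubes."""
    return (frames // tubelet) * (height // patch) * (width // patch)


def encoder_cost_fraction(num_tokens, mask_ratio):
    """Rough fraction of full-clip encoder cost when only visible tokens are encoded.

    Returns (visible_tokens, per_token_fraction, attention_fraction).
    """
    visible = num_tokens - round(num_tokens * mask_ratio)
    per_token = visible / num_tokens          # linear layers: cost ~ tokens
    attention = per_token ** 2                # self-attention: cost ~ tokens^2
    return visible, per_token, attention


n = video_tokens(frames=16, height=224, width=224)
visible, per_token, attention = encoder_cost_fraction(n, mask_ratio=0.95)
print(n, visible)  # 1568 78
print(f"per-token work: {per_token:.1%}, attention: {attention:.2%}")
```

Under these assumptions the encoder touches only 78 of 1,568 tokens, which is where the large savings come from; the real end-to-end figure also depends on the decoder and the rest of the training pipeline.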

More immediately, both the image and video systems could be useful for the classification tasks required for content moderation on Facebook and Instagram. Feichtenhofer said "integrity" is one possible application: "We are talking to the product teams, but this is very new and we don't have any concrete projects yet."

For the audio MAE work, Meta AI's team said it will post the results on arXiv soon. They found a clever way to apply the masking technique: they converted sound files into spectrograms, which are visual representations of the spectrum of frequencies in a signal, and then masked parts of those images for training. The reconstructed audio is impressive, although the model can currently handle only clips a few seconds long. Bernie Huang, a researcher on the audio system, said potential applications include classification tasks, helping voice over IP (VoIP) calls by filling in audio lost when packets are dropped, and finding more efficient ways to compress audio files.
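The spectrogram trick can be sketched the same way: turn a waveform into a time-frequency image, then hide random rectangular patches of it. This assumes NumPy, a synthetic one-second 440 Hz tone sampled at 16 kHz, a plain log-magnitude short-time Fourier transform (rather than a mel-scale spectrogram), 16×16 patches and an 80% masking ratio; all of these settings and the function names are illustrative assumptions, since the article gives none of them.

```python
import numpy as np


def log_spectrogram(signal, n_fft=256, hop=128):
    """Log-magnitude STFT: rows are frequency bins, columns are time frames."""
    window = np.hanning(n_fft)
    starts = range(0, len(signal) - n_fft + 1, hop)
    frames = np.stack([signal[s:s + n_fft] * window for s in starts])
    return np.log1p(np.abs(np.fft.rfft(frames, axis=1))).T


def mask_spectrogram(spec, patch=16, mask_ratio=0.8, seed=0):
    """Zero out a random subset of patch x patch blocks.

    The spectrogram is cropped to a whole number of patches first.
    Returns (masked_spectrogram, patch_level_boolean_mask).
    """
    rng = np.random.default_rng(seed)
    f = spec.shape[0] // patch * patch
    t = spec.shape[1] // patch * patch
    out = spec[:f, :t].copy()
    grid = np.zeros((f // patch, t // patch), dtype=bool)
    n = grid.size
    grid.flat[rng.permutation(n)[: int(round(n * mask_ratio))]] = True
    # Expand each patch-level flag to cover its patch x patch block of bins.
    pixel_mask = np.repeat(np.repeat(grid, patch, axis=0), patch, axis=1)
    out[pixel_mask] = 0.0
    return out, grid


sr = 16_000
times = np.arange(sr) / sr
tone = np.sin(2 * np.pi * 440 * times)
spec = log_spectrogram(tone)
masked_spec, grid = mask_spectrogram(spec)
print(spec.shape, masked_spec.shape, int(grid.sum()))  # (129, 124) (128, 112) 45
```

A model trained on such inputs would learn to predict the zeroed blocks. Turning a reconstructed log-magnitude spectrogram back into sound needs an extra phase-recovery step, which this sketch does not cover.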

Meta has been open-sourcing its AI research, such as these MAE models, and has also made a pretrained large language model available to the AI community. But critics point out that, for all this openness about research, Meta has not opened up its core business algorithms for study: the ones that control news feeds, recommendations, and ad placement.
