Joint perception network for depth, pose and road layout estimation in driving scenes

The arXiv paper "JPerceiver: Joint Perception Network for Depth, Pose and Layout Estimation in Driving Scenes", uploaded in July 2022, reports work by Professor Tao Dacheng of the University of Sydney, Australia, and the Beijing JD Research Institute.

Depth estimation, visual odometry (VO) and bird's eye view (BEV) scene layout estimation are three key tasks for driving scene perception, which is the foundation of motion planning and navigation in autonomous driving. Although the three tasks are complementary, existing methods usually address them separately and rarely handle all three simultaneously.

A simple approach is to run the three tasks independently, either sequentially or in parallel, but this has three disadvantages: 1) depth and VO results suffer from the inherent scale ambiguity of monocular estimation; 2) the BEV layouts of roads and vehicles are usually estimated independently, ignoring the explicit overlay-underlay relationship between them; 3) although depth maps are useful geometric cues for inferring scene layout, the BEV layout is actually predicted directly from the front-view image without using any depth-related information.

The paper proposes a joint perception framework, JPerceiver, to solve these problems and simultaneously estimate scale-aware depth, VO and BEV layout from monocular video sequences. A cross-view geometric transformation (CGT) propagates absolute scale from the road layout to depth and VO through a carefully designed scale loss. In addition, a cross-view and cross-modal transfer (CCT) module uses depth cues to reason about road and vehicle layout through attention mechanisms. JPerceiver is trained end to end in a multi-task learning fashion, in which the CGT scale loss and the CCT module promote knowledge transfer between tasks and facilitate feature learning for each task.

Code and models can be downloaded from https://github.com/sunnyHelen/JPerceiver.

As shown in the figure, JPerceiver consists of three networks for depth, pose and road layout, all based on the encoder-decoder architecture. The depth network predicts the depth map D_t of the current frame I_t, where each depth value represents the distance between a 3D point and the camera. The pose network predicts the pose transformation T_{t→t+m} between the current frame I_t and its adjacent frame I_{t+m}. The road layout network estimates the BEV layout L_t of the current frame, that is, the semantic occupancy of roads and vehicles in the top-view Cartesian plane. The three networks are jointly optimized during training.

The two networks predicting depth and pose are jointly optimized in a self-supervised manner with a photometric loss and a smoothness loss. In addition, the CGT scale loss is designed to resolve the scale ambiguity of monocular depth and VO estimation.
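The article does not give the exact form of the two self-supervised terms. As a rough illustration only, the sketch below uses a plain L1 photometric error and the edge-aware smoothness term common in self-supervised monocular depth work; both choices, the function names and the array layouts (`H x W x 3` images, `H x W` disparity) are assumptions, not the authors' implementation. The warping of the adjacent frame into the current view, which needs the predicted depth and pose, is assumed to have happened already.

```python
import numpy as np

def photometric_l1(target, warped):
    """Mean absolute difference between the current frame and an adjacent frame warped into it."""
    return float(np.mean(np.abs(target - warped)))

def edge_aware_smoothness(disp, image):
    """Penalize disparity gradients, down-weighted where the image itself has strong edges."""
    # Normalize by the mean so the penalty does not depend on the overall disparity scale.
    disp = disp / (disp.mean() + 1e-7)
    d_dx = np.abs(disp[:, 1:] - disp[:, :-1])
    d_dy = np.abs(disp[1:, :] - disp[:-1, :])
    # Image gradients averaged over the colour channels.
    i_dx = np.mean(np.abs(image[:, 1:] - image[:, :-1]), axis=-1)
    i_dy = np.mean(np.abs(image[1:, :] - image[:-1, :]), axis=-1)
    return float(np.mean(d_dx * np.exp(-i_dx)) + np.mean(d_dy * np.exp(-i_dy)))
```

Neither term carries metric information: scaling the depth and the translation by the same factor leaves the warped image unchanged, which is why an additional scale constraint is needed.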


To achieve scale-aware environment perception, the CGT scale loss for depth estimation and VO exploits the scale information contained in the BEV layout. Since the BEV layout shows the semantic occupancy in the BEV Cartesian plane, covering Z meters in front of the ego vehicle and Z/2 meters to the left and right, it provides a natural distance field z that gives the metric distance z_ij of each pixel relative to the ego vehicle, as shown in the figure.
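The distance field can be illustrated with a few lines of NumPy. This is a minimal sketch under stated assumptions: the grid is square, row 0 is the farthest row from the ego vehicle, and "distance" is taken to be the forward (longitudinal) distance of each cell centre, which is the quantity comparable to camera depth. The function name `bev_distance_field` is hypothetical.

```python
import numpy as np

def bev_distance_field(Z, size):
    """Forward distance in meters of each cell centre in a size x size BEV grid.

    The grid covers Z meters ahead of the ego vehicle and Z/2 meters to each side.
    Assumption: row 0 is the farthest row, the last row is nearest the vehicle.
    """
    cell = Z / size  # metric size of one BEV cell
    forward = (size - np.arange(size) - 0.5) * cell      # one value per row
    lateral = (np.arange(size) + 0.5) * cell - Z / 2.0   # one value per column
    z = np.repeat(forward[:, None], size, axis=1)         # broadcast across columns
    return z, lateral

z, lateral = bev_distance_field(Z=40.0, size=4)
```

With `Z=40` and a 4 x 4 grid, each cell spans 10 m, so the rows sit at 35, 25, 15 and 5 m ahead of the vehicle.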

Assume that the BEV plane is the ground plane and that its origin lies directly below the origin of the ego-vehicle coordinate system. Given the camera extrinsic parameters, the BEV plane can be projected into the forward camera through a homography. The BEV distance field z can therefore be projected into the forward camera view, as shown in the figure above, and used to regularize the predicted depth d, which yields the CGT scale loss.
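The formula for the scale loss is not reproduced in this article, so the following is only a sketch of the idea. It assumes a pinhole camera at a known height above a flat ground plane with zero pitch and roll, in which case the ground-plane projection reduces to `z = fy * cam_height / (v - cy)` for image rows below the principal point. The loss is assumed to be a mean relative L1 error over pixels that fall inside the BEV range. All function and parameter names are hypothetical.

```python
import numpy as np

def ground_distance_in_front_view(height, width, fx, fy, cx, cy, cam_height, Z):
    """Project the ground-plane distance field into the front view (0 marks invalid pixels)."""
    v, u = np.mgrid[0:height, 0:width].astype(np.float64)
    below = v > cy  # only rows below the horizon can see the ground
    z = np.zeros((height, width))
    z[below] = fy * cam_height / (v[below] - cy)
    x = (u - cx) * z / fx  # lateral offset of the ground point in meters
    # Keep only ground points inside the BEV range: Z ahead, Z/2 to each side.
    valid = below & (z <= Z) & (np.abs(x) <= Z / 2.0)
    return np.where(valid, z, 0.0)

def cgt_scale_loss(pred_depth, z_fv):
    """Assumed form: mean relative L1 error between predicted depth and projected distance."""
    valid = z_fv > 0
    if not valid.any():
        return 0.0
    return float(np.mean(np.abs(pred_depth[valid] - z_fv[valid]) / z_fv[valid]))

z_fv = ground_distance_in_front_view(8, 8, fx=4.0, fy=4.0, cx=3.5, cy=3.5,
                                     cam_height=1.5, Z=40.0)
loss_wrong_scale = cgt_scale_loss(2.0 * z_fv, z_fv)  # depth off by a factor of two
```

A depth map that is correct up to a global factor of two gives a loss of 1 here, so the term penalizes exactly the ambiguity that the photometric loss cannot see. Note that this sketch constrains every in-range pixel below the horizon, whether or not it is actually road.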


For road layout estimation, an encoder-decoder network structure is adopted. Notably, a shared encoder serves as the feature extractor, while separate decoders learn the BEV layouts of the different semantic categories simultaneously. In addition, a CCT module is designed to enhance feature interaction and knowledge transfer between tasks and to provide 3D geometric information for spatial reasoning in BEV. To regularize the road layout network, several loss terms are combined into a hybrid loss that achieves balanced optimization across classes.


CCT models the correlations among the forward-view feature F_f, the BEV layout feature F_b, the re-converted forward-view feature F_f′ and the forward-view depth feature F_d, and refines the layout features accordingly, as shown in the figure. It is divided into two parts: the cross-view module CCT-CV and the cross-modal module CCT-CM.

In CCT, F_f and F_d are extracted by the encoders of the corresponding perception branches, while F_b is obtained by converting F_f to BEV with a view projection MLP, and an MLP of the same structure, constrained by a cycle loss, converts it back to F_f′.
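The view projection and its cycle constraint can be sketched as follows. This is an illustration under assumptions, not the released code: the MLP is taken to be a two-layer perceptron applied along the flattened spatial dimension of each channel, the weights are random rather than trained, and the cycle loss is assumed to be an L1 distance between F_f and F_f′. The names `make_mlp`, `apply_mlp` and `cycle_loss` are hypothetical.

```python
import numpy as np

def make_mlp(n, hidden, rng):
    """Random (untrained) weights for a two-layer MLP over n spatial positions."""
    return {"w1": rng.normal(0.0, 0.1, (n, hidden)),
            "w2": rng.normal(0.0, 0.1, (hidden, n))}

def apply_mlp(feat, params):
    """Remap the spatial layout of a (C, H, W) feature map, channel by channel."""
    c, h, w = feat.shape
    flat = feat.reshape(c, h * w)
    out = np.maximum(flat @ params["w1"], 0.0) @ params["w2"]  # ReLU between layers
    return out.reshape(c, h, w)

def cycle_loss(f_f, f_f_back):
    """Assumed L1 cycle loss between the original and re-converted forward-view features."""
    return float(np.mean(np.abs(f_f - f_f_back)))

rng = np.random.default_rng(0)
F_f = rng.normal(size=(8, 4, 4))           # forward-view feature
to_bev = make_mlp(16, 32, rng)             # forward view -> BEV
to_front = make_mlp(16, 32, rng)           # BEV -> forward view, same structure
F_b = apply_mlp(F_f, to_bev)               # BEV layout feature
F_f_back = apply_mlp(F_b, to_front)        # re-converted feature F_f'
loss = cycle_loss(F_f, F_f_back)
```

Minimizing the cycle loss encourages F_b to retain enough of the forward-view content that it can be mapped back, which keeps the BEV feature from discarding information during the view change.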

In CCT-CV, a cross-attention mechanism is used to discover the geometric correspondence between forward-view and BEV features, which then guides the refinement of the forward-view information and prepares it for BEV inference. To make full use of the forward-view image features, F_b and F_f are projected to patches Q_bi and K_bi, which serve as query and key respectively.

In addition to exploiting forward-view features, CCT-CM is deployed to inject 3D geometric information from F_d. Since F_d is extracted from the forward-view image, it is reasonable to use F_f as a bridge to reduce the cross-modal gap and learn the correspondence between F_d and F_b. F_d plays the role of the value, so that 3D geometric information relevant to the BEV is obtained and the accuracy of road layout estimation improves further.
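The query, key and value roles described above map onto standard scaled dot-product cross-attention. The sketch below shows the cross-modal case, with F_b as query, F_f as key and F_d as value. It is a simplified illustration: each spatial position is treated as one token, the learned projection layers and any residual connection are omitted, and the function names are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attention(query_feat, key_feat, value_feat):
    """Scaled dot-product cross-attention over (C, H, W) feature maps."""
    c, h, w = query_feat.shape
    q = query_feat.reshape(c, -1).T   # (N, C), one token per spatial position
    k = key_feat.reshape(c, -1).T
    v = value_feat.reshape(c, -1).T
    attn = softmax(q @ k.T / np.sqrt(c))  # (N, N): BEV position -> forward-view position
    out = attn @ v                         # gather depth features for each BEV position
    return out.T.reshape(c, h, w), attn

rng = np.random.default_rng(0)
F_b, F_f, F_d = (rng.normal(size=(8, 4, 4)) for _ in range(3))
refined, attn = cross_attention(F_b, F_f, F_d)
```

Each row of `attn` says which forward-view positions a BEV position attends to. Because F_f and F_d share the same image grid, those weights can be applied directly to the depth features, which is the sense in which F_f acts as a bridge between F_d and F_b.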

When exploring a joint learning framework that predicts different layouts simultaneously, the semantic categories turn out to differ considerably in both characteristics and distribution. In terms of characteristics, the road layout in driving scenes usually needs to be connected, whereas individual vehicle targets must be segmented from one another.

In terms of distribution, straight-road scenes are observed more often than turning scenes, which is to be expected in real datasets. These differences and imbalances make BEV layout learning harder, especially when different categories are predicted jointly, since a simple cross-entropy (CE) loss or L1 loss fails in this case. Several segmentation losses, including a distribution-based CE loss, a region-based IoU loss and a boundary loss, are therefore combined into a hybrid loss to predict the layout of each category.
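A hybrid loss of this kind can be sketched for a single binary layout map. The example combines a binary cross-entropy term with a soft IoU term. The boundary loss is left out because its exact form is not given here, and the equal weights are placeholders rather than values from the paper. Function names are hypothetical.

```python
import numpy as np

def bce_loss(prob, target, eps=1e-7):
    """Distribution-based term: binary cross-entropy on per-cell occupancy probabilities."""
    p = np.clip(prob, eps, 1.0 - eps)
    return float(-np.mean(target * np.log(p) + (1.0 - target) * np.log(1.0 - p)))

def soft_iou_loss(prob, target, eps=1e-7):
    """Region-based term: one minus the soft intersection-over-union."""
    inter = np.sum(prob * target)
    union = np.sum(prob + target - prob * target)
    return float(1.0 - (inter + eps) / (union + eps))

def hybrid_loss(prob, target, w_ce=1.0, w_iou=1.0):
    """Weighted sum of the two terms (boundary term omitted in this sketch)."""
    return w_ce * bce_loss(prob, target) + w_iou * soft_iou_loss(prob, target)

target = np.array([[1.0, 0.0], [0.0, 1.0]])
good = hybrid_loss(target, target)         # perfect prediction
bad = hybrid_loss(1.0 - target, target)    # every cell wrong
```

The IoU term is computed over the whole region rather than per cell, so a small class such as vehicles is not swamped by the many empty cells in the way it can be under cross-entropy alone.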

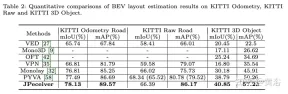

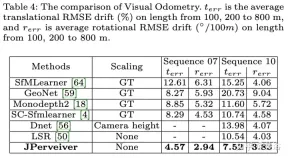

The experimental results are as follows:
