How can unsupervised machine learning benefit industrial automation?

Modern industrial environments are filled with sensors and smart components, and all of these devices together produce a wealth of data. This data, untapped in most factories today, powers a variety of exciting new applications. In fact, according to IBM, the average factory generates 1TB of production data every day. However, only about 1% of data is turned into actionable insights.

Machine learning (ML) is a fundamental technology designed to leverage this data and unlock massive amounts of value. Using training data, machine learning systems can build mathematical models that teach a system to perform specific tasks without explicit instructions.

ML uses algorithms that act on data to make decisions largely without human intervention. The most common form of machine learning in industrial automation is supervised machine learning, which uses large amounts of historical data labeled by humans to train models (i.e., the training of human-supervised algorithms).

This is useful for well-known problems such as bearing defects, lubrication failures, or product defects. Where supervised machine learning falls short is when enough historical data isn't available, labeling is too time-consuming or expensive, or users aren't sure exactly what they're looking for in the data. This is where unsupervised machine learning comes into play.

Unsupervised machine learning aims to operate on unlabeled data using algorithms that are good at recognizing patterns and pinpointing anomalies in the data. Properly applied unsupervised machine learning serves a variety of industrial automation use cases, from condition monitoring and performance testing to cybersecurity and asset management.

Supervised Learning vs. Unsupervised Learning

Supervised machine learning is easier to perform than unsupervised machine learning. With a properly trained model, it can provide very consistent, reliable results. Supervised machine learning may require large amounts of historical data - as is needed to include all relevant cases, i.e., in order to detect product defects, the data needs to contain a sufficient number of cases of defective products. Labeling these massive data sets can be time-consuming and expensive. Furthermore, training models is an art. It requires large amounts of data, properly organized, to produce good results.

Today, the process of benchmarking different ML algorithms is significantly simplified using tools like AutoML. At the same time, over-constraining the training process may result in a model that performs well on the training set but performs poorly on real data. Another key drawback is that supervised machine learning is not very effective at identifying unexpected trends in data or discovering new phenomena. For these types of applications, unsupervised machine learning can provide better results.

Common unsupervised machine learning techniques

Compared with supervised machine learning, unsupervised machine learning only operates on unlabeled inputs . It provides powerful tools for data exploration to discover unknown patterns and correlations without human help. The ability to operate on unlabeled data saves time and money and enables unsupervised machine learning to operate on the data as soon as the input is generated.

The downside is that unsupervised machine learning is more complex than supervised machine learning. It is more expensive, requires a higher level of expertise, and often requires more data. Its output tends to be less reliable than supervised ML, and ultimately requires human supervision for optimal results.

The three important forms of unsupervised machine learning techniques are clustering, anomaly detection and data dimensionality reduction.

Clustering

As the name suggests, clustering involves analyzing a data set to identify shared characteristics between the data and group similar instances together . Because clustering is an unsupervised ML technique, the algorithm (rather than a human) determines the ranking criteria. Therefore, clustering can lead to surprising discoveries and is an excellent data exploration tool.

To give a simple example: imagine three people being asked to sort fruits in a production department. One might sort by fruit type -- citrus, stone fruit, tropical fruit, etc.; another might sort by color; and a third might sort by shape. Each method highlights a different set of characteristics.

Clustering can be divided into many types. The most common are:

Mutually exclusive clustering (Exclusive Clustering): A data instance is exclusively assigned to a cluster.

Fuzzy or overlapping clustering (Fuzzy Clustering): A data instance can be assigned to multiple clusters. For example, oranges are both citrus and tropical fruits. In the case of unsupervised ML algorithms operating on unlabeled data, it is possible to assign a probability that a data block correctly belongs to group A versus group B.

Hierarchical clustering: This technique involves building a hierarchical structure of clustered data, rather than a set of clusters. Oranges are citrus fruits, but they are also included in the larger spherical fruit group and can be further absorbed by all fruit groups.

Let’s look at a set of the most popular clustering algorithms:

- K-mean

K-mean (K-means) algorithm classifies data into K clusters, where the value of K is preset by the user. At the beginning of the process, the algorithm randomly assigns K data points as centroids for K clusters. Next, it calculates the mean between each data point and the centroid of its cluster. This results in resorting the data to the cluster. At this point, the algorithm recalculates the centroid and repeats the mean calculation. It repeats the process of calculating centroids and reordering clusters until it reaches a constant solution (see Figure 1).

Figure 1: K-means algorithm divides the data set into K clusters, first randomly selecting K data points as centroids, and then randomly distribute the remaining instances across the cluster.

K-means algorithm is simple and efficient. It is very useful for pattern recognition and data mining. The downside is that it requires some advanced knowledge of the dataset to optimize the setup. It is also disproportionately affected by outliers.

- K-median

The K-median algorithm is a close relative of K-means . It uses essentially the same process, except instead of calculating the mean of each data point, it calculates the median. Therefore, the algorithm is less sensitive to outliers.

Here are some common use cases for cluster analysis:

- Clustering is very effective for use cases such as segmentation. This is often associated with customer analytics. It can also be applied to asset classes, not only to analyze product quality and performance, but also to identify usage patterns that may impact product performance and service life. This is helpful for OEM companies that manage "fleets" of assets, such as automated mobile robots in smart warehouses or drones for inspection and data collection.

- It can be used for image segmentation as part of image processing operations.

- Cluster analysis can also be used as a preprocessing step to help prepare data for supervised ML applications.

Anomaly Detection

Anomaly detection is critical for a variety of use cases from defect detection to condition monitoring to cybersecurity. This is a key task in unsupervised machine learning. There are several anomaly detection algorithms used in unsupervised machine learning, let’s take a look at the two most popular ones:

- Isolation Forest Algorithm

The standard method of anomaly detection is to establish a set of normal values and then analyze each piece of data to see whether and how much it deviates from the normal value. This is a very time-consuming process when working with massive data sets of the kind used in ML. The isolation forest algorithm takes the opposite approach. It defines outliers as being neither common nor very different from other instances in the data set. Therefore, they are more easily isolated from the rest of the dataset on other instances.

The isolation forest algorithm has minimal memory requirements and the time required is linearly related to the size of the data set. They can handle high-dimensional data even if it involves irrelevant attributes.

- Local Outlier Factor (LOF)

One of the challenges of identifying outliers only by their distance from the centroid Yes, data points that are a short distance from a small cluster may be outliers, while points that appear to be far away from a large cluster may not be. The LOF algorithm is designed to make this distinction.

LOF defines an outlier as a data point with a local density deviation that is much greater than its neighboring data points (see Figure 2). Although like K-means it does require some user setup ahead of time, it can be very effective. It can also be applied to novelty detection when used as a semi-supervised algorithm and trained on normal data only.

Figure 2: Local Outlier Factor (LOF) uses the local density deviation of each data point to calculate the anomaly score , thereby distinguishing normal data points from outliers.

The following are a few use cases for anomaly detection:

- Predictive Maintenance: Most industrial equipment is built to last with minimal downtime. Therefore, the historical data available is often limited. Because unsupervised ML can detect anomalous behavior even in limited data sets, it can potentially identify developmental deficiencies in these cases. Here, too, it can be used for fleet management, providing early warning of defects while minimizing the amount of data that needs to be reviewed.

- Quality Assurance/Inspection: Improperly operated machinery may produce substandard products. Unsupervised machine learning can be used to monitor functions and processes to flag any anomalies. Unlike standard QA processes, it can do this without labeling and training.

- Identification of image anomalies: This is particularly useful in medical imaging to identify dangerous pathologies.

- Cybersecurity: One of the biggest challenges in cybersecurity is that threats are constantly changing. In this case, anomaly detection via unsupervised ML can be very effective. One standard security technique is to monitor data flows. If a PLC that normally sends commands to other components suddenly starts receiving a steady stream of commands from atypical devices or IP addresses, this could indicate an intrusion. But what if the malicious code comes from a trusted source (or a bad actor spoofs a trusted source)? Unsupervised learning can detect bad actors by looking for atypical behavior in devices receiving commands.

- Test data analysis: Testing plays a vital role in both design and production. The two biggest challenges involved are the sheer volume of data involved, and the ability to analyze the data without introducing inherent bias. Unsupervised machine learning can solve both challenges. It can be a particular benefit during the development process or production troubleshooting when the test team is not even sure what they are looking for.

Dimensionality reduction

Machine learning is based on large amounts of data, often very large amounts. It’s one thing to filter a data set with ten to dozens of features. Datasets with thousands of features (and they certainly exist) can be overwhelming. Therefore, the first step in ML can be dimensionality reduction to reduce the data to the most meaningful features.

A common algorithm used for dimensionality reduction, pattern recognition, and data exploration is principal component analysis (PCA). A detailed discussion of this algorithm is beyond the scope of this article. Arguably it can help identify mutually orthogonal data subsets, i.e. they can be removed from the data set without affecting the main analysis. PCA has several interesting use cases:

- Data Preprocessing: When it comes to machine learning, the often-stated philosophy is that more is better. That said, sometimes more is more, especially in the case of irrelevant/redundant data. In these cases, unsupervised machine learning can be used to remove unnecessary features (data dimensions), speeding up processing time and improving results. In the case of visual systems, unsupervised machine learning can be used for noise reduction.

- Image Compression: PCA is very good at reducing the dimensionality of a data set while retaining meaningful information. This makes the algorithm very good at image compression.

- Pattern Recognition: The same capabilities discussed above make PCA useful for tasks such as facial recognition and other complex image recognition.

Unsupervised machine learning is not better or worse than supervised machine learning. For the right project, it can be very effective. That said, the best rule of thumb is to keep it simple, so unsupervised machine learning is generally only used on problems that supervised machine learning cannot solve.

Think about the following questions to determine which machine learning approach is best for your project:

- What is the question ?

- What is a business case? What is the goal of quantification? How quickly will the project provide a return on investment? How does this compare to supervised learning or other more traditional solutions?

- What types of input data are available? how much do you have? Is it relevant to the question you want to answer? Is there a process that already produces labeled data, for example, is there a QA process that identifies defective products? Is there a maintenance database that records equipment failures?

- Is it suitable for unsupervised machine learning?

Finally, here are some tips to help ensure success:

- Do your homework and develop a strategy before starting a project.

- Start small and fix errors on a smaller scale.

- Make sure the solution is scalable, you don’t want to end up in pilot project purgatory.

- Consider working with a partner. All types of machine learning require expertise. Find the right tools and partners to automate. Don't reinvent the wheel. You can pay to build the necessary skills in-house, or you can direct your resources toward delivering the products and services you do best while letting your partners and ecosystem handle the heavy lifting.

Data collected in industrial settings can be a valuable resource, but only if properly harnessed. Unsupervised machine learning can be a powerful tool for analyzing data sets to extract actionable insights. Adopting this technology can be challenging, but it can provide a significant competitive advantage in a challenging world.

The above is the detailed content of How can unsupervised machine learning benefit industrial automation?. For more information, please follow other related articles on the PHP Chinese website!

Convert Text Documents to a TF-IDF Matrix with tfidfvectorizerApr 18, 2025 am 10:26 AM

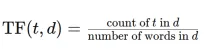

Convert Text Documents to a TF-IDF Matrix with tfidfvectorizerApr 18, 2025 am 10:26 AMThis article explains the Term Frequency-Inverse Document Frequency (TF-IDF) technique, a crucial tool in Natural Language Processing (NLP) for analyzing textual data. TF-IDF surpasses the limitations of basic bag-of-words approaches by weighting te

Building Smart AI Agents with LangChain: A Practical GuideApr 18, 2025 am 10:18 AM

Building Smart AI Agents with LangChain: A Practical GuideApr 18, 2025 am 10:18 AMUnleash the Power of AI Agents with LangChain: A Beginner's Guide Imagine showing your grandmother the wonders of artificial intelligence by letting her chat with ChatGPT – the excitement on her face as the AI effortlessly engages in conversation! Th

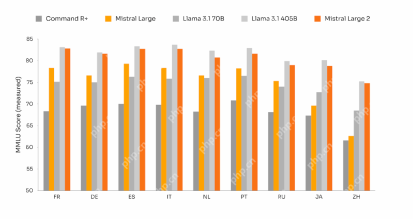

Mistral Large 2: Powerful Enough to Challenge Llama 3.1 405B?Apr 18, 2025 am 10:16 AM

Mistral Large 2: Powerful Enough to Challenge Llama 3.1 405B?Apr 18, 2025 am 10:16 AMMistral Large 2: A Deep Dive into Mistral AI's Powerful Open-Source LLM Meta AI's recent release of the Llama 3.1 family of models was quickly followed by Mistral AI's unveiling of its largest model to date: Mistral Large 2. This 123-billion paramet

What is Noise Schedules in Stable Diffusion? - Analytics VidhyaApr 18, 2025 am 10:15 AM

What is Noise Schedules in Stable Diffusion? - Analytics VidhyaApr 18, 2025 am 10:15 AMUnderstanding Noise Schedules in Diffusion Models: A Comprehensive Guide Have you ever been captivated by the stunning visuals of digital art generated by AI and wondered about the underlying mechanics? A key element is the "noise schedule,&quo

How to Build a Conversational Chatbot with GPT-4o? - Analytics VidhyaApr 18, 2025 am 10:06 AM

How to Build a Conversational Chatbot with GPT-4o? - Analytics VidhyaApr 18, 2025 am 10:06 AMBuilding a Contextual Chatbot with GPT-4o: A Comprehensive Guide In the rapidly evolving landscape of AI and NLP, chatbots have become indispensable tools for developers and organizations. A key aspect of creating truly engaging and intelligent chat

Top 7 Frameworks for Building AI Agents in 2025Apr 18, 2025 am 10:00 AM

Top 7 Frameworks for Building AI Agents in 2025Apr 18, 2025 am 10:00 AMThis article explores seven leading frameworks for building AI agents – autonomous software entities that perceive, decide, and act to achieve goals. These agents, surpassing traditional reinforcement learning, leverage advanced planning and reasoni

What's the Difference Between Type I and Type II Errors ? - Analytics VidhyaApr 18, 2025 am 09:48 AM

What's the Difference Between Type I and Type II Errors ? - Analytics VidhyaApr 18, 2025 am 09:48 AMUnderstanding Type I and Type II Errors in Statistical Hypothesis Testing Imagine a clinical trial testing a new blood pressure medication. The trial concludes the drug significantly lowers blood pressure, but in reality, it doesn't. This is a Type

Automated Text Summarization with Sumy LibraryApr 18, 2025 am 09:37 AM

Automated Text Summarization with Sumy LibraryApr 18, 2025 am 09:37 AMSumy: Your AI-Powered Summarization Assistant Tired of sifting through endless documents? Sumy, a powerful Python library, offers a streamlined solution for automatic text summarization. This article explores Sumy's capabilities, guiding you throug

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

SublimeText3 English version

Recommended: Win version, supports code prompts!

SublimeText3 Chinese version

Chinese version, very easy to use

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.

PhpStorm Mac version

The latest (2018.2.1) professional PHP integrated development tool