
Meta's latest image generation tool is so popular that it can turn dreams into reality!

- WBOY (reposted)

- 2023-04-08 17:11:04 (1407 views)

AI is very good at painting.

Recently, Meta has also developed an AI "painter" - Make-A-Scene.

Still think that generating a painting takes nothing more than a text prompt?

Relying on a text description alone can sometimes backfire, as it did with Parti, the "artist" Google launched some time ago.

"A plate without a banana, and a glass without orange juice next to it."

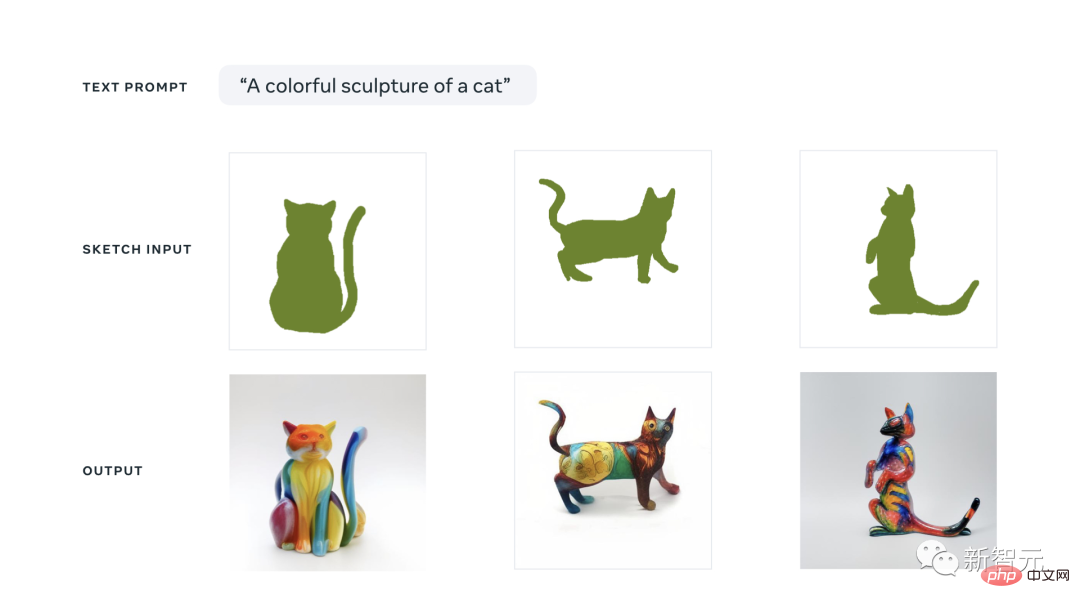

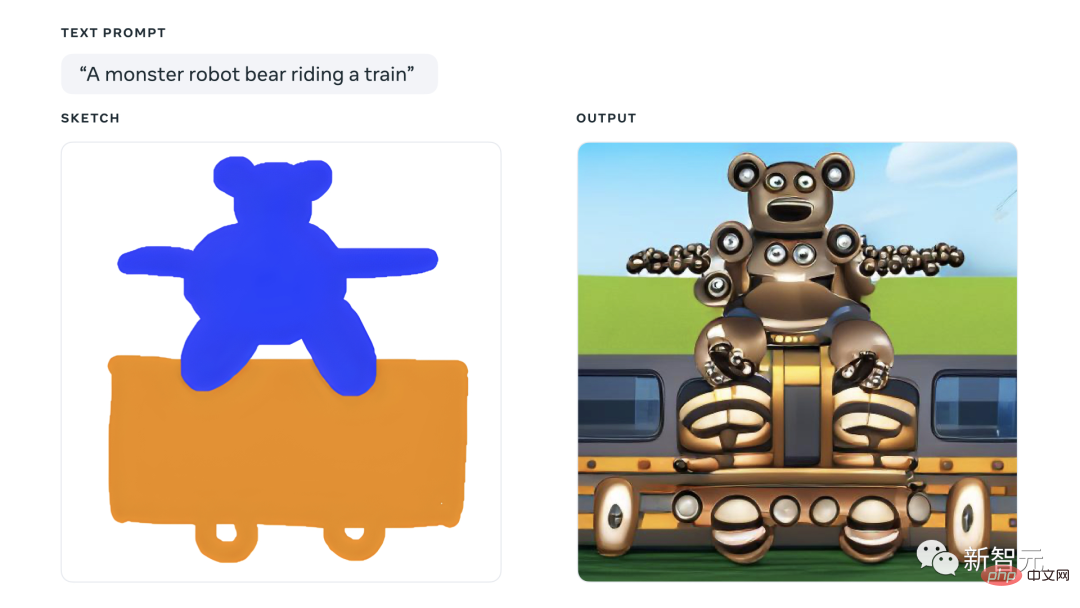

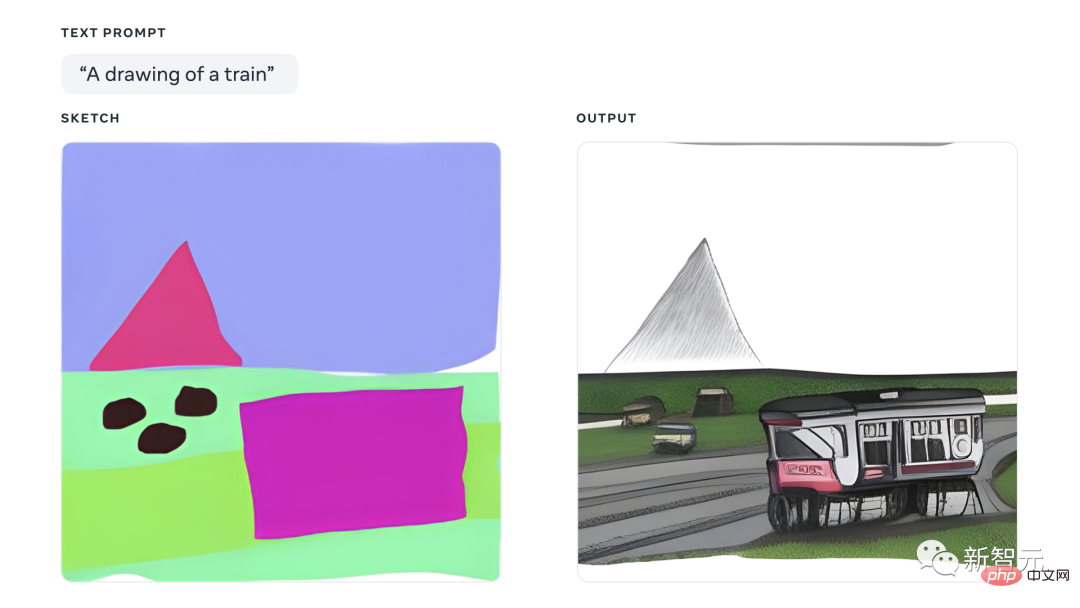

This time, Make-A-Scene lets you combine a text description with a sketch to create the look you want.

You have the final say on where each element sits in the composition, as well as its size, shape and other properties.

Even LeCun has stepped up to promote his own company's product. Creativity goes without saying; the key is that the result is "controllable"!

Make-A-Scene is so awesome, let’s take a look.

Meta's own Ma Liang and his magic brush

Talk is cheap without a demonstration!

Let’s see how people use Make-A-Scene to realize their imagination.

The research team held a Make-A-Scene demonstration session with well-known AI artists.

The lineup was strong, including Sofia Crespo, Scott Eaton, Alexander Reben, Refik Anadol and others. These masters all have first-hand experience applying generative artificial intelligence.

The R&D team had these artists use Make-A-Scene as part of their creative process and give feedback along the way.

Next, let’s appreciate the works created by the masters using Make-A-Scene.

For example, Sofia Crespo is an artist who focuses on the intersection of nature and technology. She loves imagining artificial life forms that have never existed, so she used Make-A-Scene's sketching and text prompting features to create brand new "hybrid creatures."

For example, flower-shaped jellyfish.

Crespo used the tool's freeform drawing capabilities to iterate quickly on new ideas. She said that Make-A-Scene will help artists express their creativity better and let them draw through a more intuitive interface.

(Flower Jellyfish)

Scott Eaton is an artist, educator, and creative technologist whose work investigates the relationship between contemporary realities and technology.

He uses Make-A-Scene as a way to compose a scene and then explores variations of it through different prompts, such as "sunken and decaying skyscrapers in the desert", a theme meant to highlight the climate crisis.

(Skyscrapers in the Desert)

Alexander Reben is an artist, researcher and roboticist.

He believes that if he can have more control over the output, it will really help to express his artistic intentions. He incorporates these tools into his ongoing series.

For media artist and director Refik Anadol, this tool is a way to promote the development of imagination and better explore unknown territories.

In fact, this prototype tool is not just for people who are interested in art.

The research team believes that Make-A-Scene can help anyone express themselves better, including those with little artistic talent.

As a start, the research team has given access to some Meta employees, who are testing Make-A-Scene and providing feedback on their experience.

Meta project manager Andy Boyatzis uses Make-A-Scene to create art with his two- and four-year-old children. They use playful drawings to bring their ideas and imagination to life.

The following is their work~

A colorful sculpture cat~Isn’t it cute? But this color is actually a bit unbearable to look at, like a child kneading a big lump of plasticine together.

A monster bear riding a train. Seriously, people with trypophobia should stay away. The editor jumped right up after seeing this picture. Look at these weird arms, a body like a face, wheels like eyeballs...

A mountain peak. To be honest, this picture is quite artistic. But do you feel that the mountains in the distance and the small train nearby are not the same style at all?

Behind the Technology

While current methods provide reasonably good conversions between the text and image domains, several key issues have still not been well addressed: controllability, human perception, and image quality.

The method of this model improves structural consistency and image quality to a certain extent.

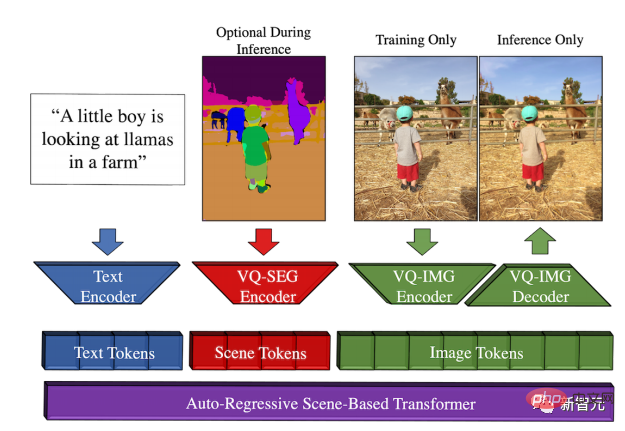

The entire scene consists of three complementary semantic segmentation groups (panoptic, human and face).

By combining the three extracted semantic segmentation groups, the network learns both to generate the semantic layout and to condition on it when generating the final image.

In order to create the token space of the scene, the authors adopted "VQ-SEG", which is an improvement on "VQ-VAE".

In this implementation, the input and output of "VQ-SEG" both have m channels: one per segmentation class, plus an additional channel that holds a map of the edges separating different classes and instances. The edge channel provides separation between adjacent instances of the same class and emphasizes rare classes of high importance.
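To make the role of the edge channel concrete, here is a minimal sketch of one way to derive an edge map from a label grid. The article does not describe how the authors compute their edges, so the 4-neighbor rule and the `edge_map` helper are assumptions for illustration only.

```python
def edge_map(labels):
    """Mark a pixel as an edge (1) if any 4-connected neighbor has a different label.

    This neighbor rule is an illustrative assumption, not the authors' method.
    """
    height, width = len(labels), len(labels[0])
    edges = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                ny, nx = y + dy, x + dx
                inside = 0 <= ny < height and 0 <= nx < width
                if inside and labels[ny][nx] != labels[y][x]:
                    edges[y][x] = 1
                    break
    return edges


# Each pixel is labeled (class_id, instance_id).
# Two instances of the same hypothetical class stand side by side.
PERSON = 1
labels = [
    [(PERSON, 0), (PERSON, 0), (PERSON, 1), (PERSON, 1)],
    [(PERSON, 0), (PERSON, 0), (PERSON, 1), (PERSON, 1)],
]

instance_edges = edge_map(labels)

# With class ids only, the two instances merge into one region and no edge appears.
class_only = [[class_id for class_id, _ in row] for row in labels]
class_edges = edge_map(class_only)
```

In this toy grid the class channel alone cannot tell the two instances apart, while the edge map draws a boundary between them, which is the separation the edge channel is described as providing.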

When training the "VQ-SEG" network, each face part occupies a relatively small number of pixels in the scene space, so the semantic segmentation of face parts (such as the eyes, nose, lips and eyebrows) is frequently degraded in the reconstructed scene.

In this regard, the authors tried to use weighted binary cross-entropy face loss based on segmented face part classification to highlight the importance of the face part. In addition, the edges of the face parts are also used as part of the above-mentioned semantic segmentation edge map.
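A weighted binary cross-entropy can be sketched in a few lines. The function below is a generic version of that loss, not the authors' implementation; the per-pixel weights, including the value 5.0 for face-part pixels, are hypothetical.

```python
import math


def weighted_bce(probabilities, targets, weights, eps=1e-7):
    """Mean binary cross-entropy where each pixel's term is scaled by its weight."""
    total = 0.0
    for p, t, w in zip(probabilities, targets, weights):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total += -w * (t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(probabilities)


# Two pixels of one face-part channel: the first belongs to the part, the second does not.
predictions = [0.5, 0.5]
targets = [1, 0]

plain_loss = weighted_bce(predictions, targets, weights=[1.0, 1.0])
# Hypothetical weighting: the face-part pixel counts five times as much.
face_weighted_loss = weighted_bce(predictions, targets, weights=[5.0, 1.0])
```

Raising the weight on face-part pixels makes errors there cost more, which pushes the network to keep small regions such as eyes and lips in the reconstruction.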

The authors adopt a pre-trained VGG network trained on the ImageNet dataset instead of a dedicated face embedding network, and introduce a feature matching loss that represents the perceptual difference between the reconstructed image and the real image.
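As a rough illustration of a feature matching loss, the sketch below compares per-layer feature activations of a real image and its reconstruction. It assumes an L1 distance averaged within each layer, which the article does not specify, and it uses hand-written lists in place of real VGG activations.

```python
def feature_matching_loss(real_features, reconstructed_features):
    """Sum over layers of the mean absolute difference between feature activations.

    The L1 distance and per-layer averaging are assumptions made for this sketch.
    """
    loss = 0.0
    for real_layer, recon_layer in zip(real_features, reconstructed_features):
        layer_diff = sum(abs(r - g) for r, g in zip(real_layer, recon_layer))
        loss += layer_diff / len(real_layer)
    return loss


# Stand-in activations for two layers (flattened); a real setup would take
# these from a pre-trained network's intermediate layers.
real = [[1.0, 2.0], [0.5, 0.5, 0.5, 0.5]]
reconstructed = [[1.0, 1.0], [0.5, 0.5, 0.5, 0.0]]

loss = feature_matching_loss(real, reconstructed)
```

The loss is zero only when the reconstruction produces the same activations as the real image at every compared layer, so it measures perceptual rather than pixel-level difference.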

By using feature matching and adding extra upsampling and downsampling layers to the encoder and decoder of VQ-IMG, the resolution of the output image can be increased from 256×256 to 512×512.

I believe everyone is familiar with the Transformer, so what is a scene-based Transformer?

It relies on an autoregressive Transformer with three independent, consecutive token spaces: text, scene and image.

The token sequence consists of text tokens encoded by a BPE encoder, scene tokens encoded by VQ-SEG, and image tokens encoded or decoded by VQ-IMG.

Before the scene-based Transformer is trained, the token sequence for each [text, scene, image] triplet is extracted using the corresponding encoders.
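One common way to place several token spaces in a single sequence is to shift each group's ids by an offset so they never collide. The sketch below assumes that approach; the article does not say how the authors lay out their vocabulary, and the vocabulary sizes and token ids here are made up.

```python
def build_sequence(text_tokens, scene_tokens, image_tokens, text_vocab, scene_vocab):
    """Concatenate text, scene and image tokens, offsetting ids so the spaces stay disjoint."""
    scene_offset = text_vocab
    image_offset = text_vocab + scene_vocab
    return (
        list(text_tokens)
        + [token + scene_offset for token in scene_tokens]
        + [token + image_offset for token in image_tokens]
    )


# Hypothetical sizes: 10 text ids (0-9), 5 scene ids (10-14), image ids from 15 up.
sequence = build_sequence(
    text_tokens=[3, 7],
    scene_tokens=[0, 4],
    image_tokens=[2],
    text_vocab=10,
    scene_vocab=5,
)
```

An autoregressive model trained on such sequences predicts each token from everything before it, so the image tokens end up conditioned on both the text and the scene.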

In addition, the authors adopted classifier-free guidance, which is the process of guiding an unconditional sample toward a conditional sample.
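For an autoregressive model, classifier-free guidance is usually applied to the next-token logits: start from the unconditional logits and move toward the conditional ones by a guidance scale. The sketch below shows that standard formula; the scale value and the example logits are hypothetical.

```python
def guided_logits(conditional, unconditional, scale):
    """Classifier-free guidance: unconditional + scale * (conditional - unconditional)."""
    return [u + scale * (c - u) for c, u in zip(conditional, unconditional)]


# Next-token logits over a toy two-token vocabulary.
conditional = [2.0, 0.0]    # computed with the text prompt
unconditional = [1.0, 1.0]  # computed with the prompt replaced by an empty one

strong = guided_logits(conditional, unconditional, scale=3.0)
neutral = guided_logits(conditional, unconditional, scale=1.0)
```

A scale of 1 reproduces the conditional logits, while larger scales exaggerate whatever the text prompt changed, trading diversity for closer adherence to the prompt.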

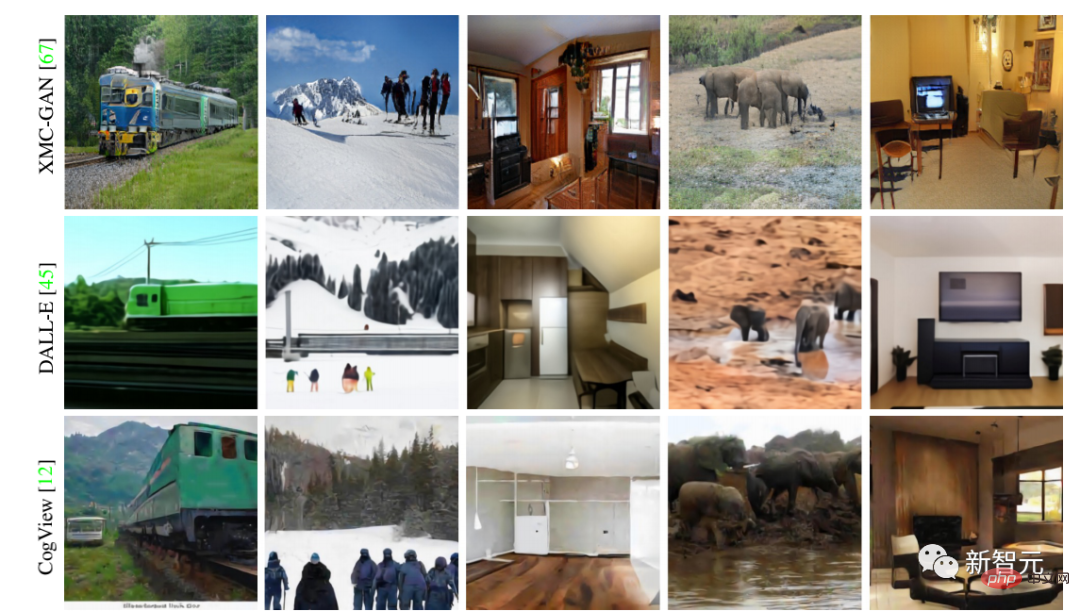

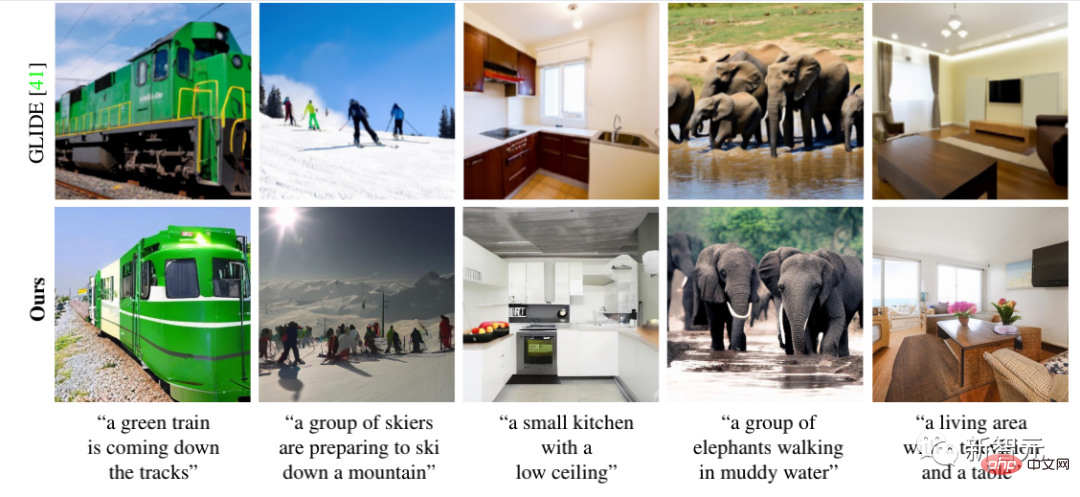

This model achieves SOTA results. Let's take a closer look at how it compares with previous methods.

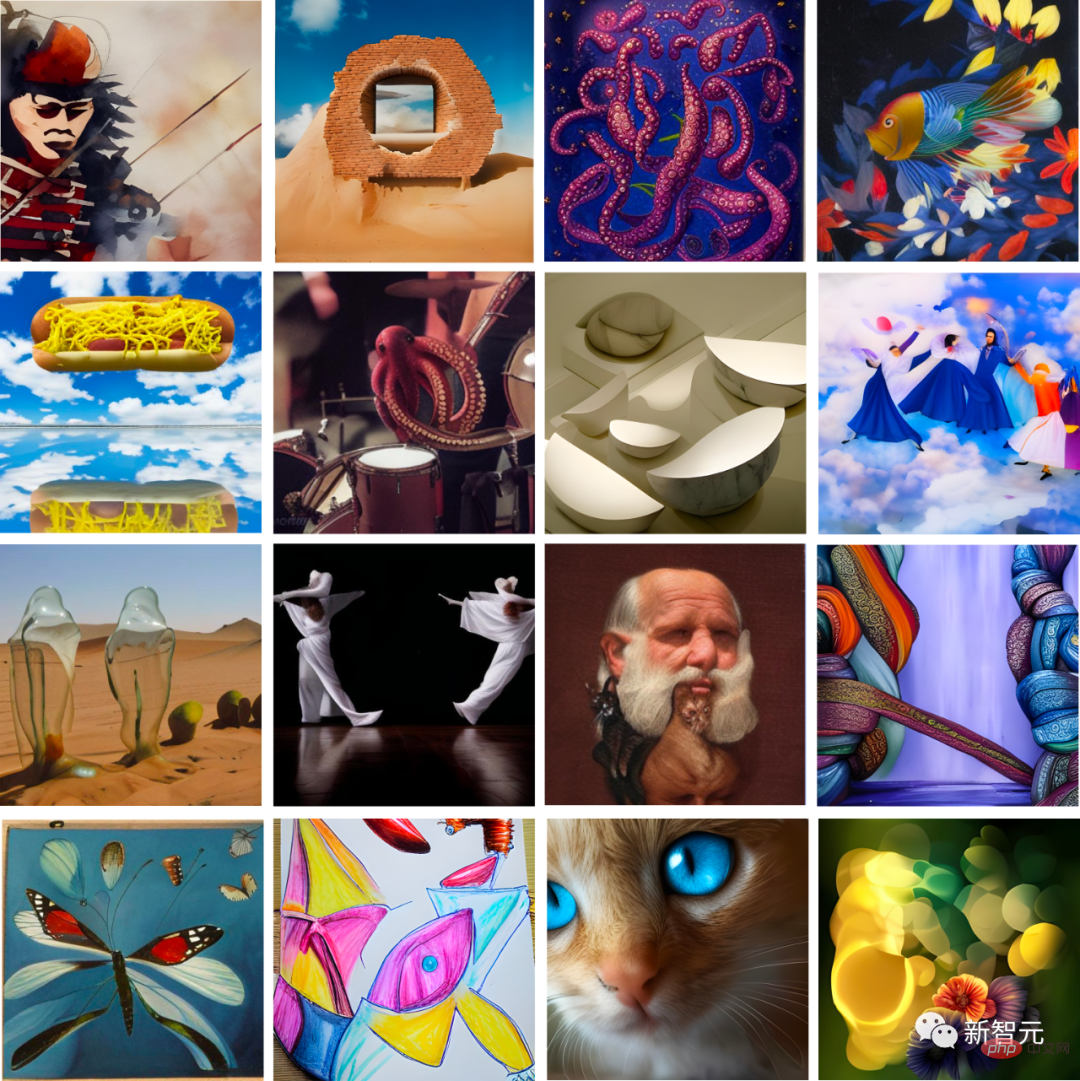

The researchers have now also integrated Make-A-Scene with a super-resolution network, so it can generate images at 2048×2048, four times the resolution.

In fact, like other generative AI models, Make-A-Scene learns the relationship between vision and text by training on millions of example images.

It is undeniable that bias reflected in the training data can affect the output of these models.

As the researchers pointed out, Make-A-Scene still has many areas to improve.
