One article to understand edge computing technology in autonomous driving systems

With the arrival of the 5G era, edge computing has become a new growth area for autonomous driving systems. It is expected that more than 60% of data and applications will eventually be generated and processed at the edge.

Edge computing is a computing model that performs computation at the edge of the network. The data it processes falls into two parts: downlink data from cloud services and uplink data from Internet of Everything services. "Edge" is a relative concept: it refers to any computing, storage, and network resources on the path from the data source to the cloud computing center. Depending on the needs of the application and the actual scenario, the edge can be one or more resource nodes on this path. In business terms, edge computing is the extension and evolution of cloud computing's aggregation nodes beyond the data center. It takes three main implementation forms: edge cloud, edge network, and edge gateway.

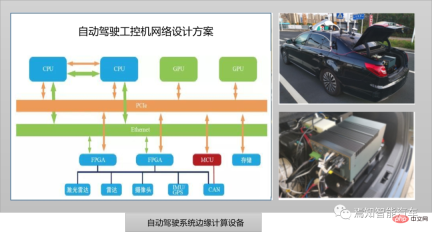

The picture above shows an industrial computer (IPC) currently used in autonomous driving. It is essentially a ruggedized, enhanced personal computer that can operate reliably in an industrial environment as an industrial controller. It uses an all-steel industrial chassis that complies with EIA standards to improve resistance to electromagnetic interference, and it uses a bus structure and modular design to prevent single points of failure. The network design of this autonomous driving IPC fully considers the requirements of ISO 26262: the CPU, GPU, FPGA, and bus are all designed with redundancy. When the IPC system as a whole fails, a redundant MCU can take over control, ensure computing safety, and send instructions directly to the vehicle CAN bus to bring the vehicle to a stop. This centralized architecture suits the next generation of centralized autonomous driving solutions, in which the IPC acts as a centralized domain controller. All computing work is unified in one place, so algorithm iteration does not need to take much account of overall hardware upgrades or automotive-grade requirements.
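
The failover behavior described above can be pictured as a heartbeat watchdog running on the redundant MCU. The following is only a minimal sketch under stated assumptions: the class name `SafetyMonitor`, the timeout value, and the `"SAFE_STOP"` string are hypothetical placeholders, and a real system would send an actual CAN frame through its own driver.

```python
from typing import Callable, List


class SafetyMonitor:
    """Hypothetical watchdog on the redundant MCU (illustrative only)."""

    def __init__(self, timeout_s: float, send_can: Callable[[str], None],
                 start: float = 0.0) -> None:
        self.timeout_s = timeout_s
        self.send_can = send_can        # callback that writes to the CAN bus
        self.last_heartbeat = start
        self.tripped = False

    def heartbeat(self, now: float) -> None:
        """Called whenever the IPC reports that it is alive."""
        self.last_heartbeat = now

    def poll(self, now: float) -> bool:
        """Return True once the IPC is considered failed.

        The stop command is issued exactly once, on the first stale poll.
        """
        if not self.tripped and now - self.last_heartbeat > self.timeout_s:
            self.tripped = True
            self.send_can("SAFE_STOP")  # placeholder, not a real CAN frame
        return self.tripped


sent: List[str] = []
monitor = SafetyMonitor(timeout_s=0.1, send_can=sent.append)
monitor.heartbeat(now=0.05)
healthy = monitor.poll(now=0.10)  # heartbeat is recent, nothing is sent
failed = monitor.poll(now=0.30)   # heartbeat is stale, stop command is sent
```

Passing the current time in as an argument, rather than reading a clock inside the class, keeps the sketch deterministic and easy to test.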

Edge Computing and Edge Cloud

In current autonomous driving, large-scale artificial intelligence models and large-scale centralized data analysis are both run in the cloud, because the cloud has abundant computing resources and can complete data processing in a very short time. However, relying solely on the cloud to serve autonomous vehicles is not feasible in many cases. Autonomous vehicles generate a large amount of data that must be processed in real time while driving. If this data is sent over the core network to a remote cloud for processing, the transmission alone introduces a large delay and cannot meet the real-time requirements of data processing. The bandwidth of the core network also struggles to support a large number of self-driving cars sending large amounts of data to the cloud at the same time. Moreover, once the core network is congested and data transmission becomes unstable, the driving safety of self-driving cars cannot be guaranteed.
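
To make the delay argument concrete, a rough back-of-the-envelope estimate can help. The sketch below assumes a simple model (serialization time plus one round trip), and the frame size, bandwidths, and round-trip times are illustrative assumptions, not measurements from any real network.

```python
def transfer_delay_ms(payload_bytes: int, bandwidth_mbps: float,
                      rtt_ms: float) -> float:
    """Estimate one-shot delay: time to push the bits out plus one round trip."""
    serialization_ms = payload_bytes * 8 / (bandwidth_mbps * 1_000_000) * 1000
    return serialization_ms + rtt_ms


# Assumed, illustrative numbers only.
FRAME_BYTES = 2_000_000  # one 2 MB sensor frame

# Remote cloud over the core network: lower share of bandwidth, longer RTT.
cloud_ms = transfer_delay_ms(FRAME_BYTES, bandwidth_mbps=100, rtt_ms=60)

# Nearby edge node: higher bandwidth, short RTT.
edge_ms = transfer_delay_ms(FRAME_BYTES, bandwidth_mbps=1000, rtt_ms=5)

print(f"cloud: {cloud_ms:.0f} ms, edge: {edge_ms:.0f} ms")
```

Under these assumed values the cloud path costs roughly ten times as much per frame as the edge path, before any processing time is counted.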

Edge computing focuses on local services that have high real-time requirements and would otherwise put heavy pressure on the network, and its computation is oriented toward localization. It is better suited to local, small-scale intelligent analysis and preprocessing based on integrated algorithm models. Applying edge computing to autonomous driving helps solve the problems autonomous vehicles face in acquiring and processing environmental data.

As two important computing methods for the digital transformation of industry, edge computing and cloud computing coexist, complement and reinforce each other, and jointly address computing problems in the era of big data.

Edge computing refers to a computing model that performs computation at the edge of the network. It operates on downlink data from cloud services and uplink data from Internet of Everything services, and the "edge" refers to any computing and network resources on the path from the data source to the cloud computing center. In short, edge computing deploys servers on edge nodes near users, such as wireless access points, so that services are provided at the edge of the network, long-distance data transmission is avoided, and users get faster responses. Task offloading technology moves the computing tasks of autonomous vehicles to other edge nodes for execution, which addresses the problem of insufficient on-board computing resources.
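
A task offloading decision can be sketched as a comparison between local execution time and upload-plus-remote execution time. This is a simplified illustration, not a production scheduler: the `Task` fields, the clock rates, and the link parameters are all assumptions introduced for the example.

```python
from dataclasses import dataclass


@dataclass
class Task:
    cycles: float      # CPU cycles the task needs
    input_bytes: int   # data that must be uploaded if the task is offloaded


def local_time_s(task: Task, local_hz: float) -> float:
    """Time to run the task on the vehicle's own processor."""
    return task.cycles / local_hz


def offload_time_s(task: Task, edge_hz: float, uplink_bps: float,
                   rtt_s: float) -> float:
    """Upload time + network round trip + execution time on the edge node."""
    return task.input_bytes * 8 / uplink_bps + rtt_s + task.cycles / edge_hz


def should_offload(task: Task, local_hz: float, edge_hz: float,
                   uplink_bps: float, rtt_s: float) -> bool:
    """Offload only when the edge path finishes sooner than local execution."""
    return offload_time_s(task, edge_hz, uplink_bps, rtt_s) < local_time_s(task, local_hz)


# Assumed link and processor parameters (illustrative only).
params = dict(local_hz=1e9, edge_hz=10e9, uplink_bps=50e6, rtt_s=0.01)

heavy = Task(cycles=2e9, input_bytes=500_000)   # compute-bound task
light = Task(cycles=1e7, input_bytes=500_000)   # small task, same input size

offload_heavy = should_offload(heavy, **params)
offload_light = should_offload(light, **params)
```

With these numbers the heavy task is worth offloading, while the light task is not, because its upload time alone exceeds its local execution time.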

Edge computing has the characteristics of proximity, low latency, locality and location awareness. Among them, proximity means that edge computing is close to the information source. It is suitable for capturing and analyzing key information in big data through data optimization. It can directly access the device, serve edge intelligence more efficiently, and easily derive specific application scenarios. Low latency means that edge computing services are close to the terminal devices that generate data. Compared with cloud computing, latency is greatly reduced, especially in smart driving application scenarios, making the feedback process faster. Locality means that edge computing can run in isolation from the rest of the network to achieve localized, relatively independent computing. On the one hand, it ensures local data security, and on the other hand, it reduces the dependence of computing on network quality. Location awareness means that when the edge network is part of a wireless network, edge computing-style local services can use relatively little information to determine the location of all connected devices. These services can be applied to location-based service application scenarios.

At the same time, edge computing is gradually evolving toward heterogeneous computing, edge intelligence, edge-cloud collaboration, and 5G edge computing. Heterogeneous computing combines computing units with different instruction sets and architectures into one computing system to meet the diverse computing needs of edge services. It can support the infrastructure of a new generation of "connected computing", meet the needs of fragmented industries and differentiated applications, improve the utilization of computing resources, and support flexible deployment and scheduling of computing power.

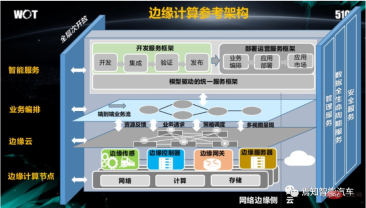

Edge Computing Reference Architecture

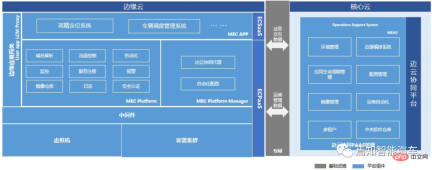

Each layer of the edge computing reference architecture provides modeled open interfaces, making the architecture open at every level. Through vertical management services, full data life cycle services, and security services, it delivers intelligent services across the full business process and the full life cycle.

As shown in the figure above, the edge computing reference architecture mainly includes the following contents:

The entire system is divided into four layers: intelligent services, business orchestration, edge cloud, and edge computing nodes. Edge computing sits between the cloud and field devices. The edge layer supports access by various field devices below it and connects to the cloud above it. The edge layer has two main parts: edge nodes and the edge manager. An edge node is a hardware entity and is the core that carries edge computing services. The edge manager is primarily software, and its main function is to manage edge nodes in a unified way. Edge computing nodes generally have computing, network, and storage resources. The edge computing system uses these resources in two ways. First, it directly encapsulates computing, network, and storage resources and exposes a calling interface, which the edge manager uses for operations such as code download, network policy configuration, and database operations. Second, it further encapsulates edge node resources into functional modules by functional area, and the edge manager combines and calls these modules through model-driven business orchestration to achieve integrated development and agile deployment of edge computing services.
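
The two ways of using edge node resources can be illustrated with a small sketch. All class and method names here (`EdgeNode`, `EdgeManager`, `download_code`, `register_module`, `orchestrate`) are hypothetical and chosen only for this example; they do not correspond to any specific edge computing framework.

```python
from typing import Any, Callable, Dict, List


class EdgeNode:
    """Hypothetical edge node exposing both resource-usage modes."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.deployed_code: List[str] = []
        self.modules: Dict[str, Callable[[Any], Any]] = {}

    # Mode 1: raw resources wrapped behind a calling interface.
    def download_code(self, artifact: str) -> None:
        self.deployed_code.append(artifact)

    # Mode 2: resources packaged as named functional modules.
    def register_module(self, name: str, fn: Callable[[Any], Any]) -> None:
        self.modules[name] = fn


class EdgeManager:
    """Hypothetical manager that controls nodes in a unified way."""

    def __init__(self) -> None:
        self.nodes: Dict[str, EdgeNode] = {}

    def add_node(self, node: EdgeNode) -> None:
        self.nodes[node.name] = node

    def deploy(self, node_name: str, artifact: str) -> None:
        """Mode 1: call the node's resource interface directly."""
        self.nodes[node_name].download_code(artifact)

    def orchestrate(self, node_name: str, workflow: List[str], data: Any) -> Any:
        """Mode 2: chain functional modules in the order the workflow lists."""
        node = self.nodes[node_name]
        for step in workflow:
            data = node.modules[step](data)
        return data


node = EdgeNode("rsu-01")
node.register_module("drop_invalid", lambda xs: [x for x in xs if x >= 0])
node.register_module("mean", lambda xs: sum(xs) / len(xs))

manager = EdgeManager()
manager.add_node(node)
manager.deploy("rsu-01", "perception-v2.bin")
result = manager.orchestrate("rsu-01", ["drop_invalid", "mean"], [4.0, -1.0, 6.0])
```

In this sketch the workflow is just a list of module names, which stands in for the model-driven orchestration described in the text.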

Hardware infrastructure for edge computing

1. Edge server

The edge server is the main computing carrier for edge computing and edge data centers, and it can be deployed in an operator's equipment room. Because edge computing environments vary greatly, and edge services have individual requirements for latency, bandwidth, GPU, and AI, edge servers should require as little on-site work from engineers as possible and should offer strong management and operations capabilities, including status collection, operation control, and management interfaces, to enable remote and automated management.

In autonomous driving systems, an intelligent edge all-in-one machine is usually used to integrate computing, storage, network, virtualization, environmental power, and other products into a single industrial computer, which simplifies operating the autonomous driving system.

2. Edge access network

The edge computing access network refers to the series of network infrastructures traversed between the user system and the edge computing system, including but not limited to campus networks, access networks, and edge gateways. It features convergence, low latency, high bandwidth, massive connections, and high security.

3. Edge internal network

The edge computing internal network refers to the internal network infrastructure of the edge computing system, such as the network equipment connected to the server, the network equipment interconnected with the external network, and the network built by it. The internal network of edge computing has the characteristics of simplified architecture, complete functions, and greatly reduced performance loss; at the same time, it can achieve edge-cloud collaboration and centralized management and control.

Edge computing systems are naturally distributed: each one is small, but there are many of them. A single-point management model can hardly meet operational requirements, and it also occupies industrial computer resources and lowers cost-effectiveness. On the other hand, edge computing services emphasize end-to-end latency, bandwidth, and security, so collaboration between the cloud and the edge is also very important. It is generally necessary to introduce an intelligent cross-domain management and orchestration system into the cloud computing system to manage and control all edge computing network infrastructure within a given scope in a unified way, and to ensure automated, efficient configuration of network and computing resources through a centralized management model based on edge-cloud collaboration.

4. Edge computing interconnection network

The edge computing interconnection network covers the network infrastructure traversed from the edge computing system to cloud computing systems (such as public clouds, private clouds, communication clouds, and user-built clouds), to other edge computing systems, and to various data centers. It features diversified connections and low cross-domain latency.

How to combine edge computing and autonomous driving systems

In the next stage, relying on single-vehicle intelligence alone will be far from sufficient to accomplish higher-level autonomous driving tasks.

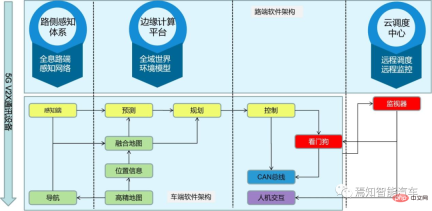

Collaborative sensing and task offloading are the main applications of edge computing in autonomous driving, and together they make high-level autonomous driving possible. Collaborative sensing lets cars obtain sensor information from other edge nodes, which expands the sensing range of autonomous vehicles and makes environmental data more complete. An autonomous car integrates sensors such as lidar and cameras. At the same time, it needs comprehensive perception of vehicle, road, and traffic data through vehicle networking (V2X) to obtain more information than the internal and external sensors of a single vehicle can provide, to enhance perception of the environment beyond visual range, and to share its position in real time through high-definition 3D dynamic maps. The collected data is exchanged with roadside edge nodes and surrounding vehicles to extend perception and achieve vehicle-to-vehicle and vehicle-to-road collaboration. The cloud computing center collects data from widely distributed edge nodes, senses the operating status of the transportation system, and uses big data and artificial intelligence algorithms to issue reasonable dispatching instructions to edge nodes, traffic signal systems, and vehicles, thereby improving the operating efficiency of the system. For example, in bad weather such as rain, snow, or heavy fog, or in scenes such as intersections and turns, radars and cameras cannot clearly identify obstacles ahead. Using V2X to obtain real-time road and driving data makes it possible to predict road conditions intelligently and avoid accidents.
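
Collaborative sensing can be illustrated by merging a vehicle's own detections with detections reported by a roadside node. The sketch below assumes both lists are already expressed in the same coordinate frame and uses a simple distance threshold to drop duplicates; the function name `fuse_detections` and the 1 m merge radius are assumptions made for illustration, and real systems use far more elaborate association and tracking.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) position in a shared map frame, in meters


def fuse_detections(own: List[Point], remote: List[Point],
                    merge_radius_m: float = 1.0) -> List[Point]:
    """Add remote detections that are not already covered by an existing one.

    A remote detection closer than merge_radius_m to any detection already in
    the fused list is treated as the same object and skipped.
    """
    fused = list(own)
    for candidate in remote:
        if all(math.dist(candidate, seen) > merge_radius_m for seen in fused):
            fused.append(candidate)
    return fused


# The vehicle sees one obstacle; the roadside unit sees the same obstacle
# plus a second one hidden around a corner (illustrative coordinates).
own_view = [(10.0, 0.0)]
rsu_view = [(10.3, 0.2), (45.0, -3.0)]

fused_view = fuse_detections(own_view, rsu_view)
```

The second object in `rsu_view` is the kind of beyond-visual-range information that the vehicle could not have obtained from its own sensors.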

With the improvement of autonomous driving levels and the increase in the number of smart sensors equipped, autonomous vehicles generate a large amount of raw data every day. These raw data require local real-time processing, fusion, and feature extraction, including target detection and tracking based on deep learning. At the same time, V2X needs to be used to improve the perception of the environment, roads and other vehicles, and use 3D high-definition maps for real-time modeling and positioning, path planning and selection, and driving strategy adjustment to safely control the vehicle. Since these tasks require real-time processing and response in the vehicle at all times, a powerful and reliable edge computing platform is required to perform them. Considering the diversity of computing tasks, in order to improve execution efficiency and reduce power consumption and cost, it is generally necessary to support heterogeneous computing platforms.

The edge computing architecture of autonomous driving relies on edge-cloud collaboration and on the communication infrastructure and services provided by LTE/5G. The edge side mainly refers to vehicle-mounted units, roadside units (RSU), and mobile edge computing (MEC) servers. The vehicle-mounted unit is the main body for environmental perception, decision-making and planning, and vehicle control, but it relies on cooperation from the RSU or MEC server. For example, the RSU provides the vehicle-mounted unit with more information about roads and pedestrians. Some functions, however, are better suited to the cloud or can only be provided there, such as vehicle remote control, vehicle simulation and verification, node management, and data persistence and management.

Edge computing for autonomous driving systems offers advantages such as load consolidation, heterogeneous computing, real-time processing, connectivity and interoperability, and security optimization.

1. "Load Consolidation"

Workloads with different attributes, such as ADAS, IVI, digital instruments, the head-up display, and the rear-seat entertainment system, run on the same hardware platform through virtualization. At the same time, load consolidation based on virtualization and a hardware abstraction layer makes it easier to implement cloud business orchestration, deep learning model updates, and software and firmware upgrades for the entire vehicle driving system.

2. "Heterogeneous computing"

The edge platform of an autonomous driving system integrates many different computing technologies. Computing tasks with different attributes, such as geolocation and path planning, deep-learning-based target recognition and detection, image preprocessing and feature extraction, and sensor fusion and target tracking, differ in performance and energy efficiency across hardware platforms, so they generally use different computing methods. GPUs are good at the convolutional calculations used for target recognition and tracking. CPUs deliver better performance and lower energy consumption for logic-heavy computation. Digital signal processors (DSPs) have an advantage in feature extraction algorithms such as those used for positioning. This heterogeneous approach greatly improves the performance-per-watt of the computing platform and reduces computing latency. Heterogeneous computing selects an appropriate hardware implementation for each computing task, makes full use of the strengths of each hardware platform, and hides hardware diversity behind a unified upper-layer software interface.
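
The idea of matching tasks to hardware by performance and energy consumption can be sketched as a lookup over a profile table. The numbers below are invented for illustration and are not benchmark results, and the choice of an energy-delay product as the score is an assumption of this sketch rather than something the article prescribes.

```python
from typing import Dict, Tuple

# task -> device -> (latency in ms, energy in joules); assumed values only.
PROFILE: Dict[str, Dict[str, Tuple[float, float]]] = {
    "object_detection":   {"CPU": (120.0, 3.0), "GPU": (15.0, 2.0), "DSP": (60.0, 1.5)},
    "path_planning":      {"CPU": (8.0, 0.3),   "GPU": (12.0, 1.0), "DSP": (20.0, 0.4)},
    "feature_extraction": {"CPU": (30.0, 1.0),  "GPU": (10.0, 1.2), "DSP": (9.0, 0.2)},
}


def pick_device(task: str) -> str:
    """Choose the device with the lowest energy-delay product for the task."""
    candidates = PROFILE[task]
    return min(candidates, key=lambda dev: candidates[dev][0] * candidates[dev][1])


def run(task: str) -> str:
    """Unified upper-layer entry point: callers never name the hardware."""
    return f"{task} -> {pick_device(task)}"


assignments = {task: pick_device(task) for task in PROFILE}
```

With these assumed profiles, detection lands on the GPU, planning on the CPU, and feature extraction on the DSP, which mirrors the division of labor described above. The `run` function stands in for the unified software interface that hides the hardware choice from the caller.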

3. "Real-time processing"

Autonomous driving systems have extremely high real-time requirements, because in a dangerous situation the system may have only a few seconds to brake and avoid a collision. Moreover, the braking reaction time includes the response time of the entire driving system: cloud computing processing time, vehicle-to-vehicle negotiation time, the vehicle's own system computation time, and braking processing time. When the response time is broken down into real-time requirements for each functional module of the edge computing platform, it needs to be refined into perception and detection time, fusion and analysis time, and behavior and path planning time. The latency of the entire network must also be considered, which is why the low-latency, high-reliability application scenarios brought by 5G are so important. 5G is designed to bring end-to-end latency down toward the 1 ms level with reliability close to 100%. It can also allocate network processing capacity flexibly according to priority, ensuring a faster response for vehicle control signal transmission.
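
The breakdown above can be turned into a simple latency budget check. The stage names follow the text, but every number below (stage latencies, the 150 ms deadline, the 108 km/h speed) is an assumed, illustrative value rather than a requirement from any standard.

```python
from typing import Dict

# Assumed per-stage latencies in milliseconds (illustrative only).
STAGE_LATENCY_MS: Dict[str, float] = {
    "perception": 30.0,
    "fusion": 10.0,
    "planning": 20.0,
    "network": 10.0,
    "actuation": 30.0,
}


def total_latency_ms(stages: Dict[str, float]) -> float:
    """End-to-end response time is the sum of all sequential stages."""
    return sum(stages.values())


def within_budget(stages: Dict[str, float], deadline_ms: float) -> bool:
    """Check whether the pipeline meets the overall response deadline."""
    return total_latency_ms(stages) <= deadline_ms


def reaction_distance_m(speed_kmh: float, latency_ms: float) -> float:
    """Distance the vehicle travels before the system has reacted."""
    return (speed_kmh / 3.6) * (latency_ms / 1000.0)


total_ms = total_latency_ms(STAGE_LATENCY_MS)
meets_deadline = within_budget(STAGE_LATENCY_MS, deadline_ms=150.0)
distance_m = reaction_distance_m(speed_kmh=108.0, latency_ms=total_ms)

print(f"total {total_ms:.0f} ms, travels {distance_m:.1f} m before reacting")
```

Expressing latency as distance traveled makes it easier to see why trimming even tens of milliseconds from any one stage matters at highway speeds.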

4. "Connectivity and Interoperability"

Edge computing for autonomous vehicles depends on vehicle wireless communication technology (V2X, vehicle-to-everything), which provides a means of communication between autonomous vehicles and other elements of the intelligent transportation system and is the basis for cooperation between autonomous vehicles and edge nodes.

Currently, V2X is mainly based on dedicated short range communication (DSRC) and cellular networks [5]. DSRC is a communication standard designed specifically for vehicle-to-vehicle (V2V) and vehicle-to-infrastructure (V2I) communication. Its advantages include a high data transmission rate, low latency, and support for point-to-point and point-to-multipoint communication. Cellular networks, represented by 5G, offer large network capacity and wide coverage, and are suitable for V2I communication and for communication between edge servers.

5. "Security Optimization"

Edge computing security is an important guarantee for edge computing. Its design combines the defense-in-depth security systems of cloud computing and edge computing, strengthens the ability of edge infrastructure, networks, applications, and data to identify and resist various security threats, and builds a safe and trusted environment for the development of edge computing. In the 5G core network used by next-generation autonomous driving systems, the control plane and data plane are separated, and NFV makes network deployment more flexible, which helps edge distributed computing to be deployed successfully. Edge computing moves more data computation and storage from the central unit to the edge, with computing power deployed close to the data source. Some data no longer has to cross the network to reach the cloud for processing, which reduces latency and network load and improves data security and privacy. In the future, edge computing for the Internet of Vehicles may be deployed on mobile communication equipment close to vehicles, such as base stations and roadside units, where it can handle local data processing, encryption, and decision-making and provide real-time, highly reliable communication.

Edge computing has extremely important applications in environmental perception and data processing of autonomous driving. Self-driving cars can expand their perception range by obtaining environmental information from edge nodes, and can also offload computing tasks to edge nodes to solve the problem of insufficient computing resources. Compared with cloud computing, edge computing avoids the high delays caused by long-distance data transmission, can provide faster responses to autonomous vehicles, and reduces the load on the backbone network. Therefore, the use of edge computing in the staged autonomous driving research and development process will be an important option for its continuous optimization and development.
