Using AI to find loved ones separated after the Holocaust! Google engineers develop facial recognition program that can identify more than 700,000 old World War II photos


Has AI facial recognition found a new field of application?

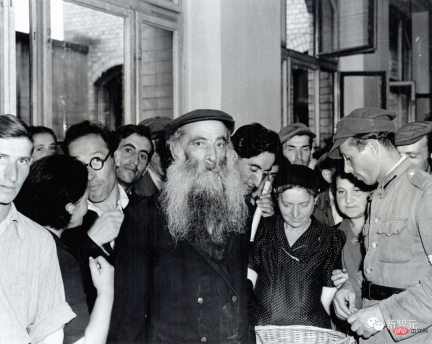

This time, it is being used to identify faces in old photos from World War II.

Recently, Daniel Patt, a software engineer at Google, developed an AI face recognition platform called N2N (From Numbers to Names), which can scan photos from prewar Europe and the Holocaust and link them to people living today.

Using AI to find long-lost relatives

In 2016, Patt came up with the idea while visiting the POLIN Museum of the History of Polish Jews in Warsaw.

Could these unfamiliar faces be related to him by blood?

Three of his grandparents were Holocaust survivors from Poland, and he wanted to help his grandmother find photos of family members killed by the Nazis.

During World War II, large numbers of Polish Jews were imprisoned in different concentration camps, and many of them went missing.

From a yellowed photo alone, it is difficult to identify the face in it, let alone find a lost relative.

So, he returned home and immediately turned this idea into reality.

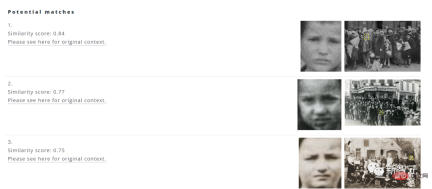

The original idea behind the software was to collect face images in a database and use artificial intelligence algorithms to return the ten entries most similar to a query photo.

Most of the image data comes from the United States Holocaust Memorial Museum, with more than a million images drawn from databases across the country.

Users only need to select an image file on their computer and click upload, and the system automatically returns the ten closest matches.

In addition, users can also click on the source address to view the year, location, collection and other information of the picture.
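The top-ten matching described above can be sketched as a nearest-neighbour search over face feature vectors. This is only an illustration under stated assumptions: the article does not say how N2N represents faces or scores similarity, so the use of cosine similarity, the function names `cosine_similarity` and `top_matches`, and the toy vectors are all hypothetical.

```python
import heapq
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def top_matches(query, archive, k=10):
    """Return the k archive entries most similar to the query, best first.

    archive maps a photo identifier to its (hypothetical) face feature vector.
    """
    scored = ((cosine_similarity(query, vec), photo_id)
              for photo_id, vec in archive.items())
    return heapq.nlargest(k, scored)

# Toy 3-dimensional vectors; a real system would use far more dimensions.
archive = {
    "photo_a": [1.0, 0.0, 0.0],
    "photo_b": [0.9, 0.1, 0.0],
    "photo_c": [0.0, 1.0, 0.0],
}
query = [1.0, 0.05, 0.0]

results = top_matches(query, archive, k=2)
for score, photo_id in results:
    print(f"{photo_id}: similarity {score:.3f}")
```

Returning scores alongside identifiers mirrors the behaviour described in the article: the system presents ranked candidates and leaves the judgment to the user.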

One drawback is that if you upload a photo of a present-day person, the search results can be wildly off.

In short, the system still needs improvement.

In addition, Patt works with other teams of software engineers and data scientists at Google to improve the scope and accuracy of searches.

Because facial recognition systems carry a risk of privacy leakage, Patt said, "We do not make any evaluation of identity. We are only responsible for presenting the results using similarity scores and letting users make their own judgments."

Development of AI facial recognition technology

So how does this technology recognize faces?

Face recognition technology first had to answer a more basic question: "how to determine whether the detected image is a face."

In 2001, computer vision researchers Paul Viola and Michael Jones proposed a framework to detect faces in real time with high accuracy.

The framework trains a model to understand "what is a face and what is not a face".

After training, the model extracts specific features and stores them in a file, so that features in new images can be compared with the stored features at each of several stages.

To help ensure accuracy, the algorithm needs to be trained on "a large data set containing hundreds of thousands of positive and negative images," which improves the algorithm's ability to determine whether a face is in an image and where it is.

If the image under study passes each stage of feature comparison, a face has been detected and the operation can proceed.
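The staged comparison described above can be illustrated with a toy cascade. This is a simplified sketch, not the actual Viola-Jones implementation: the real framework learns its features and thresholds from training data, whereas the two features, the thresholds in `STAGES`, and the 4x4 windows below are hand-picked, hypothetical values chosen only to show early rejection.

```python
def integral_image(img):
    """Summed-area table with an extra zero row and column."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii

def rect_sum(ii, top, left, height, width):
    """Sum of the pixels in a rectangle, using four table lookups."""
    bottom, right = top + height, left + width
    return ii[bottom][right] - ii[top][right] - ii[bottom][left] + ii[top][left]

def eyes_darker_than_cheeks(ii):
    """Lower half minus upper half of a 4x4 window."""
    return rect_sum(ii, 2, 0, 2, 4) - rect_sum(ii, 0, 0, 2, 4)

def bright_nose_bridge(ii):
    """Centre columns minus outer columns in the upper half of a 4x4 window."""
    centre = rect_sum(ii, 0, 1, 2, 2)
    sides = rect_sum(ii, 0, 0, 2, 1) + rect_sum(ii, 0, 3, 2, 1)
    return centre - sides

# Hypothetical stages: (feature function, minimum value needed to pass).
STAGES = [(eyes_darker_than_cheeks, 500), (bright_nose_bridge, 100)]

def run_cascade(window, stages=STAGES):
    """Return (is_face, number_of_stages_passed); stop at the first failure."""
    ii = integral_image(window)
    for passed, (feature, threshold) in enumerate(stages):
        if feature(ii) < threshold:
            return False, passed
    return True, len(stages)

face_like = [[10, 90, 90, 10], [10, 90, 90, 10],
             [200, 200, 200, 200], [200, 200, 200, 200]]
flat = [[100] * 4 for _ in range(4)]
no_bridge = [[50] * 4, [50] * 4, [200] * 4, [200] * 4]

for name, window in [("face_like", face_like), ("flat", flat), ("no_bridge", no_bridge)]:
    print(name, run_cascade(window))
```

Most windows in a real image are rejected by the first cheap stage, which is what lets a cascade of this kind run in real time.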

Although the Viola-Jones framework is highly accurate for face detection in real-time applications, it has certain limitations.

For example, the framework may not work if a face is covered by a mask, or if a face is not oriented correctly.

To address the shortcomings of the Viola-Jones framework and improve face detection, researchers developed additional algorithms, such as the region-based convolutional neural network (R-CNN) and the single shot detector (SSD).

A convolutional neural network (CNN) is an artificial neural network used for image recognition and processing, specifically designed to process pixel data.
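To make "processing pixel data" concrete, here is a minimal sketch of the sliding-window operation at the core of a CNN layer, followed by a ReLU activation. The kernel is written by hand for illustration; in a trained network the kernel values are learned, and the names `convolve2d`, `relu`, and `vertical_edge` are hypothetical.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for y in range(out_h):
        row = []
        for x in range(out_w):
            acc = 0
            for i in range(kh):
                for j in range(kw):
                    acc += image[y + i][x + j] * kernel[i][j]
            row.append(acc)
        output.append(row)
    return output

def relu(feature_map):
    """Replace negative responses with zero."""
    return [[max(0, v) for v in row] for row in feature_map]

# A tiny grayscale image: dark on the left, bright on the right.
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]
# Hand-written kernel that responds to dark-to-bright vertical edges.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

feature_map = relu(convolve2d(image, vertical_edge))
print(feature_map)  # [[0, 18, 0], [0, 18, 0]]
```

The response is non-zero only where the window straddles the edge, which is how stacked layers of such filters come to pick out progressively more complex visual patterns.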

R-CNN generates region proposals on a CNN framework to localize and classify objects in images.

While methods based on region proposals (such as R-CNN) require two stages - one to generate region proposals and another to classify each proposed region - SSD needs only a single pass to detect multiple objects in an image. Therefore, SSD is significantly faster than R-CNN.

In recent years, face recognition driven by deep learning models has significantly outperformed traditional computer vision methods.

Early face recognition mostly used traditional machine learning algorithms, and research focused on how to extract more discriminative features and how to align faces more effectively.

As research deepened, the performance gains of traditional machine learning algorithms for face recognition on two-dimensional images gradually hit a bottleneck.

Researchers began to study face recognition in video, or to combine it with three-dimensional model methods to further improve performance, while a few scholars began to study three-dimensional face recognition itself.

On the well-known LFW (Labeled Faces in the Wild) public dataset, deep learning algorithms broke through the bottleneck of traditional machine learning algorithms for face recognition on two-dimensional images, raising the recognition rate above 97% for the first time.

The approach is to use the "high-dimensional model established by the CNN network" to extract effective identification features directly from the input face image, and then to calculate the cosine distance between feature vectors for face recognition.
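The cosine-distance comparison mentioned above can be sketched as follows. The feature vectors are made-up stand-ins for CNN outputs, and the decision threshold of 0.3 in `same_person` is a hypothetical value; real systems tune such a threshold on labelled data.

```python
import math

def cosine_distance(a, b):
    """1 minus the cosine similarity of two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine distance is undefined for a zero vector")
    return 1.0 - dot / (norm_a * norm_b)

def same_person(features_1, features_2, threshold=0.3):
    """Treat two faces as a match when their distance is at most the threshold.

    The default threshold is an assumed value for illustration only.
    """
    return cosine_distance(features_1, features_2) <= threshold

# Made-up feature vectors standing in for CNN outputs.
face_a = [0.6, 0.8, 0.0]
face_b = [0.8, 0.6, 0.0]
face_c = [0.0, 0.0, 1.0]

print(round(cosine_distance(face_a, face_b), 2), same_person(face_a, face_b))
print(round(cosine_distance(face_a, face_c), 2), same_person(face_a, face_c))
```

Because cosine distance depends only on the angle between vectors, it is insensitive to their overall magnitude, which is one reason it is a common choice for comparing learned features.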

Face detection has evolved from basic computer vision techniques, through advances in machine learning (ML), to increasingly complex artificial neural networks (ANN) and related techniques, and performance has improved continuously along the way.

Now it plays an important role as the first step in many critical applications - including facial tracking, facial analysis and facial recognition.

China also suffered the trauma of World War II, and many of the people in photos from that time can no longer be identified.

Many of those traumatized by the war have relatives and friends whose whereabouts remain unknown.

The development of this technology may help people recover that long-buried history and bring some comfort to those who lived through it.

Reference: https://www.timesofisrael.com/google-engineer-identifies-anonymous-faces-in-wwii-photos-with-ai-facial-recognition/
