
Get rid of the 'clickbait', another open source masterpiece from the Tsinghua team!

- WBOY (reposted)

- 2023-04-08 12:51:18

As someone who struggles with naming things, my biggest frustration when writing essays in high school was that I could write a good piece but had no idea what to title it. After I started running a public account, I lost a lot of hair every time I had to come up with a title...

Recently, I finally found a savior for the naming-challenged on GitHub: "Outsmart Title", a fun large-model application launched by Tsinghua University and the OpenBMB open source community. Enter your text and it generates a catchy title with one click!

It works right out of the box. After trying it, all I can say is: it is really good!

Online experience: https://live.openbmb.org/ant

GitHub: https://github.com/OpenBMB/CPM-Live

Before talking about this headline-generating tool, we first have to talk about the model behind it: the large model CPM-Ant.

CPM-Ant is China's first live-trained model with tens of billions of parameters. Training took 68 days and was completed on August 5, 2022, and the model has been officially released by OpenBMB!

- Five outstanding features

- Four innovation breakthroughs

- A low-cost, environmentally friendly training process

- Most importantly: completely open source!

Now, let's take a look at the CPM-Ant release report!

Model Overview

CPM-Ant is an open source Chinese pre-trained language model with 10B parameters. It is also the first milestone in the CPM-Live live training process.

The entire training process is low-cost and environmentally friendly, without demanding hardware or high running costs. Adapted with the delta tuning method, CPM-Ant achieves excellent results on the CUGE benchmark. The related code, log files, and model parameters are fully open source under an open license. In addition to the full model, OpenBMB also provides several compressed versions to suit different hardware configurations.

Five outstanding features of CPM-Ant:

(1) Computational efficiency

With the BMTrain[1] toolkit, distributed computing resources can be fully utilized to train large models efficiently. Training CPM-Ant took 68 days and cost 430,000 yuan, about 1/20 of the roughly US$1.3 million that training Google's T5-11B is estimated to have cost. Training CPM-Ant emitted about 4,872 kg CO₂e, versus 46.7 t CO₂e for T5-11B[9], so CPM-Ant's emissions are about 1/10 of T5-11B's.
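The two ratios quoted above can be checked with a few lines of arithmetic. This is only a rough sketch: the article does not state the exchange rate it used, so `ASSUMED_CNY_PER_USD` is an assumption introduced here, and the function names are illustrative.

```python
# Figures quoted in the article.
CPM_ANT_COST_CNY = 430_000        # training cost of CPM-Ant, in yuan
T5_11B_COST_USD = 1_300_000       # estimated training cost of T5-11B, in US dollars
CPM_ANT_EMISSIONS_KG = 4_872      # kg CO2e
T5_11B_EMISSIONS_KG = 46_700      # 46.7 t CO2e expressed in kg

# Assumption: the article gives no exchange rate; this value is illustrative only.
ASSUMED_CNY_PER_USD = 6.7


def cost_ratio(cny_per_usd: float = ASSUMED_CNY_PER_USD) -> float:
    """CPM-Ant training cost as a fraction of the T5-11B estimate."""
    cpm_ant_cost_usd = CPM_ANT_COST_CNY / cny_per_usd
    return cpm_ant_cost_usd / T5_11B_COST_USD


def emissions_ratio() -> float:
    """CPM-Ant training emissions as a fraction of T5-11B emissions."""
    return CPM_ANT_EMISSIONS_KG / T5_11B_EMISSIONS_KG


if __name__ == "__main__":
    print(f"cost ratio:      {cost_ratio():.3f}")
    print(f"emissions ratio: {emissions_ratio():.3f}")
```

Under the assumed exchange rate, the cost ratio comes out close to 1/20 and the emissions ratio close to 1/10, in line with the figures above.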

(2) Excellent performance

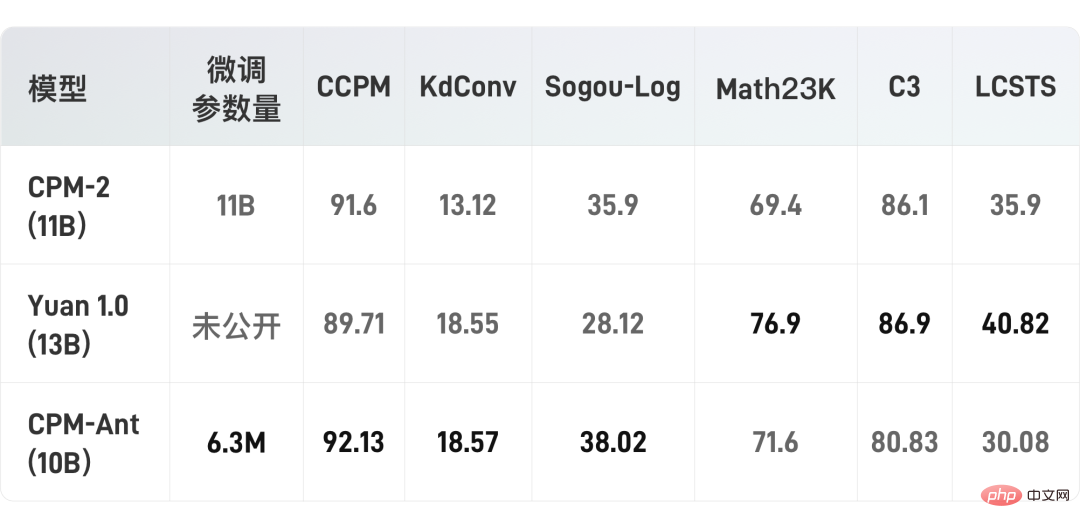

With the OpenDelta[3] tool, it is easy to adapt CPM-Ant to downstream tasks through delta tuning (parameter-efficient fine-tuning). Experiments show that CPM-Ant achieves the best results on 3 of 6 CUGE tasks while fine-tuning only 6.3M parameters, surpassing other models that were fully fine-tuned. For example, the number of parameters fine-tuned for CPM-Ant is only 0.06% of that for CPM2 (which fine-tunes 11B parameters).

(3) Deployment economy

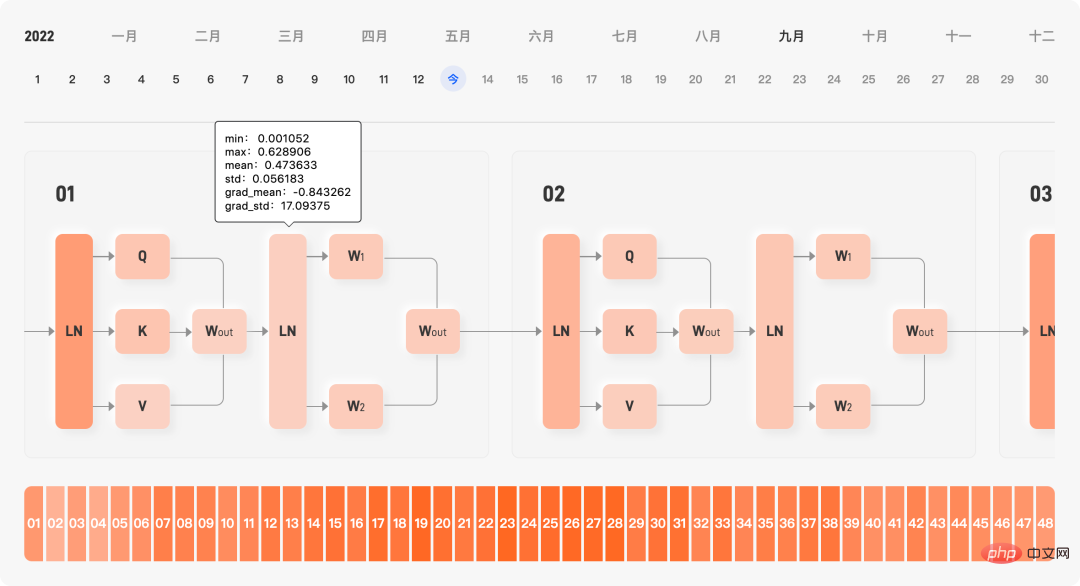

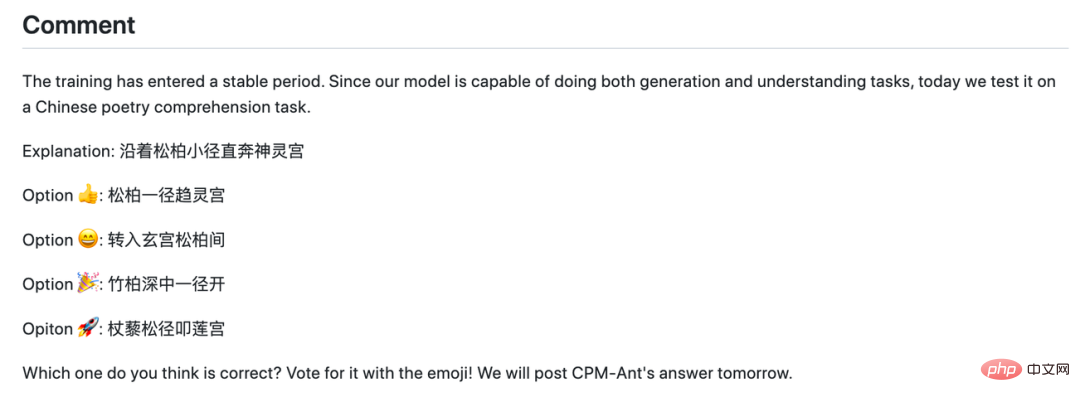

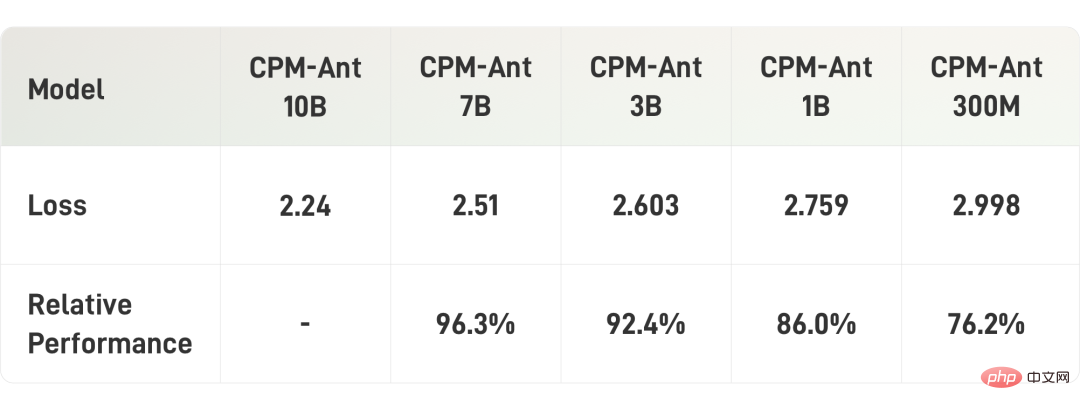

With the BMCook[7] and BMInf[4] toolkits, CPM-Ant can be run with limited computing resources. BMInf makes it possible to run large-model inference on a single GPU, even a consumer-grade card such as the GTX 1060, instead of a computing cluster. To make deployment more economical still, OpenBMB uses BMCook to compress the original 10B model into several smaller versions (7B, 3B, 1B, and 300M) that suit different low-resource scenarios.

(4) Easy to use

Both the original 10B model and the compressed versions can be loaded and run with a few lines of code. OpenBMB will also add CPM-Ant to ModelCenter[8], making further development on the model easier.

(5) Open Democracy

The training process of CPM-Ant is completely open. OpenBMB publishes all code, log files, and model checkpoints as open access. CPM-Ant also adopts an open license that allows commercial use.

For companies and research institutions capable of training large models, the CPM-Ant training process provides a complete, practical record of training a large Chinese model.

OpenBMB has released the model design, training scheme, data requirements, and implementation code of the CPM-Live series. Building on the CPM-Live model architecture, teams can quickly design and implement a large-model training plan and organize the relevant business data to complete model pre-research and data preparation.

The official website records all training dynamics, including the loss function, learning rate, data learned so far, throughput, gradient magnitude, cost curve, and the mean and standard deviation of the model's internal parameters, all displayed in real time. With these training dynamics, users can quickly diagnose whether something is going wrong during training.
Real-time display of the model's internal parameters during training

In addition, OpenBMB's developers update a training log summary every day. The summary includes the loss value, gradient value, and overall progress. It also records problems and bugs encountered during training, so that users can learn in advance about the pitfalls they may run into when training a model. On days when training is uneventful, the developers also share famous quotes, introduce recent papers, and even run guessing games.

A guessing game in the log

In addition, OpenBMB also provides cost-effective training solutions. For enterprises that genuinely need to train large models, training acceleration techniques have brought the cost down to an acceptable level. With the BMTrain[1] toolkit, the computational cost of training the 10B-scale CPM-Ant is only 430,000 yuan (calculated from public cloud prices; the actual cost would be lower), about 1/20 of the externally estimated US$1.3 million cost of the 11B T5 model!

How does CPM-Ant help us adapt to downstream tasks?

For large-model researchers, OpenBMB provides an evaluation solution based on parameter-efficient fine-tuning, which makes it quick to adapt the model to downstream tasks and evaluate its performance.

Parameter-efficient fine-tuning, that is, delta tuning, was used to evaluate CPM-Ant on six downstream tasks. The experiments used LoRA [2], which inserts two trainable low-rank matrices into each attention layer and freezes all parameters of the original model. With this approach, only 6.3M parameters were fine-tuned per task, just 0.067% of the total parameters.

With the help of OpenDelta[3], OpenBMB ran all experiments without modifying the code of the original model.
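The LoRA idea described above (a frozen weight plus a trainable low-rank update) can be sketched in plain Python. This is a minimal illustration and not OpenDelta's API: `LoRALinear` and `lora_param_count` are hypothetical names, and the dimensions in the usage note (hidden size 4096, rank 16, 48 layers, one matrix pair per attention layer) are assumptions chosen for illustration, not values stated in the article.

```python
import random


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


class LoRALinear:
    """Frozen weight W (d_out x d_in) plus a trainable low-rank update B @ A."""

    def __init__(self, weight, rank, scale=1.0, seed=0):
        rng = random.Random(seed)
        d_out, d_in = len(weight), len(weight[0])
        self.weight = weight  # frozen: never updated during fine-tuning
        # A gets a small random init and B starts at zero, so the adapted
        # layer initially computes exactly the same output as the base layer.
        self.a = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.b = [[0.0] * rank for _ in range(d_out)]
        self.scale = scale

    def forward(self, x):
        base = matvec(self.weight, x)
        delta = matvec(self.b, matvec(self.a, x))  # B @ (A @ x)
        return [y + self.scale * d for y, d in zip(base, delta)]

    def trainable_params(self):
        """Only A and B are trained; W is excluded."""
        return len(self.a) * len(self.a[0]) + len(self.b) * len(self.b[0])


def lora_param_count(d_model, rank, n_layers, pairs_per_layer=1):
    """Trainable parameters when each layer gets `pairs_per_layer` (A, B) pairs
    on square d_model x d_model projections."""
    return 2 * d_model * rank * pairs_per_layer * n_layers


if __name__ == "__main__":
    layer = LoRALinear([[1.0, 0.0], [0.0, 1.0]], rank=1)
    print(layer.forward([2.0, 3.0]), layer.trainable_params())
    # Assumed dimensions, for illustration only.
    print(lora_param_count(d_model=4096, rank=16, n_layers=48))
```

With the assumed dimensions, `lora_param_count` gives 6,291,456 trainable parameters, the same order as the 6.3M quoted above; the real configuration may differ.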
Note that no data augmentation was used when evaluating CPM-Ant on downstream tasks. The experimental results are shown in the following table:

With only a small number of fine-tuned parameters, the OpenBMB model outperforms CPM-2 and Yuan 1.0 (Source 1.0) on three datasets. Some tasks (such as LCSTS) may be hard to learn when very few parameters are fine-tuned. The training of CPM-Live will continue, and performance on each task will be polished further.

Interested readers can visit the GitHub link below to try CPM-Ant and OpenDelta and further explore CPM-Ant's capabilities on other tasks!

https://github.com/OpenBMB/CPM-Live

The performance of large models is impressive, but high hardware requirements and running costs have long troubled many users. For users of large models, OpenBMB provides a series of hardware-friendly ways to run different model versions in different hardware environments.

With the BMInf[4] toolkit, CPM-Ant can run in a low-resource environment such as a single GTX 1060!

In addition, OpenBMB also compresses CPM-Ant. The compressed models are CPM-Ant-7B/3B/1B/0.3B, and each size corresponds to a classic size of existing open source pre-trained language models.

Because users may build further on the released checkpoints, OpenBMB mainly uses task-agnostic structured pruning to compress CPM-Ant. Pruning is progressive: from 10B to 7B, from 7B to 3B, from 3B to 1B, and finally from 1B to 0.3B.

In each pruning step, OpenBMB trains a dynamic, learnable mask matrix and then uses it to prune the corresponding parameters. Parameters are pruned according to a threshold on the mask matrix, and that threshold is determined by the target sparsity.
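The thresholding step described above can be sketched as follows. This is a simplified illustration and not BMCook's implementation: the mask scores are taken as given rather than learned, the pruned units are whole rows (standing in for structured units such as attention heads or neurons), and all function names are hypothetical. The stage sparsities treat the quoted model sizes as exact parameter counts, which is an assumption.

```python
def threshold_for_sparsity(scores, sparsity):
    """Pick the mask threshold so that about `sparsity` of the units fall at or below it."""
    n_prune = int(len(scores) * sparsity)
    if n_prune == 0:
        return float("-inf")  # nothing is pruned
    return sorted(scores)[n_prune - 1]


def prune_units(units, scores, sparsity):
    """Structured pruning: drop every unit whose mask score is at or below the threshold.

    Note: tied scores at the threshold are all dropped, so slightly more than
    the target fraction may be pruned.
    """
    threshold = threshold_for_sparsity(scores, sparsity)
    keep = [score > threshold for score in scores]
    kept_units = [unit for unit, k in zip(units, keep) if k]
    return kept_units, keep


def stage_sparsities(sizes):
    """Fraction of parameters removed at each step of a progressive schedule."""
    return [1 - after / before for before, after in zip(sizes, sizes[1:])]


if __name__ == "__main__":
    rows = [[1, 1], [2, 2], [3, 3], [4, 4]]   # four prunable units
    mask_scores = [0.9, 0.1, 0.5, 0.3]        # stand-in for learned mask values
    kept, keep_flags = prune_units(rows, mask_scores, sparsity=0.5)
    print(kept, keep_flags)
    # Progressive schedule from the article, sizes in billions of parameters.
    print(stage_sparsities([10, 7, 3, 1, 0.3]))
```

Pruning in stages means each step removes a moderate fraction of what remains (roughly 30% to 70% under the assumption above) rather than cutting from 10B to 0.3B in one step.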
For more compression details, please refer to the technical blog [5]. The following table shows the results of model compression:

Now that the hard-core content is over, how can large models help us "choose titles"?

Based on CPM-Ant, all large-model developers and enthusiasts can build fun text applications. To further verify the model's effectiveness and provide an example, OpenBMB fine-tuned a hot-title generator based on CPM-Ant to demonstrate the model's capabilities.

Just paste your text into the text box, click generate, and you get a catchy title from the large model!

The title of the first article in the CPM-Ant release report was produced by this generator.

This demo will be continuously polished, and more features will be added to improve the user experience. Interested users can also use CPM-Ant to build their own demo applications. If you have application ideas, need technical support, or run into problems using the demo, you can start a discussion on the CPM-Live forum [6] at any time!

The release of CPM-Ant is the first milestone of CPM-Live, but it is only the first phase of training. OpenBMB will continue with further training phases. A quick spoiler: new features such as multi-language support and structured input and output will be added in the next training phase. Stay tuned!

Four innovation breakthroughs:

- A complete large model training practice

- An efficient fine-tuning solution that has repeatedly achieved SOTA

- A series of hardware-friendly inference methods

- An unexpectedly interesting large model application

Portal | Project links

Project GitHub address: https://github.com/OpenBMB/CPM-Live

Demo experience address (PC access only): https://live.openbmb.org/ant
