
Why TensorFlow for Python is dying a slow death

- WBOY (forwarded)

- 2023-04-08 12:41:06

There has always been a "sectarian dispute" in the field of science and technology. Whether it's a debate about the pros and cons of different operating systems, cloud providers, or deep learning frameworks, all it takes is a few beers for the facts to fall by the wayside and for people to start fighting over their preferred technologies as if it were a holy war.

The debate about IDEs seems endless: some people prefer Visual Studio, some prefer IntelliJ, and still others prefer plain old editors like Vim. It is often said that a person's choice of text editor reflects their personality, which may sound a bit absurd.

With the rise of AI, a similar "war" seems to have broken out between the camps of two deep learning frameworks, PyTorch and TensorFlow. Both camps have plenty of supporters, and each has good reasons for believing its preferred framework is the best.

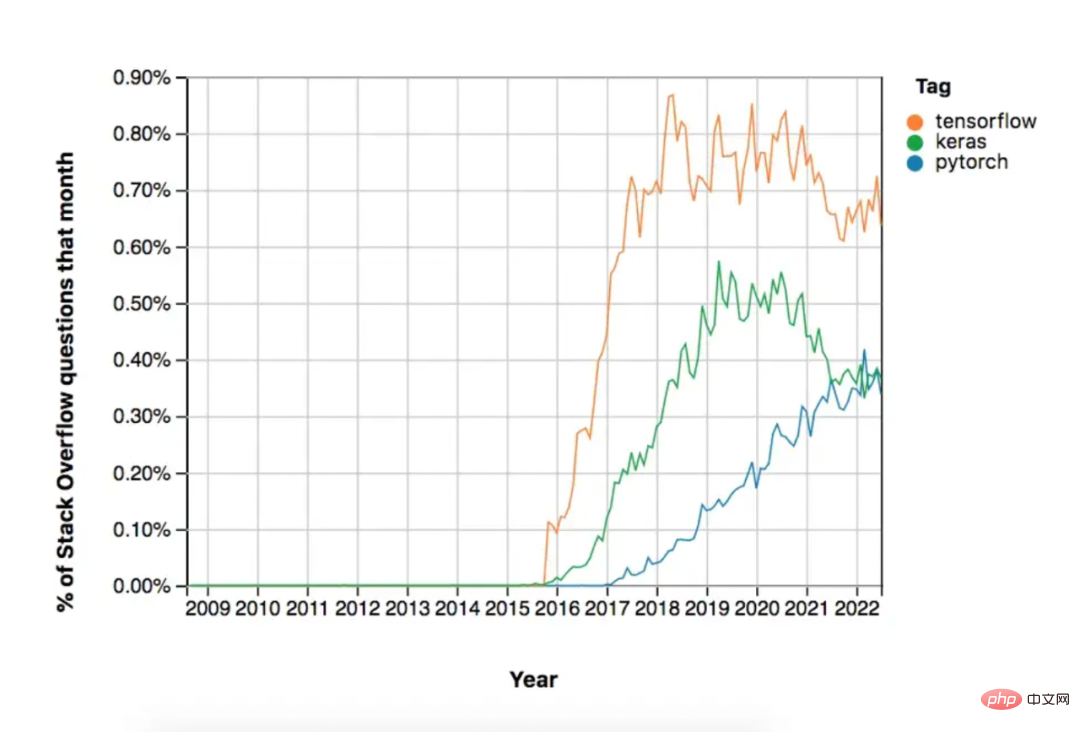

That said, the data points to an all-too-obvious fact: TensorFlow is currently the most widely used deep learning framework, receiving almost twice as many questions on Stack Overflow every month as PyTorch.

On the other hand, PyTorch has been growing strongly, while TensorFlow's user base has stopped growing. Up to the time of writing, PyTorch had been steadily gaining traction.

For completeness, the chart below also includes Keras, which was released around the same time as TensorFlow. Keras has clearly underperformed in recent years, simply because it is a bit too simple and too slow for the needs of most deep learning practitioners.

PyTorch’s popularity is still growing, while TensorFlow’s growth has stagnated

Chart from Stack Overflow Trends

TensorFlow's Stack Overflow traffic may not be declining quickly, but it is declining. There is therefore good reason to think that this downward trend will become even more pronounced in the coming years, especially in the Python space.

PyTorch is a more Pythonic framework

TensorFlow, developed by Google, was one of the first frameworks to show up at the deep learning party, in late 2015. However, like the first version of any software, it was quite cumbersome to use.

This is why Meta (then Facebook) began developing PyTorch: a framework with functionality similar to TensorFlow's but easier to use.

The TensorFlow team quickly took notice and adopted many of PyTorch's most popular features in the TensorFlow 2.0 major release.

A good rule of thumb is that anything you can do in PyTorch, you can also do in TensorFlow, but writing the code will take about twice as much effort. Even today, TensorFlow is not that intuitive and feels quite unpythonic.

PyTorch, by contrast, feels very natural to anyone who likes Python.

Many companies and academic institutions do not have the computing power needed to build large models. Yet when it comes to machine learning, scale is king: the bigger the model, the better the performance.

With the help of HuggingFace, engineers can take large, pretrained and fine-tuned models and incorporate them into their workflow pipelines with just a few lines of code. However, a surprising 85% of these models work only with PyTorch. Only about 8% of HuggingFace models are exclusive to TensorFlow. The rest are available for both frameworks.
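The percentages quoted above can be combined into the share of models that each framework can actually reach. The sketch below is a minimal illustration that uses only the article's figures (85% PyTorch-only, 8% TensorFlow-only) and assumes every remaining model works with both frameworks; the function name `framework_coverage` is hypothetical.

```python
# Shares of HuggingFace models by framework support, as quoted in the article.
# Assumption: every model falls into exactly one bucket:
# PyTorch-only, TensorFlow-only, or usable from both.
PYTORCH_ONLY = 85  # percent
TENSORFLOW_ONLY = 8  # percent


def framework_coverage(pytorch_only: int, tensorflow_only: int) -> dict:
    """Return the percentage of models usable from each framework (hypothetical helper)."""
    both = 100 - pytorch_only - tensorflow_only  # remainder works with either framework
    if both < 0:
        raise ValueError("exclusive shares cannot exceed 100%")
    return {
        "both": both,
        "usable_from_pytorch": pytorch_only + both,
        "usable_from_tensorflow": tensorflow_only + both,
    }


coverage = framework_coverage(PYTORCH_ONLY, TENSORFLOW_ONLY)
print(coverage)
```

Under that assumption, a TensorFlow user can reach roughly 15% of the models, against about 92% for a PyTorch user, which is the gap behind the advice in the next paragraph.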

This means that anyone planning to use large models today would do well to stay away from TensorFlow; otherwise, they will need to invest a lot of computing resources to train the models themselves.

PyTorch is more suitable for students and research use

PyTorch is more popular in academia, and the numbers show it: three-quarters of research papers use PyTorch. Even among researchers who started out with TensorFlow (remember, it arrived on the deep learning scene much earlier), most have now moved on to PyTorch.

This surprising trend looks set to continue, even though Google plays a significant role in AI research and has mainly used TensorFlow.

Logically enough, research influences teaching and shapes what students learn. A professor who publishes most of their papers using PyTorch will be more inclined to use it in lectures. Not only can they more easily teach and answer questions about PyTorch, they may also have a stronger belief in its success.

As a result, college students may well know PyTorch much better than TensorFlow. And since today's college students are tomorrow's workforce, it is easy to see where this trend is heading.

PyTorch’s ecosystem is developing faster

Ultimately, a software framework only matters if it is part of an ecosystem. Both PyTorch and TensorFlow have fairly mature ecosystems, including repositories of trained models besides HuggingFace, data management systems, failure-prevention mechanisms, and more.

It is worth mentioning that, so far, TensorFlow's ecosystem is still slightly more developed than PyTorch's. But keep in mind that PyTorch arrived later and has seen considerable user growth in just the past few years, so PyTorch's ecosystem may one day surpass TensorFlow's.

TensorFlow has better infrastructure deployment

Although TensorFlow code is cumbersome to write, once it is written it is much easier to deploy than PyTorch code. Tools like TensorFlow Serving and TensorFlow Lite can deploy models to the cloud, servers, mobile devices, and IoT devices in a snap.

PyTorch, on the other hand, has always been notoriously slow to release deployment tools. That said, it has recently been closing the gap with TensorFlow at an accelerating pace.

It is difficult to predict at this point, but PyTorch will very likely catch up with, or even surpass, TensorFlow's deployment infrastructure in the next few years.

TensorFlow code may still be around for a while because switching frameworks after deployment is expensive. However, it is conceivable that new deep learning applications will increasingly be written and deployed using PyTorch.

TensorFlow is not just about Python

TensorFlow is not going away. It's just not as hot as it used to be.

The core reason is that many people who use Python for machine learning are turning to PyTorch.

It should be noted that Python is not the only language for machine learning. It is simply the field's flagship language, which is the only reason TensorFlow's developers focused their support on Python.

Now TensorFlow can also be used with JavaScript, Java, and C. The community has also begun developing support for other languages such as Julia, Rust, Scala, and Haskell.

PyTorch, on the other hand, is extremely Python-centric, which is why it feels so Pythonic. Even though it has a C++ API, its support for other languages is not half as good as TensorFlow's.

It is conceivable that PyTorch will replace TensorFlow in Python. TensorFlow, on the other hand, will remain a major player in the deep learning space due to its excellent ecosystem, deployment capabilities, and support for other languages.

How much you like Python will determine whether you choose TensorFlow or PyTorch for your next project.

Original link: https://thenextweb.com/news/why-tensorflow-for-python-is-dying-a-slow-death
