Is GPT-5 going to be stopped? OpenAI issued a response in the early morning: To ensure the safety of AI, we do not 'cut corners'

Recent days have been a "troubled time" for OpenAI.

Because of the safety concerns that ChatGPT and GPT-4 may raise, OpenAI has faced criticism and pushback from outside:

- Musk and thousands of others jointly called for "all artificial intelligence laboratories to immediately suspend training large models more powerful than GPT-4 for at least 6 months";

- Italy banned ChatGPT, and OpenAI "must, through its representative in Europe, notify them within 20 days of the measures taken to implement this requirement";

- ChatGPT has banned a large number of accounts;

- Sales of ChatGPT Plus have been suspended;

- ......

These events show that although AI has proven able to bring many benefits to human society, technology is always a double-edged sword that can also bring real risks, and AI is no exception.

On April 6, OpenAI officially released a blog article titled "Our approach to AI safety", which discussed how to "safely build, deploy and use artificial intelligence systems."

OpenAI is committed to keeping powerful artificial intelligence safe and broadly beneficial. Our AI tools provide many benefits to people today.

Users from around the world tell us that ChatGPT helps increase their productivity, enhances their creativity, and provides a tailored learning experience.

We also recognize that, like any technology, these tools come with real risks, so we work hard to ensure safety is built into our systems at every level.

1. Build increasingly safe artificial intelligence systems

Before releasing any new system, we conduct rigorous testing, involve external experts for feedback, work to improve model behavior with techniques such as reinforcement learning from human feedback, and establish extensive safety and monitoring systems.

For example, after our latest model, GPT-4, completed training, we spent more than 6 months working across the organization to make it safer and more aligned before its public release.

We believe that powerful artificial intelligence systems should undergo rigorous safety evaluations. Regulation is needed to ensure this approach is adopted, and we are actively engaging with governments to explore the best form this regulation might take.

2. Learn from real-world use to improve safeguards

We strive to prevent foreseeable risks before deployment; however, what we can learn in the laboratory is limited. Despite extensive research and testing, we cannot predict all of the beneficial ways people will use our technology, or all the ways people will misuse it. That’s why we believe that learning from real-world use is a key component of creating and releasing increasingly safe AI systems over time.

We release new AI systems cautiously and gradually, with substantial safeguards in place, to a steadily broadening group of people, and we make continuous improvements based on what we learn.

We provide our most capable models through our own services and APIs so developers can use this technology directly in their applications. This allows us to monitor and take action on abuse and continually build mitigations for the real ways people abuse our systems, not just theories about what abuse might look like.

Real-world use has also led us to develop increasingly nuanced policies to prevent behaviors that pose real risks to people, while also allowing for many beneficial uses of our technology.

Crucially, we believe society must be given time to update and adjust to increasingly capable AI, and that everyone affected by this technology should have an important say in how AI develops further. Iterative deployment helps us bring various stakeholders into the conversation about adopting AI technologies more effectively than would be possible if they had not experienced these tools first-hand.

3. Protecting Children

A key aspect of safety is protecting children. We require that people using our AI tools be 18 or older, or 13 or older with parental approval, and we are working on verification options.

We do not allow our technology to be used to generate hateful, harassing, violent or adult content, among other categories. Our latest model, GPT-4, is 82% less likely to respond to requests for disallowed content than GPT-3.5, and we have built a robust system to monitor abuse. GPT-4 is now available to ChatGPT Plus users, and we hope to make it available to more people over time.

We put a lot of effort into minimizing the likelihood that our models will produce content that is harmful to children. For example, when a user attempts to upload child sexual abuse material to our image tools, we block the action and report it to the National Center for Missing and Exploited Children.

In addition to our default safety guardrails, we work with developers like the nonprofit Khan Academy, which built an AI-powered assistant that serves as both a virtual tutor for students and a classroom assistant for teachers, to customize safety mitigations for their use cases. We are also developing features that will allow developers to set more stringent standards for model output to better support developers and users who want this functionality.

4. Respect Privacy

Our large language models are trained on an extensive corpus of text, including publicly available content, licensed content, and content generated by human reviewers. We don’t use data to sell our services, to advertise, or to build profiles of people; we use data to make our models more helpful to people. ChatGPT, for example, improves through further training on the conversations people have with it.

While some of our training data includes personal information that is available on the public internet, we want our models to learn about the world, not about private individuals. Therefore, we work to remove personal information from training data sets where feasible, fine-tune our models to reject requests for private information, and respond to requests from individuals to have their personal information removed from our systems. These steps minimize the possibility that our models could produce content that includes private information.

5. Improve factual accuracy

Large language models predict and generate the next sequence of words based on patterns they have seen previously, including text input provided by the user. In some cases, the next most likely word may not be factually accurate.
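The point about the most likely next word can be illustrated with a deliberately tiny sketch. This is not how GPT-4 or any OpenAI model is implemented; it is a simple bigram counter over a hypothetical toy corpus, and the function names `train_bigram_model` and `predict_next` are made up for this illustration. It only shows that a predictor driven by frequency repeats whatever pattern it saw most often, whether or not that pattern is true.

```python
from collections import Counter, defaultdict


def train_bigram_model(corpus):
    """Count, for each word, which words follow it in the corpus."""
    follow_counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follow_counts[current][nxt] += 1
    return follow_counts


def predict_next(follow_counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = follow_counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical toy corpus: a common misconception appears more often than the fact.
toy_corpus = [
    "the capital of australia is sydney",
    "the capital of australia is sydney",
    "the capital of australia is canberra",
]

model = train_bigram_model(toy_corpus)
# The most frequent continuation wins, even though it is factually wrong here.
print(predict_next(model, "is"))
```

In this toy setting the predictor returns the more frequent but incorrect continuation, which is the failure mode the paragraph above describes.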

Improving factual accuracy is an important job for OpenAI and many other AI developers, and we are making progress by leveraging user feedback on ChatGPT outputs that were flagged as incorrect as a primary data source.

We recognize that there is much more work to be done to further reduce the likelihood of hallucinations and educate the public about the current limitations of these artificial intelligence tools.

6. Ongoing Research and Engagement

We believe that a practical way to address AI safety issues is to invest more time and resources in researching effective mitigation measures and alignment techniques, and in testing them against real-world abuse.

Importantly, we believe that improving the safety and capabilities of AI should go hand in hand. To date, our best safety work has come from working with our most capable models because they are better at following user instructions and are easier to guide or "coach."

We will be increasingly cautious as more capable models are created and deployed, and we will continue to strengthen safety precautions as our AI systems further develop.

While we waited more than 6 months to deploy GPT-4 in order to better understand its capabilities, benefits, and risks, it can sometimes take longer than that to improve the safety of AI systems. Therefore, policymakers and AI providers will need to ensure that the development and deployment of AI is effectively governed globally, and that no one “cuts corners” in order to get ahead as quickly as possible. This is a difficult challenge that requires technological and institutional innovation, but it is also a contribution we are eager to make.

Addressing safety issues also requires widespread debate, experimentation and engagement, including on the boundaries of AI system behavior. We have promoted, and will continue to promote, collaboration and open dialogue among stakeholders to create a safe AI ecosystem.

The above is the detailed content of Is GPT-5 going to be stopped? OpenAI issued a response in the early morning: To ensure the safety of AI, we do not 'cut corners'. For more information, please follow other related articles on the PHP Chinese website!

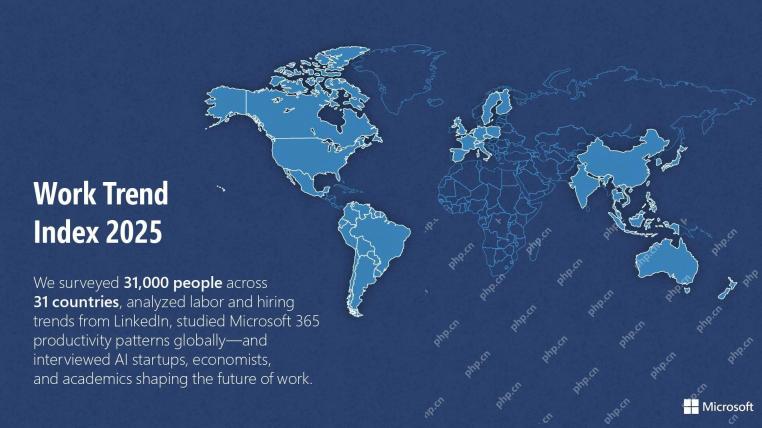

