
GPT-4 is too strong, even OpenAI doesn't understand it! How did intelligence suddenly 'emerge'?

- Reposted by PHPz

- 2023-03-31 22:39:27

How will unexplainable intelligence develop in the future?

Since the start of 2023, ChatGPT and GPT-4 have never been far from the trending lists. On the one hand, laypeople marvel at how AI suddenly became so powerful and wonder whether it will upend the lives of "workers"; on the other hand, even insiders don't understand why remarkable intelligence suddenly "emerges" once a model's scale passes a certain threshold.

The emergence of intelligence is a good thing, but the uncontrollable, unpredictable, and unexplainable behavior of these models has left the entire academic community puzzled and searching for explanations.

Very large models that suddenly got stronger

A simple question first: what movie do the following emoji represent?

The simplest language models can often only continue with "The movie is a movie about a man who is a man who is a man." A model of medium complexity comes closer, answering "The Emoji Movie." The most complex language model, however, gets it in one: Finding Nemo.

In fact, this prompt is one of 204 tasks designed to test the capabilities of various large language models.

Ethan Dyer, a computer scientist at Google Research who helped organize the test, said that although he was prepared for surprises when building the BIG-bench dataset, he was still very surprised when he actually saw what these models could do.

What is surprising is that these models operate on a single directive: accept a string of text as input and, over and over again, predict what comes next based purely on statistics.
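A toy sketch can show what "predict what comes next purely based on statistics" means in practice. It uses a bigram model that counts which word follows which, then greedily extends a prompt one word at a time. Real LLMs use neural networks rather than raw word counts, and the function names here are hypothetical. On a tiny, repetitive corpus, it falls into the same kind of loop as the simplest model in the emoji example.

```python
from collections import Counter, defaultdict


def train_bigram(text: str) -> dict[str, Counter]:
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    counts: dict[str, Counter] = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def generate(counts: dict[str, Counter], start: str, max_words: int = 10) -> list[str]:
    """Greedily append the most frequent follower of the last word, over and over."""
    out = [start]
    for _ in range(max_words):
        followers = counts.get(out[-1])
        if not followers:
            break  # no statistics for this word, so stop
        out.append(followers.most_common(1)[0][0])
    return out


counts = train_bigram("a man who is a man who is a man")
print(" ".join(generate(counts, "a", max_words=6)))  # a man who is a man who
```

Greedy decoding always picks the single most frequent continuation, which is exactly why such a small model cycles instead of producing anything new.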

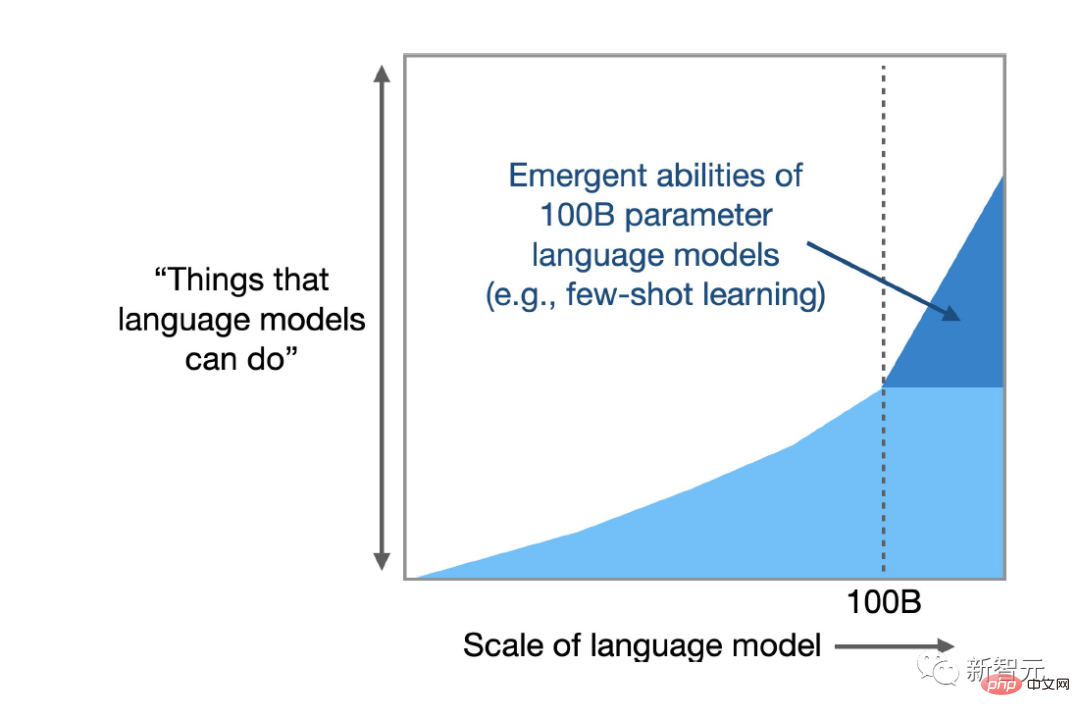

Computer scientists had expected that scaling up would improve performance on known tasks, but they did not anticipate that models would suddenly be able to handle so many new, unpredictable tasks.

A recent survey that Dyer participated in found that LLMs can produce hundreds of "emergent" abilities, that is, tasks that large models can complete but small models cannot. Many of these tasks seem to have little to do with analyzing text: they range from multiplication to generating executable computer code to decoding movies from emoji.

New analysis shows that for certain tasks and certain models, there is a complexity threshold above which the model’s capabilities increase by leaps and bounds.

The researchers also pointed to a downside of emergence: as complexity increases, some models display new biases and inaccuracies in their responses.

Rishi Bommasani, a computer scientist at Stanford University, said that no literature he was aware of had ever discussed that language models could do these things. Last year, Bommasani helped compile a list of dozens of emergent behaviors, including several identified in Dyer's project, and the list keeps growing.

Paper link: https://openreview.net/pdf?id=yzkSU5zdwD


Researchers are now racing not only to identify more emergent abilities but also to figure out why and how they happen, in essence trying to predict unpredictability.

Understanding emergence could reveal answers to deep questions about artificial intelligence and machine learning in general, such as whether complex models are truly doing something new or are just getting very good at statistics. It could also help researchers harness potential benefits and curb emergent risks.

Deep Ganguli, a computer scientist at the AI startup Anthropic, said that we don't know how to tell in which kinds of applications a capacity for harm will arise, whether smoothly or unpredictably.

The Emergence of Emergence

Biologists, physicists, ecologists, and other scientists use the term "emergence" to describe the self-organizing, collective behaviors that appear when a large collection of things acts as one.

For example, combinations of lifeless atoms give rise to living cells; water molecules create waves; murmurations of starlings sweep across the sky in changing but recognizable patterns; cells make muscles move and hearts beat.

Crucially, emergent abilities show up in systems that involve many individual parts. But researchers have only recently been able to document these abilities in LLMs, perhaps because the models have only now grown large enough.

Language models have been around for decades, but until about five years ago the most powerful ones were based on recurrent neural networks (RNNs). These models are trained by taking a string of text as input and predicting the next word. They are called recurrent because the model learns from its own output: its predictions are fed back into the network to improve future performance.
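Here is a minimal sketch of the recurrence idea. In the common textbook formulation, what is fed back at each step is the network's hidden state, which summarizes everything read so far. This one-unit network and its weights (`w_x`, `w_h`, `b`) are hypothetical and chosen only for illustration. Note that each step must wait for the previous one.

```python
import math


def rnn_forward(inputs: list[float], w_x: float = 0.5, w_h: float = 0.8, b: float = 0.0) -> list[float]:
    """Run a one-unit recurrent network over a sequence, returning every hidden state."""
    h = 0.0  # hidden state before the first input
    states = []
    for x in inputs:
        # The previous hidden state h feeds back into this step: that loop is the recurrence.
        # Step t cannot start until step t-1 has finished, so the sequence is processed serially.
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states


print(rnn_forward([1.0, 0.0]))
print(rnn_forward([0.0, 1.0]))  # same inputs, different order, different final state
```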

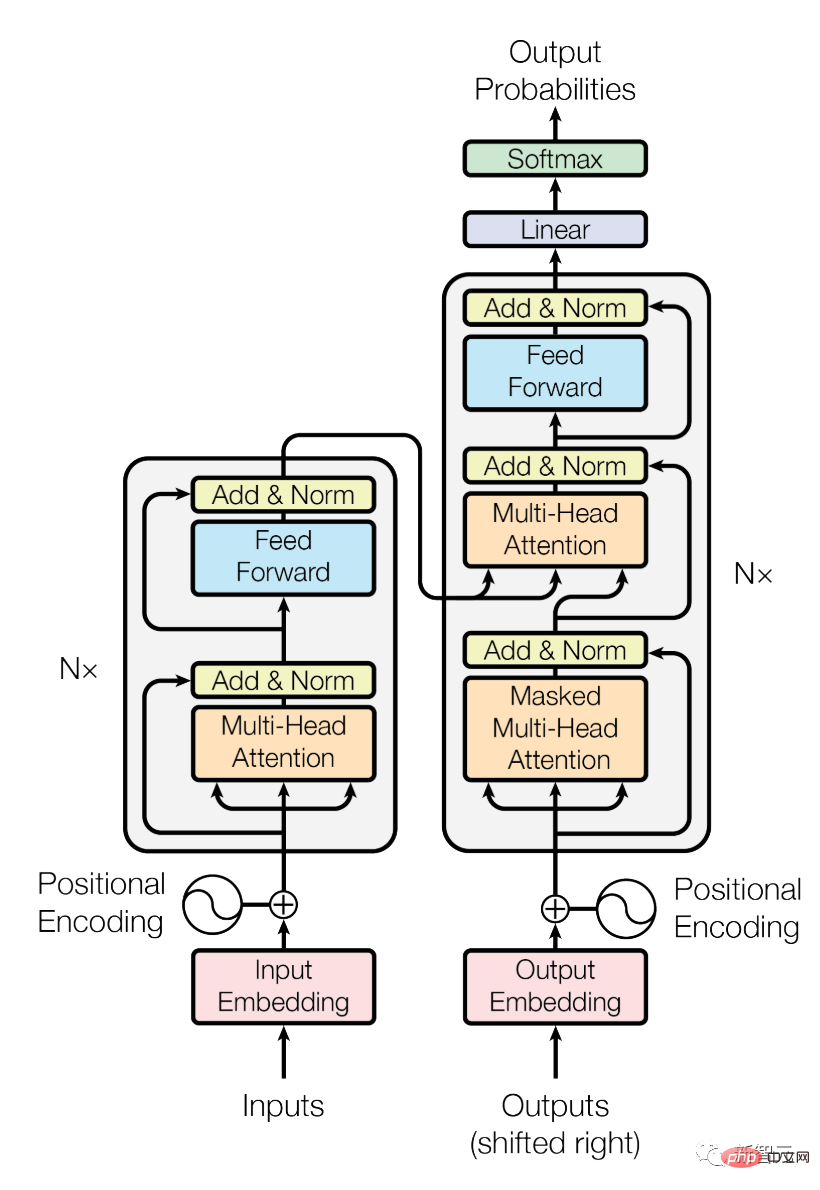

In 2017, researchers at Google Brain introduced a new architecture called the Transformer. Whereas a recurrent network analyzes a sentence word by word, a Transformer processes all the words at once, which means it can handle large amounts of text in parallel.
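"Processing all words at once" can be illustrated with a minimal sketch of scaled dot-product self-attention, the core operation of the Transformer. This is a simplification, assuming no learned query/key/value projections, a single attention head, and no positional information, all of which real Transformers include. The toy embeddings are hypothetical.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors: list[list[float]]) -> list[list[float]]:
    """Simplified scaled dot-product self-attention over a whole sequence at once.

    Queries, keys and values are the input vectors themselves; real Transformers
    first apply learned projections and use several attention heads.
    """
    d = len(vectors[0])

    def attend(query: list[float]) -> list[float]:
        # Score this position against every position in the sequence.
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in vectors]
        weights = softmax(scores)
        # The output is a weighted average of all the value vectors.
        return [sum(w * v[j] for w, v in zip(weights, vectors)) for j in range(d)]

    # No position's output depends on another position's output, so these
    # calls are independent and could run in parallel (e.g. as one matrix product).
    return [attend(q) for q in vectors]


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # toy 2-d embeddings (hypothetical)
for row in self_attention(tokens):
    print([round(x, 3) for x in row])
```

Unlike the recurrent loop, nothing here carries state from one position to the next, which is what makes the computation parallelizable.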

Transformers made it possible to rapidly scale up the complexity of language models by increasing the number of parameters, among other factors. Parameters can be thought of as connections between words, and models improve their predictions by adjusting the weights of these connections during training.

The more parameters a model has, the more connections it can make, and the better it can mimic human language.
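A toy illustration of "improving predictions by adjusting the weights of connections during training" follows. It is a sketch, not how Transformers are actually trained. Each weight `W[prev][next]` directly links two words, and gradient descent on the cross-entropy loss nudges those weights so that observed next words become more probable. The function names, learning rate, and tiny corpus are all hypothetical.

```python
import math


def train_next_word(pairs: list[tuple[str, str]], vocab: list[str], lr: float = 0.1, epochs: int = 200):
    """Learn weights W[prev][next] so that softmax(W[prev]) predicts the next word.

    Each weight is a direct "connection" from one word to another; training
    nudges these weights to make the observed next words more probable.
    """
    idx = {w: i for i, w in enumerate(vocab)}
    n = len(vocab)
    W = [[0.0] * n for _ in range(n)]  # every connection starts at zero
    for _ in range(epochs):
        for prev, nxt in pairs:
            row = W[idx[prev]]
            m = max(row)
            exps = [math.exp(x - m) for x in row]
            total = sum(exps)
            probs = [e / total for e in exps]
            # Gradient of the cross-entropy loss w.r.t. each weight is (prob - target).
            for j in range(n):
                target = 1.0 if j == idx[nxt] else 0.0
                row[j] -= lr * (probs[j] - target)
    return W, idx


def predict(W, idx, vocab: list[str], prev: str) -> str:
    """Return the word with the strongest connection from `prev`."""
    row = W[idx[prev]]
    return vocab[max(range(len(row)), key=row.__getitem__)]


vocab = ["the", "cat", "dog", "sat"]
pairs = [("the", "dog"), ("the", "cat"), ("the", "cat"), ("cat", "sat")]
W, idx = train_next_word(pairs, vocab)
print(predict(W, idx, vocab, "the"))  # cat
print(predict(W, idx, vocab, "cat"))  # sat
```

This toy has one weight per word pair (16 here). A real model's parameters are not literally word-to-word links, but the training principle of adjusting weights to reduce prediction error is the same.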

As expected, a 2020 analysis by OpenAI researchers found that models improve in accuracy and ability as they scale up.

Paper link: https://arxiv.org/pdf/2001.08361.pdf
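That 2020 analysis (Kaplan et al., "Scaling Laws for Neural Language Models") fit test loss to a power law in the number of non-embedding parameters N: L(N) = (N_c / N)^α_N. The sketch below uses the fitted constants reported in the paper, α_N ≈ 0.076 and N_c ≈ 8.8 × 10^13. The function name is ours, and the constants should be treated as illustrative. The point the sketch shows is that loss falls smoothly and predictably: every tenfold increase in N multiplies the loss by the same factor, 10^(-0.076) ≈ 0.84.

```python
def predicted_loss(n_params: float, n_c: float = 8.8e13, alpha_n: float = 0.076) -> float:
    """Power-law fit L(N) = (N_c / N) ** alpha_N from Kaplan et al. (2020).

    N counts non-embedding parameters; the law assumes training is not
    bottlenecked by data or compute. Loss is cross-entropy in nats per token.
    """
    return (n_c / n_params) ** alpha_n


for n in (1e8, 1e9, 1e10, 1e11):
    print(f"N = {n:.0e}: predicted loss {predicted_loss(n):.3f}")
```

A smooth curve like this is exactly what the "breakthrough" tasks described below fail to follow.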

With the release of models such as GPT-3 (175 billion parameters) and Google's PaLM (which can be scaled up to 540 billion parameters), users began discovering more and more emergent abilities.

One DeepMind engineer even reported being able to convince ChatGPT that it was a Linux terminal and getting it to run some simple mathematical code to compute the first 10 prime numbers. Remarkably, ChatGPT finished the task faster than the same code running on a real Linux machine.
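The 175-billion figure can be roughly reproduced with the approximation N ≈ 12 · n_layers · d_model², which is used in the scaling-laws paper linked above. The sketch assumes the largest GPT-3 configuration as published, with 96 layers and a model width of 12,288. The function name is hypothetical.

```python
def approx_non_embedding_params(n_layers: int, d_model: int) -> int:
    """Rough Transformer parameter count, N ≈ 12 * n_layers * d_model**2.

    Per layer: 4 * d_model**2 for the attention projections (query, key, value, output)
    plus 8 * d_model**2 for a feed-forward block whose hidden width is 4 * d_model.
    Biases, layer norms and the embedding matrices are ignored.
    """
    return 12 * n_layers * d_model ** 2


# Largest GPT-3 configuration: 96 layers, model width 12,288.
print(f"{approx_non_embedding_params(96, 12288):,}")  # 173,946,175,488
```

The estimate lands within 1% of the quoted 175 billion. Most of the remainder comes from the token-embedding matrix, which this approximation leaves out.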
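The report does not say what program was used. A hypothetical example of the kind of "simple math code" involved is a trial-division prime finder like the one below. The striking part is that ChatGPT produced the correct output by predicting text, not by executing anything.

```python
def first_primes(count: int) -> list[int]:
    """Return the first `count` primes by trial division against earlier primes."""
    primes: list[int] = []
    candidate = 2
    while len(primes) < count:
        # A number is prime if no smaller prime up to its square root divides it.
        if all(candidate % p != 0 for p in primes if p * p <= candidate):
            primes.append(candidate)
        candidate += 1
    return primes


print(first_primes(10))  # [2, 3, 5, 7, 11, 13, 17, 19, 23, 29]
```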

As with the movie emoji task, researchers had no reason to think that a language model built to predict text could convincingly imitate a computer terminal. Many of these emergent behaviors demonstrate "zero-shot" or "few-shot" learning: an LLM's ability to solve problems it has never, or only rarely, seen before.

Having found signs that LLMs can transcend the constraints of their training data, a legion of researchers is working to better understand what emergence looks like and how it happens. The first step is to document it thoroughly.
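The difference between zero-shot and few-shot use lies entirely in the prompt. A few-shot prompt prepends a handful of solved demonstrations, and the model is left to infer the pattern. The sketch below borrows the "English => French" layout popularized by the GPT-3 paper. The function name and exact format are assumptions rather than any particular model's required interface.

```python
def build_prompt(instruction: str, query: str, examples: tuple[tuple[str, str], ...] = ()) -> str:
    """Zero-shot if `examples` is empty; few-shot if it holds (input, output) demonstrations."""
    parts = [instruction]
    for source, target in examples:
        parts.append(f"{source} => {target}")
    # The model is asked to continue the text after the final "=>".
    parts.append(f"{query} =>")
    return "\n".join(parts)


zero_shot = build_prompt("Translate English to French:", "cheese")
few_shot = build_prompt(
    "Translate English to French:",
    "cheese",
    examples=(("sea otter", "loutre de mer"), ("peppermint", "menthe poivrée")),
)
print(zero_shot)
print()
print(few_shot)
```

The model's weights are not updated in either case. Any "learning" from the demonstrations happens only within the prompt.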

Beyond the Imitation Game

In 2020, Dyer and others at Google Research predicted that LLMs would have transformative impacts, but exactly what those impacts would be remained an open question.

So they asked research teams to contribute examples of difficult and diverse tasks in order to chart the limits of what language models could do. The effort became the Beyond the Imitation Game Benchmark (BIG-bench) project, named after the "imitation game" proposed by Alan Turing. Also known as the Turing test, it asks whether a computer can answer questions in a convincingly human way.

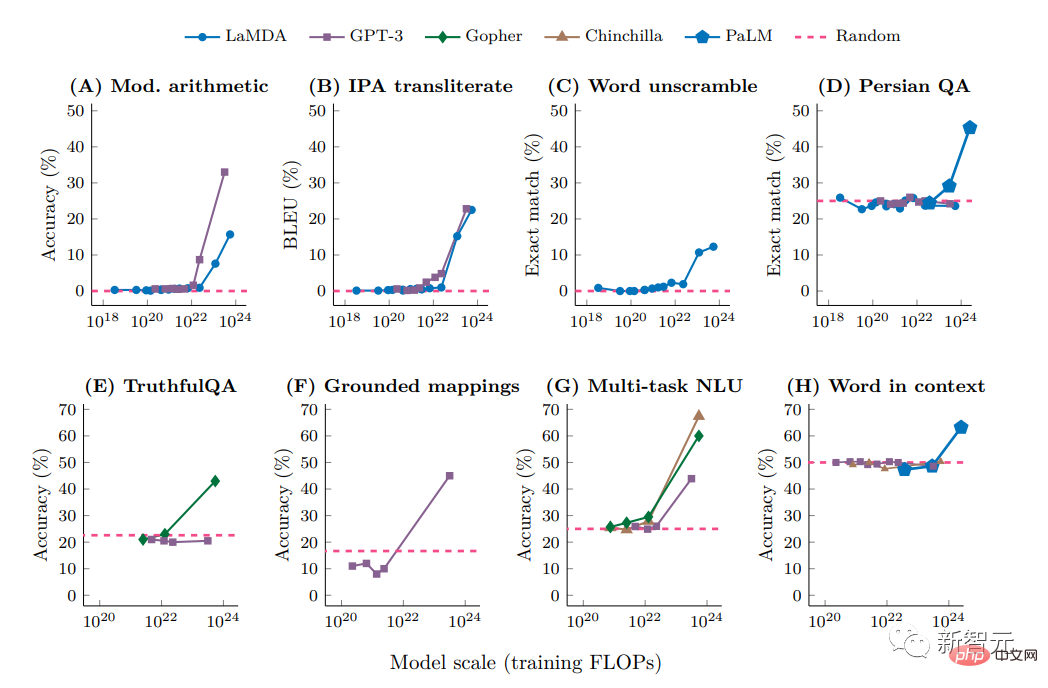

As expected, on some tasks the models' performance improved smoothly and predictably as complexity increased, while on other tasks, increasing the number of parameters produced no improvement at all.

However, on about 5% of the tasks, the researchers found what they called "breakthroughs": rapid, dramatic jumps in performance at some threshold scale. That threshold varied by task and by model.

For example, models with relatively few parameters (only a few million) could not reliably solve three-digit addition or two-digit multiplication problems, but at tens of billions of parameters, accuracy in some models shot up.

Similar jumps occurred on other tasks, including decoding the International Phonetic Alphabet, unscrambling the letters of a word, identifying offensive content in passages of Hinglish (a combination of Hindi and English), and generating an English equivalent of a Swahili proverb.

But researchers soon realized that model complexity was not the only driver. Some unexpected abilities could be coaxed out of smaller models with fewer parameters, or out of models trained on smaller datasets, if the data was of sufficiently high quality. In addition, the wording of a query affected the accuracy of the model's response.
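The contrast between smooth scaling and a "breakthrough" can be made concrete with two hypothetical accuracy curves plotted against log-scale parameter count. One rises steadily. The other is a steep sigmoid centered at an assumed threshold of 10^10.5 parameters. A helper then locates the largest jump between adjacent scales. Both curves are invented for illustration and are not BIG-bench results.

```python
import math


def breakthrough_accuracy(log10_params: float, threshold: float = 10.5, steepness: float = 4.0) -> float:
    """Hypothetical task whose accuracy jumps sharply near 10**threshold parameters."""
    return 1.0 / (1.0 + math.exp(-steepness * (log10_params - threshold)))


def smooth_accuracy(log10_params: float) -> float:
    """Hypothetical task whose accuracy climbs steadily from 1e6 to 1e12 parameters."""
    return min(1.0, max(0.0, (log10_params - 6.0) / 6.0))


def largest_jump(scales: list[float], accuracies: list[float]) -> tuple[float, float, float]:
    """Return (scale_before, scale_after, gain) for the biggest gain between adjacent scales."""
    steps = [(scales[i], scales[i + 1], accuracies[i + 1] - accuracies[i]) for i in range(len(scales) - 1)]
    return max(steps, key=lambda step: step[2])


scales = [float(s) for s in range(6, 13)]  # log10 of the parameter count
print(largest_jump(scales, [breakthrough_accuracy(s) for s in scales]))
print(largest_jump(scales, [smooth_accuracy(s) for s in scales]))
```

On the sigmoid curve, most of the total gain lands in a single tenfold step (10^10 to 10^11). On the smooth curve, every step contributes about the same amount.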
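A task like three-digit addition can be sketched as a generator of question/answer pairs plus a scorer. Exact-match scoring is assumed here: an answer counts only if it equals the reference string. The question wording and function names are hypothetical and are not BIG-bench's actual task format.

```python
import random


def make_addition_items(n: int, digits: int = 3, seed: int = 0) -> list[tuple[str, str]]:
    """Generate n (question, answer) pairs for addition of two `digits`-digit numbers."""
    rng = random.Random(seed)  # fixed seed so the benchmark is reproducible
    lo, hi = 10 ** (digits - 1), 10 ** digits - 1
    items = []
    for _ in range(n):
        a, b = rng.randint(lo, hi), rng.randint(lo, hi)
        items.append((f"What is {a} plus {b}?", str(a + b)))
    return items


def exact_match_accuracy(predictions: list[str], items: list[tuple[str, str]]) -> float:
    """Fraction of model outputs that equal the reference answer exactly (ignoring surrounding spaces)."""
    correct = sum(pred.strip() == answer for pred, (_, answer) in zip(predictions, items))
    return correct / len(items)


items = make_addition_items(5)
for question, answer in items:
    print(question, answer)
```

Exact match is all-or-nothing per item. A model that is "almost right" on every digit still scores zero, so under this kind of metric, gradual improvements can show up as abrupt jumps.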

In a paper presented last year at NeurIPS, the field's flagship conference, Google Brain researchers showed how a model prompted to explain itself (a technique called chain-of-thought reasoning) could correctly solve a math word problem that the same model could not solve without that prompt.
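The difference between a standard prompt and a chain-of-thought prompt is that the worked example shows its intermediate steps. The exemplar below is the tennis-ball problem from Wei et al.'s chain-of-thought prompting paper, shown with and without the reasoning. The helper names, and the convention of ending answers with "The answer is N.", are assumptions for this sketch.

```python
import re
from typing import Optional

# Exemplar from the chain-of-thought prompting paper (Wei et al., 2022), with and without reasoning.
EXEMPLAR_QUESTION = (
    "Q: Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
    "Each can has 3 tennis balls. How many tennis balls does he have now?\n"
)
STANDARD_EXEMPLAR = EXEMPLAR_QUESTION + "A: The answer is 11."
COT_EXEMPLAR = EXEMPLAR_QUESTION + (
    "A: Roger started with 5 balls. 2 cans of 3 tennis balls each is 6 tennis balls. "
    "5 + 6 = 11. The answer is 11."
)


def build_prompt(question: str, chain_of_thought: bool) -> str:
    """Prefix a new question with a solved example, with or without intermediate steps."""
    exemplar = COT_EXEMPLAR if chain_of_thought else STANDARD_EXEMPLAR
    return f"{exemplar}\n\nQ: {question}\nA:"


def extract_answer(completion: str) -> Optional[str]:
    """Pull the final number out of a completion that ends with 'The answer is N.'"""
    match = re.search(r"The answer is (-?\d+)", completion)
    return match.group(1) if match else None


question = (
    "The cafeteria had 23 apples. If they used 20 to make lunch "
    "and bought 6 more, how many apples do they have?"
)
print(build_prompt(question, chain_of_thought=True))
```

The model only sees a different example, but it tends to imitate the step-by-step format. That is the change the paper found could unlock problems the same model otherwise got wrong.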

Paper link: https://neurips.cc/Conferences/2022/ScheduleMultitrack?event=54087

Yi Tay, a Google Brain scientist who works on the systematic investigation of breakthroughs, pointed out that recent work suggests chain-of-thought prompting changes a model's scaling curves, and with them the point at which emergence occurs. Chain-of-thought prompts can also elicit emergent behaviors that were not found in the BIG-bench experiments.

Ellie Pavlick, a computer scientist at Brown University who studies computational models of language, believes that these recent findings raise at least two possibilities:

The first is that, as comparisons with biological systems suggest, larger models really do acquire new abilities spontaneously. Most likely, the model has learned something fundamentally new and different that was absent at smaller sizes. This is what we hope is the case: that some fundamental shift happens when models are scaled up.

The other, less sensational possibility is that what looks like a breakthrough may be the result of an internal, statistics-driven process that works through chain-of-thought reasoning. Large LLMs may simply be learning heuristics that are out of reach for models with fewer parameters or lower-quality data.

But she believes that working out which explanation is more likely depends on understanding how LLMs work. Since we don't know how they work under the hood, we can't say which of these guesses is more plausible.

Pitfalls hidden behind unknown powers

In February, Google released Bard, a ChatGPT-like chatbot, but a factual error in its demo served as a reminder: although more and more researchers rely on these language models for basic work, the models' outputs cannot be trusted without people checking them.

Emergence creates unpredictability, and unpredictability increases with scale, making it difficult for researchers to predict the consequences of widespread use.

To study emergence, you first need a case in mind: before studying the effects of scale, you cannot know what capabilities or limitations might arise.

Some harmful behaviors also emerge in certain models. Recent analyses of LLMs show that social bias often emerges at large parameter counts, meaning that larger models can suddenly become more biased. Failing to address this risk could jeopardize the subjects of these models.
