Home >Database >Mysql Tutorial >Detailed introduction to Mysql performance optimization subquery

Detailed introduction to Mysql performance optimization subquery

- 迷茫Original

- 2017-03-26 13:45:021441browse

I remember when I was working on a project, I heard a sentence, try not to use subqueries, so let’s take a look at this article to see if this sentence is correct.

Before that, It is necessary to introduce some conceptual things and mysql's general processing of statements.

When the connection thread of Mysql Server receives the SQL request sent by the Client, it will go through a series of decomposition Parse, perform corresponding analysis, and then Mysql will use the query optimizer module to perform calculations and analysis based on the relevant statistical information of the data tables involved in the Sql. Then it will derive a data access method that Mysql considers to be the most reasonable and optimal way, that is, We often say "Execution Plan", and then obtain the corresponding data by calling the storage engine interface based on the obtained execution plan. Then perform relevant processing on the data returned by the storage engine, and process it as required by the Client. The format is used as the result set and returned to the Client.

Note: The statistical data mentioned here are some data statistics obtained after we notify Mysql to analyze the relevant data of the table through the Analyze table command. . These data are very important to the Mysql optimizer. The quality of the execution plan generated by the optimizer is mainly determined by these statistical data.

1. Create a table

create table User( Id int not null PRIMARY key auto_increment , NickName varchar(50) comment '用户昵称', Sex int comment '性别', Sign varchar(50) comment '用户签名', Birthday datetime comment '用户生日', CreateTime datetime comment '创建时间') default charset=utf8 comment '用户表';create table UserGroup( Id int not null PRIMARY key auto_increment , UserId int not null comment 'user Id', GroupId int not null comment '用户组Id', CreateTime datetime comment '创建时间', -- key index_groupid(GroupId) using btree, key index_userid(groupid, UserId) using btree ) default charset=utf8 comment '用户组表';

2. Prepare data

var conStr = ConfigurationManager.ConnectionStrings["ConStr"].ToString();

using (IDbConnection conn = new MySqlConnection(conStr))

{

Stopwatch watch = new Stopwatch();

var sql = string.Empty;

var names = new string[] { "非", "想", "红", "帝", "德", "看", "梅", "插", "兔" };

Random ran = new Random();

var insertSql = @" insert into User(NickName,Sex,Sign, Birthday, CreateTime) values(@NickName,@Sex,@Sign, @Birthday, @CreateTime);

INSERT INTO usergroup (UserId, GroupId, CreateTime ) VALUES (LAST_INSERT_ID() , @GroupId, @CreateTime);";

watch.Start();

if (conn.State == ConnectionState.Closed)

{

conn.Open();

}

var tran = conn.BeginTransaction();

for (int i = 0; i < 100000; i++)

{

var param = new { NickName = names[ran.Next(9)] + names[ran.Next(9)] + i, Sign = names[ran.Next(9)] + names[ran.Next(9)], CreateTime = DateTime.Now, Birthday = DateTime.Now.AddYears(ran.Next(10, 30)), Sex = i % 2, GroupId = ran.Next(1, 100) };

conn.Execute(insertSql, param, tran);

}

tran.Commit();

conn.Dispose();

watch.Stop();

Console.WriteLine(watch.ElapsedMilliseconds);

}Here I inserted 5000 pieces of data, and the group was divided into 99 groups, randomly.

3. Query sql

explain select user.id, user.nickname from usergroup left join user on usergroup.UserId = user.Id where usergroup.groupid = 1 order by usergroup.UserId desc limit 100, 20; explain select user.id, user.nickname from (select id, userid from usergroup where groupid = 1 order by userid limit 100, 20) t left join user on t.UserId = user.id ; explain select user.id, user.nickname from (select id, userid from usergroup where groupid = 1 order by userid ) t left join user on t.UserId = user.id limit 100, 20;

Second Both the first sentence and the third sentence use subqueries. The difference is that the second sentence first obtains 20 pieces of data and then associates them with the user table.

4. Analysis

In the case of 100000 pieces of data:

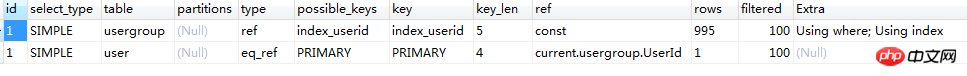

Look at the first sentence

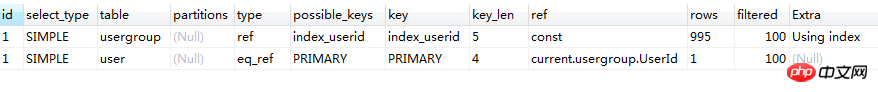

and then look at the second sentence

The third sentence

Looking at the above three pictures, it seems that something can be seen.

Look first Their rows, the second sentence has the most, adding up to more than 1,000, and the other two sentences add up to 996. But what I want to say is, here is not to look at the sum of rows. The correct way is to start from the largest ID Starting from the statement, statements with the same id are executed sequentially from top to bottom.

Let’s first look at the statement with id=2 in the second sentence and the statement with id=1 in the first sentence. They are exactly the same. They all filter data from the usergroup table, and can get the same result set A.

It seems that they all operate based on the same result set, and there will be a difference next.

Look at the first sentence first, and then based on the result set A, go to the left join table user, filter out the final data, and return it to the client.

The second sentence is based on A , filter the data again, get the required data, and then use this data to left join with the user table to get the final result.

From the above point of view, among the execution plans, the second execution plan is more efficient .

If you can significantly reduce the scope of the query through a subquery, you can consider using a subquery statement.

The above is the detailed content of Detailed introduction to Mysql performance optimization subquery. For more information, please follow other related articles on the PHP Chinese website!