Torchchat: Bringing Large Language Model Inference to Your Local Machine

Large language models (LLMs) are transforming technology, yet deploying them on personal devices has been challenging due to hardware limitations. PyTorch's new Torchchat framework addresses this, enabling efficient LLM execution across various hardware platforms, from laptops to mobile devices. This article provides a practical guide to setting up and using Torchchat locally with Python.

PyTorch, the open-source machine learning framework originally developed by Facebook's AI Research lab (FAIR), underpins Torchchat. Its versatility extends to computer vision and natural language processing.

Torchchat's Key Features:

Torchchat offers four core functionalities:

- Python/PyTorch LLM Execution: Run LLMs on machines with Python and PyTorch installed, interacting directly via the terminal or a REST API server. This article focuses on this setup.

- Self-Contained Model Deployment: Using AOT Inductor (Ahead-of-Time Inductor), Torchchat creates self-contained executables (dynamic libraries) that run without Python or PyTorch. This keeps the model runtime stable in production environments without recompilation. AOT Inductor also relies on efficient binary formats and carries less overhead than TorchScript.

- Mobile Device Execution: Leveraging ExecuTorch, Torchchat optimizes models for mobile and embedded devices, producing PTE artifacts for execution.

- Model Evaluation: Evaluate LLM performance using the lm_eval framework, crucial for research and benchmarking.
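The REST API route mentioned in the first feature can be exercised from Python with the standard library alone. The sketch below only builds the request; it assumes a Torchchat server is already running locally and accepts OpenAI-style chat requests at the URL shown. The host, port, path, and payload fields are assumptions to check against the Torchchat documentation, and `build_chat_request` and `send` are hypothetical helper names.

```python
import json
import urllib.request

# Assumption: a local torchchat server listens here and accepts
# OpenAI-style chat requests. Adjust host, port, and path to your setup.
ASSUMED_URL = "http://127.0.0.1:5000/v1/chat/completions"


def build_chat_request(model, user_message, url=ASSUMED_URL, max_tokens=200):
    """Build (but do not send) a JSON POST request for a chat completion."""
    payload = {
        "model": model,
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request, timeout=60):
    """Send the request and decode the JSON reply (needs a running server)."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    req = build_chat_request("stories15M", "Tell me a short story.")
    print(req.full_url)
    print(req.data.decode("utf-8"))
```

Keeping request construction separate from sending makes the payload easy to inspect before any server is involved.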

Why Run LLMs Locally?

Local LLM execution offers several advantages:

- Enhanced Privacy: Ideal for sensitive data in healthcare, finance, and legal sectors, ensuring data remains within organizational infrastructure.

- Real-Time Performance: Minimizes latency for applications needing rapid responses, such as interactive chatbots and real-time content generation.

- Offline Capability: Enables LLM usage in areas with limited or no internet connectivity.

- Cost Optimization: More cost-effective than cloud API usage for high-volume applications.

Local Setup with Python: A Step-by-Step Guide

- Clone the Repository: Clone the Torchchat repository using Git:

git clone git@github.com:pytorch/torchchat.git

Alternatively, download directly from the GitHub interface.

- Installation: Assuming Python 3.10 is installed, create and activate a virtual environment:

python -m venv .venv

source .venv/bin/activate

Install dependencies using the provided script:

./install_requirements.sh

Verify installation:

python torchchat.py --help
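If the installation does not behave as expected, a quick look at the interpreter can help. The following is a minimal sketch, not part of Torchchat: `check_environment` is a hypothetical helper that reports the Python version, whether a virtual environment is active, and whether the `torch` package can be located.

```python
import importlib.util
import sys

MIN_PYTHON = (3, 10)  # the guide above assumes Python 3.10


def check_environment(min_python=MIN_PYTHON):
    """Return a small report on the interpreter and the PyTorch install."""
    return {
        "python_version": ".".join(str(part) for part in sys.version_info[:3]),
        "python_ok": sys.version_info[:2] >= min_python,
        # True when running inside a virtual environment such as .venv
        "in_venv": sys.prefix != sys.base_prefix,
        # find_spec locates the package without importing it
        "torch_found": importlib.util.find_spec("torch") is not None,
    }


if __name__ == "__main__":
    for key, value in check_environment().items():
        print(f"{key}: {value}")
```

Run it with the same interpreter that will run torchchat.py, so the report reflects the activated environment.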

Using Torchchat:

- Listing Supported Models:

python torchchat.py list

- Downloading a Model: Install the Hugging Face CLI (pip install huggingface_hub), create a Hugging Face account, generate an access token, and log in (huggingface-cli login). Download a model (e.g., stories15M):

python torchchat.py download stories15M

- Running a Model: Generate text:

python torchchat.py generate stories15M --prompt "Hi my name is"

Or use chat mode:

python torchchat.py chat stories15M

- Requesting Access: For models requiring access (e.g., llama3), follow the instructions in the error message.
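The list, download, generate, and chat steps can also be driven from a Python script by assembling the command line and passing it to `subprocess`. This is a rough sketch under a few assumptions: `torchchat_command` and `run_torchchat` are hypothetical helpers, the script path `torchchat.py` is relative to the cloned repository, and the prompt text is only an example.

```python
import subprocess
import sys


def torchchat_command(subcommand, model=None, prompt=None, script="torchchat.py"):
    """Assemble the argument list for one torchchat CLI call."""
    cmd = [sys.executable, script, subcommand]
    if model is not None:
        cmd.append(model)
    if prompt is not None:
        cmd += ["--prompt", prompt]
    return cmd


def run_torchchat(cmd, repo_dir="."):
    """Run the command from the cloned repository and return its stdout."""
    result = subprocess.run(
        cmd, cwd=repo_dir, capture_output=True, text=True, check=True
    )
    return result.stdout


if __name__ == "__main__":
    cmd = torchchat_command("generate", "stories15M", prompt="Once upon a time")
    print(" ".join(cmd[1:]))
    # Uncomment inside a torchchat checkout with the model downloaded:
    # print(run_torchchat(cmd))
```

Using `sys.executable` keeps the call inside the active virtual environment, and passing a list rather than a shell string avoids quoting problems in prompts.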

Advanced Usage: Fine-tuning Performance

- Precision Control (--dtype): Adjust the data type for speed/accuracy trade-offs (e.g., --dtype fast).

- Just-In-Time (JIT) Compilation (--compile): Improves inference speed but increases startup time.

- Quantization (--quantize): Reduces model size and improves speed using a JSON configuration file.

- Device Specification (--device): Specify the device (e.g., --device cuda).
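To get a feel for why precision and quantization matter, it helps to estimate how much memory the weights alone occupy at different bit widths. The sketch below is back-of-the-envelope arithmetic, not a Torchchat API: `weight_memory_mib` is a hypothetical helper, the 15 million parameter count for stories15M is inferred from the model name, and the estimate ignores activations, the KV cache, and the scale metadata that quantized formats store.

```python
# Bits used to store one weight in each format.
BITS_PER_WEIGHT = {
    "float32": 32,
    "float16": 16,
    "bfloat16": 16,
    "int8": 8,
    "int4": 4,
}


def weight_memory_mib(num_parameters, dtype):
    """Estimate weight storage in MiB: parameters * bits / 8 bytes / 2**20."""
    bits = BITS_PER_WEIGHT[dtype]
    return num_parameters * bits / 8 / (1024 ** 2)


if __name__ == "__main__":
    assumed_parameters = 15_000_000  # assumption: inferred from "stories15M"
    for dtype in BITS_PER_WEIGHT:
        size = weight_memory_mib(assumed_parameters, dtype)
        print(f"{dtype:>8}: {size:8.1f} MiB")
```

Halving the bit width halves the estimate, which is the basic reason lower precision and quantization shrink models and often speed up inference on memory-bound hardware.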

Conclusion

Torchchat simplifies local LLM execution, making advanced AI more accessible. This guide provides a foundation for exploring its capabilities. Further investigation into Torchchat's features is highly recommended.

The above is the detailed content of PyTorch's torchchat Tutorial: Local Setup With Python. For more information, please follow other related articles on the PHP Chinese website!

Gemini 2.5 Pro vs GPT 4.5: Can Google Beat OpenAI's Best?Apr 24, 2025 am 09:39 AM

Gemini 2.5 Pro vs GPT 4.5: Can Google Beat OpenAI's Best?Apr 24, 2025 am 09:39 AMThe AI race is heating up with newer, competing models launched every other day. Amid this rapid innovation, Google Gemini 2.5 Pro challenges OpenAI GPT-4.5, both offering cutting-edge advancements in AI capabilities. In this Gem

Karun Thanks's bluepring for data science successApr 24, 2025 am 09:38 AM

Karun Thanks's bluepring for data science successApr 24, 2025 am 09:38 AMKarun Thankachan: A Data Science Journey from Software Engineer to Walmart Senior Data Scientist Karun Thankachan, a senior data scientist specializing in recommender systems and information retrieval, shares his career path, insights on scaling syst

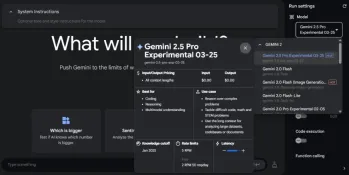

We Tried Gemini 2.5 Pro Experimental and It's Mind-Blowing!Apr 24, 2025 am 09:36 AM

We Tried Gemini 2.5 Pro Experimental and It's Mind-Blowing!Apr 24, 2025 am 09:36 AMGoogle DeepMind's Gemini 2.5 Pro (experimental): A Powerful New AI Model Google DeepMind has released Gemini 2.5 Pro (experimental), a groundbreaking AI model that has quickly ascended to the top of the LMArena Leaderboard. Building on its predecess

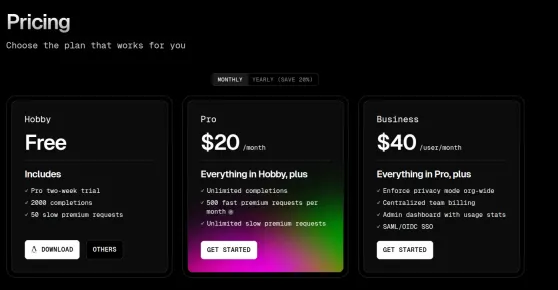

Top 5 Code Editors to Vibe Code in 2025Apr 24, 2025 am 09:31 AM

Top 5 Code Editors to Vibe Code in 2025Apr 24, 2025 am 09:31 AMRevolutionizing Software Development: A Deep Dive into AI Code Editors Tired of endless coding, constant tab-switching, and frustrating troubleshooting? The future of coding is here, and it's powered by AI. AI code editors understand your project f

5 Jobs AI Can't Replace According to Bill GatesApr 24, 2025 am 09:26 AM

5 Jobs AI Can't Replace According to Bill GatesApr 24, 2025 am 09:26 AMBill Gates recently visited Jimmy Fallon's Tonight Show, talking about his new book "Source Code", his childhood and Microsoft's 50-year journey. But the most striking thing in the conversation is about the future, especially the rise of artificial intelligence and its impact on our work. Gates shared his thoughts in a hopeful yet honest way. He believes that AI will revolutionize the world at an unexpected rate and talks about work that AI cannot replace in the near future. Let's take a look at these tasks together. Table of contents A new era of abundant intelligence Solve global shortages in healthcare and education Will artificial intelligence replace jobs? Gates said: For some jobs, it will Work that artificial intelligence (currently) cannot replace: human touch remains important Conclusion

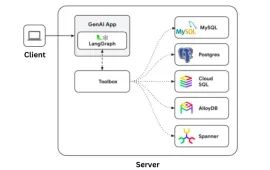

Google Gen AI Toolbox: A Python Library for SQL DatabasesApr 24, 2025 am 09:23 AM

Google Gen AI Toolbox: A Python Library for SQL DatabasesApr 24, 2025 am 09:23 AMGoogle's Gen AI Toolbox for Databases: Revolutionizing Database Interaction with Natural Language Google has unveiled the Gen AI Toolbox for Databases, a revolutionary open-source Python library designed to simplify database interactions using natura

OpenAI's GPT 4o Image Generation is SUPER COOLApr 24, 2025 am 09:21 AM

OpenAI's GPT 4o Image Generation is SUPER COOLApr 24, 2025 am 09:21 AMOpenAI's ChatGPT Now Boasts Native Image Generation: A Game Changer ChatGPT's latest update has sent ripples through the tech world with the introduction of native image generation, powered by GPT-4o. Sam Altman himself hailed it as "one of the

How to Build Multilingual Voice Agent Using OpenAI Agent SDK? - Analytics VidhyaApr 24, 2025 am 09:16 AM

How to Build Multilingual Voice Agent Using OpenAI Agent SDK? - Analytics VidhyaApr 24, 2025 am 09:16 AMOpenAI's Agent SDK now offers a Voice Agent feature, revolutionizing the creation of intelligent, real-time, speech-driven applications. This allows developers to build interactive experiences like language tutors, virtual assistants, and support bo

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Dreamweaver CS6

Visual web development tools

WebStorm Mac version

Useful JavaScript development tools

ZendStudio 13.5.1 Mac

Powerful PHP integrated development environment

SecLists

SecLists is the ultimate security tester's companion. It is a collection of various types of lists that are frequently used during security assessments, all in one place. SecLists helps make security testing more efficient and productive by conveniently providing all the lists a security tester might need. List types include usernames, passwords, URLs, fuzzing payloads, sensitive data patterns, web shells, and more. The tester can simply pull this repository onto a new test machine and he will have access to every type of list he needs.

SublimeText3 Mac version

God-level code editing software (SublimeText3)