How LLMs Work: Pre-Training to Post-Training, Neural Networks, Hallucinations, and Inference

Unveiling the Magic Behind Large Language Models (LLMs): A Two-Part Exploration

Large Language Models (LLMs) often appear magical, but their inner workings are surprisingly systematic. This two-part series demystifies LLMs, explaining their construction, training, and refinement into the AI systems we use today. Inspired by Andrej Karpathy's insightful (and lengthy!) YouTube video, this condensed version provides the core concepts in a more accessible format. While Karpathy's video is highly recommended (800,000 views in just 10 days!), this 10-minute read distills the key takeaways from the first 1.5 hours.

Part 1: From Raw Data to Base Model

LLM development involves two crucial phases: pre-training and post-training.

1. Pre-training: Teaching the Language

Before generating text, an LLM must learn language structure. This computationally intensive pre-training process involves several steps:

- Data Acquisition and Preprocessing: Massive, diverse datasets are gathered, often from sources like Common Crawl (250 billion web pages). Raw data must be cleaned to remove spam, duplicates, and low-quality content. Datasets like FineWeb, available on Hugging Face, provide an already preprocessed version.

- Tokenization: Text is converted into numerical tokens (words, subwords, or characters) that the neural network can process. GPT-4's tokenizer, for example, has a vocabulary of 100,277 unique tokens. Tools like Tiktokenizer let you visualize how a given text is split.

- Neural Network Training: The neural network learns to predict the next token in a sequence based on context. This involves billions of iterations, adjusting parameters (weights) via backpropagation to improve prediction accuracy. The network's architecture dictates how input tokens are processed to generate outputs.
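The tokenization and training steps above can be sketched on a toy scale. This is a minimal illustration, not how production LLMs are built: it assumes a character-level tokenizer instead of subwords, a bigram model (each prediction depends only on the previous token) instead of a deep network, and a hand-derived gradient that stands in for backpropagation. The corpus and all names are made up for the example.

```python
import math

corpus = "the cat sat on the mat. the cat ate."

# Tokenization: map each distinct character to an integer ID.
# (Real tokenizers use subword units; characters keep the sketch small.)
vocab = sorted(set(corpus))
stoi = {ch: i for i, ch in enumerate(vocab)}
tokens = [stoi[ch] for ch in corpus]
V = len(vocab)

# Parameters: one row of logits per context token (a bigram model).
W = [[0.0] * V for _ in range(V)]

# Training pairs: (current token, next token).
pairs = list(zip(tokens, tokens[1:]))


def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def average_loss():
    # Mean negative log-probability assigned to the true next token.
    return -sum(math.log(softmax(W[a])[b]) for a, b in pairs) / len(pairs)


def train(steps=200, lr=1.0):
    for _ in range(steps):
        grad = [[0.0] * V for _ in range(V)]
        for a, b in pairs:
            probs = softmax(W[a])
            for j in range(V):
                # Gradient of cross-entropy w.r.t. logits: probs - one_hot(target).
                grad[a][j] += (probs[j] - (1.0 if j == b else 0.0)) / len(pairs)
        for i in range(V):
            for j in range(V):
                W[i][j] -= lr * grad[i][j]


loss_before = average_loss()
train()
loss_after = average_loss()

# Most likely token after "h" according to the trained model.
row = W[stoi["h"]]
predicted_after_h = vocab[row.index(max(row))]
print(loss_before, loss_after, predicted_after_h)
```

Before training, every next token is equally likely, so the loss equals the log of the vocabulary size; each update nudges the weights so that observed continuations become more probable.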

The resulting base model captures word relationships and statistical patterns but is not optimized for real-world tasks. It behaves like an advanced autocomplete: it continues text according to learned probabilities and follows instructions only weakly. In-context learning (placing worked examples in the prompt) can steer it toward a task, but making it a dependable assistant requires further training.
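In-context learning with a base model amounts to writing a document whose most natural continuation is the answer you want. The sketch below builds such a few-shot prompt; the translation task, the "English:"/"French:" labels, and the function name are illustrative assumptions, and the model call itself is left out.

```python
def build_few_shot_prompt(examples, query):
    """Format (input, output) pairs followed by an unanswered query."""
    lines = []
    for source, target in examples:
        lines.append(f"English: {source}")
        lines.append(f"French: {target}")
        lines.append("")  # blank line between examples
    lines.append(f"English: {query}")
    lines.append("French:")  # the model is expected to continue from here
    return "\n".join(lines)


prompt = build_few_shot_prompt([("cat", "chat"), ("dog", "chien")], "bird")
print(prompt)
```

A base model given this prompt has no instruction to follow; it simply tends to continue the pattern established by the examples.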

2. Post-training: Refining for Practical Use

Base models are refined through post-training using smaller, specialized datasets. This isn't explicit programming but rather implicit instruction through structured examples.

Post-training methods include:

- Instruction/Conversation Fine-tuning: Teaches the model to follow instructions, engage in conversations, adhere to safety guidelines, and refuse harmful requests (e.g., InstructGPT).

- Domain-Specific Fine-tuning: Adapts the model for specific fields (medicine, law, programming).

Special tokens are introduced to delineate user input and AI responses.
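As a rough sketch of how special tokens mark conversation turns, the function below flattens a list of messages into a single string that would then be tokenized. The token names `<|im_start|>` and `<|im_end|>` follow one commonly seen convention and are assumptions here; each model family defines its own tokens and template.

```python
IM_START = "<|im_start|>"  # illustrative special tokens; real names vary by model
IM_END = "<|im_end|>"


def render_chat(messages, add_generation_prompt=True):
    """Serialize role-tagged messages into one training or inference string."""
    parts = [
        f"{IM_START}{m['role']}\n{m['content']}{IM_END}\n" for m in messages
    ]
    if add_generation_prompt:
        # Open an assistant turn so the model writes the reply next.
        parts.append(f"{IM_START}assistant\n")
    return "".join(parts)


rendered = render_chat([{"role": "user", "content": "What is 2 + 2?"}])
print(rendered)
```

During fine-tuning the model sees many conversations in this shape, so at inference time it learns to stop its reply by emitting the end-of-turn token.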

Inference: Generating Text

Inference can be run at any stage of training to check what the model has learned. At each step the model assigns a probability to every token in its vocabulary and samples the next token from that distribution, producing text that need not appear verbatim in the training data but is statistically consistent with it. Because sampling is stochastic, the same input can yield different outputs.
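The sampling step can be illustrated with a few lines of Python. This is a minimal sketch: the three-token vocabulary and its scores (logits) are made up, and the `temperature` parameter is a common sampling knob that the text above does not discuss; lower values make the choice more deterministic.

```python
import math
import random
from collections import Counter


def sample_next_token(logits, temperature=1.0, rng=random):
    """Sample a token index from softmax(logits / temperature)."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(x - m) for x in scaled]
    return rng.choices(range(len(logits)), weights=weights, k=1)[0]


vocab = ["cat", "dog", "fish"]   # hypothetical vocabulary
logits = [2.0, 1.0, 0.0]         # hypothetical model scores

rng = random.Random(0)
counts = Counter(vocab[sample_next_token(logits, rng=rng)] for _ in range(1000))
cold = {vocab[sample_next_token(logits, temperature=0.01, rng=rng)] for _ in range(100)}
print(counts, cold)
```

Repeated calls with the same logits return different tokens in proportion to their probabilities, which is why identical prompts can produce different completions.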

Hallucinations: Addressing False Information

Hallucinations, where LLMs generate false information, arise from their probabilistic nature. They don't "know" facts but predict likely word sequences. Mitigation strategies include:

- "I don't know" Training: Explicitly training the model to recognize knowledge gaps through self-interrogation and automated question generation.

- Web Search Integration: Extending knowledge by accessing external search tools, incorporating results into the model's context window.
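One way to picture the "I don't know" approach is as a data-building loop: ask the model a question with a known answer several times, and if it does not answer correctly every time, add a training example whose target is a refusal. The sketch below assumes a hypothetical `ask_model` callable and uses a simple substring check to grade answers; a real pipeline would generate questions automatically and grade answers more robustly.

```python
REFUSAL = "I'm sorry, I don't know."


def build_refusal_examples(qa_pairs, ask_model, attempts=3):
    """Return fine-tuning examples for questions the model fails to answer."""
    examples = []
    for question, reference in qa_pairs:
        answers = [ask_model(question) for _ in range(attempts)]
        knows = all(reference.lower() in a.lower() for a in answers)
        if not knows:
            # Teach the model to admit the gap instead of guessing.
            examples.append({"question": question, "target": REFUSAL})
    return examples


# Stand-in for a real model: knows one fact and guesses otherwise.
known = {"What is the capital of France?": "The capital is Paris."}


def fake_model(question):
    return known.get(question, "Probably Atlantis.")


qa_pairs = [
    ("What is the capital of France?", "Paris"),
    ("Who won the 1987 village chess cup?", "Orla Jensen"),  # made-up fact
]
refusals = build_refusal_examples(qa_pairs, fake_model)
print(refusals)
```

Only the question the stand-in model gets wrong yields a refusal example; questions it answers consistently are left alone so that it keeps answering them.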

LLMs access knowledge through vague recollections (patterns from pre-training) and working memory (information in the context window). System prompts can establish a consistent model identity.

Conclusion (Part 1)

This part explored the foundational aspects of LLM development. Part 2 will delve into reinforcement learning and examine cutting-edge models. Your questions and suggestions are welcome!


One Prompt Can Bypass Every Major LLM's SafeguardsApr 25, 2025 am 11:16 AM

One Prompt Can Bypass Every Major LLM's SafeguardsApr 25, 2025 am 11:16 AMHiddenLayer's groundbreaking research exposes a critical vulnerability in leading Large Language Models (LLMs). Their findings reveal a universal bypass technique, dubbed "Policy Puppetry," capable of circumventing nearly all major LLMs' s

5 Mistakes Most Businesses Will Make This Year With SustainabilityApr 25, 2025 am 11:15 AM

5 Mistakes Most Businesses Will Make This Year With SustainabilityApr 25, 2025 am 11:15 AMThe push for environmental responsibility and waste reduction is fundamentally altering how businesses operate. This transformation affects product development, manufacturing processes, customer relations, partner selection, and the adoption of new

H20 Chip Ban Jolts China AI Firms, But They've Long Braced For ImpactApr 25, 2025 am 11:12 AM

H20 Chip Ban Jolts China AI Firms, But They've Long Braced For ImpactApr 25, 2025 am 11:12 AMThe recent restrictions on advanced AI hardware highlight the escalating geopolitical competition for AI dominance, exposing China's reliance on foreign semiconductor technology. In 2024, China imported a massive $385 billion worth of semiconductor

If OpenAI Buys Chrome, AI May Rule The Browser WarsApr 25, 2025 am 11:11 AM

If OpenAI Buys Chrome, AI May Rule The Browser WarsApr 25, 2025 am 11:11 AMThe potential forced divestiture of Chrome from Google has ignited intense debate within the tech industry. The prospect of OpenAI acquiring the leading browser, boasting a 65% global market share, raises significant questions about the future of th

How AI Can Solve Retail Media's Growing PainsApr 25, 2025 am 11:10 AM

How AI Can Solve Retail Media's Growing PainsApr 25, 2025 am 11:10 AMRetail media's growth is slowing, despite outpacing overall advertising growth. This maturation phase presents challenges, including ecosystem fragmentation, rising costs, measurement issues, and integration complexities. However, artificial intell

'AI Is Us, And It's More Than Us'Apr 25, 2025 am 11:09 AM

'AI Is Us, And It's More Than Us'Apr 25, 2025 am 11:09 AMAn old radio crackles with static amidst a collection of flickering and inert screens. This precarious pile of electronics, easily destabilized, forms the core of "The E-Waste Land," one of six installations in the immersive exhibition, &qu

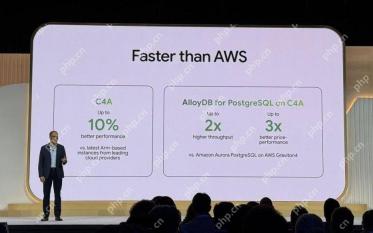

Google Cloud Gets More Serious About Infrastructure At Next 2025Apr 25, 2025 am 11:08 AM

Google Cloud Gets More Serious About Infrastructure At Next 2025Apr 25, 2025 am 11:08 AMGoogle Cloud's Next 2025: A Focus on Infrastructure, Connectivity, and AI Google Cloud's Next 2025 conference showcased numerous advancements, too many to fully detail here. For in-depth analyses of specific announcements, refer to articles by my

Talking Baby AI Meme, Arcana's $5.5 Million AI Movie Pipeline, IR's Secret Backers RevealedApr 25, 2025 am 11:07 AM

Talking Baby AI Meme, Arcana's $5.5 Million AI Movie Pipeline, IR's Secret Backers RevealedApr 25, 2025 am 11:07 AMThis week in AI and XR: A wave of AI-powered creativity is sweeping through media and entertainment, from music generation to film production. Let's dive into the headlines. AI-Generated Content's Growing Impact: Technology consultant Shelly Palme

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

EditPlus Chinese cracked version

Small size, syntax highlighting, does not support code prompt function

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.

Dreamweaver Mac version

Visual web development tools