Summary of FAQs for DeepSeek usage

DeepSeek AI Tool User Guide and FAQ

DeepSeek is a powerful AI tool. This article answers some common usage questions to help you get started quickly.

FAQ:

-

Differences between access methods: The web version, the App, and API calls do not differ in functionality; the App is just a wrapper around the web version. Local deployment uses a distilled model, which is somewhat weaker than the full DeepSeek-R1, although the 32B model theoretically reaches about 90% of the full version's capability.

-

What is SillyTavern? SillyTavern is a front-end interface. It does not include a model of its own, so it has to call an AI model through an API or through Ollama.

-

What is "breaking the limit" (jailbreaking)? AI models usually have ethical restrictions built in. Breaking the limit means bypassing these restrictions with specific prompts to access more content.

-

Reasons for interruption of AI responses: The response may have touched on a sensitive topic and been filtered by a secondary review.

-

How to bypass the ethical restrictions of AI? Please refer to the relevant resources in the group files (because of group rules, please visit the unrestricted group for more resources).

-

Is the model deployed by Nvidia the full version? Nvidia deploys the full 671B model, but it may respond more slowly than the official version, especially when handling long text.

-

Does DeepSeek have a context limit? How can it be extended? Yes, there is a context limit. When calling the API from Cherry Studio, for example, you can adjust the context length in the model settings.
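As a rough illustration of what a context limit means for a client, the sketch below trims a conversation so that it fits a token budget before it is sent. It is not how Cherry Studio or DeepSeek actually does it: the function names are hypothetical, and the "one token per four characters" estimate is a crude assumption rather than a real tokenizer.

```python
from typing import Dict, List


def estimate_tokens(text: str) -> int:
    """Crude estimate (assumption): roughly one token per 4 characters."""
    return max(1, len(text) // 4)


def trim_history(messages: List[Dict[str, str]], max_tokens: int) -> List[Dict[str, str]]:
    """Keep system messages plus the most recent turns that fit in max_tokens."""
    system = [m for m in messages if m["role"] == "system"]
    rest = [m for m in messages if m["role"] != "system"]

    # Reserve room for the system messages first.
    budget = max_tokens - sum(estimate_tokens(m["content"]) for m in system)

    kept = []
    # Walk backwards so the newest turns are kept and the oldest are dropped.
    for message in reversed(rest):
        cost = estimate_tokens(message["content"])
        if cost > budget:
            break
        kept.append(message)
        budget -= cost

    return system + list(reversed(kept))
```

A larger context length setting simply raises `max_tokens` here, so fewer old turns are dropped.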

-

How to deploy locally? Please refer to the video tutorial by Bilibili uploader NathMath: BV1NGf2YtE8r (a tutorial on fully local deployment of the DeepSeek R1 inference model).

-

How to choose the right model size? Again, refer to NathMath's video. In short, for users with a discrete graphics card (preferably an NVIDIA card), system memory plus video memory determines the most suitable model size. For example, with a 4050 graphics card (8 GB of video memory) and 16 GB of system memory, the 14B model is recommended.
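The "system memory plus video memory" rule can be sketched as a small calculation. The two constants below are assumptions chosen for illustration, not figures from the video: about 0.65 GB per billion parameters (roughly a 4-bit quantized model with some overhead), and only half of the combined memory treated as usable, leaving the rest for the operating system and the context cache. The function name is hypothetical.

```python
# Sizes of the distilled DeepSeek-R1 models, in billions of parameters.
DISTILL_SIZES_B = [1.5, 7, 8, 14, 32, 70]


def pick_model_size(vram_gb, ram_gb, gb_per_billion=0.65, usable_fraction=0.5):
    """Return the largest distilled size that fits the memory budget, or None.

    gb_per_billion and usable_fraction are illustrative assumptions.
    """
    budget_gb = (vram_gb + ram_gb) * usable_fraction
    fitting = [size for size in DISTILL_SIZES_B if size * gb_per_billion <= budget_gb]
    return max(fitting) if fitting else None


# The FAQ's example: 8 GB of video memory plus 16 GB of system memory.
print(pick_model_size(8, 16))  # 14
```

With these assumed constants the sketch reproduces the 14B recommendation for the example above; other quantization levels would need a different `gb_per_billion`.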

-

What to do after Cherry Studio is connected? Once Cherry Studio is connected, return to the main interface and select the model at the top of it. The Nvidia-hosted version of DeepSeek is named deepseek-ai/deepseek-r1.
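For readers calling the Nvidia-hosted model from their own code rather than from Cherry Studio, the following minimal sketch builds (but does not send) a chat request using only the standard library. The model name comes from the answer above; the endpoint URL and the OpenAI-style request shape are assumptions that should be checked against Nvidia's own API documentation, and `build_chat_request` is a hypothetical helper.

```python
import json
import urllib.request

# Assumed endpoint (OpenAI-compatible chat completions); verify before use.
NVIDIA_CHAT_URL = "https://integrate.api.nvidia.com/v1/chat/completions"
# Model name as given in the FAQ.
MODEL_NAME = "deepseek-ai/deepseek-r1"


def build_chat_request(prompt, api_key, max_tokens=1024):
    """Build a POST request for a single-turn chat; nothing is sent here."""
    payload = {
        "model": MODEL_NAME,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        NVIDIA_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

To actually send it, pass the returned object to `urllib.request.urlopen` with a real API key and parse the JSON body of the response.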

-

How to use .md files? .md files are text files in Markdown format. It is recommended to use Typora or VS Code to open and edit.

-

Can Git and Node.js be installed on other disks? It is recommended to install them on the same disk to avoid configuration errors later.

-

Is the DeepSeek in 360 Nano AI the full version? No. The DeepSeek in 360 Nano AI is not the full version; its capabilities are weak and it is not recommended.

-

Why does the AI respond more slowly over time? The amount of computation grows with the length of the text, so some slowdown is normal. Starting a new conversation is recommended.
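A simplified way to see why long conversations slow down: if the whole history is processed again on every turn, the number of tokens handled per turn keeps growing. The sketch below assumes exactly that simple model (it ignores caching and other optimizations a real service may use), and the function name is hypothetical.

```python
def tokens_processed_per_turn(turn_lengths):
    """For each turn, return the total tokens the model must read:
    all earlier turns plus the new one (simplified assumption)."""
    totals = []
    running = 0
    for length in turn_lengths:
        running += length
        totals.append(running)
    return totals


# Five turns of 100 tokens each.
print(tokens_processed_per_turn([100] * 5))  # [100, 200, 300, 400, 500]
```

Starting a new conversation resets the running total, which is why it restores the response speed.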

-

Why is the AI output blank? It may be caused by external cyberattacks on the service.

-

How long are conversation records kept? Conversations made through the API are saved locally and kept indefinitely (unless manually deleted). The retention period for the web version and the App is currently unknown.

-

Do the web version and the App use the 671B model? Yes.

-

Can tools such as Chatbox connect directly to the API? Currently, they cannot connect directly to the official API.

The above is the detailed content of Summary of FAQs for DeepSeek usage. For more information, please follow other related articles on the PHP Chinese website!

Chess Lessons Are Coming to DuolingoApr 24, 2025 am 10:41 AM

Chess Lessons Are Coming to DuolingoApr 24, 2025 am 10:41 AMDuolingo, renowned for its language-learning platform, is expanding its offerings! Later this month, iOS users will gain access to new chess lessons integrated seamlessly into the familiar Duolingo interface. The lessons, designed for beginners, wi

Blue Check Verification Is Coming to BlueskyApr 24, 2025 am 10:17 AM

Blue Check Verification Is Coming to BlueskyApr 24, 2025 am 10:17 AMBluesky Echoes Twitter's Past: Introducing Official Verification Bluesky, the decentralized social media platform, is mirroring Twitter's past by introducing an official verification process. This will supplement the existing self-verification optio

Google Photos Now Lets You Convert Standard Photos to Ultra HDRApr 24, 2025 am 10:15 AM

Google Photos Now Lets You Convert Standard Photos to Ultra HDRApr 24, 2025 am 10:15 AMUltra HDR: Google Photos' New Image Enhancement Ultra HDR is a cutting-edge image format offering superior visual quality. Like standard HDR, it packs more data, resulting in brighter highlights, deeper shadows, and richer colors. The key differenc

You Should Try Instagram's New 'Blend' Feature for a Custom Reels FeedApr 23, 2025 am 11:35 AM

You Should Try Instagram's New 'Blend' Feature for a Custom Reels FeedApr 23, 2025 am 11:35 AMInstagram and Spotify now offer personalized "Blend" features to enhance social sharing. Instagram's Blend, accessible only through the mobile app, creates custom daily Reels feeds for individual or group chats. Spotify's Blend mirrors th

Instagram Is Using AI to Automatically Enroll Minors Into 'Teen Accounts'Apr 23, 2025 am 10:00 AM

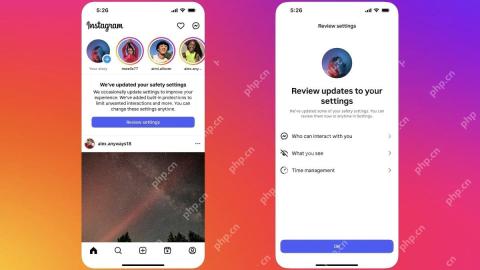

Instagram Is Using AI to Automatically Enroll Minors Into 'Teen Accounts'Apr 23, 2025 am 10:00 AMMeta is cracking down on underage Instagram users. Following the introduction of "Teen Accounts" last year, featuring restrictions for users under 18, Meta has expanded these restrictions to Facebook and Messenger, and is now enhancing its

Should I Use an Agent for Taobao?Apr 22, 2025 pm 12:04 PM

Should I Use an Agent for Taobao?Apr 22, 2025 pm 12:04 PMNavigating Taobao: Why a Taobao Agent Like BuckyDrop Is Essential for Global Shoppers The popularity of Taobao, a massive Chinese e-commerce platform, presents a challenge for non-Chinese speakers or those outside China. Language barriers, payment c

How Can I Avoid Buying Fake Products On Taobao?Apr 22, 2025 pm 12:03 PM

How Can I Avoid Buying Fake Products On Taobao?Apr 22, 2025 pm 12:03 PMNavigating the vast marketplace of Taobao requires vigilance against counterfeit goods. This article provides practical tips to help you identify and avoid fake products, ensuring a safe and satisfying shopping experience. Scrutinize Seller Feedbac

How to Buy from Taobao in the US?Apr 22, 2025 pm 12:00 PM

How to Buy from Taobao in the US?Apr 22, 2025 pm 12:00 PMNavigating Taobao: A Guide for US B2B Buyers Taobao, China's massive eCommerce platform, offers US businesses access to a vast selection of products at competitive prices. However, language barriers, payment complexities, and shipping challenges can

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

SublimeText3 English version

Recommended: Win version, supports code prompts!

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft

PhpStorm Mac version

The latest (2018.2.1) professional PHP integrated development tool

WebStorm Mac version

Useful JavaScript development tools

Dreamweaver CS6

Visual web development tools