Python Cache: How to Speed Up Your Code with Effective Caching

This blog was initially posted to Crawlbase Blog.

Efficient and fast code is important for creating a great user experience in software applications. Users don’t like waiting for slow responses, whether it’s loading a webpage, training a machine learning model, or running a script. One way to speed up your code is caching.

The purpose of caching is to temporarily store frequently used data so that your program can access it quickly instead of recalculating or re-fetching it every time. Caching can speed up response times, reduce system load, and improve user experience.

This blog covers the principles of caching, its role, use cases and strategies, along with real-world examples of caching in Python. Let’s get started!

Implementing Caching in Python

Caching can be done in Python in multiple ways. Let’s look at two common methods: using a manual decorator for caching and Python’s built-in functools.lru_cache.

1. Manual Decorator for Caching

A decorator is a function that wraps another function. We can create a caching decorator that stores the results of function calls in memory and returns the cached result when the function is called again with the same input. Here's an example:

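```python
import functools

import requests

# Manual caching decorator: results are stored in a dict keyed by the
# positional arguments, so every argument must be hashable
def memoize(func):
    cache = {}

    @functools.wraps(func)  # keep the wrapped function's name and docstring
    def wrapper(*args):
        if args in cache:
            return cache[args]  # cache hit: skip the expensive call
        result = func(*args)    # cache miss: do the work once...
        cache[args] = result    # ...and remember the result
        return result

    return wrapper

# Function to get data from a URL
@memoize
def get_html(url):
    response = requests.get(url, timeout=10)  # avoid hanging forever on a slow server
    return response.text

# Example usage
print(get_html('https://crawlbase.com'))
```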

In this example, the first time get_html is called, it fetches the data from the URL and caches it. On subsequent calls with the same URL, the cached result is returned.

2. Using Python’s functools.lru_cache

Python provides a built-in caching mechanism, lru_cache, in the functools module. This decorator caches the results of function calls and discards the least recently used entries once the cache holds maxsize items. Here's how to use it:

```python
from functools import lru_cache

@lru_cache(maxsize=128)
def expensive_computation(x, y):
    return x * y

# Example usage
print(expensive_computation(5, 6))
```

In this example, lru_cache caches the result of expensive_computation. If the function is called again with the same arguments, it returns the cached result instead of recalculating.
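Functions decorated with lru_cache also expose cache_info() and cache_clear(), which make the eviction behavior easy to observe. This sketch shrinks maxsize to 2, a value chosen only so that evictions happen after a few calls, and traces which entries survive.

```python
from functools import lru_cache

@lru_cache(maxsize=2)  # deliberately tiny so evictions are easy to see
def expensive_computation(x, y):
    return x * y

expensive_computation(1, 1)  # miss: cache holds (1, 1)
expensive_computation(2, 2)  # miss: cache holds (1, 1), (2, 2)
expensive_computation(1, 1)  # hit: (1, 1) becomes the most recently used
expensive_computation(3, 3)  # miss: cache is full, so (2, 2) is evicted
expensive_computation(2, 2)  # miss again: (2, 2) was evicted; now (1, 1) goes
print(expensive_computation.cache_info())
# CacheInfo(hits=1, misses=4, maxsize=2, currsize=2)

expensive_computation.cache_clear()  # empty the cache and reset the statistics
print(expensive_computation.cache_info())
# CacheInfo(hits=0, misses=0, maxsize=2, currsize=0)
```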

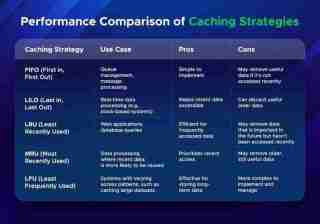

Performance Comparison of Caching Strategies

When choosing a caching strategy, you need to consider how it performs under different conditions. A strategy's performance depends on its hit rate (how often requested data is found in the cache) and on the size of the cache.
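One way to see how hit rate and cache size interact is to measure them. The sketch below replays a hypothetical access pattern (keys 0 to 4 requested in a repeating cycle) through lru_cache at several maxsize values and computes the hit rate from cache_info(). The lookup function is a stand-in for real work, not part of the original article.

```python
from functools import lru_cache

def lookup(key):
    """Hypothetical stand-in for an expensive operation."""
    return key * 2

def hit_rate(maxsize, accesses):
    """Replay an access pattern through a fresh LRU cache and return its hit rate."""
    cached = lru_cache(maxsize=maxsize)(lookup)  # new, empty cache for every run
    for key in accesses:
        cached(key)
    info = cached.cache_info()
    return info.hits / (info.hits + info.misses)

# Hypothetical workload: keys 0-4 requested in a repeating cycle
accesses = [0, 1, 2, 3, 4] * 20

for size in (2, 4, 5, 8):
    print(f"maxsize={size}: hit rate {hit_rate(size, accesses):.0%}")
# maxsize=2: hit rate 0%
# maxsize=4: hit rate 0%
# maxsize=5: hit rate 95%
# maxsize=8: hit rate 95%
```

A cyclic scan is a worst case for LRU: while the cache is even one slot smaller than the set of keys in rotation, every key is evicted before it comes around again, so the hit rate stays at zero until the whole working set fits.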

Here’s a comparison of common caching strategies:

Choosing the right caching strategy depends on your application’s data access patterns and performance needs.
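The differences between FIFO (first in, first out), LRU (least recently used) and LFU (least frequently used) show up most clearly when the same requests are replayed through each policy. The following is a minimal sketch, not a production cache: the class names, the access() method and both workloads are hypothetical, and the caches track keys only because only hits and misses matter here.

```python
from collections import OrderedDict

class FIFOCache:
    """Evicts the key that was inserted earliest, however often it is used."""
    def __init__(self, capacity):
        self.capacity = capacity
        self.keys = OrderedDict()  # front of the dict = oldest insertion

    def access(self, key):
        """Return True on a hit; on a miss, insert the key (evicting if full)."""
        if key in self.keys:
            return True  # FIFO ignores hits when deciding what to evict
        if len(self.keys) >= self.capacity:
            self.keys.popitem(last=False)  # evict the oldest insertion
        self.keys[key] = None
        return False

class LRUCache(FIFOCache):
    """Evicts the key that was used least recently."""
    def access(self, key):
        if key in self.keys:
            self.keys.move_to_end(key)  # a hit makes the key most recently used
            return True
        return super().access(key)  # a miss evicts the front key, the LRU one

class LFUCache:
    """Evicts the key with the fewest accesses since it was cached (ties: oldest)."""
    def __init__(self, capacity):
        self.capacity = capacity
        self.counts = {}  # key -> access count; dicts keep insertion order

    def access(self, key):
        if key in self.counts:
            self.counts[key] += 1
            return True
        if len(self.counts) >= self.capacity:
            victim = min(self.counts, key=self.counts.get)  # O(n) scan
            del self.counts[victim]
        self.counts[key] = 1
        return False

def count_hits(cache, accesses):
    return sum(cache.access(key) for key in accesses)

# Two hypothetical workloads, each replayed against a 2-slot cache
workloads = {
    "hot key": ["a", "b", "a", "c", "d", "a", "e", "f", "a"],
    "shifting": ["a", "a", "a", "b", "c", "b", "c", "b", "c"],
}
for name, accesses in workloads.items():
    hits = {cls.__name__: count_hits(cls(2), accesses)
            for cls in (FIFOCache, LRUCache, LFUCache)}
    print(name, hits)
# hot key {'FIFOCache': 1, 'LRUCache': 1, 'LFUCache': 3}
# shifting {'FIFOCache': 6, 'LRUCache': 6, 'LFUCache': 2}
```

In the hot-key workload, LFU keeps "a" because it is requested most often, while FIFO and LRU keep evicting it in favor of one-off keys. In the shifting workload, LFU clings to "a" after it stops being requested, so "b" and "c" keep evicting each other, while FIFO and LRU adapt after a single miss. The min() scan makes LFU eviction O(n), which is acceptable for a demonstration but not for a large cache.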

Final Thoughts

Caching can be very useful for your apps: it reduces data retrieval time and system load. Whether you’re building a web app or a machine learning project, or simply want to speed up your system, smart caching can make your code run faster.

Caching strategies such as FIFO (first in, first out), LRU (least recently used) and LFU (least frequently used) suit different use cases. For example, LRU works well for web apps where recently accessed data is likely to be requested again soon, whereas LFU suits programs where some items stay popular over a long period.

Implementing caching correctly lets you build faster, more efficient apps with better performance and user experience.
