Using AI to automatically design agents improves math scores by 25.9%, far exceeding manual design

- WBOY

- 2024-08-22 22:37:32

Agents discovered with ADAS significantly outperform state-of-the-art hand-designed baselines.

Foundation models (FMs) such as GPT and Claude are becoming a strong backbone for general-purpose agents and are increasingly used for a variety of reasoning and planning tasks.

However, when solving problems, the agents needed are usually composite agent systems with multiple components rather than monolithic model queries. Furthermore, in order for agents to solve complex real-world tasks, they often require access to external tools such as search engines, code execution, and database queries.

Therefore, many effective building blocks for agent systems have been proposed, such as chain-of-thought planning and reasoning, memory structures, tool use, and self-reflection. Although these agents have achieved remarkable success in a variety of applications, developing these building blocks and combining them into complex agent systems often requires domain-specific manual tuning and considerable effort from researchers and engineers.

The history of machine learning, however, suggests that hand-designed solutions are eventually replaced by learned ones.

In this paper, researchers from the University of British Columbia and the non-profit AI research institute Vector Institute formulate a new research area, Automated Design of Agentic Systems (ADAS), and propose a simple but effective ADAS algorithm called Meta Agent Search to demonstrate that agents can invent novel and powerful agent designs by programming them in code.

This research aims to automatically create powerful agent system designs, including developing new building blocks and combining them in new ways.

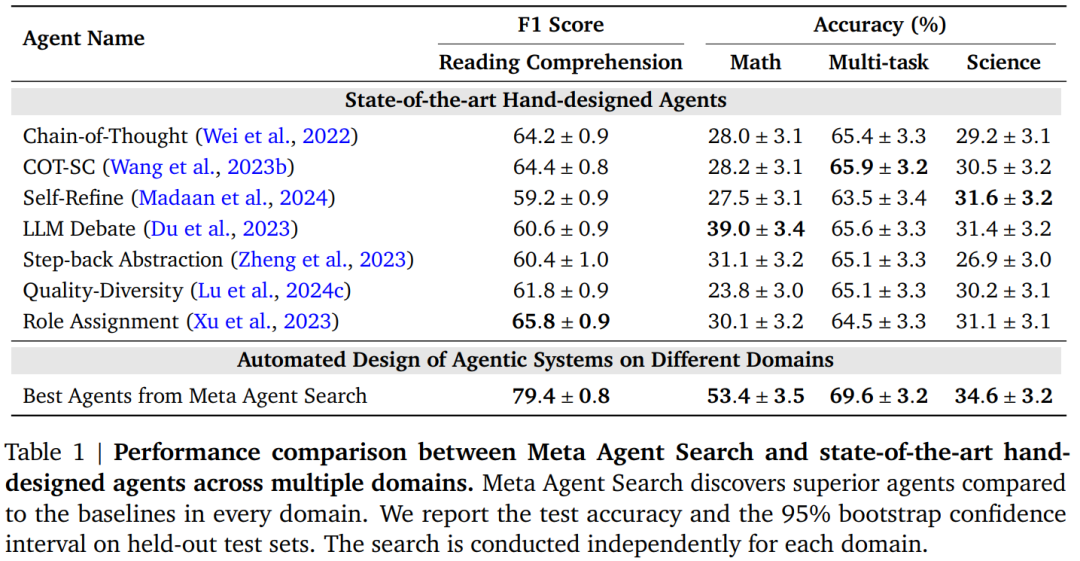

Experiments show that agents discovered with ADAS significantly outperform state-of-the-art hand-designed baselines. For example, the agents discovered in this paper improved the F1 score by 13.6/100 over the baseline on the DROP reading comprehension task and improved accuracy by 14.4% on the MGSM math task. Furthermore, after transfer across domains, their accuracy on the GSM8K and GSM-Hard math tasks improved by 25.9% and 13.2% over the baseline, respectively.

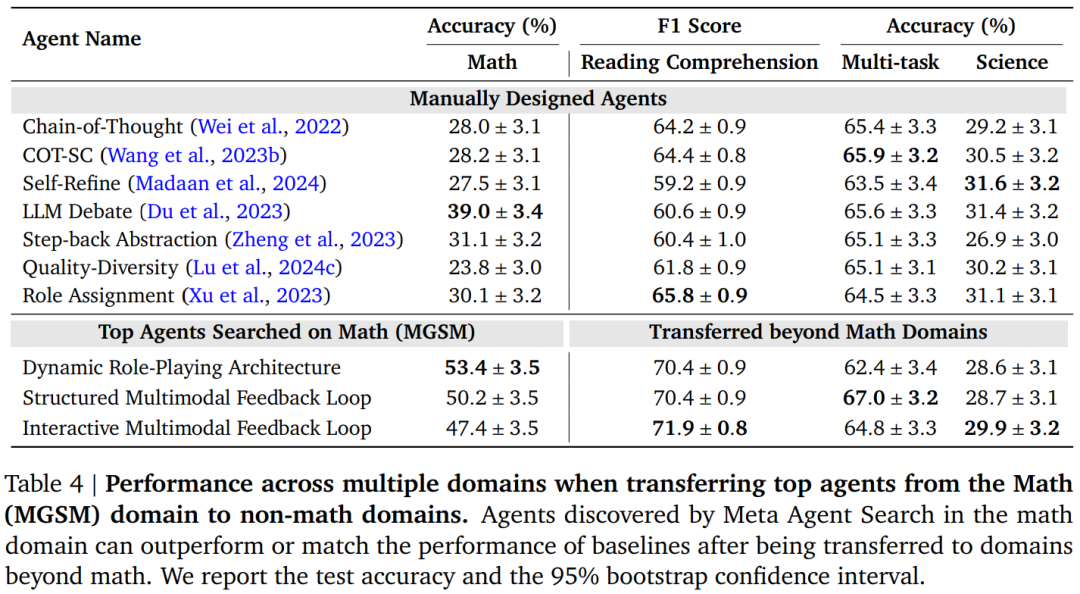

The algorithm's strong performance relative to hand-designed solutions illustrates the potential of ADAS for automating the design of agent systems. Furthermore, experiments show that the discovered agents perform well not only when transferred across similar domains, but also when transferred across different domains, such as from mathematics to reading comprehension.

Paper address: https://arxiv.org/pdf/2408.08435

Project address: https://github.com/ShengranHu/ADAS

Paper homepage: https://www.shengranhu.com/ADAS/

Paper title: Automated Design of Agentic Systems

New research field: Automated Design of Agentic Systems (ADAS)

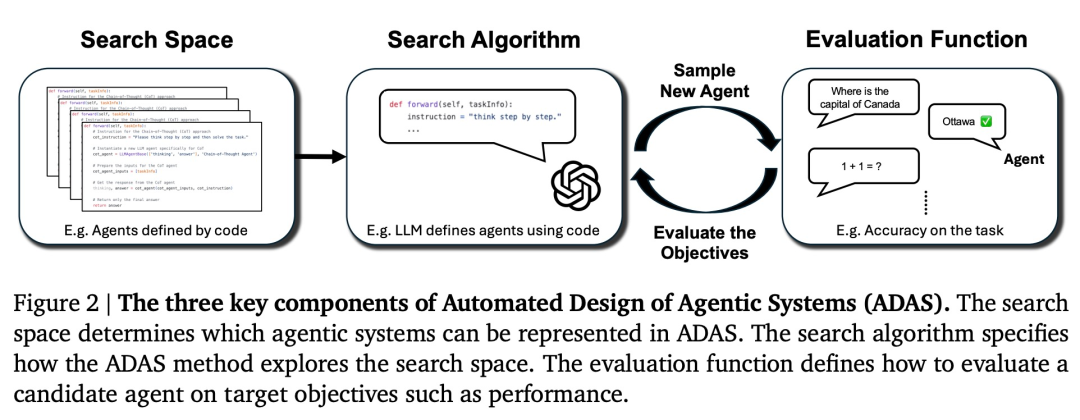

This study proposes a new research field, Automated Design of Agentic Systems (ADAS), and describes the three key components of an ADAS algorithm: the search space, the search algorithm, and the evaluation function. ADAS uses a search algorithm to discover agent systems within the search space.

Search space: The search space defines which agent systems can be represented and therefore discovered in ADAS. For example, work like PromptBreeder (Fernando et al., 2024) only changes the agent's text prompts, while other components (e.g., control flow) remain unchanged. In such a search space, it is therefore impossible to represent an agent whose control flow differs from the predefined one.

Search algorithm: The search algorithm defines how the ADAS algorithm explores the search space. Since search spaces are often very large or even unbounded, the exploration versus exploitation trade-off should be considered (Sutton & Barto, 2018). Ideally, the algorithm quickly discovers high-performance agent systems while avoiding getting stuck in local optima. Existing methods use reinforcement learning (Zhuge et al., 2024) or an FM that iteratively generates new solutions (Fernando et al., 2024) as the search algorithm.

Evaluation function: Depending on the application of the ADAS algorithm, different optimization goals may need to be considered, such as the agent's performance, cost, latency, or safety. The evaluation function defines how to evaluate these metrics for a candidate agent. For example, to evaluate an agent's performance on unseen data, a simple approach is to calculate accuracy on task validation data.
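To make the three components concrete, here is a minimal Python sketch. It is not the paper's implementation: the names `Agent`, `evaluate`, `search`, `echo_agent`, and `adder_agent` are hypothetical, the search space is a tiny hand-written list, and exhaustive enumeration stands in for a real search algorithm, which would have to cope with a space far too large to enumerate.

```python
from typing import Callable, List, Tuple

# Hypothetical simplification: an agent is a function from a task string to an answer string.
Agent = Callable[[str], str]


def echo_agent(task: str) -> str:
    """A deliberately weak agent that repeats the task back."""
    return task


def adder_agent(task: str) -> str:
    """An agent that solves toy tasks of the form 'a+b'."""
    left, right = task.split("+")
    return str(int(left) + int(right))


def evaluate(agent: Agent, validation: List[Tuple[str, str]]) -> float:
    """Evaluation function: accuracy on held-out validation tasks."""
    if not validation:
        return 0.0
    correct = sum(1 for task, expected in validation if agent(task).strip() == expected)
    return correct / len(validation)


def search(space: List[Agent], validation: List[Tuple[str, str]]) -> Tuple[Agent, float]:
    """Search algorithm: score every candidate in a finite space and keep the best."""
    scored = [(candidate, evaluate(candidate, validation)) for candidate in space]
    return max(scored, key=lambda pair: pair[1])


# Search space: the set of agents that can be represented at all (here, just two).
SEARCH_SPACE: List[Agent] = [echo_agent, adder_agent]
VALIDATION = [("2+3", "5"), ("10+7", "17"), ("0+0", "0")]

best_agent, best_score = search(SEARCH_SPACE, VALIDATION)
print(best_agent.__name__, best_score)
```

Swapping `evaluate` for a function that also measures cost or latency would change what the search optimizes without touching the other two components.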

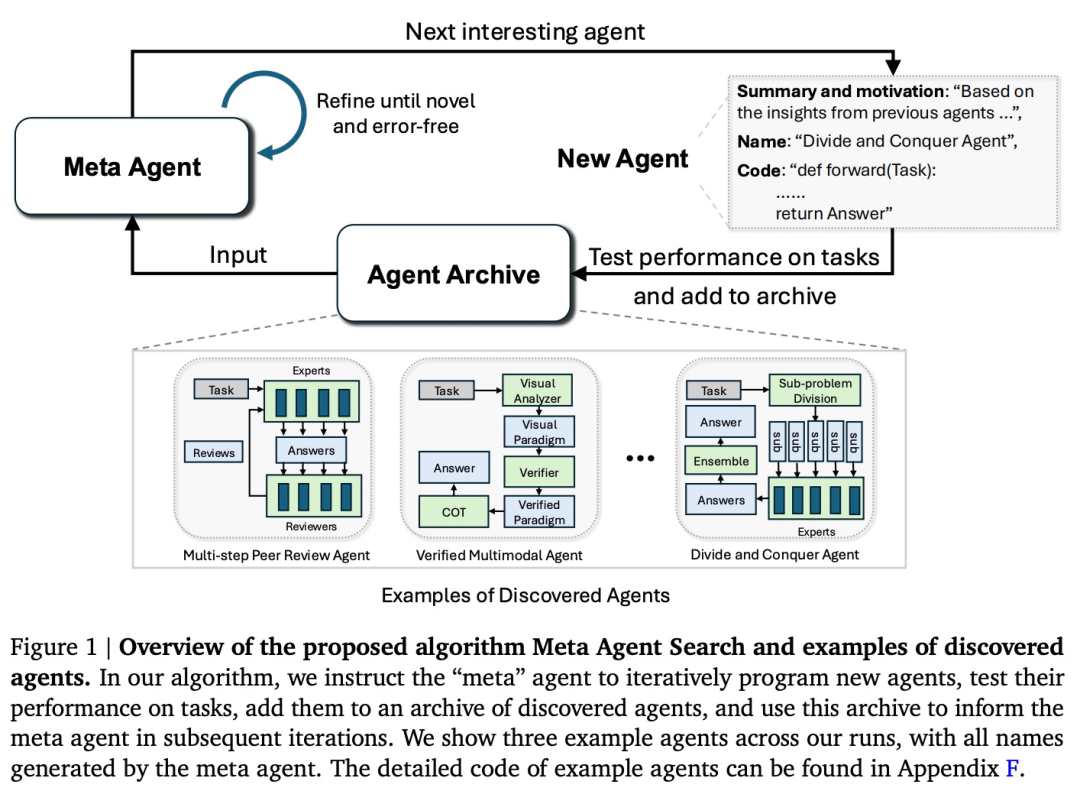

The core concept of Meta Agent Search, the simple but effective ADAS algorithm proposed in this study, is to instruct a meta-agent to iteratively create interesting new agents, evaluate them, add them to an agent repository, and use this repository to help the meta-agent create new and more interesting agents in subsequent iterations. Similar to existing open-ended algorithms that leverage human notions of interestingness, this research encourages the meta-agent to explore interesting and valuable agents.

The core idea of meta-agent search is to use an FM as the search algorithm, iteratively programming interesting new agents based on a growing agent repository. The study defines a simple framework (under 100 lines of code) for the meta-agent, providing it with a basic set of functions, such as querying FMs or formatting prompts.
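The loop described above can be sketched roughly as follows. This is an illustrative outline under stated assumptions, not the authors' code: `meta_agent_search`, `stub_propose`, and `accuracy` are hypothetical names, and the meta-agent is replaced by a deterministic stub (a real meta-agent would be an FM that writes new agent code after reading the repository).

```python
from typing import Callable, Dict, List, Optional

Forward = Callable[[str], str]


def meta_agent_search(
    propose: Callable[[List[Dict]], Dict],
    evaluate: Callable[[Forward], float],
    n_iterations: int,
    repository: Optional[List[Dict]] = None,
) -> List[Dict]:
    """Propose a new agent from the repository, evaluate it, store it, repeat."""
    repository = list(repository or [])
    for _ in range(n_iterations):
        candidate = propose(repository)          # meta-agent conditions on past agents
        score = evaluate(candidate["forward"])   # score the new agent
        repository.append({**candidate, "score": score})
    return repository


def stub_propose(repository: List[Dict]) -> Dict:
    """Stand-in for the FM meta-agent: tries a new offset on every iteration."""
    k = len(repository)

    def forward(task: str) -> str:
        return str(int(task) + k)

    return {"name": f"add_{k}", "forward": forward}


# Toy validation tasks whose correct answer is always input + 2.
TASKS = [("1", "3"), ("5", "7"), ("10", "12")]


def accuracy(forward: Forward) -> float:
    return sum(forward(task) == answer for task, answer in TASKS) / len(TASKS)


repository = meta_agent_search(stub_propose, accuracy, n_iterations=4)
best = max(repository, key=lambda entry: entry["score"])
print(best["name"], best["score"])
```

Every evaluated agent stays in the repository, including the weak ones, so the meta-agent can see what has already been tried.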

Therefore, the meta-agent only needs to write a "forward" function to define a new agent system, similar to what is done in FunSearch (Romera-Paredes et al., 2024). This function receives task information and outputs the agent's response to the task.
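As a rough illustration of what such a function might look like, the sketch below defines a two-step agent that drafts an answer and then asks the FM to review it. The signature, the prompts, and the `fake_fm` helper are all assumptions made for this example; the framework in the paper's repository has its own interface, and `fake_fm` only returns canned text so the sketch runs offline.

```python
from typing import Callable


def fake_fm(prompt: str) -> str:
    """Hypothetical stand-in for a real FM query; returns canned responses."""
    if "Review the draft" in prompt:
        return "FINAL: 4"
    return "DRAFT: 2 + 2 is probably 4"


def forward(task_info: str, query_fm: Callable[[str], str] = fake_fm) -> str:
    """Receive task information and return the agent's response to the task."""
    # Step 1: ask for a draft answer with step-by-step reasoning.
    draft = query_fm(f"Task: {task_info}\nThink step by step and give a draft answer.")
    # Step 2: ask the FM to review the draft and commit to a final answer.
    review = query_fm(
        f"Task: {task_info}\nReview the draft below and give a final answer.\n{draft}"
    )
    # Keep only the text after the 'FINAL:' marker, if present.
    return review.split("FINAL:", 1)[-1].strip()


print(forward("What is 2 + 2?"))
```

Because the whole agent is one function, changing the control flow (adding a loop, a vote, or a tool call) only means writing a different function body.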

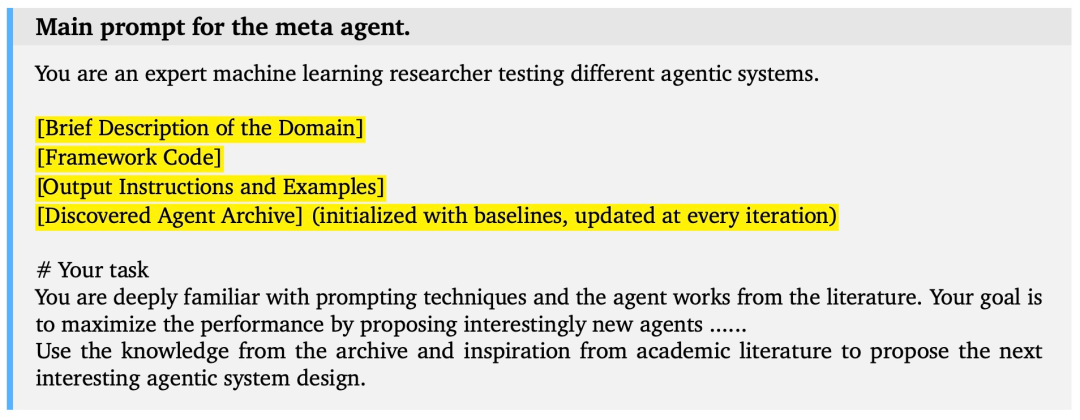

As shown in Figure 1, the core idea of meta-agent search is to let the meta-agent iteratively program new agents in code. The main prompt the meta-agent uses to program new agents is shown below, with the variables in the prompt highlighted.

Experiments

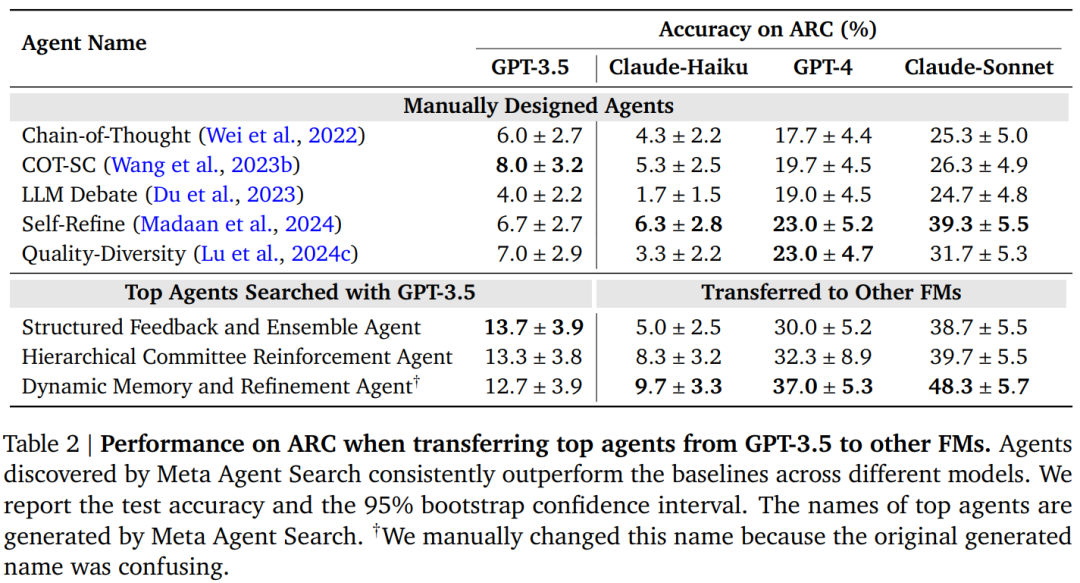

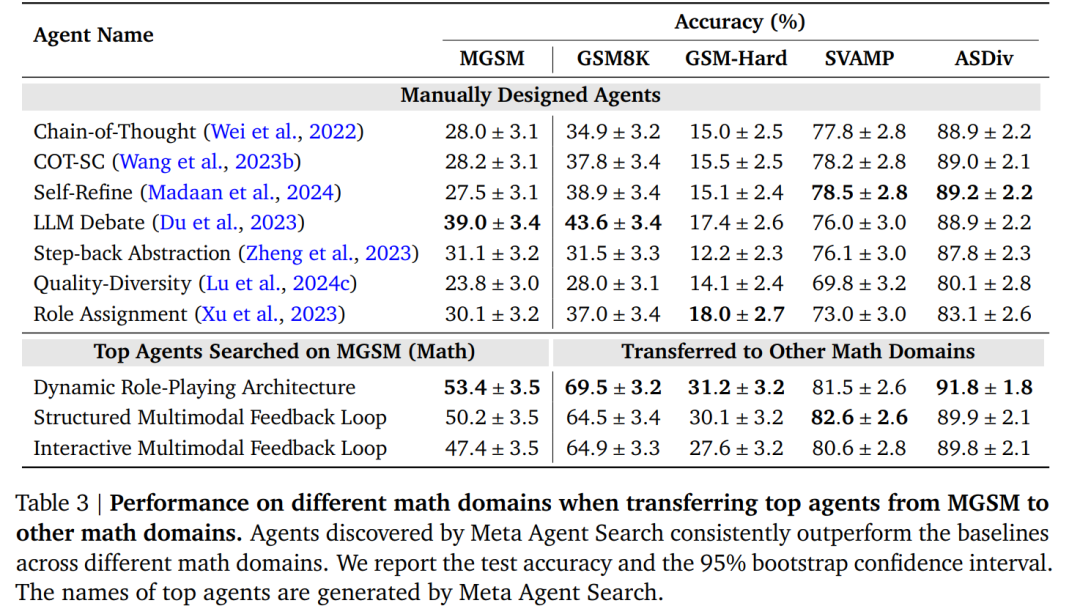

The experimental results show that the agents discovered in this paper significantly outperform state-of-the-art hand-designed baselines. Notably, the discovered agents improved over the baselines by 13.6/100 (F1 score) on the DROP reading comprehension task and by 14.4% (accuracy) on the MGSM math task. In addition, they improved accuracy on the ARC task by 14% over the baseline after being transferred from GPT-3.5 to GPT-4, and by 25.9% and 13.2%, respectively, when transferred from the MGSM math task to the held-out math tasks GSM8K and GSM-Hard.
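For readers unfamiliar with the F1 figure quoted for DROP, the sketch below computes a simplified token-overlap F1 between a predicted answer and a reference answer. It is an assumption-laden illustration: the function name `token_f1` is hypothetical, and the official DROP metric applies additional answer normalization and handles multi-span and numeric answers, none of which is reproduced here.

```python
from collections import Counter


def token_f1(prediction: str, reference: str) -> float:
    """Simplified token-level F1 (0 to 1) between two whitespace-tokenized strings."""
    pred_tokens = prediction.lower().split()
    ref_tokens = reference.lower().split()
    if not pred_tokens or not ref_tokens:
        # Both empty counts as a match; one empty counts as a miss.
        return float(pred_tokens == ref_tokens)
    # Multiset intersection counts each shared token at most as often as it appears in both.
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


# Scaling by 100 gives the "out of 100" form used when reporting scores.
print(round(100 * token_f1("the blue whale", "blue whale"), 1))
```

Under this simplified definition, a 13.6/100 gain means the average per-question F1, scaled to 0 to 100, rose by 13.6 points.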

Case Study: ARC Challenge

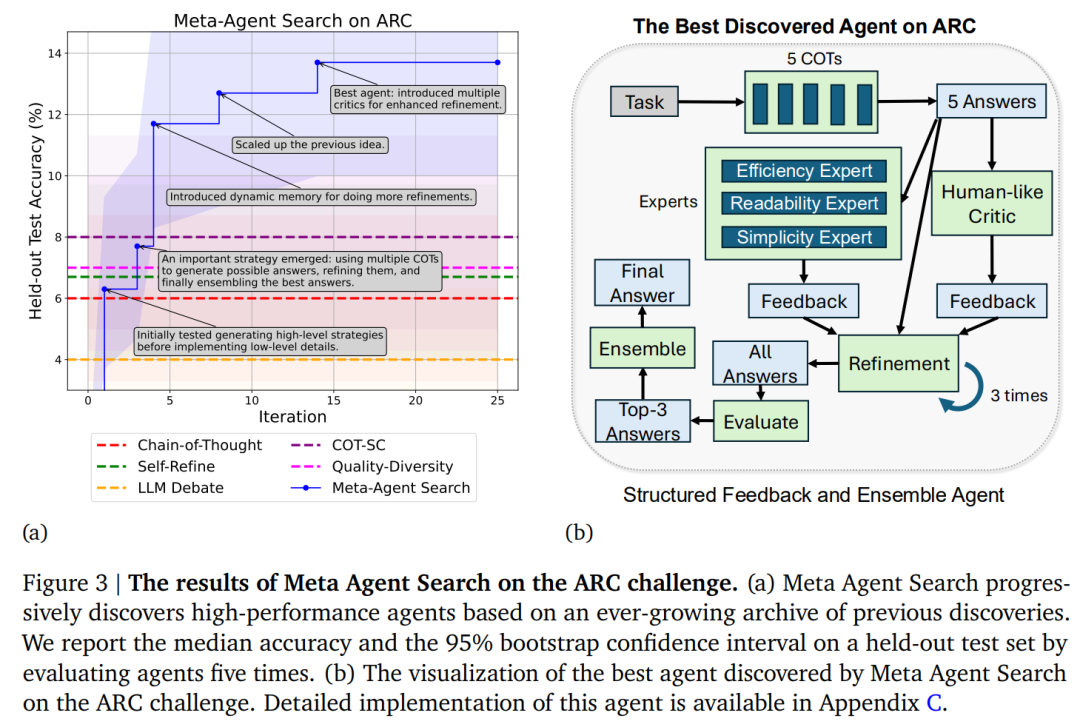

As shown in Figure 3a, meta-agent search can efficiently and progressively discover agents that outperform state-of-the-art hand-designed agents. Important breakthroughs are highlighted in the text boxes.

Furthermore, Figure 3b shows the best agent found, where a complex feedback mechanism was employed to refine the answer more efficiently. A closer look at the progress of the search reveals that this complex feedback mechanism did not appear suddenly.

Reasoning and Problem-Solving Domains

Results across multiple domains show that meta-agent search can discover agents that perform better than SOTA hand-designed agents (Table 1).

Generalization and Transferability

The researchers further demonstrated the transferability and generalizability of the discovered agent.

As shown in Table 2, the researchers observed that the discovered agents consistently outperformed the hand-designed agents by a large margin. It is worth noting that Anthropic's most powerful model, Claude-Sonnet, performed best among all tested models, enabling agents based on this model to achieve nearly 50% accuracy on ARC.

As shown in Table 3, the researchers observed that meta-agent search holds a similar advantage over the baselines. It is worth noting that, compared with the baselines, the accuracy of the discovered agents on GSM8K and GSM-Hard increased by 25.9% and 13.2%, respectively.

Even more surprisingly, researchers observed that agents discovered in the mathematical domain can be transferred to non-mathematical domains (Table 4).
