Ten aspects to strengthen Linux container security

Container security solutions need to consider different technology stacks and different stages of the container life cycle.

1. Container operating system and multi-tenancy
2. Container content (using trusted sources)
3. Container registries (encrypted access to container images)
4. Build process security
5. Control what can be deployed in the cluster
6. Container orchestration: strengthening container platform security
7. Network isolation
8. Storage
9. API management, endpoint security and single sign-on (SSO)
10. Role and access control management

Containers provide a simple way to package applications and deploy them seamlessly from development and test environments to production environments. They help ensure consistency across a variety of environments, including physical servers, virtual machines (VMs), and private or public clouds. Because of these benefits, leading organizations are rapidly adopting containers to develop and manage applications that add business value.

Enterprise applications require strong security. Anyone running basic services in containers will ask: "Are containers safe?", "Can our applications trust containers?"

Securing a container is very similar to securing any running process. Before you deploy and run containers, you need to consider the security of your entire solution technology stack. You also need to consider security throughout the full lifecycle of your application and container.

The following ten aspects cover how to strengthen container security at different levels, across different technology stacks, and at different life cycle stages.

For developers, containers make it easier to build and upgrade applications, because an application can be treated as a single unit. Deploying multi-tenant applications on shared hosts also maximizes server resource utilization. Containers make it easy to deploy multiple applications on a single host and to start and stop individual containers as needed. To take full advantage of this packaging and deployment technology, operations teams need the right environment for running containers. Operators need an operating system that can secure containers at the boundaries, isolating the host kernel from the containers and keeping containers safe from each other.

Containers are Linux processes with isolation and resource constraints, which lets you run sandboxed applications on a shared host kernel. You should secure your containers the same way you would secure any running process on Linux. Dropping privileges is important and remains a best practice. Better still, create containers with as few privileges as possible in the first place. Containers should run as a normal user, not root. Next, secure your containers by taking advantage of the multiple levels of security features available in Linux: Linux namespaces, Security-Enhanced Linux (SELinux), cgroups, capabilities, and secure computing mode (seccomp).
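The least-privilege advice above can be sketched as a small helper that assembles a `docker run` command line. This is only an illustration: the helper name `hardened_run_args`, the default UID/GID of 1000, and the image name in the usage note are assumptions, not part of the original article. The Docker flags themselves (`--user`, `--cap-drop`, `--cap-add`, `--security-opt no-new-privileges`, `--read-only`) are standard options.

```python
from typing import List, Sequence


def hardened_run_args(image: str, uid: int = 1000, gid: int = 1000,
                      keep_caps: Sequence[str] = ()) -> List[str]:
    """Build a `docker run` argument list that applies least privilege.

    Hypothetical helper for illustration; it only builds the argument
    list and does not start a container.
    """
    if uid == 0:
        raise ValueError("refusing to run the container as root (uid 0)")
    args = [
        "docker", "run", "--rm",
        "--user", f"{uid}:{gid}",               # run as a normal user, not root
        "--cap-drop", "ALL",                    # start with no Linux capabilities
        "--security-opt", "no-new-privileges",  # block privilege escalation
        "--read-only",                          # read-only root filesystem
    ]
    for cap in keep_caps:
        # Add back only the capabilities the application really needs.
        args += ["--cap-add", cap]
    args.append(image)
    return args
```

For example, `hardened_run_args("registry.example.com/team/app:1.0", keep_caps=["NET_BIND_SERVICE"])` returns a list that could be passed to `subprocess.run`. SELinux labels and a seccomp profile would be layered on top of this by the host configuration.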

What does security mean for the contents of a container? For some time, applications and infrastructure have been composed of off-the-shelf components. Many come from open source software, such as the Linux operating system, Apache web server, Red Hat JBoss Enterprise Application Platform, PostgreSQL and Node.js. Containerized versions of these packages are now readily available, so you don't need to build your own. However, as with any code downloaded from an external source, you need to know where the packages came from, who created them, and whether they contain malicious code.

Your team builds containers on top of downloaded public container images, so managing access to those images and how they are updated is key. Downloaded container images, internally built images, and other types of binaries need to be managed in the same way. Many private registries support storing container images. Select a private registry that helps automate policies for the use of the container images it stores.
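One simple policy of this kind is an allowlist of registries that images may be pulled from. The sketch below is an assumption-laden illustration: `registry.example.com` is a hypothetical private registry, and the parsing follows the common Docker convention that the first path component is a registry host only if it contains a dot or colon or is `localhost` (otherwise the image is taken to come from Docker Hub).

```python
from typing import AbstractSet

# Hypothetical allowlist; replace with the registries your organization trusts.
TRUSTED_REGISTRIES = frozenset({"registry.example.com"})


def registry_of(image_ref: str) -> str:
    """Return the registry host of an image reference.

    References without an explicit host (e.g. "nginx:1.25") are treated
    as Docker Hub images, following the usual Docker convention.
    """
    first, sep, _rest = image_ref.partition("/")
    if sep and ("." in first or ":" in first or first == "localhost"):
        return first
    return "docker.io"


def is_trusted(image_ref: str,
               trusted: AbstractSet[str] = TRUSTED_REGISTRIES) -> bool:
    """Allow an image only if it comes from an allowlisted registry."""
    return registry_of(image_ref) in trusted
```

A check like `is_trusted("nginx:1.25")` would be rejected under this allowlist, while an image mirrored into the private registry would pass. Real registries typically enforce such rules server-side rather than in client code.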

In a containerized environment, the software build is the life cycle stage in which application code is integrated with the runtime. Managing this build process is key to securing your software stack. Adhere to the principle of "build once, deploy everywhere" so that the output of the build process is exactly what gets deployed in production. It is also important to keep containers immutable: do not patch running containers; instead, rebuild and redeploy them. Whether you work in a highly regulated industry or simply want to optimize your team's work, you need to design your container image management and build processes to use container layers to separate control, so that:

The operations team manages the base images

The architecture team manages middleware, runtime, database and other solutions

The development team only focuses on the application layer and code

Finally, sign the custom containers to ensure they are not tampered with between build and deployment.
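The idea of detecting tampering between build and deployment can be illustrated with a digest plus a keyed signature. This is a deliberately simplified stand-in: real image signing tools use asymmetric keys, whereas this sketch uses an HMAC with a shared secret purely to show the verify-before-deploy step. All function names here are hypothetical.

```python
import hashlib
import hmac


def image_digest(image_bytes: bytes) -> str:
    """Content digest of an image archive, in the familiar sha256:<hex> form."""
    return "sha256:" + hashlib.sha256(image_bytes).hexdigest()


def sign_digest(digest: str, key: bytes) -> str:
    """Sign a digest at build time (HMAC-SHA256 as a simplified stand-in)."""
    return hmac.new(key, digest.encode("utf-8"), hashlib.sha256).hexdigest()


def verify_image(image_bytes: bytes, signature: str, key: bytes) -> bool:
    """Recompute the signature at deploy time and compare in constant time."""
    expected = sign_digest(image_digest(image_bytes), key)
    return hmac.compare_digest(expected, signature)
```

At build time the pipeline would call `sign_digest(image_digest(data), key)` and store the result next to the image. At deploy time `verify_image` returns `False` if even one byte of the image has changed.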

In case any issues occur during the build process, or vulnerabilities are discovered after deploying an image, another layer of security needs to be added with automated, policy-based deployment.

Consider the three container image layers used to build applications: core, middleware, and application. If a problem is discovered in the core image, that image is rebuilt. Once the build is complete, the image is pushed to the container platform's registry. The platform detects the change to the image. For builds that depend on this image and have triggers defined, the platform automatically rebuilds the application image and integrates the fixed libraries.

Once the build is complete, the image is pushed to the container platform's internal registry. Changes to images in the internal registry are detected immediately, and updated images are deployed automatically through triggers defined in the application, ensuring that the code running in production always matches the most recently updated image. All these features work together to integrate security capabilities into your continuous integration and continuous deployment (CI/CD) process.
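The trigger-driven rebuild described above amounts to walking a dependency graph of images. The following is a minimal sketch, assuming each image has exactly one base image; the image names in `BASE_OF` are made up for illustration and do not come from any particular platform.

```python
from collections import deque
from typing import Dict, List

# Hypothetical layering: child image -> the image it is built on.
BASE_OF: Dict[str, str] = {
    "middleware": "core",
    "tools": "core",
    "app-a": "middleware",
    "app-b": "middleware",
}


def images_to_rebuild(changed: str, base_of: Dict[str, str]) -> List[str]:
    """List every image that depends on `changed`, parents before children."""
    children: Dict[str, List[str]] = {}
    for child, parent in base_of.items():
        children.setdefault(parent, []).append(child)

    order: List[str] = []
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        # Breadth-first walk, so a base image is always rebuilt
        # before anything layered on top of it.
        for child in sorted(children.get(current, [])):
            order.append(child)
            queue.append(child)
    return order
```

With the sample data, a fix in `core` yields `["middleware", "tools", "app-a", "app-b"]`, while a fix in `middleware` only touches the two application images.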

Of course, applications are rarely delivered in a single container. Even simple applications usually have a front end, back end and database. Deploying modern microservice applications in containers often means deploying multiple containers, sometimes on the same host and sometimes distributed across multiple hosts or nodes, as shown in the figure.

When managing container deployments at scale, you need to consider:

Which containers should be deployed to which host?

Which host has greater capacity?

Which containers need to access each other? How will they discover each other?

How to control access and management of shared resources, such as network and storage?

How to monitor container health status?

How to automatically expand application capabilities to meet demand?

How to give developers self-service while still meeting security requirements?

Given the broad capabilities of developers and operators, strong role-based access control is a key element of a container platform. For example, the orchestration management server is the central point of access and should receive the highest level of security checks. APIs are key to automated container management at scale, used to validate and configure data for containers, services, and replication controllers; perform project validation on incoming requests; and invoke triggers on other major system components.
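Role-based access control reduces to a deny-by-default lookup: an action is allowed only if one of the caller's roles explicitly grants it. The role names, resources, and verbs below are hypothetical examples, not the permission model of any specific orchestrator.

```python
from typing import Dict, Iterable, Set, Tuple

Permission = Tuple[str, str]  # (resource, verb)

# Hypothetical role definitions for illustration only.
ROLE_PERMISSIONS: Dict[str, Set[Permission]] = {
    "developer": {("pods", "get"), ("pods", "list"), ("builds", "create")},
    "operator": {("pods", "get"), ("pods", "list"), ("pods", "delete"),
                 ("nodes", "get")},
}


def is_allowed(roles: Iterable[str], resource: str, verb: str,
               role_permissions: Dict[str, Set[Permission]] = ROLE_PERMISSIONS
               ) -> bool:
    """Return True only if at least one role grants (resource, verb).

    Unknown roles and missing permissions both result in denial.
    """
    return any((resource, verb) in role_permissions.get(role, set())
               for role in roles)
```

Here a developer can list pods but cannot delete them, and a caller with no roles is denied everything. An orchestration API server would run a check of this kind on every incoming request.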

Deploying modern microservice applications in containers often means deploying multiple containers distributed across multiple nodes. With network defense in mind, you need a way to isolate applications within a cluster.

A typical public cloud service, such as Google Container Engine (GKE), Azure Container Services, or Amazon Web Services (AWS) Container Service, is a single-tenant service. They allow running containers on a cluster of VMs that you launch. To achieve multi-tenant container security, you need a container platform that allows you to select a single cluster and segment traffic to isolate different users, teams, applications, and environments within that cluster.

Through network namespaces, each collection of containers (called a "pod") gets its own IP address and port range to bind to, which isolates pod networks from each other on the node.

By default, pods in one namespace (project) cannot send packets to or receive packets from pods and services in a different project, except through explicitly configured options. You can use these features to isolate developer, test, and production environments in a cluster; however, this proliferation of IP addresses and ports makes networking more complex. Invest in tools to handle this complexity. The preferred approach is a container platform that uses software-defined networking (SDN) to provide a unified cluster network that enables communication between containers across the entire cluster.
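The default-deny rule between projects can be modeled in a few lines. This is a conceptual sketch only: the project names and the `JOINED` set of explicitly connected projects are assumptions, and a real SDN enforces the rule in the data plane rather than in application code.

```python
from typing import AbstractSet, FrozenSet

# Hypothetical exceptions: pairs of projects explicitly allowed to talk.
JOINED: AbstractSet[FrozenSet[str]] = frozenset({
    frozenset(("test", "shared-services")),
})


def can_communicate(src_project: str, dst_project: str,
                    joined: AbstractSet[FrozenSet[str]] = JOINED) -> bool:
    """Pods talk freely inside a project; across projects only if joined."""
    if src_project == dst_project:
        return True
    # Unordered pair, so the exception applies in both directions.
    return frozenset((src_project, dst_project)) in joined
```

Under these sample rules, `test` and `shared-services` can exchange traffic in both directions, while `dev` and `production` remain isolated from each other.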

Containers are very useful for both stateful and stateless applications. Protecting attached storage is a key element of securing stateful services. The container platform should provide a variety of storage plug-ins, including Network File System (NFS), AWS Elastic Block Store (EBS), GCE Persistent Disk, GlusterFS, iSCSI, RADOS (Ceph), Cinder, etc.

A persistent volume (PV) can be mounted on a host in any way supported by the resource provider. Providers have different capabilities, and each PV's access modes are set to the specific modes supported by that particular volume. For example, NFS can support multiple read/write clients, but a particular NFS PV might be exported on the server as read-only. Each PV has its own set of access modes that describe that PV's capabilities, such as ReadWriteOnce, ReadOnlyMany, and ReadWriteMany.
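The relationship between a volume's access modes and a request for storage can be sketched as a subset check. The three mode names come from the text above; the `can_satisfy` helper and the sample NFS volume are hypothetical and only approximate how a platform matches requests to volumes.

```python
from enum import Enum
from typing import AbstractSet


class AccessMode(Enum):
    READ_WRITE_ONCE = "ReadWriteOnce"  # read-write, mounted by a single node
    READ_ONLY_MANY = "ReadOnlyMany"    # read-only, mounted by many nodes
    READ_WRITE_MANY = "ReadWriteMany"  # read-write, mounted by many nodes


def can_satisfy(pv_modes: AbstractSet[AccessMode],
                requested: AbstractSet[AccessMode]) -> bool:
    """A volume fits a request only if it offers every requested mode."""
    return bool(requested) and requested <= pv_modes


# An NFS volume that the server exports read-only, as in the example above.
NFS_READ_ONLY_PV = frozenset({AccessMode.READ_ONLY_MANY})
```

A request for `ReadOnlyMany` fits `NFS_READ_ONLY_PV`, but a request for `ReadWriteMany` does not, even though NFS as a technology could support it.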

Securing applications includes managing application and API authentication and authorization. Web SSO functionality is a critical part of modern applications. When developers build their own applications, the container platform can provide various container services for them to use.

APIs are a critical component of microservice applications. Microservice applications have multiple independent API services, which results in a proliferation of service endpoints and therefore requires more governance tools. Using an API management tool is recommended. All API platforms should provide a variety of standard options for API authentication and security, which can be used alone or in combination to issue credentials and control access. These options include standard API keys, app IDs, key pairs, and OAuth 2.0.
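Of the options listed, the app ID plus API key pair is the simplest to illustrate. The sketch below assumes keys are stored only as SHA-256 hashes and compared in constant time; the app ID, the demo key, and the in-memory `KEY_STORE` are placeholders for whatever an API management tool actually provides.

```python
import hashlib
import hmac
from typing import Dict


def hash_key(api_key: str) -> str:
    """Hash an API key so the plaintext never needs to be stored."""
    return hashlib.sha256(api_key.encode("utf-8")).hexdigest()


# Hypothetical credential store: app ID -> hash of the issued API key.
KEY_STORE: Dict[str, str] = {"app-123": hash_key("demo-key-not-for-production")}


def authenticate(app_id: str, api_key: str,
                 key_store: Dict[str, str] = KEY_STORE) -> bool:
    """Accept a request only if the app ID is known and the key matches."""
    stored = key_store.get(app_id)
    if stored is None:
        return False
    # Constant-time comparison avoids leaking information through timing.
    return hmac.compare_digest(stored, hash_key(api_key))
```

An API gateway would call `authenticate` before routing a request to a backend service. OAuth 2.0 would replace the static key with short-lived tokens.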

In July 2016, Kubernetes 1.3 introduced Kubernetes Federated Clusters. This is an exciting new feature, currently in beta in Kubernetes 1.6.

In public cloud or enterprise data center scenarios, Federation is useful for deploying and accessing application services across clusters. Running multiple clusters enables high availability for applications across multiple regions or multiple cloud providers (such as AWS, Google Cloud and Azure), with common management of deployments and migrations.

When managing cluster federation, you must ensure that the orchestration tool provides the required security across different deployment platform instances. As always, authentication and authorization are key to security - being able to securely pass data to applications no matter where they are running, and managing application multi-tenancy in a cluster.

Kubernetes extends cluster federation to include support for federated secrets, federated namespaces, and Ingress objects.

The above is the detailed content of Ten aspects to strengthen Linux container security. For more information, please follow other related articles on the PHP Chinese website!

How does performance differ between Linux and Windows for various tasks?May 14, 2025 am 12:03 AM

How does performance differ between Linux and Windows for various tasks?May 14, 2025 am 12:03 AMLinux performs well in servers and development environments, while Windows performs better in desktop and gaming. 1) Linux's file system performs well when dealing with large numbers of small files. 2) Linux performs excellently in high concurrency and high throughput network scenarios. 3) Linux memory management has more advantages in server environments. 4) Linux is efficient when executing command line and script tasks, while Windows performs better on graphical interfaces and multimedia applications.

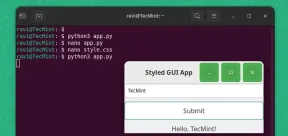

How to Create GUI Applications In Linux Using PyGObjectMay 13, 2025 am 11:09 AM

How to Create GUI Applications In Linux Using PyGObjectMay 13, 2025 am 11:09 AMCreating graphical user interface (GUI) applications is a fantastic way to bring your ideas to life and make your programs more user-friendly. PyGObject is a Python library that allows developers to create GUI applications on Linux desktops using the

How to Install LAMP Stack with PhpMyAdmin in Arch LinuxMay 13, 2025 am 11:01 AM

How to Install LAMP Stack with PhpMyAdmin in Arch LinuxMay 13, 2025 am 11:01 AMArch Linux provides a flexible cutting-edge system environment and is a powerfully suited solution for developing web applications on small non-critical systems because is a completely open source and provides the latest up-to-date releases on kernel

How to Install LEMP (Nginx, PHP, MariaDB) on Arch LinuxMay 13, 2025 am 10:43 AM

How to Install LEMP (Nginx, PHP, MariaDB) on Arch LinuxMay 13, 2025 am 10:43 AMDue to its Rolling Release model which embraces cutting-edge software Arch Linux was not designed and developed to run as a server to provide reliable network services because it requires extra time for maintenance, constant upgrades, and sensible fi

![12 Must-Have Linux Console [Terminal] File Managers](https://img.php.cn/upload/article/001/242/473/174710245395762.png?x-oss-process=image/resize,p_40) 12 Must-Have Linux Console [Terminal] File ManagersMay 13, 2025 am 10:14 AM

12 Must-Have Linux Console [Terminal] File ManagersMay 13, 2025 am 10:14 AMLinux console file managers can be very helpful in day-to-day tasks, when managing files on a local machine, or when connected to a remote one. The visual console representation of the directory helps us quickly perform file/folder operations and sav

qBittorrent: A Powerful Open-Source BitTorrent ClientMay 13, 2025 am 10:12 AM

qBittorrent: A Powerful Open-Source BitTorrent ClientMay 13, 2025 am 10:12 AMqBittorrent is a popular open-source BitTorrent client that allows users to download and share files over the internet. The latest version, qBittorrent 5.0, was released recently and comes packed with new features and improvements. This article will

Setup Nginx Virtual Hosts, phpMyAdmin, and SSL on Arch LinuxMay 13, 2025 am 10:03 AM

Setup Nginx Virtual Hosts, phpMyAdmin, and SSL on Arch LinuxMay 13, 2025 am 10:03 AMThe previous Arch Linux LEMP article just covered basic stuff, from installing network services (Nginx, PHP, MySQL, and PhpMyAdmin) and configuring minimal security required for MySQL server and PhpMyadmin. This topic is strictly related to the forme

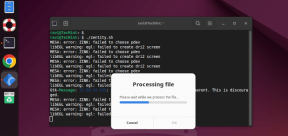

Zenity: Building GTK Dialogs in Shell ScriptsMay 13, 2025 am 09:38 AM

Zenity: Building GTK Dialogs in Shell ScriptsMay 13, 2025 am 09:38 AMZenity is a tool that allows you to create graphical dialog boxes in Linux using the command line. It uses GTK , a toolkit for creating graphical user interfaces (GUIs), making it easy to add visual elements to your scripts. Zenity can be extremely u

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),

SublimeText3 Chinese version

Chinese version, very easy to use

WebStorm Mac version

Useful JavaScript development tools

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver Mac version

Visual web development tools