by A. Jesse Jiryu Davis, Python Evangelist at 10gen In a sharded cluster of replica sets, which server or servers handle each of your queries? What about each insert, update, or command? If you know how a MongoDB cluster routes operations

by A. Jesse Jiryu Davis, Python Evangelist at 10gen

In a sharded cluster of replica sets, which server or servers handle each of your queries? What about each insert, update, or command? If you know how a MongoDB cluster routes operations among its servers, you can predict how your application will scale as you add shards and add members to shards.

Operations are routed according to the type of operation, your shard key, and your read preference. Let’s set up a cluster and use the system profiler to see where each operation is run. This is an interactive, experimental way to learn how your cluster really behaves and how your architecture will scale.

Setup

You’ll need a recent install of MongoDB (I’m using 2.4.4), Python, a recent version of PyMongo (at least 2.4—I’m using 2.5.2) and the code in my cluster-profile repository on GitHub. If you install the Colorama Python package you’ll get cute colored output. These scripts were tested on my Mac.

Sharded cluster of replica sets

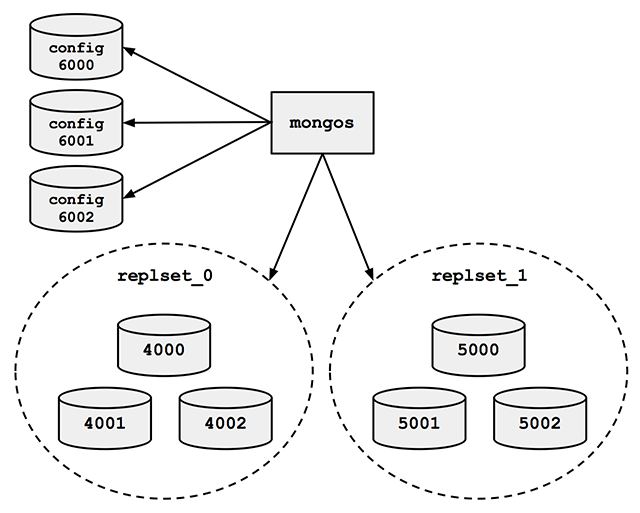

Run the cluster_setup.py script in my repository. It sets up a standard sharded cluster for you running on your local machine. There’s a mongos, three config servers, and two shards, each of which is a three-member replica set. The first shard’s replica set is running on ports 4000 through 4002, the second shard is on ports 5000 through 5002, and the three config servers are on ports 6000 through 6002:

For the finale, cluster_setup.py makes a collection named sharded_collection, sharded on a key named shard_key.

In a normal deployment, we’d let MongoDB’s balancer automatically distribute chunks of data among our two shards. But for this demo we want documents to be on predictable shards, so my script disables the balancer. It makes a chunk for all documents with shard_key less than 500 and another chunk for documents with shard_key greater than or equal to 500. It moves the high chunk to replset_1:

client = MongoClient() # Connect to mongos. admin = client.admin # admin database.

Pre-split.

admin.command(

'split', 'test.sharded_collection',

middle={'shard_key': 500})

admin.command(

'moveChunk', 'test.sharded_collection',

find={'shard_key': 500},

to='replset_1')

If you connect to mongos with the MongoDB shell, sh.status() shows there’s one chunk on each of the two shards:

{ "shard_key" : { "$minKey" : 1 } } -->> { "shard_key" : 500 } on : replset_0 { "t" : 2, "i" : 1 }

{ "shard_key" : 500 } -->> { "shard_key" : { "$maxKey" : 1 } } on : replset_1 { "t" : 2, "i" : 0 }

The setup script also inserts a document with a shard_key of 0 and another with a shard_key of 500. Now we’re ready for some profiling.

Profiling

Run the tail_profile.py script from my repository. It connects to all the replica set members. On each, it sets the profiling level to 2 (“log everything”) on the test database, and creates a tailable cursor on the system.profile collection. The script filters out some noise in the profile collection—for example, the activities of the tailable cursor show up in the system.profile collection that it’s tailing. Any legitimate entries in the profile are spat out to the console in pretty colors.

Experiments

Targeted queries versus scatter-gather

Let’s run a query from Python in a separate terminal:

>>> from pymongo import MongoClient

>>> # Connect to mongos.

>>> collection = MongoClient().test.sharded_collection

>>> collection.find_one({'shard_key': 0})

{'_id': ObjectId('51bb6f1cca1ce958c89b348a'), 'shard_key': 0}

tail_profile.py prints:

replset_0 primary on 4000: query test.sharded_collection {“shard_key”: 0}

The query includes the shard key, so mongos reads from the shard that can satisfy it. Adding shards can scale out your throughput on a query like this. What about a query that doesn’t contain the shard key?:

>>> collection.find_one({})

mongos sends the query to both shards:

replset_0 primary on 4000: query test.sharded_collection {“shard_key”: 0}

replset_1 primary on 5000: query test.sharded_collection {“shard_key”: 500}

For fan-out queries like this, adding more shards won’t scale out your query throughput as well as it would for targeted queries, because every shard has to process every query. But we can scale throughput on queries like these by reading from secondaries.

Queries with read preferences

We can use read preferences to read from secondaries:

>>> from pymongo.read_preferences import ReadPreference

>>> collection.find_one({}, read_preference=ReadPreference.SECONDARY)

tail_profile.py shows us that mongos chose a random secondary from each shard:

replset_0 secondary on 4001: query test.sharded_collection {“$readPreference”: {“mode”: “secondary”}, “$query”: {}}

replset_1 secondary on 5001: query test.sharded_collection {“$readPreference”: {“mode”: “secondary”}, “$query”: {}}

Note how PyMongo passes the read preference to mongos in the query, as the $readPreference field. mongos targets one secondary in each of the two replica sets.

Updates

With a sharded collection, updates must either include the shard key or be “multi-updates”. An update with the shard key goes to the proper shard, of course:

>>> collection.update({'shard_key': -100}, {'$set': {'field': 'value'}})

replset_0 primary on 4000: update test.sharded_collection {“shard_key”: -100}

mongos only sends the update to replset_0, because we put the chunk of documents with shard_key less than 500 there.

A multi-update hits all shards:

>>> collection.update({}, {'$set': {'field': 'value'}}, multi=True)

replset_0 primary on 4000: update test.sharded_collection {}

replset_1 primary on 5000: update test.sharded_collection {}

A multi-update on a range of the shard key need only involve the proper shard:

>>> collection.update({'shard_key': {'$gt': 1000}}, {'$set': {'field': 'value'}}, multi=True)

replset_1 primary on 5000: update test.sharded_collection {“shard_key”: {“$gt”: 1000}}

So targeted updates that include the shard key can be scaled out by adding shards. Even multi-updates can be scaled out if they include a range of the shard key, but multi-updates without the shard key won’t benefit from extra shards.

Commands

In version 2.4, mongos can use secondaries not only for queries, but also for some commands. You can run count on secondaries if you pass the right read preference:

>>> cursor = collection.find(read_preference=ReadPreference.SECONDARY) >>> cursor.count()

replset_0 secondary on 4001: command count: sharded_collection

replset_1 secondary on 5001: command count: sharded_collection

Whereas findAndModify, since it modifies data, is run on the primaries no matter your read preference:

>>> db = MongoClient().test

>>> test.command(

... 'findAndModify',

... 'sharded_collection',

... query={'shard_key': -1},

... remove=True,

... read_preference=ReadPreference.SECONDARY)

replset_0 primary on 4000: command findAndModify: sharded_collection

Go Forth And Scale

To scale a sharded cluster, you should understand how operations are distributed: are they scatter-gather, or targeted to one shard? Do they run on primaries or secondaries? If you set up a cluster and test your queries interactively like we did here, you can see how your cluster behaves in practice, and design your application for future growth.

Read Jesse’s blog, Emptysquare and follow him on Github

原文地址:Real-time Profiling a MongoDB Cluster, 感谢原作者分享。

Bagaimanakah kardinaliti indeks MySQL mempengaruhi prestasi pertanyaan?Apr 14, 2025 am 12:18 AM

Bagaimanakah kardinaliti indeks MySQL mempengaruhi prestasi pertanyaan?Apr 14, 2025 am 12:18 AMCardinality Indeks MySQL mempunyai kesan yang signifikan terhadap prestasi pertanyaan: 1. Indeks kardinaliti yang tinggi dapat lebih berkesan menyempitkan julat data dan meningkatkan kecekapan pertanyaan; 2. Indeks kardinaliti yang rendah boleh membawa kepada pengimbasan jadual penuh dan mengurangkan prestasi pertanyaan; 3. Dalam indeks bersama, urutan kardinaliti yang tinggi harus diletakkan di depan untuk mengoptimumkan pertanyaan.

MySQL: Sumber dan Tutorial untuk Pengguna BaruApr 14, 2025 am 12:16 AM

MySQL: Sumber dan Tutorial untuk Pengguna BaruApr 14, 2025 am 12:16 AMLaluan pembelajaran MySQL termasuk pengetahuan asas, konsep teras, contoh penggunaan, dan teknik pengoptimuman. 1) Memahami konsep asas seperti jadual, baris, lajur, dan pertanyaan SQL. 2) Ketahui definisi, prinsip kerja dan kelebihan MySQL. 3) menguasai operasi CRUD asas dan penggunaan lanjutan, seperti indeks dan prosedur yang disimpan. 4) Biasa dengan debugging kesilapan biasa dan cadangan pengoptimuman prestasi, seperti penggunaan rasional indeks dan pertanyaan pengoptimuman. Melalui langkah -langkah ini, anda akan memahami sepenuhnya penggunaan dan pengoptimuman MySQL.

Mysql dunia nyata: Contoh dan kes penggunaanApr 14, 2025 am 12:15 AM

Mysql dunia nyata: Contoh dan kes penggunaanApr 14, 2025 am 12:15 AMAplikasi dunia nyata MySQL termasuk reka bentuk pangkalan data asas dan pengoptimuman pertanyaan kompleks. 1) Penggunaan Asas: Digunakan untuk menyimpan dan mengurus data pengguna, seperti memasukkan, menanyakan, mengemas kini dan memadam maklumat pengguna. 2) Penggunaan lanjutan: Mengendalikan logik perniagaan yang kompleks, seperti perintah dan pengurusan inventori platform e-dagang. 3) Pengoptimuman Prestasi: Meningkatkan prestasi dengan menggunakan indeks, jadual partisi dan cache pertanyaan.

Perintah SQL di MySQL: Contoh PraktikalApr 14, 2025 am 12:09 AM

Perintah SQL di MySQL: Contoh PraktikalApr 14, 2025 am 12:09 AMPerintah SQL di MySQL boleh dibahagikan kepada kategori seperti DDL, DML, DQL, dan DCL, dan digunakan untuk membuat, mengubah suai, memadam pangkalan data dan jadual, memasukkan, mengemas kini, memadam data, dan melakukan operasi pertanyaan yang kompleks. 1. Penggunaan asas termasuk jadual penciptaan createtable, memasukkan data memasukkan, dan pilih data pertanyaan. 2. Penggunaan lanjutan melibatkan gabungan untuk Jadual Bergabung, Subqueries dan Groupby untuk Agregasi Data. 3. Kesilapan umum seperti kesilapan sintaks, jenis data yang tidak sepadan dan masalah kebenaran boleh disahpepijat melalui pemeriksaan sintaks, penukaran jenis data dan pengurusan kebenaran. 4. Cadangan Pengoptimuman Prestasi termasuk menggunakan indeks, mengelakkan pengimbasan jadual penuh, mengoptimumkan operasi gabungan dan menggunakan transaksi untuk memastikan konsistensi data.

Bagaimanakah InnoDB mengendalikan pematuhan asid?Apr 14, 2025 am 12:03 AM

Bagaimanakah InnoDB mengendalikan pematuhan asid?Apr 14, 2025 am 12:03 AMInnoDB mencapai atomik melalui undolog, konsistensi dan pengasingan melalui mekanisme penguncian dan MVCC, dan kegigihan melalui redolog. 1) Atomicity: Gunakan Undolog untuk merekodkan data asal untuk memastikan urus niaga dapat dilancarkan kembali. 2) Konsistensi: Memastikan konsistensi data melalui penguncian peringkat baris dan MVCC. 3) Pengasingan: Menyokong pelbagai tahap pengasingan, dan RepeatableRead digunakan secara lalai. 4) Kegigihan: Gunakan redolog untuk merekodkan pengubahsuaian untuk memastikan data disimpan untuk masa yang lama.

Tempat Mysql: Pangkalan Data dan PengaturcaraanApr 13, 2025 am 12:18 AM

Tempat Mysql: Pangkalan Data dan PengaturcaraanApr 13, 2025 am 12:18 AMKedudukan MySQL dalam pangkalan data dan pengaturcaraan sangat penting. Ia adalah sistem pengurusan pangkalan data sumber terbuka yang digunakan secara meluas dalam pelbagai senario aplikasi. 1) MySQL menyediakan fungsi penyimpanan data, organisasi dan pengambilan data yang cekap, sistem sokongan web, mudah alih dan perusahaan. 2) Ia menggunakan seni bina pelanggan-pelayan, menyokong pelbagai enjin penyimpanan dan pengoptimuman indeks. 3) Penggunaan asas termasuk membuat jadual dan memasukkan data, dan penggunaan lanjutan melibatkan pelbagai meja dan pertanyaan kompleks. 4) Soalan -soalan yang sering ditanya seperti kesilapan sintaks SQL dan isu -isu prestasi boleh disahpepijat melalui arahan jelas dan log pertanyaan perlahan. 5) Kaedah pengoptimuman prestasi termasuk penggunaan indeks rasional, pertanyaan yang dioptimumkan dan penggunaan cache. Amalan terbaik termasuk menggunakan urus niaga dan preparedStatemen

Mysql: Dari perniagaan kecil ke perusahaan besarApr 13, 2025 am 12:17 AM

Mysql: Dari perniagaan kecil ke perusahaan besarApr 13, 2025 am 12:17 AMMySQL sesuai untuk perusahaan kecil dan besar. 1) Perniagaan kecil boleh menggunakan MySQL untuk pengurusan data asas, seperti menyimpan maklumat pelanggan. 2) Perusahaan besar boleh menggunakan MySQL untuk memproses data besar dan logik perniagaan yang kompleks untuk mengoptimumkan prestasi pertanyaan dan pemprosesan transaksi.

Apa yang dibaca oleh Phantom dan bagaimana InnoDB menghalang mereka (kunci seterusnya)?Apr 13, 2025 am 12:16 AM

Apa yang dibaca oleh Phantom dan bagaimana InnoDB menghalang mereka (kunci seterusnya)?Apr 13, 2025 am 12:16 AMInnoDB secara berkesan menghalang pembacaan hantu melalui mekanisme utama. 1) Kekunci seterusnya menggabungkan kunci baris dan kunci jurang untuk mengunci rekod dan jurang mereka untuk mengelakkan rekod baru daripada dimasukkan. 2) Dalam aplikasi praktikal, dengan mengoptimumkan pertanyaan dan menyesuaikan tahap pengasingan, persaingan kunci dapat dikurangkan dan prestasi konkurensi dapat ditingkatkan.

Alat AI Hot

Undresser.AI Undress

Apl berkuasa AI untuk mencipta foto bogel yang realistik

AI Clothes Remover

Alat AI dalam talian untuk mengeluarkan pakaian daripada foto.

Undress AI Tool

Gambar buka pakaian secara percuma

Clothoff.io

Penyingkiran pakaian AI

AI Hentai Generator

Menjana ai hentai secara percuma.

Artikel Panas

Alat panas

PhpStorm versi Mac

Alat pembangunan bersepadu PHP profesional terkini (2018.2.1).

Hantar Studio 13.0.1

Persekitaran pembangunan bersepadu PHP yang berkuasa

Penyesuai Pelayan SAP NetWeaver untuk Eclipse

Integrasikan Eclipse dengan pelayan aplikasi SAP NetWeaver.

SublimeText3 versi Mac

Perisian penyuntingan kod peringkat Tuhan (SublimeText3)

VSCode Windows 64-bit Muat Turun

Editor IDE percuma dan berkuasa yang dilancarkan oleh Microsoft