Home >Technology peripherals >AI >The vitality of super intelligence awakens! But with the arrival of self-updating AI, mothers no longer have to worry about data bottlenecks

The vitality of super intelligence awakens! But with the arrival of self-updating AI, mothers no longer have to worry about data bottlenecks

- PHPzforward

- 2024-04-29 18:55:141529browse

Cry to death, the whole world is crazy about making big models, the data on the Internet is not enough, not enough at all.

The training model looks like "The Hunger Games", and AI researchers around the world are worrying about how to feed these big data eaters.

This problem is particularly prominent in multi-modal tasks. When

was at a loss, the start-up team from the Department of Renmin University used its own new model to take the lead in China in turning "model-generated data to feed itself" into Reality.

Moreover, it is a two-pronged approach on the understanding side and the generation side. Both sides can generate high-quality, multi-modal new data and provide data feedback to the model itself.

What is the model?

The multi-modal large model Awaker 1.0 just appeared on the Zhongguancun Forum. Who is the team?

Sophon engine. was founded by Gao Yizhao, a doctoral student at the Hillhouse School of Artificial Intelligence at Renmin University of China, with Professor Lu Zhiwu from the Hillhouse School of Artificial Intelligence serving as a consultant. When the company was founded in 2021, it entered the "no man's land" track of multi-modality early. MOE architecture, solving the conflict problem of multi-modal and multi-task training

This is not the first time that Sophon Engine has released a model.

On March 8 last year, the team that has devoted two years of research and development released the first self-developed multi-modal model, the ChatImg sequence model with tens of billions of parameters, and launched the world's first public evaluation based on this. Multimodal conversation application ChatImg

(元 multiply image). Later, ChatImg continued to iterate, and the research and development of the new model Awaker was also advanced in parallel. The latter also inherits the basic capabilities of the previous model.

Compared with the previous generation ChatImg sequence model, Awaker 1.0

adopts the MoE model architecture. The reason is that we want to solve the problem of serious conflicts in multi-modal and multi-task training.

Using the MoE model architecture, it can better learn multi-modal general capabilities and the unique capabilities required for each task, thereby further improving the capabilities of the entire Awaker 1.0 on multiple tasks.

Data is worth a thousand words:

In view of the problem of evaluation data leakage in mainstream multi-modal evaluation lists, the Sophon team strictly constructed its own evaluation set. Most of the test images come from personal mobile phone photo albums.

In view of the problem of evaluation data leakage in mainstream multi-modal evaluation lists, the Sophon team strictly constructed its own evaluation set. Most of the test images come from personal mobile phone photo albums.

The table shows that the team evaluated Awaker 1.0 and the three most advanced multi-modal large models at home and abroad.

One more thing to mention, since GPT-4V and Intern-VL do not directly support detection tasks, their detection results are obtained by requiring the model to use language to describe the orientation of the object.

It can be seen that in visual question answering and business application tasks, the base model of Awaker 1.0 exceeds GPT-4V, Qwen-VL-Max and Intern-VL.

The base model of Awaker 1.0 achieved the second-best results on description, reasoning, and detection tasks.

Finally, looking at the average score, Awaker 1.0 has the highest value among them.

Therefore, the above results also confirm the effectiveness of the multi-task multi-modal model using the MoE architecture.

The data set evaluation results are available, but the real effect needs to be further experienced.

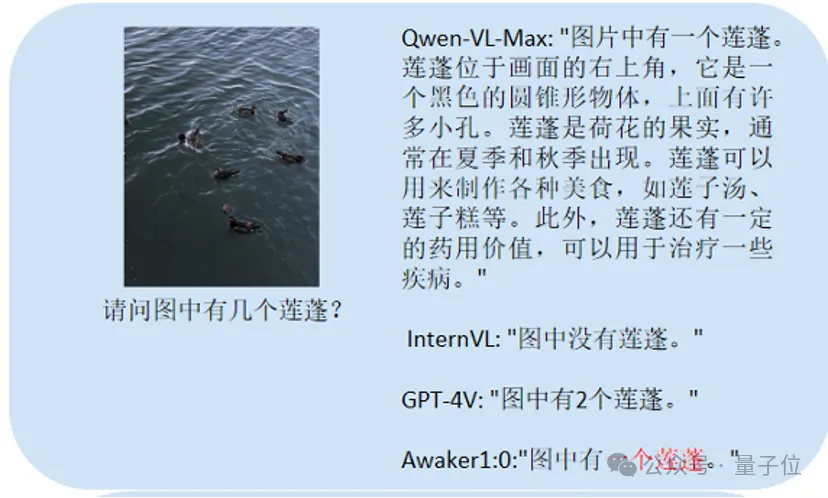

Here we mainly ask some questions about Chinese OCR

(picture text recognition)and counting issues, detailed description tasks, etc. compared with the large model. This main

test count:Awaker 1.0 can give the correct answer, while the other three models all answer incorrectly.

This main test

This main test

Chinese OCR:The players who answered correctly are Qwen-VL-Max and Awaker 1.0.

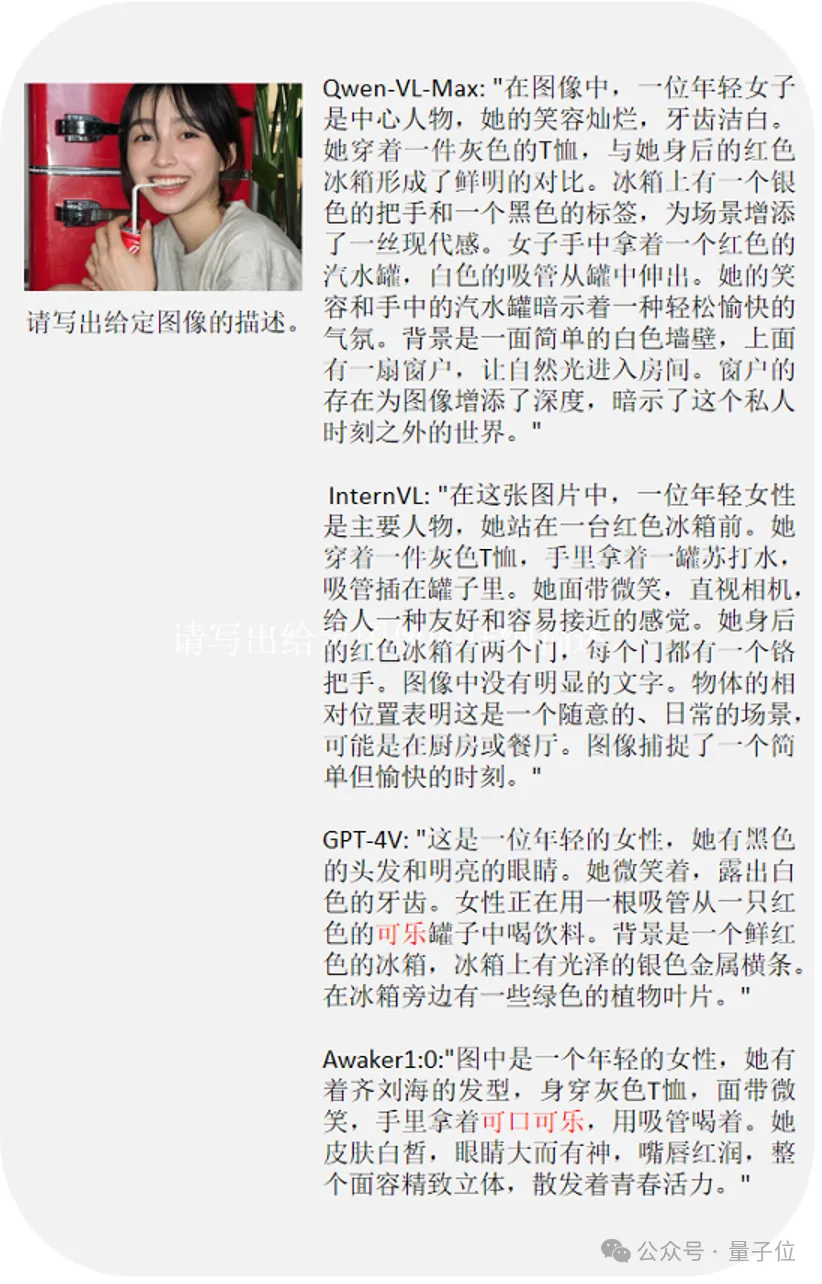

The last question tests the understanding of the

The last question tests the understanding of the

picture content. GPT-4V and Awaker 1.0 can not only describe the content of the picture in detail, but also accurately identify the details in the picture, such as Coca-Cola shown in the picture.

It must be mentioned that Awaker 1.0 inherits some of the research results that the Sophon team has previously received much attention from.

It must be mentioned that Awaker 1.0 inherits some of the research results that the Sophon team has previously received much attention from.

I’m talking about you - the

generated side of Awaker 1.0. The generation side of Awaker 1.0 is the Sora-like video generation base VDT

(Video Diffusion Transformer)independently developed by Sophon Engine. VDT's academic paper preceded the release of OpenAI Sora

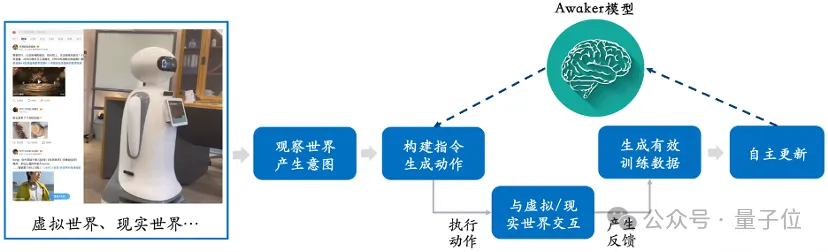

(last May), and has been accepted by the top conference ICLR 2024. VDT與眾不同的創新之處,主要有兩點。 一是在技術架構上採用Diffusion Transformer#,在OpenAI之前就展現了Transformer在影片產生領域的巨大潛力。 它的優勢在於其出色的時間依賴性捕獲能力,能夠產生時間上連貫的視訊幀,包括模擬三維物件隨時間的物理動態。 二是提出統一的時空遮罩建模機制,使VDT能夠處理多種視訊產生任務。 VDT靈活的條件資訊處理方式,如簡單的token空間拼接,有效地統一了不同長度和模態的資訊。 同時,透過與該工作提出的時空掩碼建模機制結合,VDT成為了一個通用的視訊擴散工具,在不修改模型結構的情況下可以應用於無條件生成、視訊後續幀預測、插幀、圖生影片、影片畫面補全等多種影片生成任務。 據了解,智子引擎團隊不僅探討了VDT對簡單物理規律的模擬,發現它能模擬物理過程: 也在超寫實人像影片產生任務上進行了深度探索。 因為肉眼對人臉及人的動態變化非常敏感,所以這個任務對影片產生品質的要求非常高。不過,智子引擎已經突破超寫實人像影片產生的大部分關鍵技術,比起Sora也沒在害怕的。 口說無憑。 這是智子引擎結合VDT和可控生成,對人像視頻生成質量提升後的效果: 據悉,智子引擎還將繼續優化人物可控的生成演算法,並積極進行商業化探索。 更值得關注的是,智子引擎團隊強調: Awaker 1.0是世界上首個能自主更新的多模態大模型。 換句話說,Awaker 1.0是「活」的,它的參數可以即時持續地更新-這就導致Awaker 1.0區別於所有其它多模態大模型, Awaker 1.0的自主更新機制,包含三大關鍵技術,分別是: 在理解側,Awaker 1.0與數位世界和現實世界互動。 在生成側,Awaker 1.0可以進行高品質的多模態內容生成,為理解側模型提供更多的訓練資料。 把新知識「記憶」在自個兒模型的參數上。 面對資料瓶頸的團隊們,一種可行、可用的新選擇,不就被Awaker 1.0送來了? 話說回來,正是由於實現了視覺理解與視覺生成的融合,當遇到「多模態大模型適配具身智能」的問題,Awaker 1.0的驕傲已經顯露無疑。 事情是這樣的: Awaker 1.0這類多模態大模型,其具有的視覺理解能力可以天然與具身智能的「眼睛」結合。 而主流聲音也認為,「多模態大模型 具身智慧」有可能大幅提升具身智慧的適應性和創造性,甚至是實現AGI的可行路徑。 理由不外乎兩點。 第一,人們期望具身智慧擁有適應性,即智能體能夠透過持續學習來適應不斷變化的應用環境。 這樣一來,具身智慧既能在已知多模態任務上越做越好,也能快速適應未知的多模態任務。 第二,人們也期望具身智慧具有真正的創造性,希望它透過對環境的自主探索,能夠發現新的策略和解決方案,並探索AI的能力邊界。 但是二者的適配,並不是簡簡單單把多模態大模型連結個身體,或直接給具身智能裝個腦子那麼簡單。 就拿多模態大模型來說,至少有兩個明顯的問題擺在眼前。 一是模型的迭代更新周期長,需要大量的人力投入; 二是模型的訓練資料都源自已有的數據,模型不能持續獲得大量的新知識。雖然透過RAG和擴長上下文視窗也可以注入持續出現的新知識,模型記不住,補救方式還會帶來額外的問題。 總之,目前的多模態大模型在實際應用場景中不具備很強的適應性,更不具備創造性,導致在行業落地時總是出現各種各樣的困難。 妙啊——還記得我們前面提到,Awaker 1.0不僅可以學習新知識,還能記住新知識,而這種學習是每天的、持續的、及時的。 從這張框架圖可以看出,Awaker 1.0能夠與各種智慧型裝置結合,透過智慧型裝置觀察世界,產生動作意圖,並自動建構指令控制智能設備完成各種動作。 在完成各種動作後,智慧型裝置會自動產生各種回饋,Awaker 1.0能夠從這些動作和回饋中獲得有效的訓練資料進行持續的自我更新,不斷強化模型的各種能力。 這就等於具身智能擁有一個活的大腦了。 誰看了不說一句how pay(狗頭)~ #尤其重要的是,因為具備自主更新能力,Awaker 1.0##不單單是可以和具身智能適配,它也適用於更廣泛的行業場景,能夠解決更複雜的實際任務。

產生源源不絕的新互動資料

這三項技術,讓Awaker 1.0具備自主學習、自動反思和自主更新的能力,可以在這個世界自由探索,甚至與人類互動。 基於此,Awaker 1.0在理解側和生成側都能產生源源不絕的新交互資料。 怎麼做到的?

具身智能「活」的大腦

The above is the detailed content of The vitality of super intelligence awakens! But with the arrival of self-updating AI, mothers no longer have to worry about data bottlenecks. For more information, please follow other related articles on the PHP Chinese website!

Related articles

See more- Mysql database basic statement training questions detailed code

- php '7-Day Devil Training Camp' free live course registration notice! ! ! ! ! !

- How to delete a table in the database in mysql

- How to delete duplicate data in excel so that only one remains

- Volcano Engine helps Shenzhen Technology release the industry's first 3D molecular pre-training model Uni-Mol