
Musk's first multi-modal large model is here, and GPT-4V has been surpassed again


- 2024-04-14 21:04:14

Since Grok's debut in November 2023, Musk's xAI has made steady progress on large models, challenging pioneers such as OpenAI. Less than a month after Grok-1 was open-sourced, xAI has released its first multimodal model.

Yesterday, xAI launched Grok-1.5V, a model that understands not only text but also the content of documents, charts, screenshots, and photos.

Grok-1.5V is a multimodal model that performs strongly across many domains, from multidisciplinary reasoning to understanding documents, scientific diagrams, screenshots, and photos. xAI also said it expects to show significant capability improvements across modalities such as images, audio, and video in the coming months.

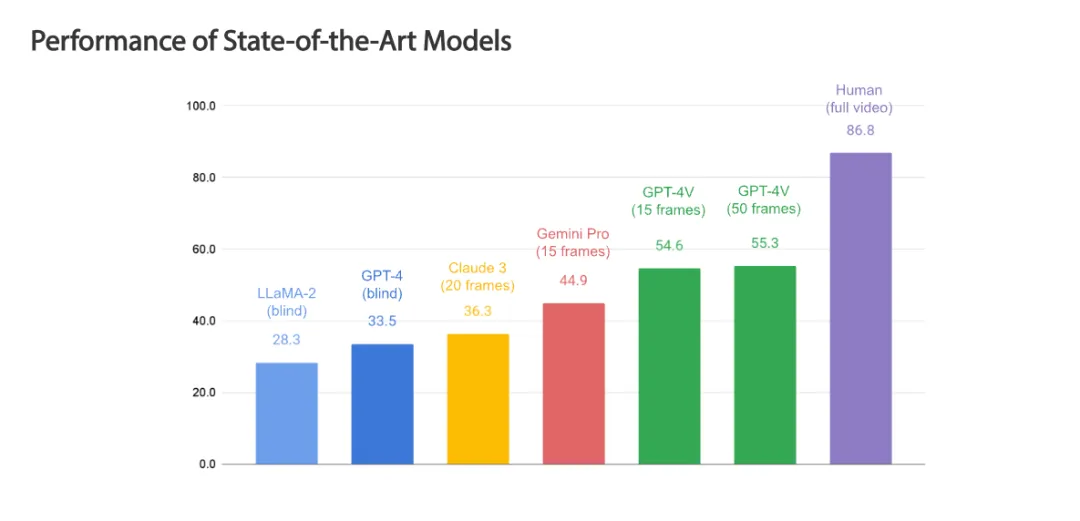

We saw test comparisons of Grok-1.5V against GPT-4V, Claude 3 Sonnet, Claude 3 Opus, and Gemini Pro 1.5. According to xAI: "We're particularly excited about Grok's ability to understand the physical world. Grok outperforms its peers on our new RealWorldQA benchmark. For all of the datasets below, we evaluated Grok without chain-of-thought prompting."

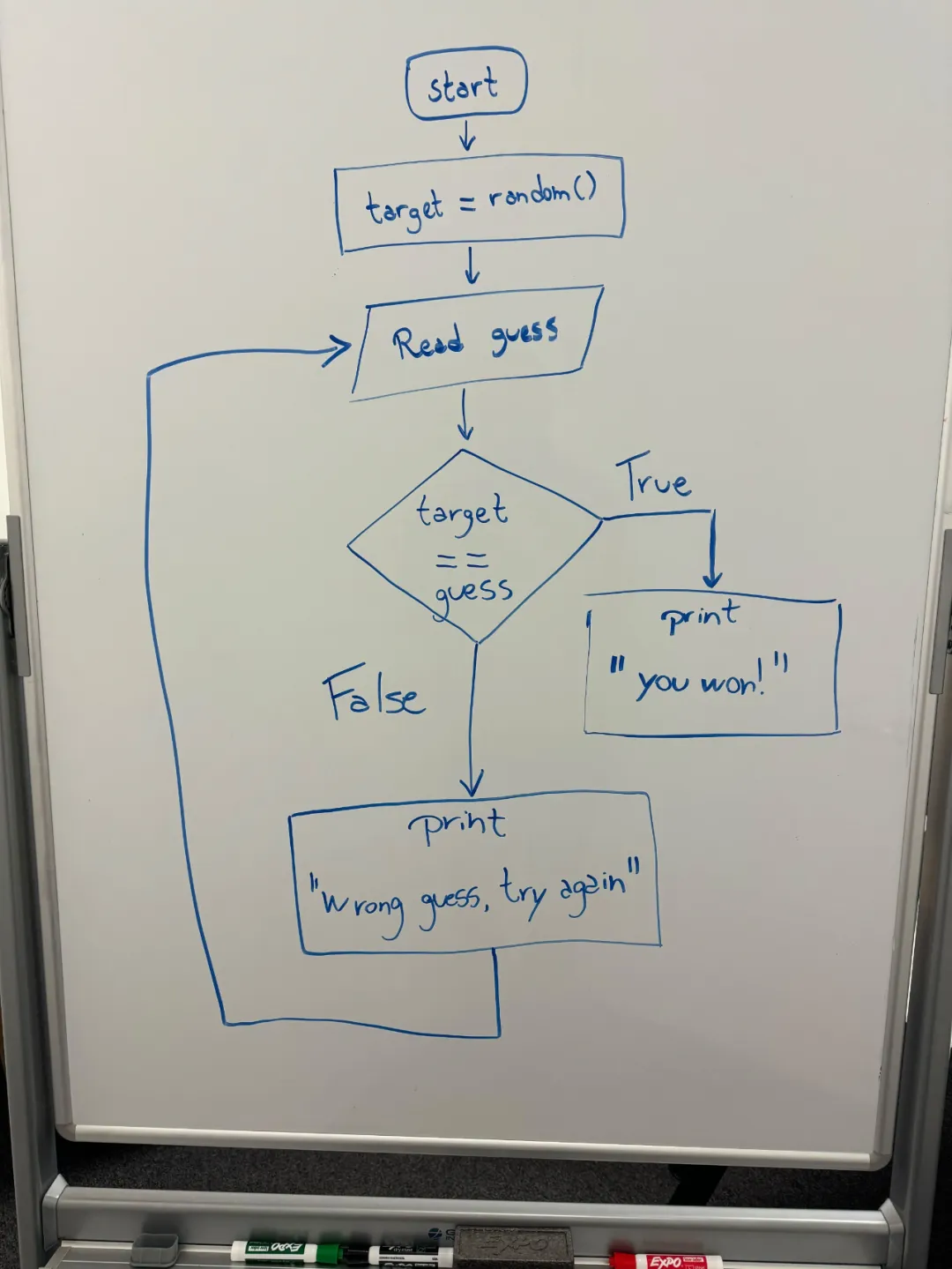

The first example converts a flowchart into Python code:

Grok: Of course! The flowchart you provided describes a simple guessing game: the computer generates a random number and the user must guess it. Here is the Python code that represents the logic in the flowchart:


```python
import random

def guess_number():
    # Generate a random number between 1 and 10
    target = random.randint(1, 10)
    while True:
        guess = int(input("Guess a number between 1 and 10: "))
        if guess == target:
            print("You won!")
            break
        else:
            print("Wrong guess, try again!")

if __name__ == "__main__":
    guess_number()
```
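The `guess_number` loop reads from `input()` and draws its target from `random`, which makes it awkward to test. As an illustrative refactor (not part of Grok's answer; the function `play_guessing_game` and its parameters are hypothetical), the same flowchart logic can take the target and a scripted sequence of guesses as arguments:

```python
from typing import Iterable


def play_guessing_game(target: int, guesses: Iterable[int]) -> int:
    """Replay the flowchart's loop with a fixed target and scripted guesses.

    Returns how many guesses it took to hit the target; raises ValueError
    if the scripted guesses run out before the target is found.
    """
    attempts = 0
    for guess in guesses:
        attempts += 1
        if guess == target:
            print("You won!")
            return attempts
        print("Wrong guess, try again!")
    raise ValueError("ran out of guesses before finding the target")


# Target 7: two wrong guesses, then a correct one, so this returns 3.
print(play_guessing_game(7, [3, 9, 7]))
```

Passing the guesses as an iterable keeps the loop shape of the flowchart while letting a test supply inputs deterministically.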

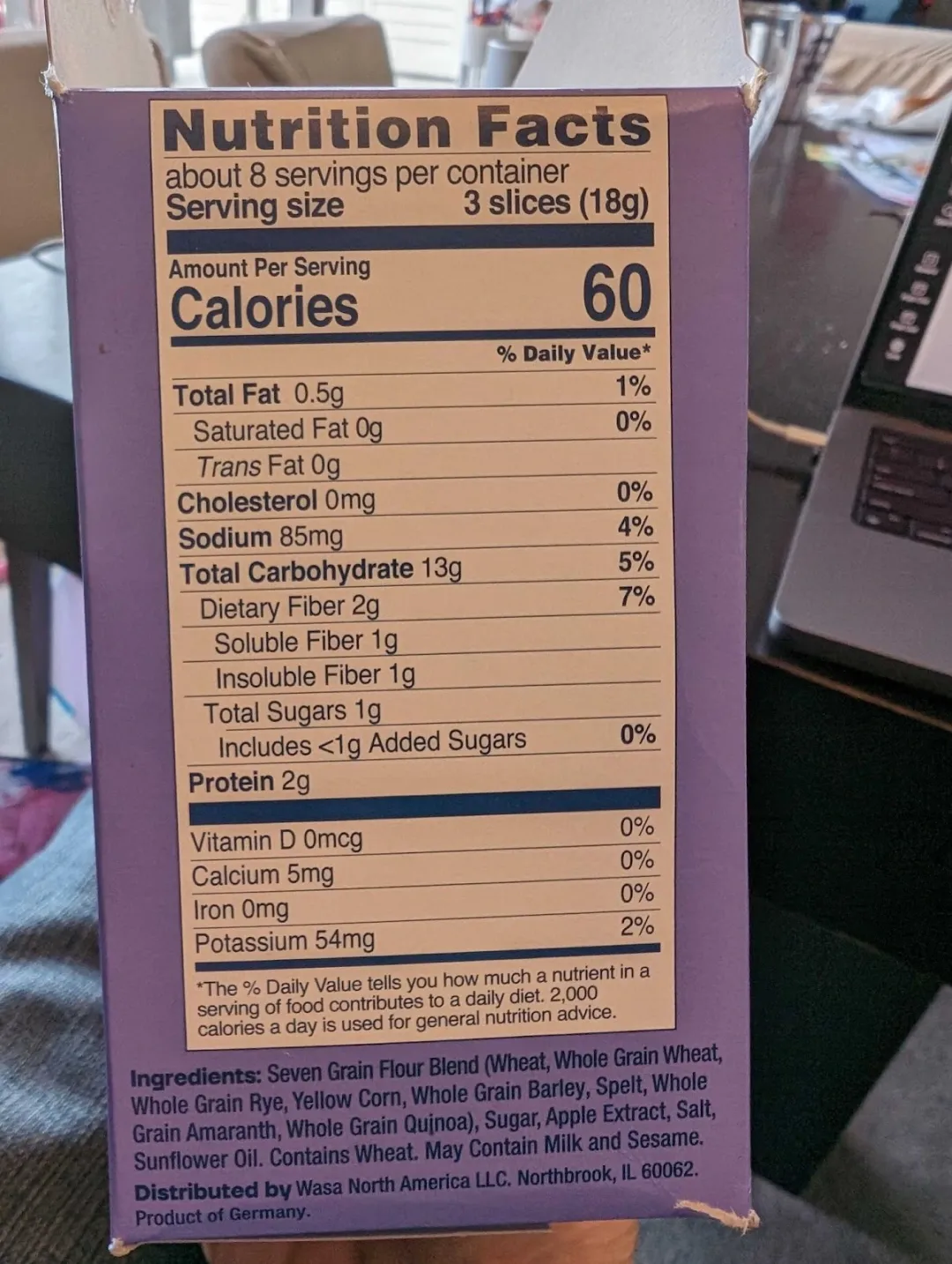

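The second example calculates calories:

Prompt: How many calories are there in 5 slices of this?

Grok: If a serving is 3 slices and contains 60 calories, then 5 slices contain approximately 100 calories. To calculate this, first determine the calories per slice: 60 calories / 3 slices = 20 calories per slice. Then multiply the calories per slice by the number of slices: 20 calories per slice * 5 slices = 100 calories.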
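Grok's answer is a unit-rate calculation: divide by the serving size, then scale. A minimal sketch of that arithmetic (the function name `calories_for_slices` is hypothetical):

```python
def calories_for_slices(serving_calories: float, serving_slices: int, slices: int) -> float:
    """Scale a per-serving calorie count to an arbitrary number of slices."""
    per_slice = serving_calories / serving_slices  # unit rate: calories per slice
    return per_slice * slices


# 60 calories per 3-slice serving -> 20 per slice -> 100 for 5 slices
print(calories_for_slices(60, 3, 5))  # prints 100.0
```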

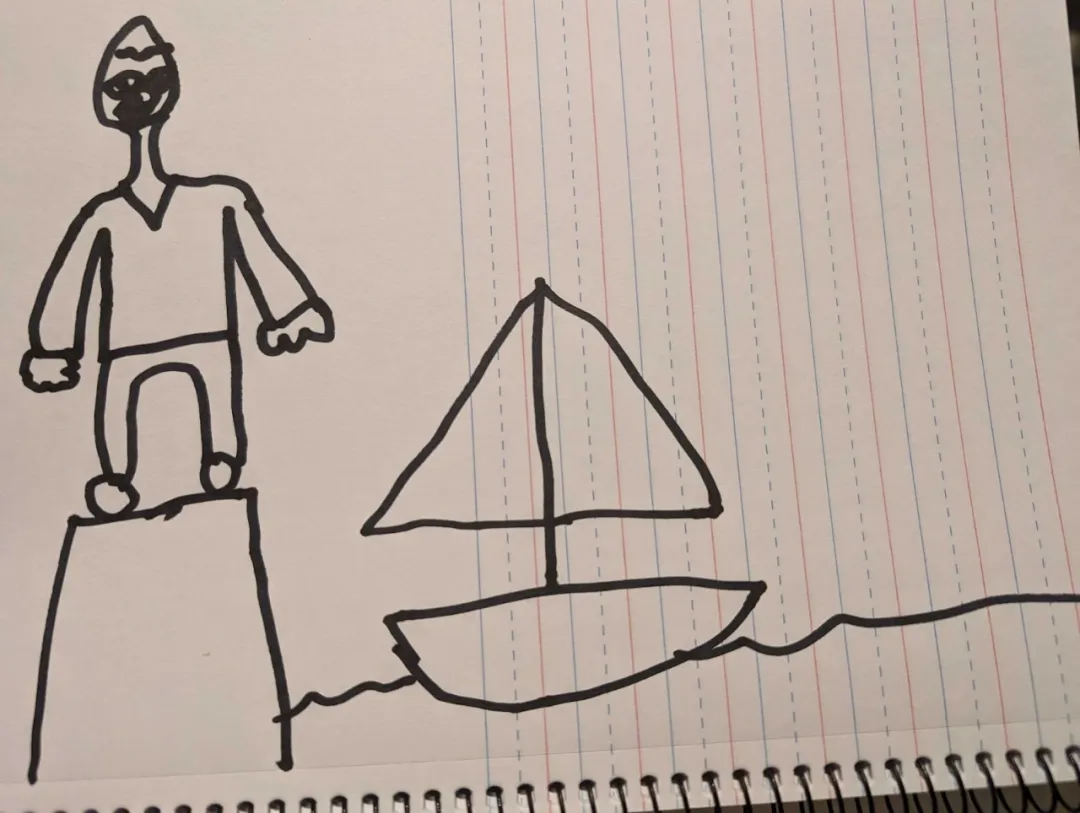

The third example turns a sketch into a bedtime story:

Prompt: My son drew this, can you tell a short bedtime story based on his drawing?

Grok:

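Once upon a time, in a land full of adventure and wonder, there lived a brave little boy named Timmy. Timmy lived with his family in a cozy little house, and his favorite thing to do was explore the world around him.

One sunny day, Timmy decided to go exploring in the nearby forest. He packed his favorite snacks, his compass, and his trusty notebook, ready to draw all the exciting things he would see.

As Timmy ventured deeper into the forest, he discovered a magical river that sparkled in the sunlight. The river was so enchanting that Timmy decided to make a little paper boat and send it floating downstream as a special message to the forest fairies.

Timmy carefully folded the paper boat and drew a smiley face on it, hoping to bring joy to the forest fairies. Then he set the boat on the river and watched it float away, carrying his friendship and his love of nature.

As the sun began to set, Timmy knew it was time to go home. He was grateful for the magical day he had spent in the forest, and he knew he would always remember this adventure.

Back home, Timmy shared his story with his family, who were delighted to hear about his magical day in the forest. They all agreed that Timmy's brave and kind spirit was a true inspiration, and they knew he had many more wonderful adventures ahead of him.

And so, with a heart full of love and a head full of dreams, Timmy drifted off to sleep, eager to discover what new adventures awaited him in the world of his imagination.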

The fourth example explains a meme:

Prompt: I don't get it, please explain

Grok:

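This image humorously compares startups with big companies. On the left side, labeled "Startups," a group of people are digging a hole together. They are all actively involved, and the hole is already quite deep.

On the right side, labeled "Big companies," a group of people are standing around a hole. However, unlike the startup, only one person is actually digging, while everyone else is watching or doing something else.

The humor comes from exaggerating the differences between startups and big companies. At a startup, there is often a sense of urgency, and everyone is expected to contribute directly to the task at hand. Big companies, by contrast, are often portrayed as more bureaucratic, with team members less directly involved in each project.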

The fifth example converts a table into CSV format:

Prompt: Please convert this table to csv.

Grok:

```csv
medal,name,sport,event,date
1 gold,El Amin Chentouf,Athletics,Men's marathon - T13,11 September
1 gold,Mohamed Amguoun,Athletics,Men's 400m - T13,15 September
1 gold,Azeddine Nouiri,Athletics,Men's shot put - T34,15 September
2 silver,Mahdi Afri,Athletics,Men's 400m - T12,9 September
2 silver,El Amin Chentouf,Athletics,Men's 5000m - T13,15 September
3 bronze,Mahdi Afri,Athletics,Men's 200m - T12,17 September
3 bronze,Mohamed Lahna,Paratriathlon,Men's PT2,10 September
```

The CSV format does not support styling or formatting, so the information is presented as plain text.
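One way to confirm that output like this is well-formed CSV is to parse it with Python's standard `csv` module. The sketch below embeds the rows from Grok's answer and tallies the medals; the helper name `count_medals` is hypothetical.

```python
import csv
import io
from collections import Counter

# Rows from Grok's answer, with the header on the first line.
CSV_TEXT = """medal,name,sport,event,date
1 gold,El Amin Chentouf,Athletics,Men's marathon - T13,11 September
1 gold,Mohamed Amguoun,Athletics,Men's 400m - T13,15 September
1 gold,Azeddine Nouiri,Athletics,Men's shot put - T34,15 September
2 silver,Mahdi Afri,Athletics,Men's 400m - T12,9 September
2 silver,El Amin Chentouf,Athletics,Men's 5000m - T13,15 September
3 bronze,Mahdi Afri,Athletics,Men's 200m - T12,17 September
3 bronze,Mohamed Lahna,Paratriathlon,Men's PT2,10 September
"""


def count_medals(text: str) -> Counter:
    """Parse CSV text and count rows per medal type."""
    reader = csv.DictReader(io.StringIO(text))
    # The medal column holds values like "1 gold"; keep only the medal name.
    return Counter(row["medal"].split()[-1] for row in reader)


print(dict(count_medals(CSV_TEXT)))  # {'gold': 3, 'silver': 2, 'bronze': 2}
```

`csv.DictReader` keys each row by the header line, so a row with a missing or extra field would show up as `None` values or a `None` key rather than failing silently.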

The sixth example assesses whether wood is rotting:

Prompt: These holes are appearing around the screws in my deck. Is it rotten? Should I get my boards replaced?

Grok:

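The wood in your deck appears to have started rotting. This is indicated by the holes around the screws, which are a common sign of wood rot.

To ensure the safety and longevity of your deck, it is advisable to replace the affected boards. This will help prevent further damage and maintain the deck's structural integrity.

If you are unsure how to replace the boards, it may help to consult a professional deck builder or contractor. They will be able to assess the extent of the damage and give you expert advice on the best course of action.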

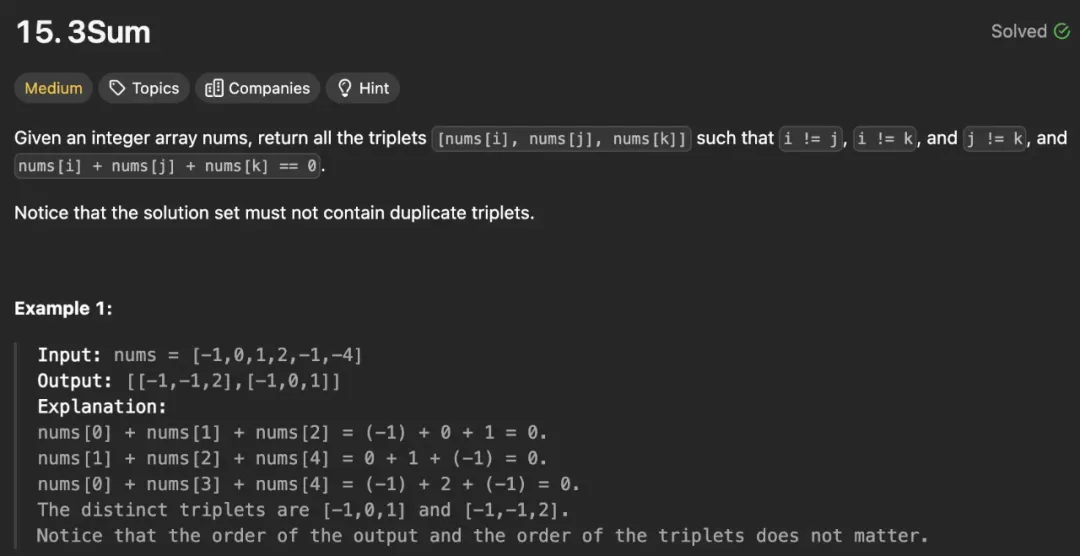

The seventh example solves a programming problem:

Prompt: Can you write Python code that solves this?

Grok replied as follows:

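```python
def find_triplets(nums):
    result = []
    nums.sort()
    for i in range(len(nums) - 2):
        # Skip duplicate values for the first element of the triplet
        if i > 0 and nums[i] == nums[i - 1]:
            continue
        target = -nums[i]
        left = i + 1
        right = len(nums) - 1
        # Two-pointer scan over the sorted remainder for pairs summing to target
        while left < right:
            total = nums[left] + nums[right]
            if total == target:
                result.append([nums[i], nums[left], nums[right]])
                # Skip duplicates for the second and third elements
                while left < right and nums[left] == nums[left + 1]:
                    left += 1
                while left < right and nums[right] == nums[right - 1]:
                    right -= 1
                left += 1
                right -= 1
            elif total < target:
                left += 1
            else:
                right -= 1
    return result
```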
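The prompt image is not reproduced here, but the name `find_triplets` and the line `target = -nums[i]` suggest the classic "3Sum" task: find all unique triplets that sum to zero. Assuming that reading, a brute-force reference like the one below (the name `triplets_brute_force` is hypothetical) is a handy way to cross-check a sorted two-pointer solution on small inputs. It runs in O(n³) time, versus O(n²) for the two-pointer scan.

```python
from itertools import combinations
from typing import List


def triplets_brute_force(nums: List[int]) -> List[List[int]]:
    """Return all unique zero-sum triplets by checking every combination.

    O(n^3); intended only as a reference for testing faster solutions.
    """
    seen = set()
    # Sorting first means each combination comes out in non-decreasing order,
    # so equal triplets map to the same tuple and are deduplicated by the set.
    for a, b, c in combinations(sorted(nums), 3):
        if a + b + c == 0:
            seen.add((a, b, c))
    return [list(t) for t in sorted(seen)]


print(triplets_brute_force([-1, 0, 1, 2, -1, -4]))  # [[-1, -1, 2], [-1, 0, 1]]
```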

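RealWorldQA benchmark

Another highlight of this release is a new benchmark from xAI, RealWorldQA.

Download: https://data.x.ai/realworldqa.zip

"Improving the model's understanding of the physical world is essential to developing useful real-world AI assistants." xAI hopes the benchmark will help evaluate the basic real-world spatial understanding of multimodal models. "While many of the examples in the current benchmark are relatively easy for humans, they often pose a challenge for frontier models."

RealWorldQA currently contains more than 700 images, each with a question and an easily verifiable answer.

In addition to other real-world images, the dataset includes anonymized images taken from vehicles, which may be good news for autonomous driving.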
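Because each RealWorldQA image comes with an easily verifiable answer, scoring can be as simple as normalized exact-match accuracy. The sketch below only illustrates that idea: it does not read the actual dataset files, and the record field `answer`, the normalization rules, and the function names are assumptions, not xAI's published evaluation protocol.

```python
from typing import Dict, List


def normalize(text: str) -> str:
    """Lowercase and trim whitespace and a trailing period (assumed rules)."""
    return text.strip().lower().rstrip(".")


def exact_match_accuracy(examples: List[Dict[str, str]], predictions: List[str]) -> float:
    """Fraction of predictions matching the reference answer after normalization."""
    if len(examples) != len(predictions):
        raise ValueError("need exactly one prediction per example")
    if not examples:
        return 0.0
    correct = sum(
        normalize(pred) == normalize(ex["answer"])
        for ex, pred in zip(examples, predictions)
    )
    return correct / len(examples)


# Made-up records for illustration; the real dataset's schema may differ.
examples = [
    {"question": "How many cars are visible?", "answer": "3"},
    {"question": "Is the traffic light green?", "answer": "No"},
]
print(exact_match_accuracy(examples, ["3", "yes"]))  # prints 0.5
```

Exact match only works when answers are short and unambiguous, which is the property the benchmark's "easily verifiable answers" are meant to provide.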

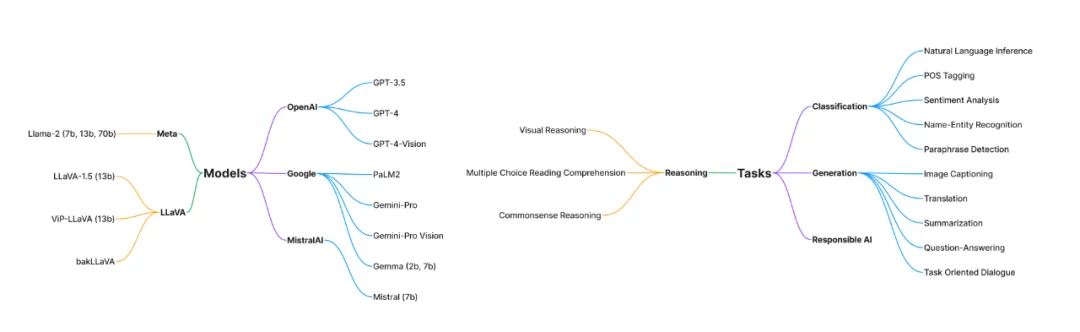

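Coincidentally, Microsoft Research recently updated MEGAVERSE, a benchmark spanning languages, modalities, models, and tasks. It contains 22 datasets covering 83 languages, including low-resource African languages. Microsoft also added two multimodal datasets to the benchmark and compared the performance of LLaVA models, GPT-4-Vision, and Gemini-Pro-Vision.

A few days ago, Meta also open-sourced OpenEQA, a benchmark dataset for measuring the embodied question answering abilities of AI systems. It contains more than 1,600 questions about more than 180 real-world environments, such as homes and offices, spanning seven categories. It comprehensively tests AI skills such as object and attribute recognition, spatial and functional reasoning, and commonsense knowledge, with the aim of deepening large models' understanding of the real world.

Even the most advanced AI models, such as GPT-4V, struggle to match human performance on OpenEQA.

Spurred by this research, we can look forward to more progress by large models on real-world tasks in 2024.

Reference: https://x.ai/blog/grok-1.5v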
