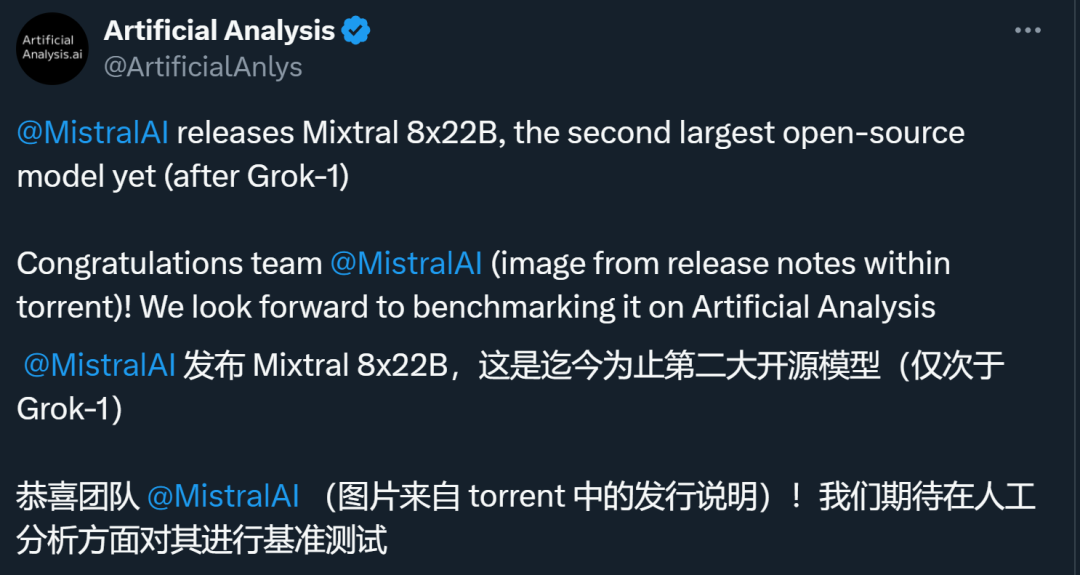

Mistral open-sources its 8x22B large model, OpenAI updates GPT-4 Turbo with Vision: they are all ganging up on Google

- 王林 (reposted)

- 2024-04-10 17:37:27, 1192 views

It really does look like everyone is closing in on Google at once!

Statement:

This article is reproduced from jiqizhixin.com. If there is any infringement, please contact admin@php.cn to have it removed.
