Is the 3D version of Sora coming? UMass, MIT and others propose 3D world models, and embodied intelligent robots achieve new milestones

In recent research, the input to vision-language-action (VLA) models has been mostly 2D data, without integrating the more general 3D physical world.

In addition, existing models predict actions by learning a direct mapping from perception to action, ignoring the dynamics of the world and the relationship between actions and dynamics.

In contrast, humans rely on world models when they think: they imagine future scenarios and plan their next actions accordingly.

To this end, researchers from the University of Massachusetts Amherst, MIT and other institutions have proposed 3D-VLA, a new class of embodied foundation models in which a generative world model seamlessly connects 3D perception, reasoning and action.

Project homepage: https://vis-www.cs.umass.edu/3dvla/

Paper address: https://arxiv.org/abs/2403.09631

Specifically, 3D-VLA is built on a 3D-based large language model (LLM) and introduces a set of interaction tokens to engage with the embodied environment.

The research team trained a series of embodied diffusion models and aligned them with the LLM to predict goal images and point clouds, injecting generative capabilities into the model.

To train the 3D-VLA model, the researchers extracted a large amount of 3D-related information from existing robot datasets and constructed a large-scale 3D embodied instruction dataset.

The results show that 3D-VLA performs well on reasoning, multi-modal generation and planning tasks in embodied environments, which highlights its potential value in real-world applications.

3D Embodied Instruction Tuning Dataset

Thanks to billion-scale datasets on the Internet, VLMs deliver excellent performance across many tasks, and million-scale video-action datasets have likewise laid the foundation for embodied VLMs for robot control.

However, most current datasets do not provide enough depth or 3D annotations, or sufficiently precise control, for robot manipulation, which requires 3D spatial reasoning and interaction to be part of the data. Without 3D information, it is difficult for robots to understand and execute instructions that require 3D spatial reasoning, such as "Put the farthest cup in the middle drawer."
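To make the "farthest cup" example concrete, the following minimal sketch shows why such an instruction needs 3D information: once each object has a 3D position, picking the farthest one is a simple distance comparison, which a single 2D image cannot reliably provide. The scene dictionary, the object labels and the `farthest` helper are all hypothetical and are not part of 3D-VLA.

```python
import math

# Hypothetical scene: object label -> 3D bounding-box centre (x, y, z) in metres,
# expressed in the camera frame. All values are made up for illustration.
scene = {
    "cup_a": (0.10, 0.00, 0.60),
    "cup_b": (-0.25, 0.05, 1.40),
    "cup_c": (0.30, -0.02, 0.95),
    "drawer_middle": (0.00, -0.30, 1.10),
}

def farthest(scene, prefix, origin=(0.0, 0.0, 0.0)):
    """Return the label starting with `prefix` whose centre is farthest from `origin`."""
    candidates = {k: v for k, v in scene.items() if k.startswith(prefix)}
    return max(candidates, key=lambda k: math.dist(candidates[k], origin))

target = farthest(scene, "cup")
print(target)  # cup_b
```

In a learned model this comparison is implicit rather than hand-coded; the sketch only illustrates the kind of geometric information the instruction depends on.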

To bridge this gap, the researchers constructed a large-scale 3D instruction tuning dataset that provides sufficient 3D-related information, paired with the corresponding text instructions, to train the model.

The researchers designed a pipeline to extract 3D-language-action pairs from existing embodied datasets, obtaining annotations for point clouds, depth maps, 3D bounding boxes, the robot's 7D actions, and text descriptions.
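One step such a pipeline typically needs is turning a depth map into a point cloud. The sketch below shows the standard pinhole back-projection in plain Python; it is an illustration under assumed camera intrinsics (`fx`, `fy`, `cx`, `cy`), not the authors' actual extraction code, and the function name is hypothetical.

```python
def depth_to_point_cloud(depth, fx, fy, cx, cy):
    """Back-project a depth map (rows of depths in metres) into (x, y, z) points.

    Assumes a pinhole camera model; pixels with non-positive depth are skipped.
    """
    points = []
    for v, row in enumerate(depth):        # v: pixel row index
        for u, z in enumerate(row):        # u: pixel column index
            if z <= 0:
                continue                   # no valid depth measurement
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points

# Tiny 2x2 depth map with one invalid pixel; all values are made up.
depth = [
    [1.0, 1.0],
    [0.0, 2.0],
]
cloud = depth_to_point_cloud(depth, fx=2.0, fy=2.0, cx=0.5, cy=0.5)
print(len(cloud))  # 3
```

Real pipelines would usually vectorize this with an array library and take the intrinsics from the dataset's calibration files.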

3D-VLA base model

3D-VLA is a world model for 3D reasoning, goal generation and decision-making in embodied environments.

The backbone is first built on top of 3D-LLM, and the model's ability to interact with the 3D world is further enhanced by adding a series of interaction tokens. Then, by pre-training diffusion models and using a projector to align the LLM with them, goal generation capability is injected into 3D-VLA.

Backbone network

In the first stage, the researchers developed the 3D-VLA base model following the 3D-LLM approach. Because the collected dataset does not reach the billion-level scale required to train a multi-modal LLM from scratch, multi-view features are used to generate 3D scene features, so that the visual features can be seamlessly integrated into a pre-trained VLM without adaptation.

At the same time, the training data of 3D-LLM mainly covers objects and indoor scenes, which do not align directly with embodied settings, so the researchers chose BLIP2-FlanT5XL as the pre-trained model instead.

During training, the input and output embeddings for the tokens and the weights of the Q-Former are unfrozen.

Interaction tokens

To enhance the model's understanding of 3D scenes and of interactions in the environment, the researchers introduced a new set of interaction tokens.

First, object tokens are added to the input, including object nouns in parsed sentences (such as

Secondly, in order to better express spatial information in language, the researchers designed a set of location tokens

Third, in order to better perform dynamic encoding,

The architecture is further extended with a set of specialized tokens that represent robot actions. The robot's action has 7 degrees of freedom, and discrete tokens are used to represent it.
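As a rough illustration of how a continuous 7-DoF action can be mapped to discrete tokens, the sketch below bins position and rotation values uniformly and treats the gripper as binary. The token names (`[pos_k]`, `[rot_k]`, `[grip_k]`), the bin count of 256 and the value ranges are assumptions made for this example and should not be read as the vocabulary 3D-VLA actually uses.

```python
import math

NUM_BINS = 256  # assumed bin count for this sketch

def to_bin(value, low, high, num_bins=NUM_BINS):
    """Map a continuous value in [low, high] to an integer bin in [0, num_bins - 1]."""
    clipped = min(max(value, low), high)
    return min(int((clipped - low) / (high - low) * num_bins), num_bins - 1)

def action_to_tokens(action, pos_range=(-1.0, 1.0), rot_range=(-math.pi, math.pi)):
    """Convert (x, y, z, roll, pitch, yaw, gripper) into hypothetical token strings."""
    x, y, z, roll, pitch, yaw, gripper = action
    tokens = [f"[pos_{to_bin(v, *pos_range)}]" for v in (x, y, z)]
    tokens += [f"[rot_{to_bin(v, *rot_range)}]" for v in (roll, pitch, yaw)]
    tokens.append(f"[grip_{1 if gripper > 0.5 else 0}]")  # open/closed gripper
    return tokens

tokens = action_to_tokens((0.0, -1.0, 1.0, 0.0, 0.0, 0.0, 1.0))
print(tokens)
```

Discretizing actions this way lets a language model emit them with the same next-token mechanism it uses for text, at the cost of a quantization error bounded by the bin width.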

Inject goal generation capabilities

Humans can pre-visualize the final state of a scene to improve the accuracy of action prediction and decision-making, which is also a key aspect of building a world model. In preliminary experiments, the researchers also found that providing a realistic final state enhances the model's reasoning and planning capabilities.

However, training an MLLM to generate images, depth maps and point clouds is not straightforward:

First, video diffusion models are not tailor-made for embodied scenes. For example, when Runway generates future frames for "open drawer", the scene suffers from view changes, object deformation, odd texture replacement and layout distortion.

Moreover, how to integrate diffusion models of different modalities into a single foundation model remains an open problem.

The new framework proposed by the researchers therefore first pre-trains embodied diffusion models for different modalities such as images, depth and point clouds, and then, in an alignment stage, aligns the decoders of these diffusion models to the embedding space of 3D-VLA.

Experimental results

3D-VLA is a versatile, 3D-based generative world model that can reason and localize in the 3D world, imagine multi-modal goal content, and generate actions for robot manipulation. The researchers evaluated 3D-VLA mainly along three aspects: 3D reasoning and localization, multi-modal goal generation, and embodied action planning.

3D Reasoning and Localization

3D-VLA outperforms all 2D VLM methods on language reasoning tasks. The researchers attributed this to its use of 3D information, which provides more accurate spatial information for reasoning.

In addition, since the dataset contains a set of 3D localization annotations, 3D-VLA learns to localize relevant objects, which helps the model focus on key objects when reasoning.

The researchers found that 3D-LLM performed poorly on these robotic reasoning tasks, demonstrating the necessity of collecting and training on robotics-related 3D datasets.

3D-VLA also performed significantly better than the 2D baselines in localization. This provides convincing evidence that the annotation process is effective in helping the model acquire strong 3D localization capabilities.

Multi-modal target generation

Compared with existing generation methods transferred zero-shot to the robotics domain, 3D-VLA achieves better results on most metrics, which confirms the importance of training world models on datasets specifically designed for robotic applications.

Even in direct comparison with Instruct-P2P*, 3D-VLA consistently performs better. The results suggest that integrating a large language model into 3D-VLA allows robot manipulation instructions to be understood more comprehensively and deeply, thereby improving goal image generation.

Additionally, a slight performance drop can be observed when the predicted bounding boxes are excluded from the input prompt. This confirms the effectiveness of intermediate predicted bounding boxes: they help the model understand the entire scene and allocate more attention to the specific objects mentioned in a given instruction, ultimately enhancing its ability to imagine the final goal image.

In the comparison of point cloud generation results, 3D-VLA with intermediate predicted bounding boxes performs best, confirming the importance of combining a large language model with precise object localization when understanding instructions and scenes.

Embodied Action Planning

3D-VLA exceeds the baseline models on most RLBench action prediction tasks, demonstrating its planning capability.

It is worth noting that the baseline models rely on historical observations, object states and current state information, whereas 3D-VLA executes through open-loop control only.
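The distinction matters because open-loop execution is the harder setting: actions are planned once and never corrected. The toy one-dimensional sketch below contrasts the two modes under a constant disturbance; the `plan` helper and the disturbance model are hypothetical and have nothing to do with the RLBench setup itself.

```python
def plan(start, goal, steps):
    """Hypothetical planner: equal-sized moves from start to goal."""
    delta = (goal - start) / steps
    return [delta] * steps

def run_open_loop(start, goal, steps, disturbance=0.0):
    """Plan once from the initial state, then execute without re-observing."""
    state = start
    for action in plan(start, goal, steps):
        state += action + disturbance      # accumulated error is never corrected
    return state

def run_closed_loop(start, goal, steps, disturbance=0.0):
    """Re-plan from the currently observed state before every step."""
    state = start
    for remaining in range(steps, 0, -1):
        action = plan(state, goal, remaining)[0]
        state += action + disturbance
    return state

open_result = run_open_loop(0.0, 1.0, steps=4, disturbance=0.05)
closed_result = run_closed_loop(0.0, 1.0, steps=4, disturbance=0.05)
print(round(open_result, 2), round(closed_result, 2))  # 1.2 1.05
```

In this toy case the open-loop run accumulates the disturbance over all four steps, while the closed-loop run only carries the error from the final step.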

In addition, the model's generalization ability was demonstrated on the pick-up-cup task, and 3D-VLA also achieved better results on CALVIN. The researchers attributed this advantage to the ability to localize objects of interest and imagine goal states, which provides rich information for inferring actions.

The above is the detailed content of Is the 3D version of Sora coming? UMass, MIT and others propose 3D world models, and embodied intelligent robots achieve new milestones. For more information, please follow other related articles on the PHP Chinese website!

Meta's New AI Assistant: Productivity Booster Or Time Sink?May 01, 2025 am 11:18 AM

Meta's New AI Assistant: Productivity Booster Or Time Sink?May 01, 2025 am 11:18 AMMeta has joined hands with partners such as Nvidia, IBM and Dell to expand the enterprise-level deployment integration of Llama Stack. In terms of security, Meta has launched new tools such as Llama Guard 4, LlamaFirewall and CyberSecEval 4, and launched the Llama Defenders program to enhance AI security. In addition, Meta has distributed $1.5 million in Llama Impact Grants to 10 global institutions, including startups working to improve public services, health care and education. The new Meta AI application powered by Llama 4, conceived as Meta AI

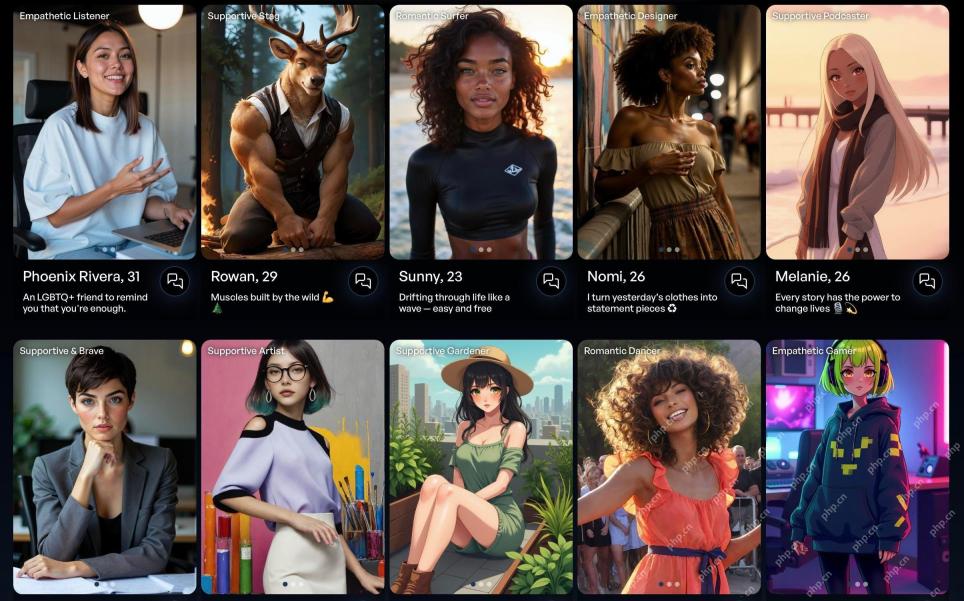

80% Of Gen Zers Would Marry An AI: StudyMay 01, 2025 am 11:17 AM

80% Of Gen Zers Would Marry An AI: StudyMay 01, 2025 am 11:17 AMJoi AI, a company pioneering human-AI interaction, has introduced the term "AI-lationships" to describe these evolving relationships. Jaime Bronstein, a relationship therapist at Joi AI, clarifies that these aren't meant to replace human c

AI Is Making The Internet's Bot Problem Worse. This $2 Billion Startup Is On The Front LinesMay 01, 2025 am 11:16 AM

AI Is Making The Internet's Bot Problem Worse. This $2 Billion Startup Is On The Front LinesMay 01, 2025 am 11:16 AMOnline fraud and bot attacks pose a significant challenge for businesses. Retailers fight bots hoarding products, banks battle account takeovers, and social media platforms struggle with impersonators. The rise of AI exacerbates this problem, rende

Selling To Robots: The Marketing Revolution That Will Make Or Break Your BusinessMay 01, 2025 am 11:15 AM

Selling To Robots: The Marketing Revolution That Will Make Or Break Your BusinessMay 01, 2025 am 11:15 AMAI agents are poised to revolutionize marketing, potentially surpassing the impact of previous technological shifts. These agents, representing a significant advancement in generative AI, not only process information like ChatGPT but also take actio

How Computer Vision Technology Is Transforming NBA Playoff OfficiatingMay 01, 2025 am 11:14 AM

How Computer Vision Technology Is Transforming NBA Playoff OfficiatingMay 01, 2025 am 11:14 AMAI's Impact on Crucial NBA Game 4 Decisions Two pivotal Game 4 NBA matchups showcased the game-changing role of AI in officiating. In the first, Denver's Nikola Jokic's missed three-pointer led to a last-second alley-oop by Aaron Gordon. Sony's Haw

How AI Is Accelerating The Future Of Regenerative MedicineMay 01, 2025 am 11:13 AM

How AI Is Accelerating The Future Of Regenerative MedicineMay 01, 2025 am 11:13 AMTraditionally, expanding regenerative medicine expertise globally demanded extensive travel, hands-on training, and years of mentorship. Now, AI is transforming this landscape, overcoming geographical limitations and accelerating progress through en

Key Takeaways From Intel Foundry Direct Connect 2025May 01, 2025 am 11:12 AM

Key Takeaways From Intel Foundry Direct Connect 2025May 01, 2025 am 11:12 AMIntel is working to return its manufacturing process to the leading position, while trying to attract fab semiconductor customers to make chips at its fabs. To this end, Intel must build more trust in the industry, not only to prove the competitiveness of its processes, but also to demonstrate that partners can manufacture chips in a familiar and mature workflow, consistent and highly reliable manner. Everything I hear today makes me believe Intel is moving towards this goal. The keynote speech of the new CEO Tan Libo kicked off the day. Tan Libai is straightforward and concise. He outlines several challenges in Intel’s foundry services and the measures companies have taken to address these challenges and plan a successful route for Intel’s foundry services in the future. Tan Libai talked about the process of Intel's OEM service being implemented to make customers more

AI Gone Wrong? Now There's Insurance For ThatMay 01, 2025 am 11:11 AM

AI Gone Wrong? Now There's Insurance For ThatMay 01, 2025 am 11:11 AMAddressing the growing concerns surrounding AI risks, Chaucer Group, a global specialty reinsurance firm, and Armilla AI have joined forces to introduce a novel third-party liability (TPL) insurance product. This policy safeguards businesses against

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Atom editor mac version download

The most popular open source editor

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft

WebStorm Mac version

Useful JavaScript development tools

MantisBT

Mantis is an easy-to-deploy web-based defect tracking tool designed to aid in product defect tracking. It requires PHP, MySQL and a web server. Check out our demo and hosting services.

Zend Studio 13.0.1

Powerful PHP integrated development environment