The world's first Sora-like open source reproduction solution is here! Full disclosure of all training details and model weights

The world's first open source video generation model with a Sora-like architecture is here!

The entire training pipeline is open: data processing, all training details, and the model weights.

This is the newly released Open-Sora 1.0.

Here is what it can do. It can generate bustling traffic in a city at night.

It can also render an aerial view of a cliff coast, with seawater lapping against the rocks.

Or a vast starry sky in time-lapse.

Since Sora's release, reverse-engineering and reproducing Sora has become one of the most discussed topics in the developer community, owing to its striking results and the scarcity of technical details. For example, the Colossal-AI team released a Sora training and inference replication pipeline that it says cuts costs by 46%.

Just two weeks later, the team has released its latest progress: a reproduction of a Sora-like solution, with the technical approach and detailed tutorials open-sourced for free on GitHub.

So the question is: how do you reproduce Sora?

Open-Sora open source address: https://github.com/hpcaitech/Open-Sora

A full walkthrough of the Sora reproduction plan

The Sora reproduction plan covers four aspects:

- Model architecture design

- Training reproduction plan

- Data preprocessing

- Efficient training optimization strategy

Model Architecture Design

The model adopts the Diffusion Transformer (DiT) architecture, the same architecture family that Sora uses.

It builds on PixArt-α, a high-quality open source text-to-image model that uses the DiT architecture. On this basis, it adds a temporal attention layer and extends the model to video data.

Specifically, the full architecture consists of a pre-trained VAE, a text encoder, and STDiT (Spatial Temporal Diffusion Transformer), a model that uses a spatial-temporal attention mechanism.

The structure of each STDiT layer is shown in the figure below.

Each layer stacks a one-dimensional temporal attention module serially after a two-dimensional spatial attention module to model temporal relationships. After the temporal attention module, a cross-attention module aligns the features with the semantics of the text.
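
The grouping behind this serial design can be sketched in a few lines. This is a minimal illustration, not code from the Open-Sora repository: the function names and the `(frame, patch)` token labels are hypothetical, and the layer order lists only the three sub-modules named in the text.

```python
from typing import List, Tuple

Token = Tuple[int, int]  # hypothetical label: (frame index, patch index)


def spatial_groups(num_frames: int, patches_per_frame: int) -> List[List[Token]]:
    """Spatial attention: tokens attend only within their own frame."""
    return [[(t, s) for s in range(patches_per_frame)] for t in range(num_frames)]


def temporal_groups(num_frames: int, patches_per_frame: int) -> List[List[Token]]:
    """Temporal attention: tokens attend only across frames at one patch position."""
    return [[(t, s) for t in range(num_frames)] for s in range(patches_per_frame)]


def stdit_layer_order() -> List[str]:
    """Order of the sub-modules named in the text for one STDiT layer."""
    return ["spatial_attention", "temporal_attention", "cross_attention"]


if __name__ == "__main__":
    # Two frames with three patches each.
    print(spatial_groups(2, 3))   # one group per frame
    print(temporal_groups(2, 3))  # one group per patch position
    print(stdit_layer_order())
```

Every token appears in exactly one spatial group and one temporal group, so running the two modules one after the other lets information travel between any two tokens in the video.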

Compared with the full attention mechanism, such a structure greatly reduces training and inference overhead.
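
A rough way to see where the saving comes from is to count the query-key pairs that each scheme has to score. The sketch below counts attention pairs only; it is not a benchmark, and the example sizes (16 frames, 256 patches per frame) are assumptions chosen for illustration.

```python
def full_attention_pairs(num_frames: int, patches_per_frame: int) -> int:
    """Every token attends to every other token in the whole video."""
    num_tokens = num_frames * patches_per_frame
    return num_tokens * num_tokens


def factorized_attention_pairs(num_frames: int, patches_per_frame: int) -> int:
    """Spatial attention inside each frame plus temporal attention per patch position."""
    spatial = num_frames * patches_per_frame ** 2
    temporal = patches_per_frame * num_frames ** 2
    return spatial + temporal


if __name__ == "__main__":
    frames, patches = 16, 256  # assumed sizes, for illustration only
    full = full_attention_pairs(frames, patches)
    factorized = factorized_attention_pairs(frames, patches)
    print(full, factorized, round(full / factorized, 2))
```

The ratio simplifies to T·S / (T + S) for T frames and S patches per frame, so the gap widens as either dimension grows. Measured speed-ups will be smaller than this ratio, because attention is only one part of the compute in each layer.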

Compared with the Latte model, which also uses the spatial-temporal attention mechanism, STDiT can better utilize the weights of pre-trained image DiT to continue training on video data.

△STDiT structure diagram

The training and inference process of the entire model is as follows.

In the training stage, a pre-trained Variational Autoencoder (VAE) encoder first compresses the video data, and the STDiT diffusion model is then trained in the compressed latent space together with the text embeddings.

In the inference stage, Gaussian noise is randomly sampled in the VAE latent space and fed into STDiT together with the prompt embedding to obtain denoised features, which are finally passed to the VAE decoder to decode the video.
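
The control flow of the inference stage can be sketched as follows. This is a toy under stated assumptions: `generate_video`, `toy_denoiser`, and `toy_decoder` are hypothetical stand-ins, latents are flat lists of floats, and the update rule is a placeholder rather than the sampler Open-Sora actually uses.

```python
import random
from typing import Callable, List

Latent = List[float]


def generate_video(
    denoiser: Callable[[Latent, List[float], int], Latent],
    decoder: Callable[[Latent], List[float]],
    prompt_embedding: List[float],
    latent_size: int,
    num_steps: int,
    seed: int = 0,
) -> List[float]:
    rng = random.Random(seed)
    # 1. Sample Gaussian noise in the (VAE) latent space.
    latent = [rng.gauss(0.0, 1.0) for _ in range(latent_size)]
    # 2. Denoise step by step, conditioned on the prompt embedding.
    for step in reversed(range(num_steps)):
        predicted_noise = denoiser(latent, prompt_embedding, step)
        # Placeholder update: remove a fraction of the predicted noise.
        latent = [x - n / num_steps for x, n in zip(latent, predicted_noise)]
    # 3. Decode the denoised latent back to pixel space.
    return decoder(latent)


def toy_denoiser(latent: Latent, prompt_embedding: List[float], step: int) -> Latent:
    # Stand-in for STDiT: pretends the whole latent is noise.
    return list(latent)


def toy_decoder(latent: Latent) -> List[float]:
    # Stand-in for the VAE decoder.
    return [2.0 * x for x in latent]


if __name__ == "__main__":
    video = generate_video(toy_denoiser, toy_decoder, [0.1, 0.2], latent_size=8, num_steps=10)
    print(len(video))
```

In a real pipeline the denoiser would be the trained STDiT network and the decoder the pre-trained VAE decoder; only the three-step shape of the loop is taken from the description above.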

△Model training process

Training reproduction plan

For the training recipe, Open-Sora follows the approach of Stable Video Diffusion (SVD).

It is divided into three stages:

- Large-scale image pre-training.

- Large-scale video pre-training.

- Fine-tuning on high-quality video data.

Each stage continues training from the weights of the previous stage.

Compared with single-stage training from scratch, multi-stage training achieves the goal of high-quality video generation more efficiently by gradually expanding data.

△The three stages of the training plan

The first stage is large-scale image pre-training.

The team used the abundant image data and text-to-image techniques available on the Internet to first train a high-quality text-to-image model, and used this model as the initialization weights for the next stage, video pre-training.

At the same time, since no high-quality spatio-temporal VAE is currently available, they use the image VAE pre-trained for Stable Diffusion.
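
Because an image VAE encodes each frame independently, it compresses only the spatial dimensions and leaves the frame count unchanged. The sketch below computes the resulting latent shape, assuming the usual Stable Diffusion VAE configuration of 8x spatial downsampling and 4 latent channels; `latent_shape` is a hypothetical helper, not a function from the repository.

```python
from typing import Tuple


def latent_shape(
    num_frames: int,
    height: int,
    width: int,
    downsample: int = 8,       # assumed: spatial downsampling factor of the SD image VAE
    latent_channels: int = 4,  # assumed: latent channels of the SD image VAE
) -> Tuple[int, int, int, int]:
    """Return (frames, channels, latent_height, latent_width) for per-frame encoding."""
    if height % downsample or width % downsample:
        raise ValueError("height and width must be multiples of the downsampling factor")
    return (num_frames, latent_channels, height // downsample, width // downsample)


if __name__ == "__main__":
    # A 64-frame clip at 512x512 resolution.
    print(latent_shape(64, 512, 512))
```

Under these assumptions a 64-frame 512x512 clip becomes a 64 x 4 x 64 x 64 latent: every frame shrinks, but the number of frames does not, which is one reason long videos remain expensive without a spatio-temporal VAE.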

This not only ensures the superior performance of the initial model, but also significantly reduces the overall cost of video pre-training.

The second stage is large-scale video pre-training.

This stage mainly improves the model's generalization ability and lets it effectively capture the temporal correlations in video.

It requires training on a large amount of video data, and the video material must be diverse.

The second-stage model adds a temporal attention module to the first-stage text-to-image model to learn temporal relationships in videos. The remaining modules stay the same as in the first stage and load the first-stage weights as initialization, while the output of the temporal attention module is initialized to zero for more efficient and faster convergence.
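
Why zero initialization helps can be shown with a tiny example. The sketch assumes the temporal module sits on a residual branch, as is standard in Transformer blocks; `temporal_branch`, `mix`, and `out_proj` are hypothetical names, and `mix` is a plain matrix standing in for the attention computation.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def linear(weights: Matrix, x: Vector) -> Vector:
    """Matrix-vector product."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


def zeros(n: int) -> Matrix:
    """An n x n matrix of zeros."""
    return [[0.0] * n for _ in range(n)]


def temporal_branch(x: Vector, mix: Matrix, out_proj: Matrix) -> Vector:
    """Residual branch: x + out_proj(mix(x)). `mix` stands in for temporal attention."""
    hidden = linear(mix, x)
    update = linear(out_proj, hidden)
    return [a + b for a, b in zip(x, update)]


if __name__ == "__main__":
    features = [1.0, -2.0, 3.0]
    mix = [[0.5, 0.1, 0.0], [0.2, 0.3, 0.4], [0.9, 0.0, 0.1]]  # arbitrary untrained weights
    # With a zero-initialized output projection the branch is an identity map.
    print(temporal_branch(features, mix, zeros(3)) == features)
```

With the output projection at zero, the new module contributes nothing at the first training step, so the second-stage model starts out behaving exactly like the pre-trained image model on each frame and learns temporal mixing gradually from there.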

The Colossal-AI team used PixArt-alpha's open source weights to initialize the second-stage STDiT model, and the T5 model as the text encoder. They pre-trained at a low resolution of 256x256, which further sped up convergence and reduced training costs.

△Open-Sora generation result (prompt: shot of the underwater world, a turtle swimming leisurely among the coral reefs)

The third stage is fine-tuning on high-quality video data.

According to the team, this stage significantly improves generation quality. The amount of data used is an order of magnitude smaller than in the previous stage, but the videos are longer, higher in resolution, and higher in quality.

Fine-tuning in this way efficiently scales video generation from short to long, from low resolution to high resolution, and from low fidelity to high fidelity.

It is worth mentioning that Colossal-AI also disclosed the resource usage of each stage in detail.

In reproducing Open-Sora, they trained on 64 H800 GPUs. The second stage took 2,808 GPU hours in total, approximately US$7,000, and the third stage took 1,920 GPU hours, approximately US$4,500. By a preliminary estimate, the training plan kept the whole Open-Sora reproduction to about US$10,000.
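
The quoted figures can be turned into wall-clock time and an implied hourly rate with simple arithmetic. The sketch below uses only the numbers stated above; the wall-clock estimate assumes all 64 GPUs were busy for the whole stage, which the article does not confirm, and `summarize` is a hypothetical helper.

```python
from typing import Dict, List

NUM_GPUS = 64
STAGES: Dict[str, Dict[str, float]] = {
    "stage 2 (video pre-training)": {"gpu_hours": 2808, "cost_usd": 7000},
    "stage 3 (fine-tuning)": {"gpu_hours": 1920, "cost_usd": 4500},
}


def summarize(stages: Dict[str, Dict[str, float]], num_gpus: int) -> List[Dict[str, object]]:
    """Derive wall-clock hours and the implied price per GPU hour for each stage."""
    rows = []
    for name, stage in stages.items():
        rows.append({
            "stage": name,
            # Assumes every GPU runs for the full duration of the stage.
            "wall_clock_hours": stage["gpu_hours"] / num_gpus,
            "usd_per_gpu_hour": stage["cost_usd"] / stage["gpu_hours"],
        })
    return rows


total_gpu_hours = sum(stage["gpu_hours"] for stage in STAGES.values())
total_cost = sum(stage["cost_usd"] for stage in STAGES.values())

if __name__ == "__main__":
    for row in summarize(STAGES, NUM_GPUS):
        print(row)
    print(total_gpu_hours, total_cost)
```

Under that assumption the second stage corresponds to roughly 44 hours of wall-clock time and the third to 30 hours, at an implied rate of about US$2.3 to US$2.5 per GPU hour. The two quoted stage costs add up to US$11,500, so the "about US$10,000" figure is best read as a rough estimate.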

Data Preprocessing

To further lower the barrier to entry and the complexity of reproducing Sora, the Colossal-AI team also provides convenient video data preprocessing scripts in the code repository, so that anyone can easily start pre-training for a Sora reproduction.

The scripts cover downloading public video datasets, segmenting long videos into short clips based on shot continuity, and using the open source large language model LLaVA to generate precise prompts.
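
The shot-based segmentation step can be illustrated with a simple threshold rule. This is a sketch of the general idea, not the repository's script: it assumes a per-frame dissimilarity score is already available, and `split_into_clips`, `threshold`, and `min_len` are hypothetical names.

```python
from typing import List, Tuple


def split_into_clips(
    frame_diffs: List[float],
    threshold: float,
    min_len: int = 1,
) -> List[Tuple[int, int]]:
    """Split a video into clips at shot boundaries.

    frame_diffs[i] is the dissimilarity between frame i and frame i + 1.
    Returns (start, end) frame ranges with `end` exclusive; clips shorter
    than `min_len` frames are discarded.
    """
    num_frames = len(frame_diffs) + 1
    clips: List[Tuple[int, int]] = []
    start = 0
    for i, diff in enumerate(frame_diffs):
        if diff > threshold:  # large jump between frames: treat as a shot change
            end = i + 1
            if end - start >= min_len:
                clips.append((start, end))
            start = end
    if num_frames - start >= min_len:
        clips.append((start, num_frames))
    return clips


if __name__ == "__main__":
    diffs = [0.1, 0.2, 0.9, 0.1, 0.8, 0.1]  # made-up scores for a 7-frame video
    print(split_into_clips(diffs, threshold=0.5))
    print(split_into_clips(diffs, threshold=0.5, min_len=3))
```

Each resulting clip stays within a single shot, so a caption generated for it describes one continuous scene rather than a cut between unrelated ones.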

The batch video captioning code they provide can annotate a video in 3 seconds using two GPUs, with quality close to GPT-4V.

The resulting video/text pairs can be used directly for training. With the open source code they provide on GitHub, you can quickly generate the video/text pairs required for training from your own dataset, which significantly lowers the technical barrier and the preparation needed to start a Sora replication project.

Efficient training support

In addition, the Colossal-AI team also provides a training acceleration solution.

Through efficient training strategies such as operator optimization and hybrid parallelism, a 1.55x speed-up was achieved when training on 64-frame videos at 512x512 resolution.

At the same time, thanks to Colossal-AI's heterogeneous memory management system, a training task on 1-minute 1080p high-definition video can run without issue on a single server (8 × H800).

The team also found that the STDiT model architecture itself is highly efficient during training.

Compared with DiT, which uses a full attention mechanism, STDiT achieves a speed-up of up to 5x as the number of frames increases, which is particularly important in real-world tasks such as processing long video sequences.

Finally, the team also released more Open-Sora generation effects.

The team told Qubits that it will keep updating and optimizing Open-Sora and related solutions over the long term. In the future, it plans to use more video training data to generate higher-quality, longer videos and to support multiple resolutions.

In terms of practical applications, the team said it will promote adoption in film, games, advertising, and other fields.

Interested developers can visit the GitHub project to learn more.

Open-Sora open source address: https://github.com/hpcaitech/Open-Sora

Reference links:

[1] Scalable Diffusion Models with Transformers. https://arxiv.org/abs/2212.09748

[2] PixArt-α: Fast Training of Diffusion Transformer for Photorealistic Text-to-Image Synthesis. https://arxiv.org/abs/2310.00426

[3] Stable Video Diffusion: Scaling Latent Video Diffusion Models to Large Datasets. https://arxiv.org/abs/2311.15127

[4] Latte: Latent Diffusion Transformer for Video Generation. https://arxiv.org/abs/2401.03048

[5] https://huggingface.co/stabilityai/sd-vae-ft-mse-original

[6] https://github.com/google-research/text-to-text-transfer-transformer

[7] https://github.com/haotian-liu/LLaVA

[8] https://hpc-ai.com/blog/open-sora-v1.0

The above is the detailed content of The world's first Sora-like open source reproduction solution is here! Full disclosure of all training details and model weights. For more information, please follow other related articles on the PHP Chinese website!

Reading The AI Index 2025: Is AI Your Friend, Foe, Or Co-Pilot?Apr 11, 2025 pm 12:13 PM

Reading The AI Index 2025: Is AI Your Friend, Foe, Or Co-Pilot?Apr 11, 2025 pm 12:13 PMThe 2025 Artificial Intelligence Index Report released by the Stanford University Institute for Human-Oriented Artificial Intelligence provides a good overview of the ongoing artificial intelligence revolution. Let’s interpret it in four simple concepts: cognition (understand what is happening), appreciation (seeing benefits), acceptance (face challenges), and responsibility (find our responsibilities). Cognition: Artificial intelligence is everywhere and is developing rapidly We need to be keenly aware of how quickly artificial intelligence is developing and spreading. Artificial intelligence systems are constantly improving, achieving excellent results in math and complex thinking tests, and just a year ago they failed miserably in these tests. Imagine AI solving complex coding problems or graduate-level scientific problems – since 2023

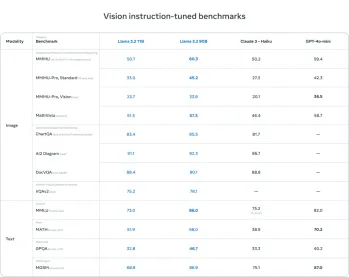

Getting Started With Meta Llama 3.2 - Analytics VidhyaApr 11, 2025 pm 12:04 PM

Getting Started With Meta Llama 3.2 - Analytics VidhyaApr 11, 2025 pm 12:04 PMMeta's Llama 3.2: A Leap Forward in Multimodal and Mobile AI Meta recently unveiled Llama 3.2, a significant advancement in AI featuring powerful vision capabilities and lightweight text models optimized for mobile devices. Building on the success o

AV Bytes: Meta's Llama 3.2, Google's Gemini 1.5, and MoreApr 11, 2025 pm 12:01 PM

AV Bytes: Meta's Llama 3.2, Google's Gemini 1.5, and MoreApr 11, 2025 pm 12:01 PMThis week's AI landscape: A whirlwind of advancements, ethical considerations, and regulatory debates. Major players like OpenAI, Google, Meta, and Microsoft have unleashed a torrent of updates, from groundbreaking new models to crucial shifts in le

The Human Cost Of Talking To Machines: Can A Chatbot Really Care?Apr 11, 2025 pm 12:00 PM

The Human Cost Of Talking To Machines: Can A Chatbot Really Care?Apr 11, 2025 pm 12:00 PMThe comforting illusion of connection: Are we truly flourishing in our relationships with AI? This question challenged the optimistic tone of MIT Media Lab's "Advancing Humans with AI (AHA)" symposium. While the event showcased cutting-edg

Understanding SciPy Library in PythonApr 11, 2025 am 11:57 AM

Understanding SciPy Library in PythonApr 11, 2025 am 11:57 AMIntroduction Imagine you're a scientist or engineer tackling complex problems – differential equations, optimization challenges, or Fourier analysis. Python's ease of use and graphics capabilities are appealing, but these tasks demand powerful tools

3 Methods to Run Llama 3.2 - Analytics VidhyaApr 11, 2025 am 11:56 AM

3 Methods to Run Llama 3.2 - Analytics VidhyaApr 11, 2025 am 11:56 AMMeta's Llama 3.2: A Multimodal AI Powerhouse Meta's latest multimodal model, Llama 3.2, represents a significant advancement in AI, boasting enhanced language comprehension, improved accuracy, and superior text generation capabilities. Its ability t

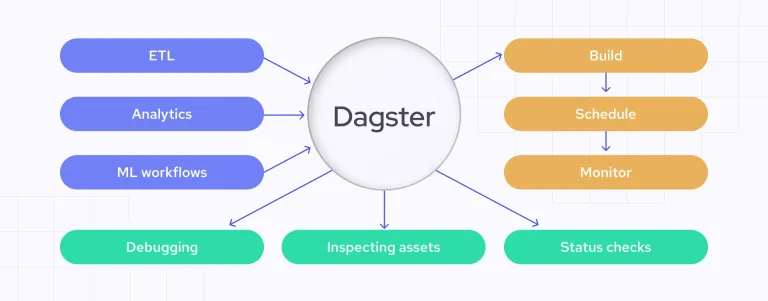

Automating Data Quality Checks with DagsterApr 11, 2025 am 11:44 AM

Automating Data Quality Checks with DagsterApr 11, 2025 am 11:44 AMData Quality Assurance: Automating Checks with Dagster and Great Expectations Maintaining high data quality is critical for data-driven businesses. As data volumes and sources increase, manual quality control becomes inefficient and prone to errors.

Do Mainframes Have A Role In The AI Era?Apr 11, 2025 am 11:42 AM

Do Mainframes Have A Role In The AI Era?Apr 11, 2025 am 11:42 AMMainframes: The Unsung Heroes of the AI Revolution While servers excel at general-purpose applications and handling multiple clients, mainframes are built for high-volume, mission-critical tasks. These powerful systems are frequently found in heavil

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

MantisBT

Mantis is an easy-to-deploy web-based defect tracking tool designed to aid in product defect tracking. It requires PHP, MySQL and a web server. Check out our demo and hosting services.

Dreamweaver Mac version

Visual web development tools

ZendStudio 13.5.1 Mac

Powerful PHP integrated development environment

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

SublimeText3 Chinese version

Chinese version, very easy to use