
The upper limit of LLaMA-2-7B math ability has reached 97.7%? Xwin-Math unlocks potential with synthetic data


Synthetic data continues to unlock the mathematical reasoning potential of large models!

Paper link: https://arxiv.org/pdf/2403.04706.pdf

Code link: https://github.com/Xwin-LM/Xwin-LM


Reading The AI Index 2025: Is AI Your Friend, Foe, Or Co-Pilot?Apr 11, 2025 pm 12:13 PM

Reading The AI Index 2025: Is AI Your Friend, Foe, Or Co-Pilot?Apr 11, 2025 pm 12:13 PMThe 2025 Artificial Intelligence Index Report released by the Stanford University Institute for Human-Oriented Artificial Intelligence provides a good overview of the ongoing artificial intelligence revolution. Let’s interpret it in four simple concepts: cognition (understand what is happening), appreciation (seeing benefits), acceptance (face challenges), and responsibility (find our responsibilities). Cognition: Artificial intelligence is everywhere and is developing rapidly We need to be keenly aware of how quickly artificial intelligence is developing and spreading. Artificial intelligence systems are constantly improving, achieving excellent results in math and complex thinking tests, and just a year ago they failed miserably in these tests. Imagine AI solving complex coding problems or graduate-level scientific problems – since 2023

Getting Started With Meta Llama 3.2 - Analytics VidhyaApr 11, 2025 pm 12:04 PM

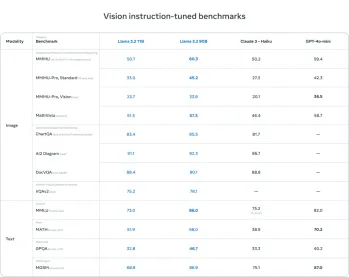

Getting Started With Meta Llama 3.2 - Analytics VidhyaApr 11, 2025 pm 12:04 PMMeta's Llama 3.2: A Leap Forward in Multimodal and Mobile AI Meta recently unveiled Llama 3.2, a significant advancement in AI featuring powerful vision capabilities and lightweight text models optimized for mobile devices. Building on the success o

AV Bytes: Meta's Llama 3.2, Google's Gemini 1.5, and MoreApr 11, 2025 pm 12:01 PM

AV Bytes: Meta's Llama 3.2, Google's Gemini 1.5, and MoreApr 11, 2025 pm 12:01 PMThis week's AI landscape: A whirlwind of advancements, ethical considerations, and regulatory debates. Major players like OpenAI, Google, Meta, and Microsoft have unleashed a torrent of updates, from groundbreaking new models to crucial shifts in le

The Human Cost Of Talking To Machines: Can A Chatbot Really Care?Apr 11, 2025 pm 12:00 PM

The Human Cost Of Talking To Machines: Can A Chatbot Really Care?Apr 11, 2025 pm 12:00 PMThe comforting illusion of connection: Are we truly flourishing in our relationships with AI? This question challenged the optimistic tone of MIT Media Lab's "Advancing Humans with AI (AHA)" symposium. While the event showcased cutting-edg

Understanding SciPy Library in PythonApr 11, 2025 am 11:57 AM

Understanding SciPy Library in PythonApr 11, 2025 am 11:57 AMIntroduction Imagine you're a scientist or engineer tackling complex problems – differential equations, optimization challenges, or Fourier analysis. Python's ease of use and graphics capabilities are appealing, but these tasks demand powerful tools

3 Methods to Run Llama 3.2 - Analytics VidhyaApr 11, 2025 am 11:56 AM

3 Methods to Run Llama 3.2 - Analytics VidhyaApr 11, 2025 am 11:56 AMMeta's Llama 3.2: A Multimodal AI Powerhouse Meta's latest multimodal model, Llama 3.2, represents a significant advancement in AI, boasting enhanced language comprehension, improved accuracy, and superior text generation capabilities. Its ability t

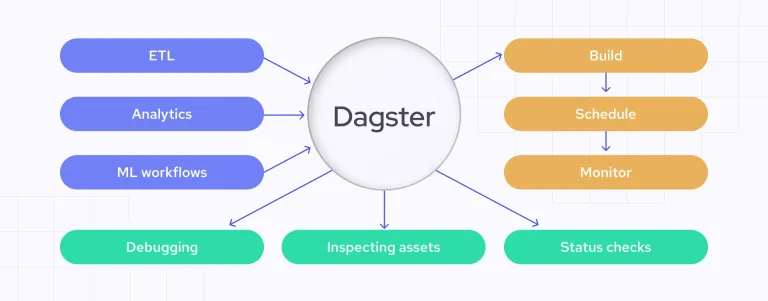

Automating Data Quality Checks with DagsterApr 11, 2025 am 11:44 AM

Automating Data Quality Checks with DagsterApr 11, 2025 am 11:44 AMData Quality Assurance: Automating Checks with Dagster and Great Expectations Maintaining high data quality is critical for data-driven businesses. As data volumes and sources increase, manual quality control becomes inefficient and prone to errors.

Do Mainframes Have A Role In The AI Era?Apr 11, 2025 am 11:42 AM

Do Mainframes Have A Role In The AI Era?Apr 11, 2025 am 11:42 AMMainframes: The Unsung Heroes of the AI Revolution While servers excel at general-purpose applications and handling multiple clients, mainframes are built for high-volume, mission-critical tasks. These powerful systems are frequently found in heavil
