How to give AI a lot of physics knowledge? The EIT and Peking University teams proposed the concept of 'importance of rules'

Editor | ScienceAI

Deep learning models have had a profound impact on scientific research thanks to their ability to learn latent relationships from massive amounts of data. However, purely data-driven models are gradually revealing their limitations: over-reliance on data, limited generalization, and inconsistency with the real physical world. These issues are pushing researchers toward more interpretable models that compensate for the shortcomings of data-driven ones. Combining domain knowledge with data-driven methods to build models that are both more interpretable and better at generalizing has therefore become an important direction in current scientific research.

For example, Sora, the text-to-video model developed by the American company OpenAI, is highly praised for its generation capabilities and is considered an important advance in artificial intelligence. Although it can generate realistic images and videos, Sora still struggles with the laws of physics, such as gravity and the way objects shatter. It has made significant progress in simulating real-life scenes, but there is still room for improvement in understanding and accurately simulating physical laws.

One potential way to solve this problem is to incorporate human knowledge into deep learning models. By combining prior knowledge and data, the generalization ability of the model can be enhanced, resulting in an "informed machine learning" model that can understand physical laws. This approach is expected to improve the performance and accuracy of the model, making it better able to cope with complex problems in the real world. By integrating the experience and insights of human experts into machine learning algorithms, we can build more intelligent and efficient systems, thereby promoting the development and application of artificial intelligence technology.

So far, however, the exact value of knowledge in deep learning has not been explored in depth. Determining which prior knowledge can be effectively integrated into a model for "pre-learning" remains an urgent open problem. At the same time, blindly integrating multiple rules may cause the model to fail, which also deserves attention. These limitations make it hard to study the relationship between data and knowledge in depth.

In response to this problem, a research team from the Eastern Institute of Technology (EIT) and Peking University proposed the concept of "rule importance" and developed a framework that can accurately calculate the contribution of each rule to the model's prediction accuracy. The framework reveals the complex interaction between data and knowledge, provides theoretical guidance for knowledge embedding, and helps balance the influence of knowledge and data during training. The method can also be used to identify inappropriate prior rules, which opens broad prospects for research and applications in interdisciplinary fields.

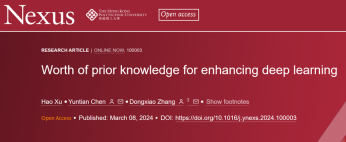

This research, titled "Prior Knowledge's Impact on Deep Learning", was published on March 8, 2024 in the interdisciplinary journal "Nexus" under Cell Press. The research received attention from AAAS (American Association for the Advancement of Science) and EurekAlert!

When teaching children to solve puzzles, you can either let them find the answers through trial and error or guide them with some basic rules and techniques. Likewise, incorporating rules and techniques (such as the laws of physics) into AI training can make models more realistic and more efficient. However, evaluating the value of these rules to an AI model has long been a difficult problem for researchers.

Given the rich diversity of prior knowledge, integrating it into deep learning models is a complex multi-objective optimization task. The research team proposed a framework to quantify the role of different pieces of prior knowledge in improving deep learning models. They treat the process as a game involving both cooperation and competition, and define the importance of a rule by its marginal contribution to the model's predictions. First, all possible rule combinations (i.e., "coalitions") are generated, a model is built for each combination, and its mean squared error is calculated.

To reduce the computational cost, they adopted an efficient perturbation-based algorithm: first train a purely data-driven neural network as a baseline model, then add each rule combination in turn for additional training, and finally evaluate model performance on the test data. By comparing the model's performance across all coalitions with and without a given rule, the marginal contribution of that rule can be calculated, and thus its importance.
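The coalition-based calculation described above can be sketched in a few lines. This is a minimal illustration, not the team's implementation: it assumes that marginal contributions are averaged with Shapley-value weights, and the function name `rule_importance`, the rule names `pde` and `bc`, and all error values are hypothetical.

```python
from itertools import combinations
from math import factorial


def rule_importance(rules, coalition_mse):
    """Shapley-style importance of each rule (assumed weighting scheme).

    coalition_mse maps a frozenset of rule names to the test MSE of the model
    trained with exactly those rules; the empty set is the data-only baseline.
    A rule's importance is its weighted average reduction in MSE.
    """
    n = len(rules)
    importance = {}
    for rule in rules:
        others = [r for r in rules if r != rule]
        total = 0.0
        for k in range(n):
            # Shapley weight for coalitions of size k that exclude `rule`
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                without = frozenset(subset)
                with_rule = without | {rule}
                # Marginal contribution: how much the error drops
                total += weight * (coalition_mse[without] - coalition_mse[with_rule])
        importance[rule] = total
    return importance


# Made-up MSE values, for illustration only
mse = {
    frozenset(): 0.10,              # data-only baseline
    frozenset({"pde"}): 0.06,       # a global rule (governing equation)
    frozenset({"bc"}): 0.09,        # a local rule (boundary condition)
    frozenset({"pde", "bc"}): 0.04,
}
scores = rule_importance(["pde", "bc"], mse)
print(scores)
```

With this weighting, the importances sum to the total error reduction from the baseline to the model that uses every rule, and a rule with negative importance is one that makes predictions worse on average.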

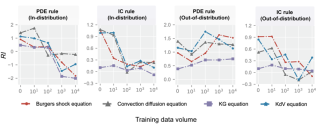

Using fluid mechanics examples, the researchers explored the complex relationship between data and rules. They found that data and prior rules play completely different roles in different tasks. When the test data and training data are similarly distributed (i.e., in-distribution), increasing the amount of data weakens the effect of the rules.

However, when the test data are distributed differently from the training data (i.e., out-of-distribution), global rules become more important while the influence of local rules weakens. The difference between the two types is that global rules (such as governing equations) affect the entire domain, while local rules (such as boundary conditions) act only on specific regions.

Through numerical experiments, the research team found three types of interaction effects between rules in knowledge embedding: the dependence effect, the synergy effect, and the substitution effect.

The dependence effect means that some rules are effective only in combination with other rules; the synergy effect means that multiple rules working together achieve more than the sum of their independent effects; the substitution effect means that the function of a rule may be replaced by data or by other rules.
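To make the three effects concrete, here is a rough sketch that labels the interaction between two rules from their error reductions. The thresholds and the function `classify_interaction` are assumptions made for illustration; the source does not give a formal test for each effect.

```python
def classify_interaction(gain_a, gain_b, gain_ab, tol=1e-9):
    """Label a two-rule interaction (hypothetical heuristic).

    gain_a, gain_b: reduction in test MSE when each rule is used alone.
    gain_ab: reduction in test MSE when both rules are used together.
    """
    alone_sum = gain_a + gain_b
    # A rule that does nothing alone but helps in combination
    if min(gain_a, gain_b) <= tol and gain_ab > alone_sum + tol:
        return "dependence"
    # Joint effect exceeds the sum of the independent effects
    if gain_ab > alone_sum + tol:
        return "synergy"
    # Joint effect falls short: the rules partly replace each other
    if gain_ab < alone_sum - tol:
        return "substitution"
    return "additive"


print(classify_interaction(0.00, 0.02, 0.05))
print(classify_interaction(0.01, 0.02, 0.05))
print(classify_interaction(0.03, 0.03, 0.04))
```

The same comparison can be made between a rule and additional data instead of a second rule, which is how substitution by data would show up.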

These three effects exist at the same time and are affected by the amount of data. By calculating rule importance, these effects can be clearly demonstrated, providing important guidance for knowledge embedding.

At the application level, the research team tried to solve a core issue in the knowledge embedding process: how to balance the role of data and rules to improve embedding efficiency and screen out inappropriate prior knowledge. During the training process of the model, the team proposed a strategy to dynamically adjust the weight of the rules.

Specifically, as the training iteration steps increase, the weight of positive importance rules gradually increases, while the weight of negative importance rules decreases. This strategy can adjust the model's attention to different rules in real time according to the needs of the optimization process, thereby achieving more efficient and accurate knowledge embedding.
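The weight-adjustment strategy might look like the sketch below. The multiplicative update, the `step_size` parameter, and the names `update_rule_weights` and `total_loss` are assumptions for illustration; the article does not specify the exact update rule the team uses.

```python
def update_rule_weights(weights, importance, step_size=0.1, min_weight=0.0):
    """One adjustment step: raise weights of helpful rules, lower harmful ones."""
    new_weights = {}
    for rule, w in weights.items():
        if importance[rule] > 0:
            w = w * (1 + step_size)   # positive importance: weight grows
        elif importance[rule] < 0:
            w = w * (1 - step_size)   # negative importance: weight shrinks
        new_weights[rule] = max(w, min_weight)
    return new_weights


def total_loss(data_loss, rule_losses, weights):
    """Training objective: data term plus weighted rule-violation terms."""
    return data_loss + sum(weights[r] * rule_losses[r] for r in rule_losses)


# Hypothetical rules and importance values
weights = {"pde": 1.0, "bad_rule": 1.0}
importance = {"pde": 0.045, "bad_rule": -0.01}
for _ in range(3):
    weights = update_rule_weights(weights, importance)
print(weights)
```

In a real training loop the importance values would be re-estimated periodically rather than held fixed, so that the weights follow the needs of the optimization as it progresses.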

In addition, teaching the laws of physics to AI models can make them “more relevant to the real world and thus play a greater role in science and engineering.” Therefore, this framework has a wide range of practical applications in engineering, physics, and chemistry. The researchers not only optimized the machine learning model to solve multivariate equations, but also accurately identified rules that improve the performance of the prediction model for thin-layer chromatography analysis.

The experimental results show that by incorporating these effective rules, the performance of the model is significantly improved, and the mean square error on the test data set is reduced from 0.052 to 0.036 (a reduction of 30.8%). This means that the framework can transform empirical insights into structured knowledge, thereby significantly improving model performance.

Overall, accurately assessing the value of knowledge helps build AI models that are more realistic and improve safety and reliability, which is of great significance to the development of deep learning.

Next, the research team plans to turn the framework into a plug-in tool for artificial intelligence developers. Their ultimate goal is to develop models that can extract knowledge and rules directly from data and then improve themselves, creating a closed loop from knowledge discovery to knowledge embedding and making the model a true AI scientist.

Paper link: https://www.cell.com/nexus/fulltext/S2950-1601(24)00001-9

The above is the detailed content of How to give AI a lot of physics knowledge? The EIT and Peking University teams proposed the concept of 'importance of rules'. For more information, please follow other related articles on the PHP Chinese website!

Exploring the Capabilities of Google's Gemma 2 ModelsApr 22, 2025 am 11:26 AM

Exploring the Capabilities of Google's Gemma 2 ModelsApr 22, 2025 am 11:26 AMGoogle's Gemma 2: A Powerful, Efficient Language Model Google's Gemma family of language models, celebrated for efficiency and performance, has expanded with the arrival of Gemma 2. This latest release comprises two models: a 27-billion parameter ver

The Next Wave of GenAI: Perspectives with Dr. Kirk Borne - Analytics VidhyaApr 22, 2025 am 11:21 AM

The Next Wave of GenAI: Perspectives with Dr. Kirk Borne - Analytics VidhyaApr 22, 2025 am 11:21 AMThis Leading with Data episode features Dr. Kirk Borne, a leading data scientist, astrophysicist, and TEDx speaker. A renowned expert in big data, AI, and machine learning, Dr. Borne offers invaluable insights into the current state and future traje

AI For Runners And Athletes: We're Making Excellent ProgressApr 22, 2025 am 11:12 AM

AI For Runners And Athletes: We're Making Excellent ProgressApr 22, 2025 am 11:12 AMThere were some very insightful perspectives in this speech—background information about engineering that showed us why artificial intelligence is so good at supporting people’s physical exercise. I will outline a core idea from each contributor’s perspective to demonstrate three design aspects that are an important part of our exploration of the application of artificial intelligence in sports. Edge devices and raw personal data This idea about artificial intelligence actually contains two components—one related to where we place large language models and the other is related to the differences between our human language and the language that our vital signs “express” when measured in real time. Alexander Amini knows a lot about running and tennis, but he still

Jamie Engstrom On Technology, Talent And Transformation At CaterpillarApr 22, 2025 am 11:10 AM

Jamie Engstrom On Technology, Talent And Transformation At CaterpillarApr 22, 2025 am 11:10 AMCaterpillar's Chief Information Officer and Senior Vice President of IT, Jamie Engstrom, leads a global team of over 2,200 IT professionals across 28 countries. With 26 years at Caterpillar, including four and a half years in her current role, Engst

New Google Photos Update Makes Any Photo Pop With Ultra HDR QualityApr 22, 2025 am 11:09 AM

New Google Photos Update Makes Any Photo Pop With Ultra HDR QualityApr 22, 2025 am 11:09 AMGoogle Photos' New Ultra HDR Tool: A Quick Guide Enhance your photos with Google Photos' new Ultra HDR tool, transforming standard images into vibrant, high-dynamic-range masterpieces. Ideal for social media, this tool boosts the impact of any photo,

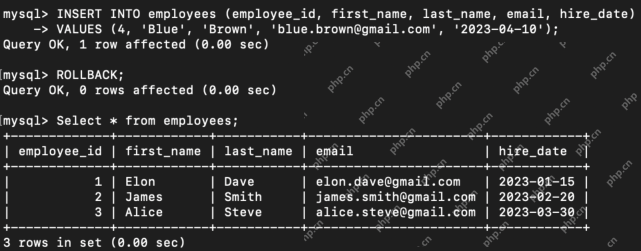

What are the TCL Commands in SQL? - Analytics VidhyaApr 22, 2025 am 11:07 AM

What are the TCL Commands in SQL? - Analytics VidhyaApr 22, 2025 am 11:07 AMIntroduction Transaction Control Language (TCL) commands are essential in SQL for managing changes made by Data Manipulation Language (DML) statements. These commands allow database administrators and users to control transaction processes, thereby

How to Make Custom ChatGPT? - Analytics VidhyaApr 22, 2025 am 11:06 AM

How to Make Custom ChatGPT? - Analytics VidhyaApr 22, 2025 am 11:06 AMHarness the power of ChatGPT to create personalized AI assistants! This tutorial shows you how to build your own custom GPTs in five simple steps, even without coding skills. Key Features of Custom GPTs: Create personalized AI models for specific t

Difference Between Method Overloading and OverridingApr 22, 2025 am 10:55 AM

Difference Between Method Overloading and OverridingApr 22, 2025 am 10:55 AMIntroduction Method overloading and overriding are core object-oriented programming (OOP) concepts crucial for writing flexible and efficient code, particularly in data-intensive fields like data science and AI. While similar in name, their mechanis

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

SublimeText3 English version

Recommended: Win version, supports code prompts!

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),

SublimeText3 Mac version

God-level code editing software (SublimeText3)

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

Atom editor mac version download

The most popular open source editor