
Train your robot dog in real time with Vision Pro! MIT PhD student's open source project is popular


- 2024-03-12 21:40:02

Vision Pro has found another hot new use, and this time it involves embodied intelligence.

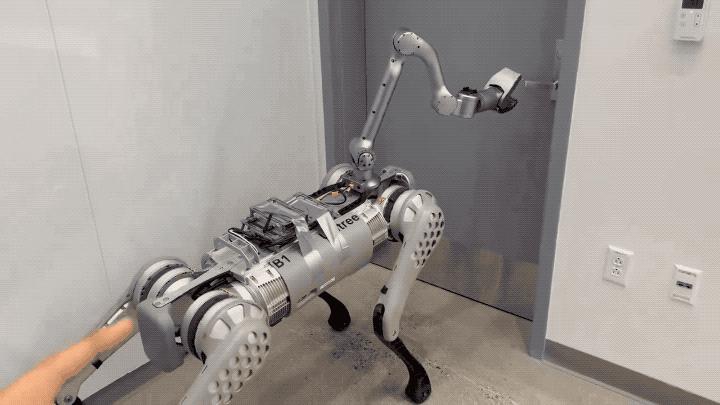

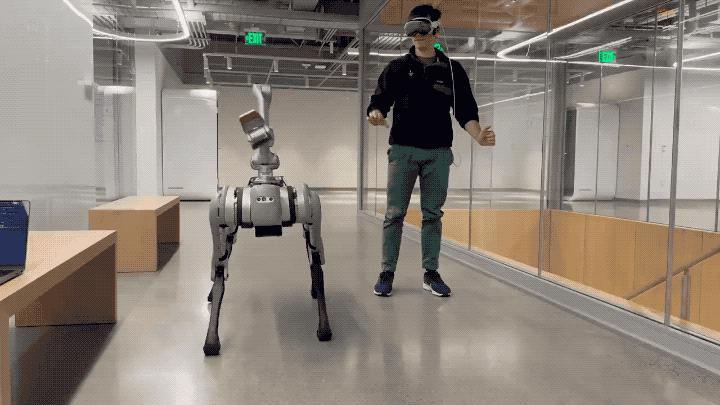

Just like this, an MIT PhD student used Vision Pro's hand tracking to control a robot dog in real time.

Not only are actions such as opening a door reproduced accurately, but there is also almost no delay.

As soon as the demo came out, netizens praised it as amazing, and many embodied intelligence researchers were excited too.

For example, this prospective doctoral student from Tsinghua University:

Some even boldly predicted: this is how we will interact with the next generation of machines.

Let's take a closer look at the app the author developed: Tracking Streamer.

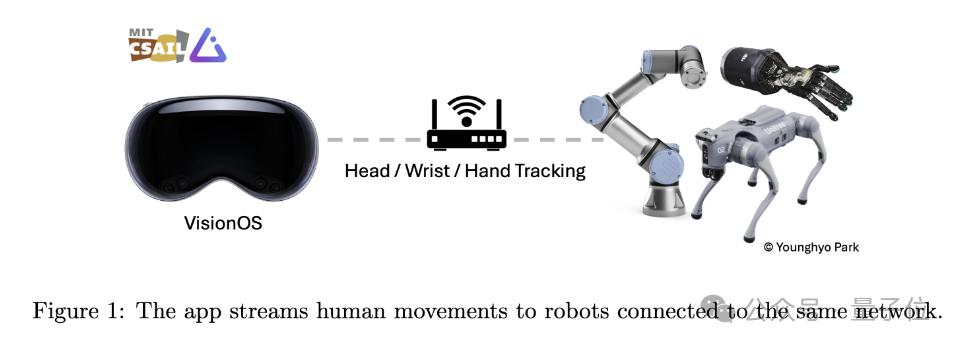

As the name suggests, the app uses Vision Pro to track human movements and streams that motion data in real time to robotic devices on the same Wi-Fi network.

Motion tracking relies mainly on Apple's ARKit framework.

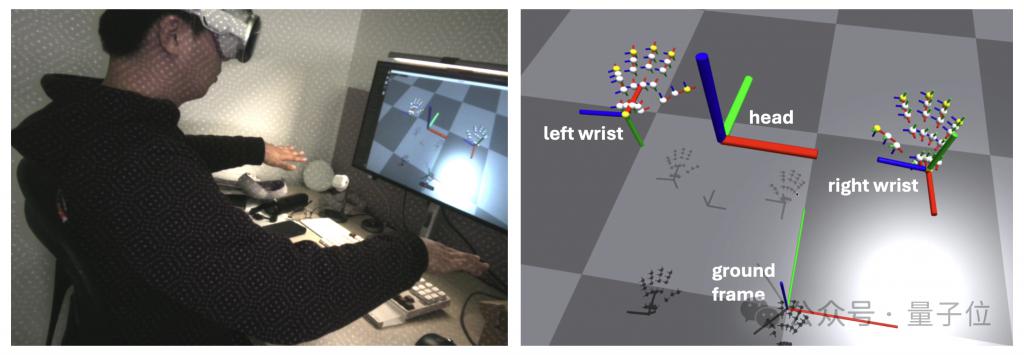

Head tracking calls queryDeviceAnchor. Users can reset the head frame to its current position by pressing and holding the Digital Crown.
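The Digital Crown reset can be pictured as storing the current head pose as a new reference and expressing later poses relative to it. Below is a minimal sketch in plain Python, assuming poses are 4×4 homogeneous matrices; `HeadFrame`, `rigid_inverse`, and the sample values are hypothetical and are not taken from the app's code.

```python
# Minimal sketch: re-express head poses relative to a stored "reset" pose.
# Assumption: poses are 4x4 homogeneous transforms stored as nested lists.
Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two 4x4 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def rigid_inverse(t: Matrix) -> Matrix:
    """Invert a rigid transform [R p; 0 1] as [R^T  -R^T p; 0 1]."""
    r_t = [[t[j][i] for j in range(3)] for i in range(3)]  # R transposed
    p = [t[i][3] for i in range(3)]
    neg = [-sum(r_t[i][k] * p[k] for k in range(3)) for i in range(3)]
    return [r_t[i] + [neg[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def translation(x: float, y: float, z: float) -> Matrix:
    """Pure-translation pose (identity rotation)."""
    return [[1.0, 0.0, 0.0, x], [0.0, 1.0, 0.0, y], [0.0, 0.0, 1.0, z], [0.0, 0.0, 0.0, 1.0]]


class HeadFrame:
    """Hypothetical helper: remembers a reference pose and reports poses relative to it."""

    def __init__(self) -> None:
        self.ref_inv: Matrix = translation(0.0, 0.0, 0.0)  # identity until the first reset

    def reset(self, current_pose: Matrix) -> None:
        # Would be triggered by the Digital Crown long-press (assumed hook).
        self.ref_inv = rigid_inverse(current_pose)

    def relative(self, pose: Matrix) -> Matrix:
        # ref^-1 @ pose: the current pose expressed in the reset frame
        return matmul(self.ref_inv, pose)


frame = HeadFrame()
frame.reset(translation(0.0, 1.6, 0.0))           # head 1.6 m above the origin at reset
rel = frame.relative(translation(0.1, 1.6, 0.0))  # head later moved 10 cm along x
print([round(rel[i][3], 6) for i in range(3)])    # → [0.1, 0.0, 0.0]
```

Using the closed-form rigid inverse (transpose the rotation, rotate and negate the translation) avoids a general matrix inversion and keeps the result exactly rigid.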

Wrist and finger tracking are implemented with HandTrackingProvider, which tracks the position and orientation of the left and right wrists relative to the ground frame, as well as the poses of the 25 finger joints on each hand relative to the wrist frame.
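Because the wrists are reported in the ground frame but the finger joints are reported relative to the wrist, getting a joint's pose in the ground frame means chaining the two transforms. Here is a minimal sketch in plain Python, assuming each pose is a 4×4 homogeneous matrix; the function name and the sample wrist and joint values are hypothetical.

```python
# Minimal sketch: express finger joints (given in the wrist frame) in the ground frame.
# Assumption: each pose is a 4x4 homogeneous matrix stored as nested lists.
Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two 4x4 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def fingers_in_ground(ground_T_wrist: Matrix, wrist_T_joints: list[Matrix]) -> list[Matrix]:
    # Chain the transforms: ground_T_joint = ground_T_wrist @ wrist_T_joint
    return [matmul(ground_T_wrist, joint) for joint in wrist_T_joints]


# Hypothetical wrist: 1 m above the origin, rotated 90 degrees about z (its x axis maps onto y).
ground_T_wrist = [
    [0.0, -1.0, 0.0, 0.0],
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 1.0],
    [0.0, 0.0, 0.0, 1.0],
]
# 25 hypothetical joints spaced 2 cm apart along the wrist's x axis.
wrist_T_joints = [
    [[1.0, 0.0, 0.0, 0.02 * i], [0.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0], [0.0, 0.0, 0.0, 1.0]]
    for i in range(25)
]

world_joints = fingers_in_ground(ground_T_wrist, wrist_T_joints)
joint_pos = tuple(world_joints[5][r][3] for r in range(3))  # position of joint 5
print([round(v, 6) for v in joint_pos])  # → [0.0, 0.1, 1.0]
```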

For network communication, the app uses gRPC to stream the data, so more kinds of devices can subscribe to it, including Linux, Mac, and Windows machines.
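The value of streaming is that one source can feed several clients at once. The sketch below illustrates that fan-out pattern with Python's standard `queue` module standing in for gRPC; `Broadcaster` and `drain` are hypothetical names, and nothing here reflects the app's actual server or message format.

```python
import queue
from typing import Any

Frame = dict[str, Any]


class Broadcaster:
    """Hypothetical in-process stand-in for a streaming server: every subscriber gets every frame."""

    def __init__(self, maxsize: int = 8) -> None:
        self._subscribers: list[queue.Queue] = []
        self._maxsize = maxsize

    def subscribe(self) -> "queue.Queue[Frame]":
        q: "queue.Queue[Frame]" = queue.Queue(maxsize=self._maxsize)
        self._subscribers.append(q)
        return q

    def publish(self, frame: Frame) -> None:
        # Assumes a single publisher thread.
        for q in self._subscribers:
            try:
                q.put_nowait(frame)
            except queue.Full:
                # A slow subscriber drops its oldest frame instead of stalling the stream.
                try:
                    q.get_nowait()
                except queue.Empty:
                    pass
                q.put_nowait(frame)


def drain(q: "queue.Queue[Frame]") -> list[Frame]:
    """Collect every frame currently waiting in a subscriber queue."""
    items: list[Frame] = []
    while True:
        try:
            items.append(q.get_nowait())
        except queue.Empty:
            return items


hub = Broadcaster(maxsize=4)
robot = hub.subscribe()   # e.g. a Linux machine driving the robot
logger = hub.subscribe()  # e.g. a Mac recording demonstrations
for seq in range(6):
    hub.publish({"seq": seq})

robot_seqs = [f["seq"] for f in drain(robot)]
logger_seqs = [f["seq"] for f in drain(logger)]
print(robot_seqs, logger_seqs)  # → [2, 3, 4, 5] [2, 3, 4, 5]
```

Dropping the oldest frame when a subscriber falls behind is one possible choice for teleoperation, where a stale pose is less useful than a fresh one; the real app may handle backpressure differently.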

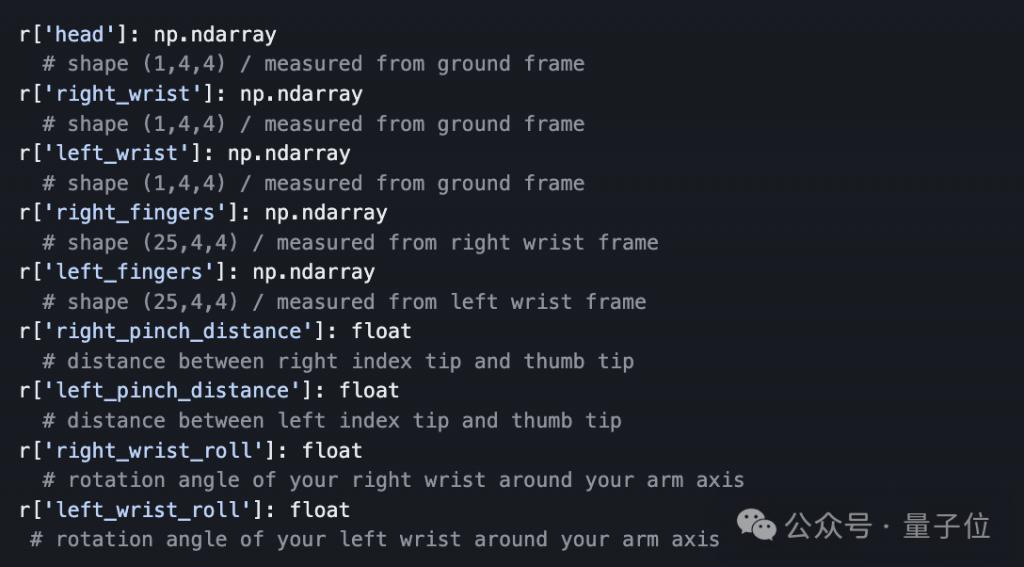

In addition, to make the data easy to consume, the author provides a Python API that lets developers programmatically subscribe to and receive the tracking data streamed from Vision Pro.
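For robot control, a common pattern is to let a background thread receive frames while the control loop reads only the most recent one. The following is a generic sketch of that pattern, not the project's API: `LatestFrame`, `receive_loop`, and `fake_source` are hypothetical, and the fake generator replaces the real Vision Pro stream.

```python
import threading
from collections.abc import Iterable, Iterator
from typing import Any, Optional

Frame = dict[str, Any]


class LatestFrame:
    """Hypothetical thread-safe slot holding only the most recent tracking frame."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._frame: Optional[Frame] = None

    def update(self, frame: Frame) -> None:
        with self._lock:
            self._frame = frame  # older frames are simply overwritten

    @property
    def latest(self) -> Optional[Frame]:
        with self._lock:
            return self._frame


def receive_loop(source: Iterable[Frame], holder: LatestFrame) -> None:
    # Stand-in for the network receive loop (the real app streams over gRPC).
    for frame in source:
        holder.update(frame)


def fake_source(n: int) -> Iterator[Frame]:
    # Hypothetical generator replacing the Vision Pro stream for this demo.
    for seq in range(n):
        yield {"seq": seq, "head": [[float(i == j) for j in range(4)] for i in range(4)]}


holder = LatestFrame()
receiver = threading.Thread(target=receive_loop, args=(fake_source(100), holder), daemon=True)
receiver.start()
receiver.join()  # a real control loop would poll holder.latest while the thread keeps running

frame = holder.latest
if frame is not None:
    print(frame["seq"])  # → 99
```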

The API returns the data as a dictionary containing the SE(3) poses of the head, wrists, and fingers, that is, their three-dimensional positions and orientations. Developers can process this data directly in Python for further analysis and for controlling the robot.
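An SE(3) pose stored as a 4×4 matrix keeps the orientation in its upper-left 3×3 block and the position in its last column. The sketch below pulls those parts out of a hypothetical frame dictionary and computes a thumb-to-index distance, a typical signal for detecting a pinch. The key names, joint indices, and values are assumptions for illustration; check the project documentation for the actual layout.

```python
import math

Matrix = list[list[float]]


def translation(x: float, y: float, z: float) -> Matrix:
    """Pure-translation SE(3) matrix (identity rotation)."""
    return [[1.0, 0.0, 0.0, x], [0.0, 1.0, 0.0, y], [0.0, 0.0, 1.0, z], [0.0, 0.0, 0.0, 1.0]]


def position(t: Matrix) -> tuple[float, float, float]:
    """Translation part p of an SE(3) matrix [R p; 0 1]."""
    return (t[0][3], t[1][3], t[2][3])


def rotation(t: Matrix) -> Matrix:
    """3x3 rotation (orientation) part R of an SE(3) matrix."""
    return [row[:3] for row in t[:3]]


def joint_distance(fingers: list[Matrix], i: int, j: int) -> float:
    # Both joints share the wrist frame, so their distance does not depend on where the wrist is.
    return math.dist(position(fingers[i]), position(fingers[j]))


# Hypothetical frame shaped like the description; the real keys and joint order may differ.
THUMB_TIP, INDEX_TIP = 4, 9  # hypothetical joint indices
frame = {
    "head": translation(0.0, 1.6, 0.0),
    "right_wrist": translation(0.3, 1.2, -0.2),
    "right_fingers": [translation(0.0, 0.0, 0.0) for _ in range(25)],
}
frame["right_fingers"][THUMB_TIP] = translation(0.03, 0.04, 0.0)
frame["right_fingers"][INDEX_TIP] = translation(0.06, 0.08, 0.0)

wrist_xyz = position(frame["right_wrist"])
wrist_rot = rotation(frame["right_wrist"])
pinch = joint_distance(frame["right_fingers"], THUMB_TIP, INDEX_TIP)
print(wrist_xyz, round(pinch, 3))  # → (0.3, 1.2, -0.2) 0.05
```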

As many experts have pointed out, although the robot dog's movements here are still controlled by a human, the "control" itself is not really the point: combined with imitation learning algorithms, the human acts more like the robot's coach.

Vision Pro provides an intuitive and simple interaction method by tracking the user's movements, allowing non-professionals to provide accurate training data for robots.

The author himself also wrote in the paper:

In the near future, people may wear devices like Vision Pro every day, just as they wear glasses. Imagine how much data we could collect from that!

This is a promising source of data from which robots can learn how humans interact with the real world.

Finally, a reminder: if you want to try this open-source project, besides a Vision Pro you will also need:

- Apple Developer Account
- Vision Pro Developer Strap (priced at $299)
- Mac computer with Xcode installed

Well, it seems Apple still gets to make its money first (doge).

Project link:

https://github.com/Improbable-AI/VisionProTeleop?tab=readme-ov-file

Reference link:

https://twitter.com/younghyo_park/status/1766274298422161830
