Home >Technology peripherals >AI >Stable Diffusion 3 technical report leaked out, Sora architecture has made great achievements again! Is the open source community violently beating Midjourney and DALL·E 3?

Stable Diffusion 3 technical report leaked out, Sora architecture has made great achievements again! Is the open source community violently beating Midjourney and DALL·E 3?

- PHPzforward

- 2024-03-06 16:22:20802browse

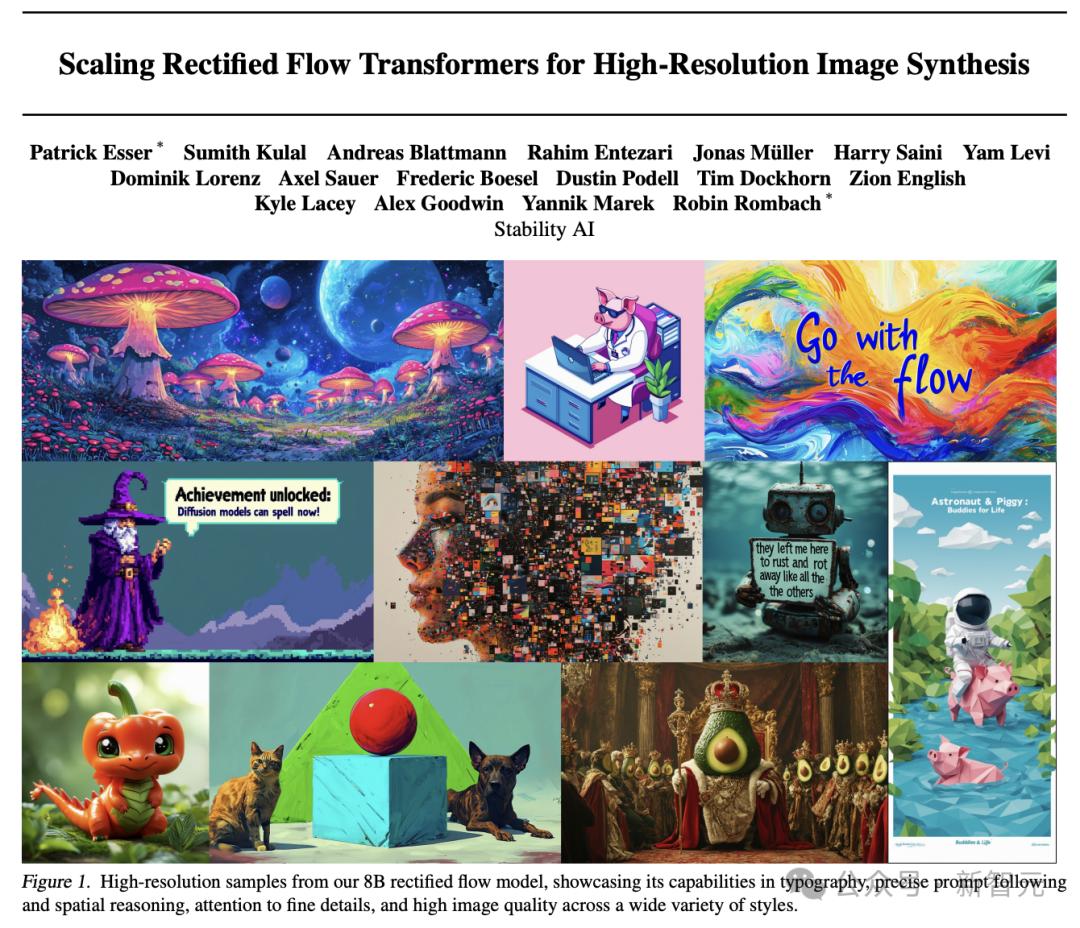

After releasing Stable Diffusion 3, Stability AI released a detailed technical report today.

The paper provides an in-depth analysis of the core technology of Stable Diffusion 3 - an improved version of the Diffusion model and a new architecture of Vincentian graphs based on DiT!

Report address:

https://www.php.cn/link /e5fb88b398b042f6cccce46bf3fa53e8

Passed the human evaluation test, Stable Diffusion 3 surpassed DALL·E 3, Midjourney v6 and Ideogram v1 in terms of font design and accurate response to prompts.

Stability AI’s newly developed Multi-Modal Diffusion Transformer (MMDiT) architecture uses independent weight sets specifically for image and language representation. Compared to earlier versions of SD 3, MMDiT has achieved significant improvements in text comprehension and spelling.

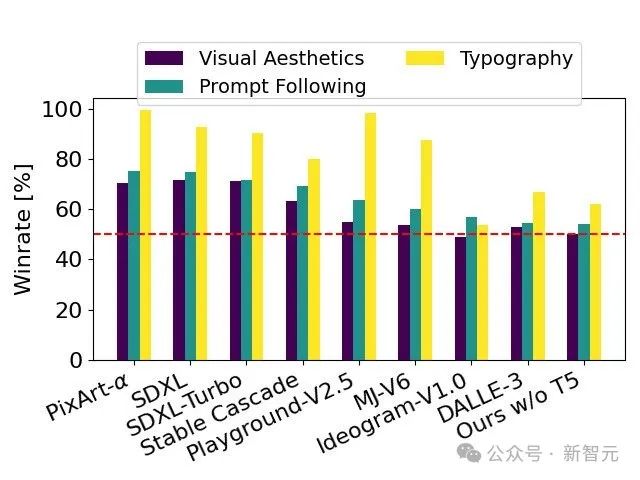

Performance Evaluation

Based on human feedback, the technical report will SD 3 to a large number The open source models SDXL, SDXL Turbo, Stable Cascade, Playground v2.5 and Pixart-α, as well as the closed source models DALL·E 3, Midjourney v6 and Ideogram v1 were evaluated in detail.

Evaluators select the best output for each model based on the consistency of the assigned prompts, the clarity of the text, and the overall aesthetics of the images.

The test results show that Stable Diffusion 3 achieves the highest level of accuracy in following prompts, clear presentation of text, and visual beauty of images. Or exceed the current state-of-the-art of Vincentian diagram generation technology.

The SD 3 model, which is not optimized for hardware at all, has 8B parameters and is able to run on an RTX 4090 consumer GPU with 24GB of video memory, and Using 50 sampling steps, it takes 34 seconds to generate a 1024x1024 resolution image.

In addition, Stable Diffusion 3 will provide multiple versions when released, with parameters ranging from 800 million to 8 billion, which can further lower the hardware threshold for use.

Exposure of architectural details

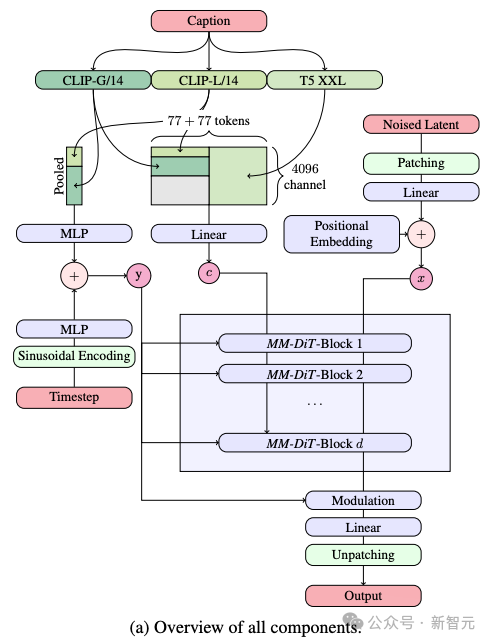

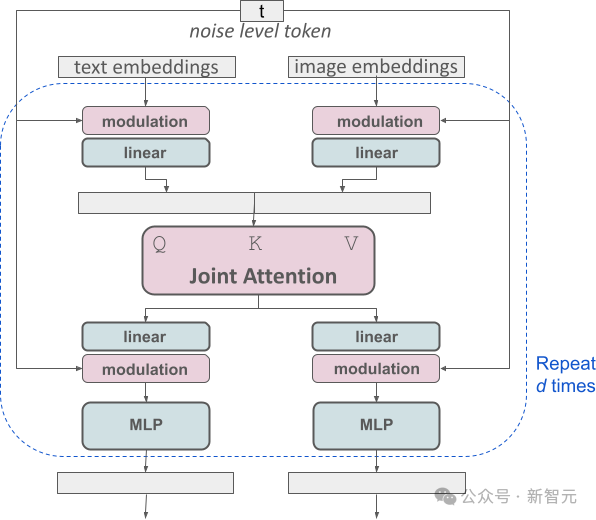

In the process of generating Vincent diagrams, the model needs to process text and images at the same time These two different kinds of information. So the author calls this new framework MMDiT.

In the process of text to image generation, the model needs to process two different information types, text and image, at the same time. This is why the authors call this new technology MMDiT (short for Multimodal Diffusion Transformer).

Like previous versions of Stable Diffusion, SD 3 uses a pre-trained model to extract suitable expressions of text and images.

Specifically, they utilized three different text encoders—two CLIP models and a T5—to process text information, while using a more advanced Autoencoding model to process image information.

The architecture of SD 3 is built on the basis of Diffusion Transformer (DiT). Due to the difference between text and image information, SD 3 sets independent weights for each of these two types of information.

This design is equivalent to equipping two independent Transformers for each information type, but when executing the attention mechanism, the data sequences of the two types of information will be merged, so that they can be used in their respective fields. While working independently, they can maintain mutual reference and integration.

Through this unique architecture, image and text information can flow and interact with each other, thereby improving the understanding of the content in the generated results. Overall understanding and visual representation.

Moreover, this architecture can be easily extended to other modalities including video in the future.

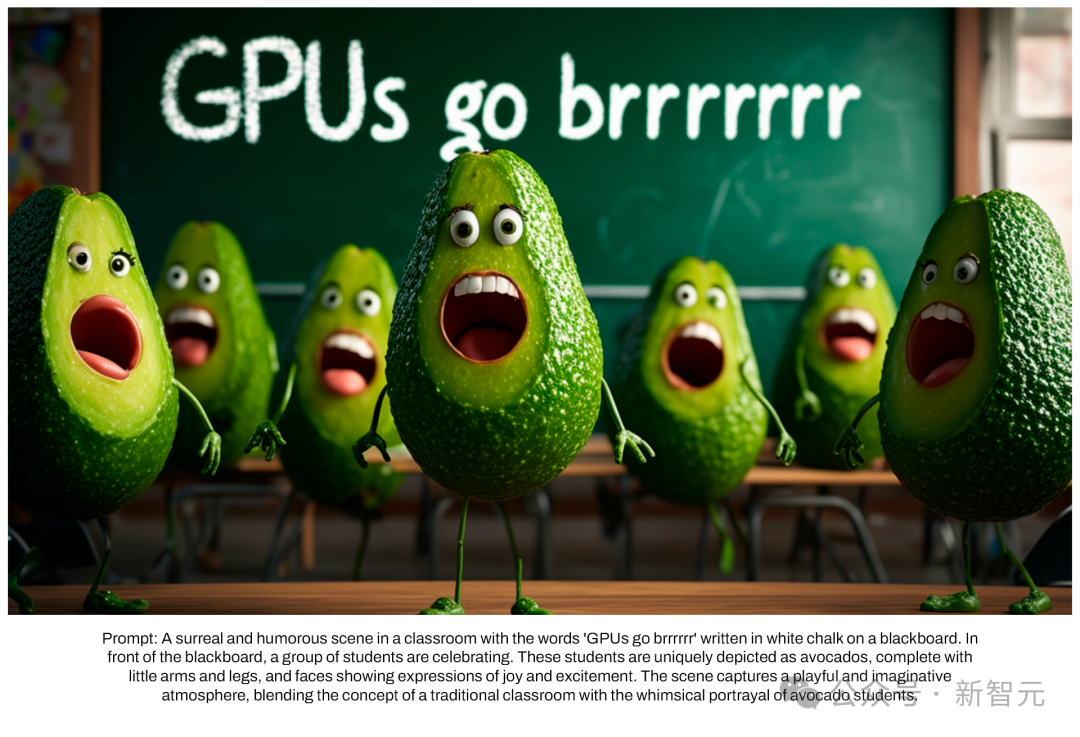

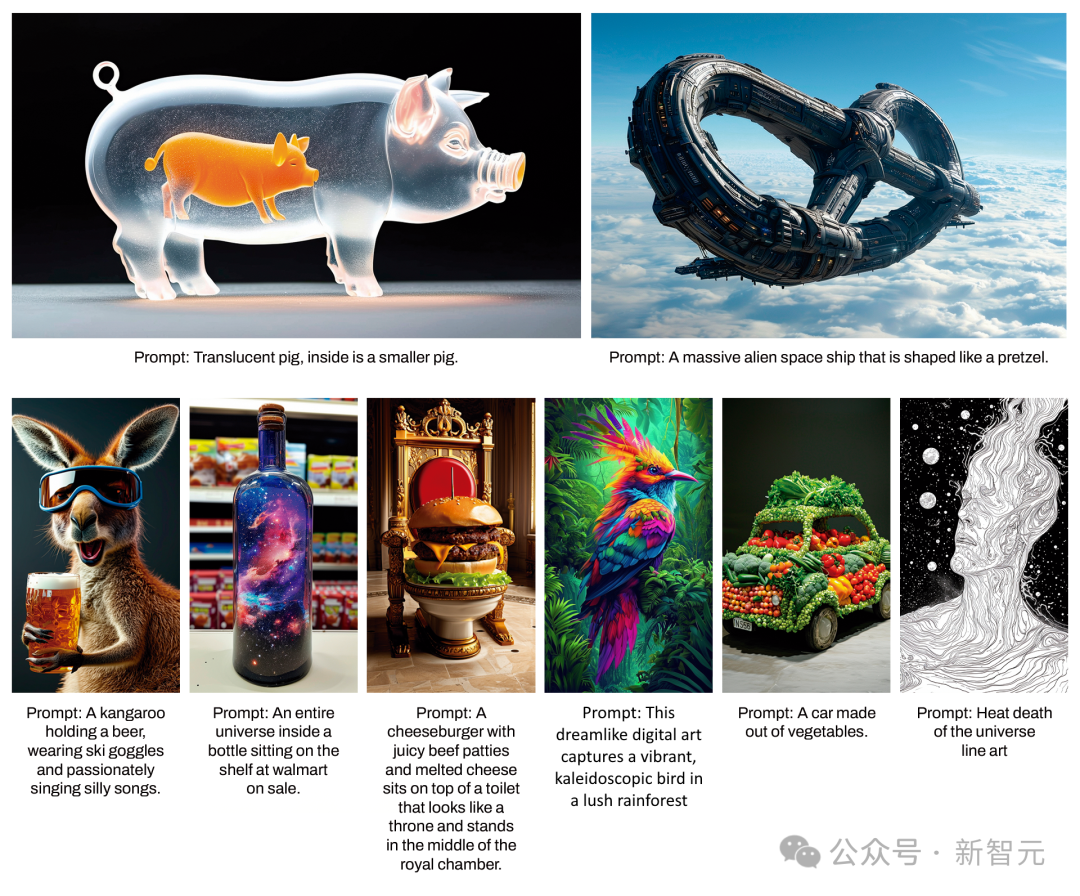

Thanks to SD 3’s improvements in following cues, the model is able to accurately generate images that focus on a variety of different topics and features, while The image style also maintains a high degree of flexibility.

Improving Rectified Flow through re-weighting method

In addition to the launch of the new Diffusion Transformer architecture , SD 3 also made significant improvements to the Diffusion model.

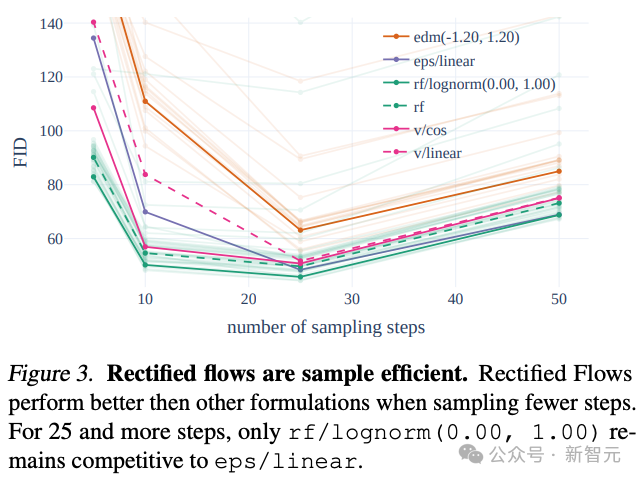

SD 3 adopts the Rectified Flow (RF) strategy to connect the training data and noise along a straight trajectory.

This method makes the model’s inference path more direct, so the sample generation can be completed in fewer steps.

The author introduced an innovative trajectory sampling plan in the training process, especially increasing the weight of the middle part of the trajectory, and the prediction of these parts The mission is more challenging.

By comparing with 60 other diffusion trajectories (such as LDM, EDM, and ADM), the authors found that although the previous RF method performed better in fewer steps of sampling, as the sampling As the number of steps increases, performance will slowly decrease.

In order to avoid this situation, the weighted RF method proposed by the author can continue to improve model performance.

Extended RF Transformer model

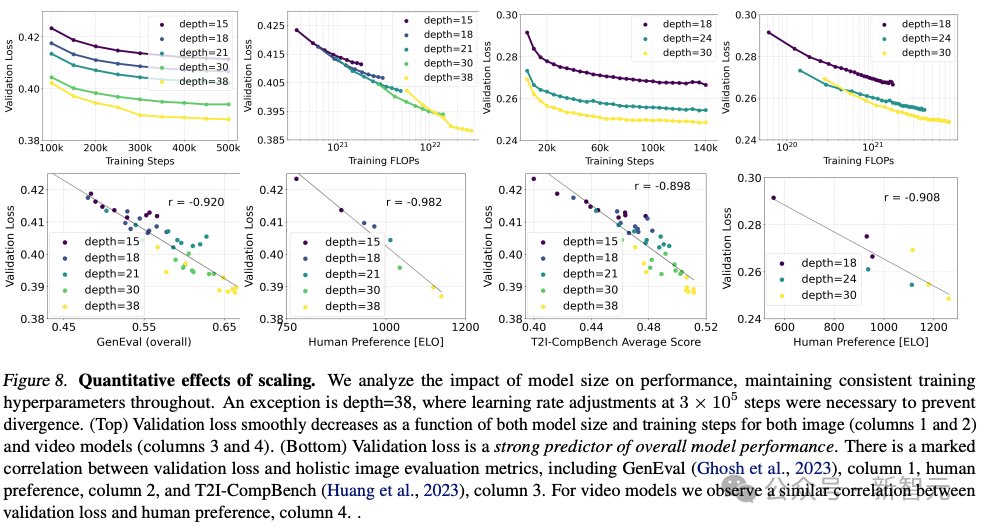

Stability AI trained multiple models of different sizes, from 15 modules and 450M parameters to 38 modules and 8B parameters, and found the model Both size and training steps reduce validation loss smoothly.

To verify whether this meant a substantial improvement in model output, they also evaluated automatic image alignment metrics and human preference scores.

The results show that these evaluation indicators are strongly correlated with the verification loss, indicating that the verification loss is an effective indicator to measure the overall performance of the model.

In addition, this expansion trend has not reached a saturation point, making us optimistic that we can further improve model performance in the future.

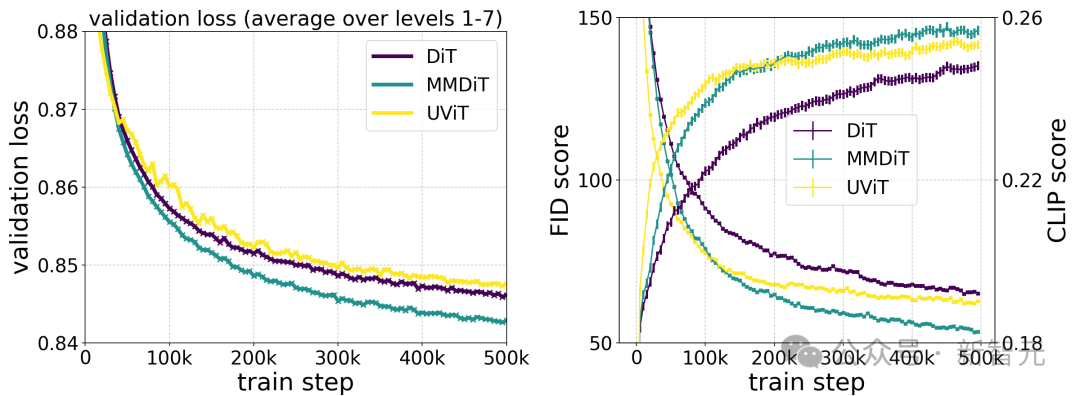

The author trained the model for 500k steps with different numbers of parameters at a resolution of 256 * 256 pixels and a batch size of 4096.

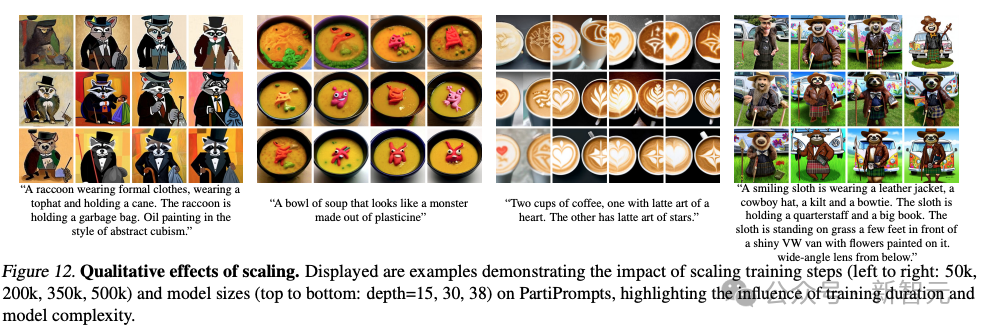

The above figure illustrates the impact of training a larger model for a long time on sample quality.

The table above shows the results of GenEval. When using the training method proposed by the authors and increasing the resolution of the training images, the largest model performed well in most categories, surpassing DALL·E by 3 in the overall score.

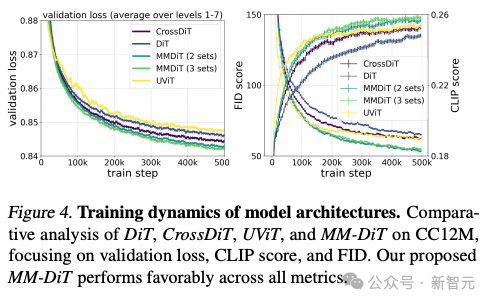

According to the author's test comparison of different architecture models, MMDiT is very effective, surpassing DiT, Cross DiT, UViT, and MM-DiT.

Flexible text encoder

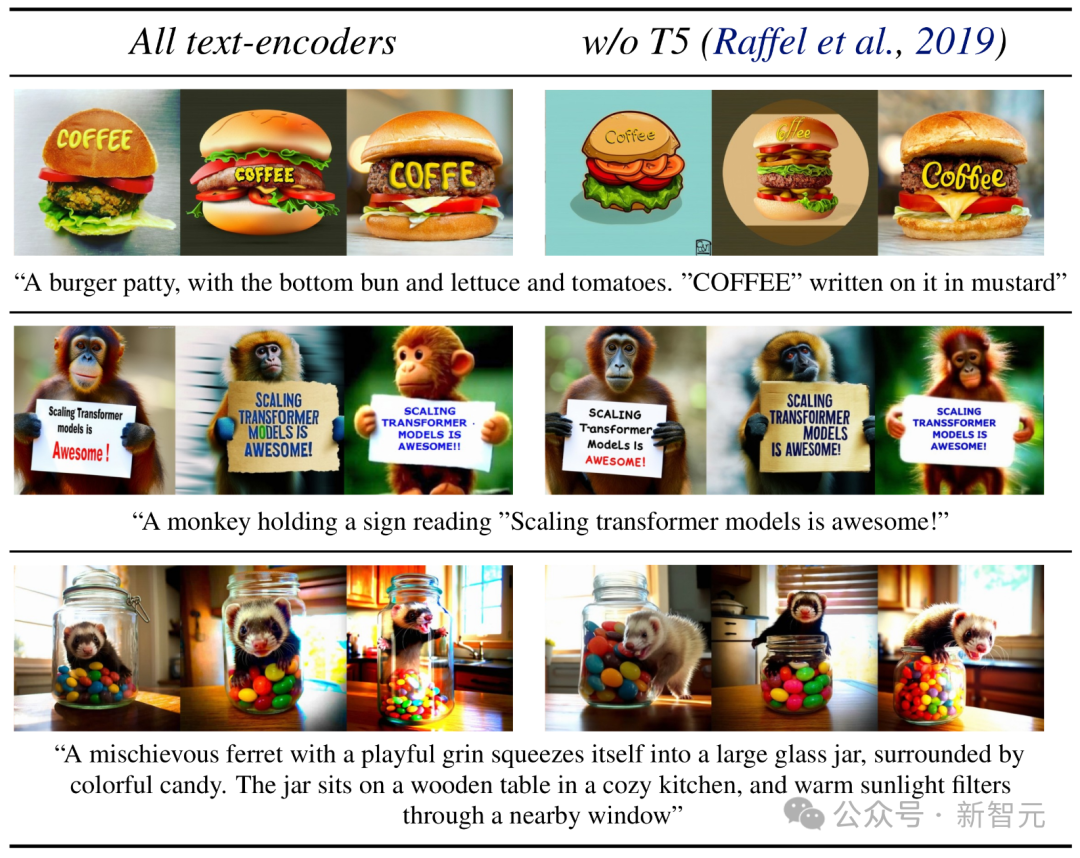

By removing the memory-intensive 4.7B parameter T5 text encoder during the inference phase, SD 3's memory requirements are significantly reduced with minimal performance loss.

Removing this text encoder will not affect the visual beauty of the image (50% win rate without T5), but will only slightly reduce the ability of the text to follow accurately (46% win rate) .

However, in order to give full play to SD 3's ability to generate text, the author still recommends using the T5 encoder.

Because the author found that without it, the performance of typesetting to generate text would be even greater (win rate 38%).

Netizens’ hot discussion

Netizens continue to tease users about Stability AI but refuse to use it They seemed a little impatient, and they all urged to put it online quickly for everyone to use.

After reading the technical application, netizens said that it seems that the photography circle is now going to be the first track where open source will overwhelm closed source!

The above is the detailed content of Stable Diffusion 3 technical report leaked out, Sora architecture has made great achievements again! Is the open source community violently beating Midjourney and DALL·E 3?. For more information, please follow other related articles on the PHP Chinese website!