Google's new method ASPIRE: gives LLMs self-scoring capabilities, effectively solves the 'hallucination' problem, and surpasses models 10 times its size

Will the "hallucination" problem of large models soon be solved?

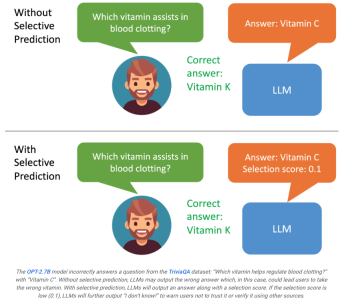

Researchers at the University of Wisconsin-Madison and Google recently launched the ASPIRE system, which enables large models to self-evaluate their output.

If users see that a result generated by the model has a low score, they will realize that the reply may be a hallucination.

If the system can further filter outputs based on the score, for example by having the large model reply with a statement such as "I can't answer this question" when the score is low, it may mitigate the hallucination problem as much as possible.

Paper address: https://aclanthology.org/2023.findings-emnlp.345.pdf

ASPIRE allows an LLM to output both an answer and a confidence score for that answer.

The researchers’ experimental results show that ASPIRE significantly outperforms traditional selective prediction methods on various QA datasets such as the CoQA benchmark.

Let LLMs not only answer questions but also evaluate their own answers.

In selective prediction benchmarks, models using ASPIRE outperformed models more than 10 times their size.

It is a bit like asking students to check their own answers against the key at the back of the textbook. That may sound somewhat unreliable, but on reflection, after finishing a question, everyone does have some sense of how satisfied they are with their answer.

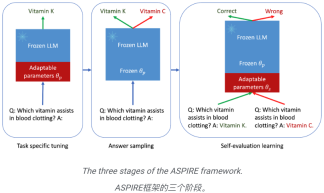

This is the essence of ASPIRE, which involves three phases:

(1) Tuning for a specific task,

(2) Answer sampling,

(3) Self-evaluation learning.

In the researchers' view, ASPIRE is not just another framework; it points toward a future in which LLM reliability is comprehensively improved and hallucinations are reduced.

The hope is that LLMs can become trusted partners in decision-making.

By continuously optimizing the ability to make selective predictions, humans are one step closer to fully realizing the potential of large models.

Researchers hope to use ASPIRE to start the evolution of the next generation of LLM, thereby creating more reliable and self-aware artificial intelligence.

ASPIRE’s mechanics

Fine-tuning for specific tasks

ASPIRE performs task-specific fine-tuning, training adaptable parameters while keeping the LLM frozen. Given a training dataset for a generation task, it adapts the pre-trained LLM to improve its prediction performance.

To this end, parameter-efficient fine-tuning techniques (e.g., soft prompt tuning and LoRA) can be used to adapt the pre-trained LLM to the task, since they achieve strong generalization with only a small amount of target-task data.

Specifically, the LLM parameters θ are frozen and adaptable parameters θ_p are added for fine-tuning. Only θ_p is updated, to minimize the standard LLM training loss (e.g., cross-entropy).
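To make the split between frozen θ and trainable θ_p concrete, here is a minimal toy sketch in Python. It is not ASPIRE's implementation: the "base model" θ is a hypothetical fixed table of logits (FROZEN_THETA), and θ_p is assumed to be a simple per-token logit bias rather than a soft prompt or LoRA weights. Gradient descent on cross-entropy updates only θ_p; the frozen table is never modified.

```python
import math

# Hypothetical frozen "base model" θ: fixed next-token logits over a toy
# 3-token vocabulary for each input. Training never modifies this table.
FROZEN_THETA = {
    "q1": [2.0, 0.5, 0.1],
    "q2": [0.3, 1.5, 0.2],
}


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def adapted_logits(x, theta_p):
    # Output depends on the frozen θ plus the trainable adapter θ_p
    # (assumed here to be a per-token bias added to the logits).
    return [base + delta for base, delta in zip(FROZEN_THETA[x], theta_p)]


def mean_cross_entropy(data, theta_p):
    # data: list of (input key, target token index) pairs
    return sum(-math.log(softmax(adapted_logits(x, theta_p))[y]) for x, y in data) / len(data)


def tune_theta_p(data, steps=200, lr=0.5):
    """Gradient descent on cross-entropy that updates only θ_p."""
    theta_p = [0.0, 0.0, 0.0]
    for _ in range(steps):
        grad = [0.0, 0.0, 0.0]
        for x, y in data:
            probs = softmax(adapted_logits(x, theta_p))
            for k in range(len(grad)):
                # dCE/dlogit_k = p_k - 1[k == y]; the logits are linear in θ_p.
                grad[k] += probs[k] - (1.0 if k == y else 0.0)
        theta_p = [t - lr * g / len(data) for t, g in zip(theta_p, grad)]
    return theta_p
```

For example, tune_theta_p([("q1", 2), ("q2", 2)]) raises the bias on token 2, lowering the mean cross-entropy while FROZEN_THETA stays exactly as it was.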

This kind of fine-tuning can improve selective prediction performance because it not only improves prediction accuracy but also increases the likelihood of correct output sequences.

Answer sampling

After task-specific tuning, ASPIRE uses the LLM with the learned θ_p to generate different answers for each training question, creating a dataset for self-evaluation learning.

The researchers' goal is to generate high-likelihood output sequences. They used beam search as the decoding algorithm to produce these sequences and used the Rouge-L metric to determine whether each generated output sequence is correct.
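The Rouge-L correctness check can be sketched as follows. Rouge-L is based on the longest common subsequence (LCS) between a candidate and a reference; this sketch uses lowercase whitespace tokenization and the F1 form of the score. The 0.7 threshold and the helper names (rouge_l_f1, label_samples) are assumptions for illustration, not values or code taken from the paper.

```python
def lcs_length(a, b):
    # Classic dynamic-programming longest common subsequence over token lists.
    dp = [[0] * (len(b) + 1) for _ in range(len(a) + 1)]
    for i, ta in enumerate(a, 1):
        for j, tb in enumerate(b, 1):
            if ta == tb:
                dp[i][j] = dp[i - 1][j - 1] + 1
            else:
                dp[i][j] = max(dp[i - 1][j], dp[i][j - 1])
    return dp[len(a)][len(b)]


def rouge_l_f1(candidate, reference):
    c, r = candidate.lower().split(), reference.lower().split()
    if not c or not r:
        return 0.0
    lcs = lcs_length(c, r)
    if lcs == 0:
        return 0.0
    precision, recall = lcs / len(c), lcs / len(r)
    return 2 * precision * recall / (precision + recall)


def label_samples(question, sampled_answers, reference, threshold=0.7):
    # Build (question, answer, is_correct) triples for self-evaluation learning.
    return [(question, ans, rouge_l_f1(ans, reference) >= threshold)
            for ans in sampled_answers]
```

Each sampled answer is labeled correct or incorrect against the reference, which is the kind of dataset the self-evaluation stage trains on.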

Self-evaluation learning

After sampling the high-likelihood outputs for each query, ASPIRE adds adaptable parameters θ_s for self-evaluation and fine-tunes only θ_s to learn self-evaluation.

Since generating the output sequence depends only on θ and θ_p, freezing θ and the learned θ_p avoids changing the LLM's prediction behavior while it learns self-evaluation.

The researchers optimized θ_s so that the adapted LLM can distinguish correct from incorrect answers on its own.
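The self-evaluation objective can be illustrated with a toy stand-in. In ASPIRE, θ_s conditions the frozen LLM to judge its own answers; here, purely as an assumption, each (question, answer) pair is represented by a fixed feature vector produced by the frozen model, and θ_s is a small logistic head trained with binary cross-entropy to output the probability that the answer is correct. Only θ_s changes during training.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def self_eval_score(features, theta_s):
    # P_s: predicted probability that the (question, answer) pair is correct.
    w, b = theta_s
    return sigmoid(sum(wi * fi for wi, fi in zip(w, features)) + b)


def train_theta_s(examples, dim, steps=500, lr=0.5):
    """Binary cross-entropy gradient descent on θ_s only.

    examples: list of (features, is_correct). The features stand in for the
    output of the frozen θ/θ_p model and are treated as constants here.
    """
    w, b = [0.0] * dim, 0.0
    for _ in range(steps):
        gw, gb = [0.0] * dim, 0.0
        for feats, label in examples:
            # Gradient of BCE w.r.t. the logit is (prediction - target).
            err = self_eval_score(feats, (w, b)) - (1.0 if label else 0.0)
            gw = [g + err * f for g, f in zip(gw, feats)]
            gb += err
        n = len(examples)
        w = [wi - lr * g / n for wi, g in zip(w, gw)]
        b -= lr * gb / n
    return w, b
```

After training on a few labeled samples, the learned θ_s assigns scores above 0.5 to answers resembling the correct ones and below 0.5 to those resembling the incorrect ones.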

In this framework, any parameter-efficient fine-tuning method can be used to train θ_p and θ_s.

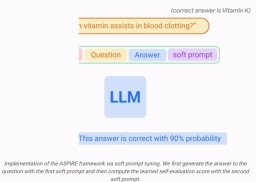

In this work, the researchers used soft prompt tuning, a simple yet effective mechanism that learns "soft prompts" to condition a frozen language model so that it performs specific downstream tasks more effectively than with traditional discrete text prompts.

The core idea behind this approach is the recognition that if there are prompts that can effectively elicit self-evaluation, they should be discoverable through soft prompt tuning combined with targeted training objectives.
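A soft prompt is a short sequence of trainable vectors prepended to the frozen model's input embeddings. The sketch below shows only how such an input is assembled; the embedding table, dimensions, initialization scale, and function names are hypothetical. In practice the soft prompt vectors would be updated by gradient descent while the embedding table and the rest of the model stay frozen.

```python
import random


def embed(token_ids, embedding_table):
    # Frozen embedding lookup (part of θ; never updated).
    return [list(embedding_table[t]) for t in token_ids]


def init_soft_prompt(num_virtual_tokens, dim, seed=0):
    # Trainable soft prompt vectors, initialized with small random values.
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 0.02) for _ in range(dim)]
            for _ in range(num_virtual_tokens)]


def prepend_soft_prompt(soft_prompt, token_embeddings):
    # The frozen LLM then runs on [soft prompt vectors] + [input token embeddings].
    return [list(v) for v in soft_prompt] + token_embeddings
```

A 3-vector soft prompt prepended to a 2-token input yields a 5-position sequence whose last two positions are the unchanged frozen embeddings.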

After training θ_p and θ_s, the researchers obtained predictions for each query via beam search decoding.
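Beam search keeps the beam_width highest-scoring partial sequences at each step instead of committing to a single greedy choice. Below is a minimal sketch over a hypothetical toy next-token model (TOY_LM); it scores sequences by raw cumulative log-probability with no length normalization, which is a simplifying assumption rather than the paper's exact decoding setup.

```python
import math

EOS = "</s>"


def beam_search(next_token_logprobs, beam_width=2, max_len=5):
    """Return the highest-scoring (tokens, log_prob) found by beam search."""
    beams = [((), 0.0)]  # (token tuple, cumulative log-probability)
    finished = []
    for _ in range(max_len):
        candidates = []
        for tokens, score in beams:
            for tok, lp in next_token_logprobs(tokens).items():
                candidates.append((tokens + (tok,), score + lp))
        # Keep only the beam_width best expansions.
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = []
        for tokens, score in candidates[:beam_width]:
            if tokens[-1] == EOS:
                finished.append((tokens, score))
            else:
                beams.append((tokens, score))
        if not beams:
            break
    # Unfinished beams still count if max_len was reached.
    return max(finished + beams, key=lambda c: c[1])


# Hypothetical toy "model": next-token log-probabilities keyed by prefix.
TOY_LM = {
    (): {"Paris": math.log(0.6), "Lyon": math.log(0.4)},
    ("Paris",): {EOS: math.log(0.9), "France": math.log(0.1)},
    ("Lyon",): {EOS: math.log(0.5), "France": math.log(0.5)},
}


def toy_next_token_logprobs(prefix):
    return TOY_LM.get(prefix, {EOS: 0.0})  # unknown prefixes end with certainty
```

On this toy model, beam_search(toy_next_token_logprobs) returns ("Paris", "</s>") with probability 0.6 × 0.9 = 0.54.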

The researchers then define a selection score that combines the likelihood of the generated answer with the learned self-evaluation score (i.e., the predicted probability that the answer is correct for the query) to make selective predictions.
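One plausible form of such a selection score is a weighted sum of the answer's length-normalized log-likelihood and the log of the self-evaluation score. The weight alpha, its default value, the threshold, and the fallback message are assumptions for illustration. The sketch also shows how a low score can trigger an "I can't answer this question" reply instead of the answer.

```python
import math


def normalized_log_likelihood(token_logprobs):
    # Length-normalized log-likelihood of the generated answer.
    return sum(token_logprobs) / len(token_logprobs)


def selection_score(token_logprobs, p_correct, alpha=0.25):
    # Hypothetical weighted combination; p_correct must be in (0, 1].
    return ((1 - alpha) * normalized_log_likelihood(token_logprobs)
            + alpha * math.log(p_correct))


def selective_answer(answer, token_logprobs, p_correct, threshold, alpha=0.25):
    # Return the answer only if its selection score clears the threshold.
    if selection_score(token_logprobs, p_correct, alpha) >= threshold:
        return answer
    return "I can't answer this question."
```

With these definitions, an answer with high likelihood (0.9 per token) but a very low self-evaluation score (0.01) falls below a threshold of log(0.5) and is withheld, which is the behavior selective prediction aims for.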

Results

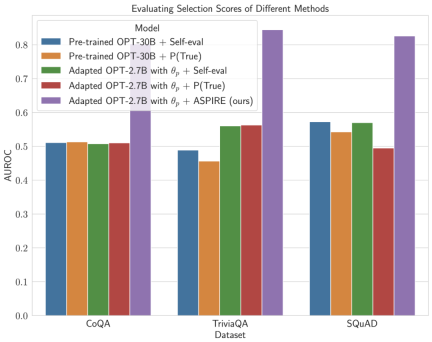

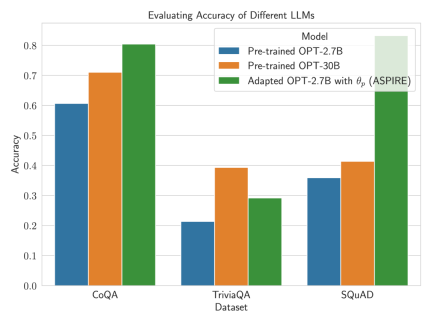

To demonstrate ASPIRE's effectiveness, the researchers evaluated it with various Open Pre-trained Transformer (OPT) models on three question-answering datasets: CoQA, TriviaQA and SQuAD.

By training with soft prompt tuning, the researchers observed a substantial improvement in the LLMs' accuracy.

For example, the OPT-2.7B model with ASPIRE outperformed the larger pre-trained OPT-30B model on the CoQA and SQuAD datasets.

These results suggest that with appropriate tuning, smaller LLMs may have the ability to match or possibly exceed the accuracy of larger models in some situations.

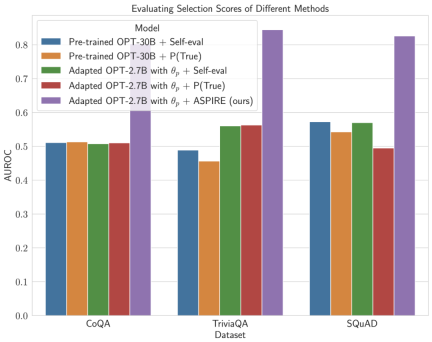

When computing selection scores for fixed model predictions, ASPIRE achieved higher AUROC than the baseline methods on all datasets (AUROC here is the probability that a randomly selected correct output sequence has a higher selection score than a randomly selected incorrect output sequence).
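This AUROC definition translates directly into a pairwise count. The sketch below compares every correct output's selection score with every incorrect output's score and counts ties as half a win; it runs in O(n·m) time, which is fine for illustration but slower than the rank-based formula used by standard libraries.

```python
def auroc(correct_scores, incorrect_scores):
    """P(random correct output scores higher than random incorrect output)."""
    if not correct_scores or not incorrect_scores:
        raise ValueError("need at least one correct and one incorrect example")
    wins = 0.0
    for c in correct_scores:
        for i in incorrect_scores:
            if c > i:
                wins += 1.0
            elif c == i:
                wins += 0.5  # ties count as half
    return wins / (len(correct_scores) * len(incorrect_scores))
```

A score of 0.5 means the selection score separates correct from incorrect outputs no better than chance; 1.0 means every correct output outranks every incorrect one.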

For example, on the CoQA benchmark, ASPIRE improves AUROC from 51.3% to 80.3% compared to the baseline.

An interesting pattern emerged from the evaluation of the TriviaQA dataset.

Although the pre-trained OPT-30B model exhibits higher baseline accuracy, its selective prediction performance does not improve significantly when traditional self-evaluation methods (Self-eval and P(True)) are applied.

In contrast, the much smaller OPT-2.7B model outperformed other models in this regard after being enhanced with ASPIRE.

This difference reflects an important issue: larger LLMs that utilize traditional self-assessment techniques may not be as effective at selective prediction as smaller ASPIRE-enhanced models.

The researchers' experiments with ASPIRE highlight a key shift in the LLM landscape: a language model's capacity is not the be-all and end-all of its performance.

Instead, model effectiveness can be greatly improved through strategic adaptation, enabling more accurate and confident predictions even from smaller models.

Thus, ASPIRE demonstrates the potential of LLMs to judge the certainty of their own answers sensibly and to significantly outperform models 10 times their size on selective prediction tasks.
