Introduction to Transformer positional encoding and how to improve it

Transformer is a deep learning model widely used in natural language processing tasks. It uses a self-attention mechanism to capture the relationships between words in a sequence. Self-attention by itself ignores word order, however. Shuffling the input words only shuffles the outputs in the same way, so the information about where each word appears is lost. To solve this problem, Transformer introduces positional encoding. The basic idea is to assign each position in the sequence a position vector that encodes where the word sits. Adding this vector to the word embedding vector lets the model take the word's position into account.

A common method generates the position vectors with sine and cosine functions: even-numbered dimensions use a sine and odd-numbered dimensions use a cosine, each pair at a different frequency. This encoding allows the model to learn relationships between different positions. In addition to this traditional method, several improved methods have been proposed. For example, the position vectors can be learned as trainable parameters of the network instead of being computed from a fixed formula. They then adapt during training to better capture the position information in the sequence. In short, the Transformer model uses positional encoding to take the order of words into account.
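The following toy example is a sketch and not the Transformer's actual attention layer. It illustrates why positional encoding is needed. It assumes dot-product self-attention with no learned projections, so Q = K = V = the input vectors. The helpers `softmax` and `self_attention` are illustrative names, not a library API. Reordering the input tokens only reorders the outputs: each token gets the same output wherever it sits, so the layer alone cannot tell positions apart.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x):
    """Toy scaled dot-product self-attention with Q = K = V = x (no projections)."""
    d = len(x[0])
    out = []
    for q in x:
        # Score this query token against every token in the sequence.
        scores = [sum(qa * ka for qa, ka in zip(q, k)) / math.sqrt(d) for k in x]
        weights = softmax(scores)
        # Output is the attention-weighted sum of the value vectors.
        out.append([sum(w * v[c] for w, v in zip(weights, x)) for c in range(d)])
    return out


tokens = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
perm = [2, 0, 1]  # reorder the sequence
shuffled = [tokens[p] for p in perm]

out = self_attention(tokens)
out_shuffled = self_attention(shuffled)

# Each token's output is unchanged; only the row order follows the permutation.
for new_idx, old_idx in enumerate(perm):
    assert all(math.isclose(a, b, abs_tol=1e-12)
               for a, b in zip(out_shuffled[new_idx], out[old_idx]))
print("self-attention output is permutation-equivariant")
```

Adding a position vector to each token before attention breaks this symmetry, which is exactly what positional encoding is for.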

1. Basic principles

In Transformer, positional encoding converts position information into a vector. This vector is added to the word's embedding vector to give the final representation of each word. The specific calculation is as follows:

PE_{(i,2j)}=\sin\left(\frac{i}{10000^{2j/d_{model}}}\right)

PE_{(i,2j+1)}=\cos\left(\frac{i}{10000^{2j/d_{model}}}\right)

Here, i is the position of the word in the sequence. 2j and 2j+1 are the even and odd dimension indices of the position encoding vector, so j indexes a pair of dimensions that share one frequency. d_{model} is the embedding dimension of the Transformer model. With this formula we can compute the position encoding value for every position and every dimension. Stacking these values gives a positional encoding matrix, which is added to the word embedding matrix to obtain a position-aware representation of each word.
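The following is a minimal pure-Python sketch of the two formulas above and of the addition to the embedding matrix. The function names `sinusoidal_positional_encoding` and `add_positional_encoding` are illustrative, not from any library. Matrices are plain lists of rows, with shape (sequence length, d_model).

```python
import math


def sinusoidal_positional_encoding(max_len, d_model):
    """Return a max_len x d_model matrix (list of rows) of sinusoidal encodings.

    Even dimensions 2j use sin and odd dimensions 2j+1 use cos, both with
    the angle i / 10000^(2j / d_model) for position i.
    """
    pe = []
    for i in range(max_len):
        row = []
        for dim in range(d_model):
            j = dim // 2  # dimensions 2j and 2j+1 share one frequency
            angle = i / (10000 ** (2 * j / d_model))
            row.append(math.sin(angle) if dim % 2 == 0 else math.cos(angle))
        pe.append(row)
    return pe


def add_positional_encoding(embeddings):
    """Add the positional encoding elementwise to a seq_len x d_model embedding matrix."""
    seq_len, d_model = len(embeddings), len(embeddings[0])
    pe = sinusoidal_positional_encoding(seq_len, d_model)
    return [[e + p for e, p in zip(emb_row, pe_row)]
            for emb_row, pe_row in zip(embeddings, pe)]


pe = sinusoidal_positional_encoding(max_len=4, d_model=6)
print(pe[0])  # position 0: [0.0, 1.0, 0.0, 1.0, 0.0, 1.0]
```

Because the encoding is a fixed function of i, it can be evaluated for any position without any training.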

2. Improvement methods

Although Transformer's positional encoding performs well in many tasks, several improvements to it have been proposed.

1. Learned positional encoding

In the traditional Transformer model, positional encoding is computed from a fixed formula, which cannot adapt to the specific needs of different tasks and data sets. Researchers have therefore proposed ways to learn the positional encoding instead. The simplest approach treats the position vectors as trainable parameters, one vector per position, updated by backpropagation together with the rest of the model. Other approaches use neural networks such as autoencoders or convolutional neural networks, so that the positional encoding adapts to the specific needs of the task and data set. The advantage is that the positional encoding can adjust to the data, which can improve the model's performance on the task. A trade-off is that a lookup table only has entries for positions up to the maximum length used in training, whereas the sinusoidal formula can be evaluated at any position.
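The following sketch shows the simplest form of learned positional encoding: a trainable table with one vector per position. It uses no autograd framework, so the gradient step is written out by hand. The class `LearnedPositionalEmbedding`, its Gaussian initialization (std 0.02), and the plain SGD update are illustrative assumptions, not any specific model's implementation. Because the output is `embedding + table[i]`, the gradient for table row `i` is simply the incoming gradient for position `i`.

```python
import random


class LearnedPositionalEmbedding:
    """Hypothetical minimal trainable position table: one d_model vector per position."""

    def __init__(self, max_len, d_model, seed=0):
        rng = random.Random(seed)
        # Small random initialization; these values are learned during training.
        self.table = [[rng.gauss(0.0, 0.02) for _ in range(d_model)]
                      for _ in range(max_len)]

    def forward(self, embeddings):
        """Add row i of the table to the embedding of the token at position i."""
        if len(embeddings) > len(self.table):
            # A lookup table has no entry for positions beyond max_len.
            raise ValueError("sequence longer than max_len")
        return [[e + p for e, p in zip(emb_row, self.table[i])]
                for i, emb_row in enumerate(embeddings)]

    def sgd_step(self, grad_output, lr):
        """Plain SGD update given dLoss/dOutput for each position.

        output[i] = embedding[i] + table[i], so dOutput[i]/dTable[i] is the
        identity and the gradient for table row i equals grad_output[i].
        """
        for i, grad_row in enumerate(grad_output):
            self.table[i] = [p - lr * g for p, g in zip(self.table[i], grad_row)]


pos_emb = LearnedPositionalEmbedding(max_len=8, d_model=4)
out = pos_emb.forward([[0.0] * 4 for _ in range(3)])
pos_emb.sgd_step([[0.1] * 4 for _ in range(3)], lr=0.01)
```

In a real framework the table would be an ordinary parameter updated by the optimizer. The `ValueError` branch reflects the length limit mentioned above.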

2. Random position encoding

Another improvement is random position encoding. Instead of the fixed position encoding formula, this method randomly samples the set of position encoding vectors used for a sequence. The advantage is that it increases the diversity of positions the model sees, which can improve its robustness and generalization. However, because the position encoding is resampled at every training step, more training time is usually required.
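The article does not specify how the vectors are sampled. The following sketch assumes one way to realize the idea. For each training step, it draws `seq_len` distinct positions from a larger range `range(max_len)`, sorts them so that word order is still preserved, and encodes them with the sinusoidal formula. The function names and the `max_len` parameter are hypothetical.

```python
import math
import random


def sinusoidal_row(pos, d_model):
    """Sinusoidal encoding of a single (possibly large) position index."""
    return [
        math.sin(pos / 10000 ** (2 * (d // 2) / d_model)) if d % 2 == 0
        else math.cos(pos / 10000 ** (2 * (d // 2) / d_model))
        for d in range(d_model)
    ]


def random_position_encoding(seq_len, d_model, max_len, rng):
    """Encode a sequence using randomly sampled, sorted positions.

    Instead of positions 0..seq_len-1, draw seq_len distinct positions from
    range(max_len) and sort them, so relative order is preserved while the
    absolute values change on every call (e.g. every training step).
    """
    positions = sorted(rng.sample(range(max_len), seq_len))
    return positions, [sinusoidal_row(p, d_model) for p in positions]


rng = random.Random(42)
for step in range(2):
    positions, pe = random_position_encoding(seq_len=5, d_model=8, max_len=64, rng=rng)
    print(step, positions)  # an increasing set of positions, resampled each step
```

Sorting the sample is the key design choice. Unsorted random vectors would destroy the order information that positional encoding exists to provide.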

3. Multi-scale position encoding

Multi-scale position encoding improves positional encoding by combining multiple position encoding matrices. Specifically, the researchers add together position encoding matrices computed at different scales to obtain a richer position representation. The advantage of this method is that it can capture position information at several scales at once, which can improve the performance of the model.
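The article does not define "scale", so the following sketch assumes one interpretation: the sinusoidal encoding evaluated at position `i / s` for each scale `s`, with the resulting matrices summed. Larger `s` makes the encoding change more slowly along the sequence, describing position at a coarser granularity. The default scales `(1.0, 4.0, 16.0)` and the function names are hypothetical choices.

```python
import math


def sinusoidal_pe(max_len, d_model, scale=1.0):
    """Sinusoidal encoding evaluated at position i / scale."""
    pe = []
    for i in range(max_len):
        pos = i / scale  # larger scale -> slower variation along the sequence
        row = []
        for d in range(d_model):
            angle = pos / 10000 ** (2 * (d // 2) / d_model)
            row.append(math.sin(angle) if d % 2 == 0 else math.cos(angle))
        pe.append(row)
    return pe


def multi_scale_pe(max_len, d_model, scales=(1.0, 4.0, 16.0)):
    """Sum the position encoding matrices computed at several scales."""
    total = [[0.0] * d_model for _ in range(max_len)]
    for s in scales:
        pe = sinusoidal_pe(max_len, d_model, scale=s)
        for i in range(max_len):
            for d in range(d_model):
                total[i][d] += pe[i][d]
    return total


combined = multi_scale_pe(max_len=10, d_model=8)
```

Summing grows the magnitude with the number of scales. Averaging, or learning a weight per scale, are equally plausible variants.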

4. Local positional encoding

Local positional encoding improves positional encoding by restricting it to a local area. Specifically, the researchers limit the calculation of positional encoding to a certain range around the current word, which reduces the complexity of the positional encoding. The advantage of this approach is that it can reduce computational cost while also improving model performance.
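The following sketch assumes one way to realize a "local" positional encoding, modeled on clipped relative positions. The offset between a query word `i` and a key word `j` is clipped to `[-window, window]`. Words farther than `window` tokens apart therefore share the same edge encoding, and only `2 * window + 1` distinct vectors are needed regardless of sequence length. The function names and the `window` parameter are hypothetical.

```python
def clipped_relative_indices(seq_len, window):
    """Index matrix idx[i][j] in [0, 2*window] for query i and key j.

    The relative offset j - i is clipped to [-window, window], so every key
    farther than `window` tokens away shares the same edge index.
    """
    return [[max(-window, min(window, j - i)) + window for j in range(seq_len)]
            for i in range(seq_len)]


def local_position_encodings(seq_len, window, table):
    """Look up one vector per (query, key) pair from a table of 2*window+1 rows."""
    if len(table) != 2 * window + 1:
        raise ValueError("table must have 2 * window + 1 rows")
    idx = clipped_relative_indices(seq_len, window)
    return [[table[k] for k in row] for row in idx]


idx = clipped_relative_indices(seq_len=5, window=1)
for row in idx:
    print(row)
# [1, 2, 2, 2, 2]
# [0, 1, 2, 2, 2]
# [0, 0, 1, 2, 2]
# [0, 0, 0, 1, 2]
# [0, 0, 0, 0, 1]
```

In practice the table rows would be learned and the looked-up vectors fed into the attention scores. Only the neighborhood of width `window` receives distinct position information.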

In short, positional encoding is an important technique that helps the Transformer capture the positions of words in a sequence, thereby improving the performance of the model. Although traditional positional encoding performs well in many tasks, the improvements above can be selected and combined according to the needs of the task and data set to improve performance further.

The above is the detailed content of Introduction to Transformer positional encoding and how to improve it. For more information, please follow other related articles on the PHP Chinese website!

An easy-to-understand explanation of how to create a VBA macro in ChatGPT!May 14, 2025 am 02:40 AM

An easy-to-understand explanation of how to create a VBA macro in ChatGPT!May 14, 2025 am 02:40 AMFor beginners and those interested in business automation, writing VBA scripts, an extension to Microsoft Office, may find it difficult. However, ChatGPT makes it easy to streamline and automate business processes. This article explains in an easy-to-understand manner how to develop VBA scripts using ChatGPT. We will introduce in detail specific examples, from the basics of VBA to script implementation using ChatGPT integration, testing and debugging, and benefits and points to note. With the aim of improving programming skills and improving business efficiency,

I can't use the ChatGPT plugin function! Explaining what to do in case of an errorMay 14, 2025 am 01:56 AM

I can't use the ChatGPT plugin function! Explaining what to do in case of an errorMay 14, 2025 am 01:56 AMChatGPT plugin cannot be used? This guide will help you solve your problem! Have you ever encountered a situation where the ChatGPT plugin is unavailable or suddenly fails? The ChatGPT plugin is a powerful tool to enhance the user experience, but sometimes it can fail. This article will analyze in detail the reasons why the ChatGPT plug-in cannot work properly and provide corresponding solutions. From user setup checks to server troubleshooting, we cover a variety of troubleshooting solutions to help you efficiently use plug-ins to complete daily tasks. OpenAI Deep Research, the latest AI agent released by OpenAI. For details, please click ⬇️ [ChatGPT] OpenAI Deep Research Detailed explanation:

Does ChatGPT not follow the character count specification? A thorough explanation of how to deal with this!May 14, 2025 am 01:54 AM

Does ChatGPT not follow the character count specification? A thorough explanation of how to deal with this!May 14, 2025 am 01:54 AMWhen writing a sentence using ChatGPT, there are times when you want to specify the number of characters. However, it is difficult to accurately predict the length of sentences generated by AI, and it is not easy to match the specified number of characters. In this article, we will explain how to create a sentence with the number of characters in ChatGPT. We will introduce effective prompt writing, techniques for getting answers that suit your purpose, and teach you tips for dealing with character limits. In addition, we will explain why ChatGPT is not good at specifying the number of characters and how it works, as well as points to be careful about and countermeasures. This article

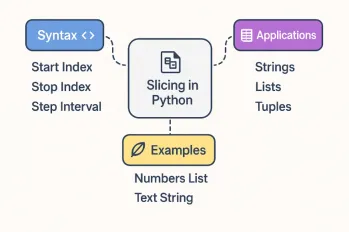

All About Slicing Operations in PythonMay 14, 2025 am 01:48 AM

All About Slicing Operations in PythonMay 14, 2025 am 01:48 AMFor every Python programmer, whether in the domain of data science and machine learning or software development, Python slicing operations are one of the most efficient, versatile, and powerful operations. Python slicing syntax a

An easy-to-understand explanation of how to use ChatGPT to create quotes!May 14, 2025 am 01:44 AM

An easy-to-understand explanation of how to use ChatGPT to create quotes!May 14, 2025 am 01:44 AMThe evolution of AI technology has accelerated business efficiency. What's particularly attracting attention is the creation of estimates using AI. OpenAI's AI assistant, ChatGPT, contributes to improving the estimate creation process and improving accuracy. This article explains how to create a quote using ChatGPT. We will introduce efficiency improvements through collaboration with Excel VBA, specific examples of application to system development projects, benefits of AI implementation, and future prospects. Learn how to improve operational efficiency and productivity with ChatGPT. Op

What is ChatGPT Pro (o1 Pro)? Explaining what you can do, the prices, and the differences between them from other plans!May 14, 2025 am 01:40 AM

What is ChatGPT Pro (o1 Pro)? Explaining what you can do, the prices, and the differences between them from other plans!May 14, 2025 am 01:40 AMOpenAI's latest subscription plan, ChatGPT Pro, provides advanced AI problem resolution! In December 2024, OpenAI announced its top-of-the-line plan, the ChatGPT Pro, which costs $200 a month. In this article, we will explain its features, particularly the performance of the "o1 pro mode" and new initiatives from OpenAI. This is a must-read for researchers, engineers, and professionals aiming to utilize advanced AI. ChatGPT Pro: Unleash advanced AI power ChatGPT Pro is the latest and most advanced product from OpenAI.

We explain how to create and correct your motivation for applying using ChatGPT! Also introduce the promptMay 14, 2025 am 01:29 AM

We explain how to create and correct your motivation for applying using ChatGPT! Also introduce the promptMay 14, 2025 am 01:29 AMIt is well known that the importance of motivation for applying when looking for a job is well known, but I'm sure there are many job seekers who struggle to create it. In this article, we will introduce effective ways to create a motivation statement using the latest AI technology, ChatGPT. We will carefully explain the specific steps to complete your motivation, including the importance of self-analysis and corporate research, points to note when using AI, and how to match your experience and skills with company needs. Through this article, learn the skills to create compelling motivation and aim for successful job hunting! OpenAI's latest AI agent, "Open

What's so amazing about ChatGPT? A thorough explanation of its features and strengths!May 14, 2025 am 01:26 AM

What's so amazing about ChatGPT? A thorough explanation of its features and strengths!May 14, 2025 am 01:26 AMChatGPT: Amazing Natural Language Processing AI and how to use it ChatGPT is an innovative natural language processing AI model developed by OpenAI. It is attracting attention around the world as an advanced tool that enables natural dialogue with humans and can be used in a variety of fields. Its excellent language comprehension, vast knowledge, learning ability and flexible operability have the potential to transform our lives and businesses. In this article, we will explain the main features of ChatGPT and specific examples of use, and explore the possibilities for the future that AI will unlock. Unraveling the possibilities and appeal of ChatGPT, and enjoying life and business

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),

SublimeText3 Chinese version

Chinese version, very easy to use

WebStorm Mac version

Useful JavaScript development tools

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver Mac version

Visual web development tools