An in-depth analysis of adversarial learning techniques in machine learning

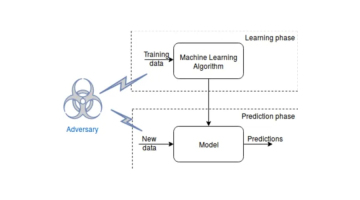

Adversarial learning is a machine learning technique that improves a model's robustness by training it on adversarial examples: challenging samples deliberately constructed to make the model produce inaccurate or wrong predictions. By learning to handle these samples correctly, the trained model adapts better to variation in real-world data, and its performance becomes more stable.

Adversarial attacks on machine learning models

Attacks on machine learning models can be divided into two categories: white-box attacks and black-box attacks. In a white-box attack, the attacker can access the model's structure and parameters to carry out the attack; in a black-box attack, the attacker cannot access this information and typically only observes the model's outputs. Common adversarial attack methods include the fast gradient sign method (FGSM), the basic iterative method (BIM), and the Jacobian-based saliency map attack (JSMA).
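For reference, the first two methods named above are usually written as follows in their untargeted form. The symbols are notation assumed here, not taken from the article: J is the training loss, θ the model parameters, x the clean input, y its true label, ε the maximum perturbation size, and α the per-step size used by BIM.

```latex
% FGSM: a single step of size \epsilon along the sign of the input gradient
x_{\mathrm{adv}} = x + \epsilon \cdot \operatorname{sign}\!\left(\nabla_x J(\theta, x, y)\right)

% BIM: repeated smaller steps of size \alpha, each clipped back into the
% \epsilon-neighbourhood of the original input x
x_0 = x, \qquad
x_{t+1} = \operatorname{Clip}_{x,\epsilon}\!\left(x_t + \alpha \cdot \operatorname{sign}\!\left(\nabla_x J(\theta, x_t, y)\right)\right)
```

Both need the gradient with respect to the input, which is why they are normally described as white-box attacks.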

Why is adversarial learning important for improving model robustness?

Adversarial learning plays an important role in improving model robustness. Training on adversarial examples can help the model generalize better and identify and adapt to the underlying structure of the data. Adversarial examples also expose the model's weaknesses, which provides guidance for improving it. For both reasons, adversarial learning is valuable for model training and optimization.

How to incorporate adversarial learning into machine learning models?

Incorporating adversarial learning into machine learning models requires two steps: generating adversarial examples and incorporating these examples into the training process.

Generating adversarial examples and training with them

There are many ways to generate adversarial examples, including gradient-based methods, genetic algorithms, and reinforcement learning. Gradient-based methods are the most commonly used: they compute the gradient of the loss function with respect to the input and perturb the input in the direction of that gradient to increase the loss.
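The gradient-based approach can be illustrated with a minimal sketch of an FGSM-style step, assuming a two-feature logistic regression model so that the input gradient has a closed form. The weights, the input, and the value of `epsilon` are hypothetical values chosen for illustration, and the code uses only the Python standard library.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(w, b, x):
    """Probability of class 1 under a logistic regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def loss(w, b, x, y):
    """Binary cross-entropy loss for a single example."""
    p = predict_proba(w, b, x)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


def input_gradient(w, b, x, y):
    """Gradient of the loss with respect to the input x, which is (p - y) * w."""
    p = predict_proba(w, b, x)
    return [(p - y) * wi for wi in w]


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, epsilon):
    """Move each input feature by epsilon in the direction that increases the loss."""
    grad = input_gradient(w, b, x, y)
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]


# Hypothetical model parameters and a single labelled input (assumptions).
w, b = [2.0, -1.0], 0.0
x, y = [0.5, 0.2], 1

x_adv = fgsm(w, b, x, y, epsilon=0.5)

print("clean input:", x, "P(class 1) =", predict_proba(w, b, x))
print("adversarial input:", x_adv, "P(class 1) =", predict_proba(w, b, x_adv))
```

For a deep network the input gradient would come from automatic differentiation rather than a closed form, but the perturbation step is the same.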

Adversarial examples can be incorporated into the training process through adversarial training and adversarial data augmentation. In adversarial training, adversarial examples are generated during training and used to update the model parameters; in adversarial data augmentation, adversarial examples are added to the training data. Both aim to improve model robustness.

Adversarial data augmentation is a simple and effective method that is widely used in practice. The basic idea is to add adversarial examples to the training data and then train the model on the augmented data. The trained model learns to predict the correct class labels for both original and adversarial examples, making it more robust to changes and distortions in the data.
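The idea of training on both original and adversarial examples can be sketched as a small training loop. This is an illustrative sketch under stated assumptions, not a reference implementation: the model is a logistic regression trained with plain SGD, adversarial examples are crafted with an FGSM-style step against the current parameters, and the dataset `DATA`, the learning rate, and `epsilon` are hypothetical.

```python
import math
import random


def sigmoid(z):
    # Clamp the logit so math.exp cannot overflow on extreme values.
    z = max(-30.0, min(30.0, z))
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(w, b, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, epsilon):
    """FGSM-style perturbation; the input gradient of the loss is (p - y) * w."""
    p = predict_proba(w, b, x)
    return [xi + epsilon * sign((p - y) * wi) for xi, wi in zip(x, w)]


def sgd_step(w, b, x, y, lr):
    """One gradient step on the cross-entropy loss for a single example."""
    p = predict_proba(w, b, x)
    w = [wi - lr * (p - y) * xi for wi, xi in zip(w, x)]
    return w, b - lr * (p - y)


def train(data, epsilon, epochs=200, lr=0.1, seed=0):
    """Train on clean examples; if epsilon > 0, also train on adversarial ones."""
    rng = random.Random(seed)
    data = list(data)
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        rng.shuffle(data)
        for x, y in data:
            w, b = sgd_step(w, b, x, y, lr)          # update on the original
            if epsilon > 0:
                x_adv = fgsm(w, b, x, y, epsilon)    # craft against current model
                w, b = sgd_step(w, b, x_adv, y, lr)  # update on the adversarial copy
    return w, b


def accuracy(w, b, data):
    return sum((predict_proba(w, b, x) > 0.5) == (y == 1) for x, y in data) / len(data)


def robust_accuracy(w, b, data, epsilon):
    """Accuracy on FGSM-perturbed copies of the data."""
    return accuracy(w, b, [(fgsm(w, b, x, y, epsilon), y) for x, y in data])


# Hypothetical, well-separated toy dataset: (features, label).
DATA = [
    ([2.0, 2.0], 1), ([1.5, 2.5], 1), ([2.5, 1.5], 1), ([1.0, 1.0], 1),
    ([-2.0, -2.0], 0), ([-1.5, -2.5], 0), ([-2.5, -1.5], 0), ([-1.0, -1.0], 0),
]

w_std, b_std = train(DATA, epsilon=0.0)
w_adv, b_adv = train(DATA, epsilon=0.5)

print("standard:    clean", accuracy(w_std, b_std, DATA),
      "robust", robust_accuracy(w_std, b_std, DATA, 0.5))
print("adversarial: clean", accuracy(w_adv, b_adv, DATA),
      "robust", robust_accuracy(w_adv, b_adv, DATA, 0.5))
```

On a toy dataset this easy, the two models may score the same; the sketch is meant to show where the adversarial examples enter the loop, not to demonstrate a measured robustness gain.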

Application examples of adversarial learning

Adversarial learning has been applied to a variety of machine learning tasks, including computer vision, speech recognition, and natural language processing.

In computer vision, adversarial training is used to improve the robustness of image classification models. Making convolutional neural networks (CNNs) more robust in this way can improve their accuracy on unseen data.

In speech recognition, adversarial learning helps improve the robustness of automatic speech recognition (ASR) systems. Adversarial examples in this setting alter the input speech signal in a way designed to be imperceptible to humans while causing the ASR system to mistranscribe it. Research shows that adversarial training can improve the robustness of ASR systems to these adversarial examples, thereby improving recognition accuracy and reliability.

In natural language processing, adversarial learning has been used to improve the robustness of sentiment analysis models. Adversarial examples in NLP manipulate the input text in a way that leads the model to make incorrect predictions. Adversarial training has been shown to improve the robustness of sentiment analysis models to these adversarial examples, resulting in more accurate predictions.
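To make the idea of text manipulation concrete, here is a toy sketch of a greedy word-substitution attack on a sentiment scorer. Everything in it is hypothetical and chosen for illustration: the `LEXICON` weights stand in for a real sentiment model, the `SYNONYMS` table stands in for a real synonym source, and the attack only queries the model's score, as a black-box attacker might.

```python
# Hypothetical word weights standing in for a trained sentiment model.
LEXICON = {
    "great": 2.0, "good": 1.0, "decent": 0.3, "fine": 0.2,
    "slow": -0.8, "sluggish": -1.0, "bad": -1.0, "boring": -1.5,
}

# Hypothetical table of near-synonyms an attacker may substitute.
SYNONYMS = {
    "great": ["decent"],
    "good": ["fine"],
    "slow": ["sluggish"],
}


def score(tokens):
    """Sum of word weights; unknown words contribute nothing."""
    return sum(LEXICON.get(tok, 0.0) for tok in tokens)


def predict(tokens):
    return "positive" if score(tokens) > 0 else "negative"


def substitution_attack(tokens, max_swaps=2):
    """Greedily swap words for near-synonyms until the predicted label flips."""
    original = predict(tokens)
    direction = -1 if original == "positive" else 1
    adv = list(tokens)
    for _ in range(max_swaps):
        if predict(adv) != original:
            break  # the label has already flipped
        best = None  # (gain, position, replacement)
        for i, tok in enumerate(adv):
            for cand in SYNONYMS.get(tok, []):
                trial = adv[:i] + [cand] + adv[i + 1:]
                # Gain is measured only through the model's output score.
                gain = direction * (score(trial) - score(adv))
                if gain > 0 and (best is None or gain > best[0]):
                    best = (gain, i, cand)
        if best is None:
            break  # no substitution moves the score in the desired direction
        adv[best[1]] = best[2]
    return adv


tokens = "the plot was great but the pacing was slow".split()
adv_tokens = substitution_attack(tokens)

print(" ".join(tokens), "->", predict(tokens))
print(" ".join(adv_tokens), "->", predict(adv_tokens))
```

Adversarial training in this setting would add such perturbed sentences, paired with their original labels, back into the training data.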

The above is the detailed content of An in-depth analysis of adversarial learning techniques in machine learning. For more information, please follow other related articles on the PHP Chinese website!

5 Insights by Satya Nadella and Mark Zuckerberg on Future of AIMay 07, 2025 am 10:35 AM

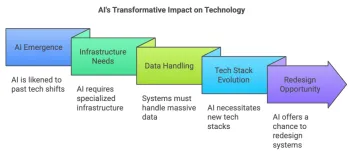

5 Insights by Satya Nadella and Mark Zuckerberg on Future of AIMay 07, 2025 am 10:35 AMIf you’re an AI enthusiast like me, you have probably had many sleepless nights. It’s challenging to keep up with all AI updates. Last week, a major event took place: Meta’s first-ever LlamaCon. The event started with

Top 30 AI Agent Interview QuestionsMay 07, 2025 am 10:24 AM

Top 30 AI Agent Interview QuestionsMay 07, 2025 am 10:24 AMAs AI agents become central to modern-day automation and intelligent systems, the demand for professionals who understand their design, deployment, and orchestration is rising rapidly. Whether you’re preparing for a technic

Cluely.ai: Will This AI Tool Mark the End of Virtual Interviews?May 07, 2025 am 10:11 AM

Cluely.ai: Will This AI Tool Mark the End of Virtual Interviews?May 07, 2025 am 10:11 AMYesterday I saw my roommate preparing for an upcoming interview and she was all over the place – revising topics, practicing codes, and whatnot. Coincidently, I came across an Instagram reel – talking about a tool nam

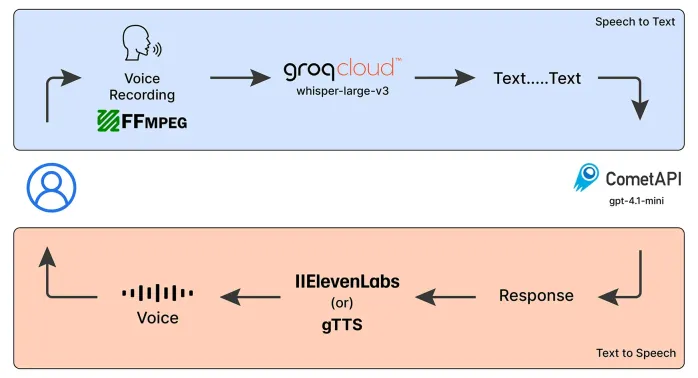

Emergency Operator Voice Chatbot: Empowering AssistanceMay 07, 2025 am 09:48 AM

Emergency Operator Voice Chatbot: Empowering AssistanceMay 07, 2025 am 09:48 AMLanguage models have been rapidly evolving in the world. Now, with Multimodal LLMs taking up the forefront of this Language Models race, it is important to understand how we can leverage the capabilities of these Multimodal model

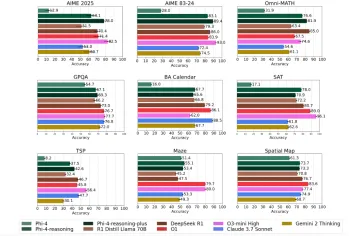

Microsoft's Phi-4 Reasoning Models Explained SimplyMay 07, 2025 am 09:45 AM

Microsoft's Phi-4 Reasoning Models Explained SimplyMay 07, 2025 am 09:45 AMMicrosoft isn’t like OpenAI, Google, and Meta; especially not when it comes to large language models. While other tech giants prefer to launch multiple models almost overwhelming the users with choices; Microsoft launches a few,

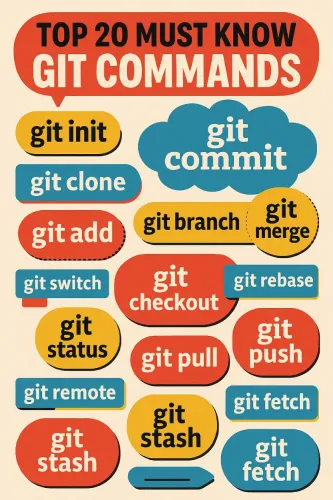

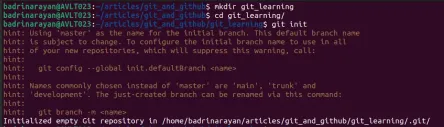

Top 20 Git Commands Every Developer Should Know - Analytics VidhyaMay 07, 2025 am 09:44 AM

Top 20 Git Commands Every Developer Should Know - Analytics VidhyaMay 07, 2025 am 09:44 AMGit can feel like a puzzle until you learn the key moves. In this guide, you’ll find the top 20 Git commands, ordered by how often they are used. Each entry starts with a quick “What it does” summary, followed by an image display

Git Tutorial for BeginnersMay 07, 2025 am 09:36 AM

Git Tutorial for BeginnersMay 07, 2025 am 09:36 AMIn software development, managing code across multiple contributors can get messy fast. Imagine several people editing the same document at the same time, each adding new ideas, fixing bugs, or tweaking features. Without a struct

Top 5 PDF to Markdown Converter for Effortless Formatting - Analytics VidhyaMay 07, 2025 am 09:21 AM

Top 5 PDF to Markdown Converter for Effortless Formatting - Analytics VidhyaMay 07, 2025 am 09:21 AMDifferent formats, such as PPTX, DOCX, or PDF, to Markdown converter is an essential tool for content writers, developers, and documentation specialists. Having the right tools makes all the difference when converting any type of

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Zend Studio 13.0.1

Powerful PHP integrated development environment

SecLists

SecLists is the ultimate security tester's companion. It is a collection of various types of lists that are frequently used during security assessments, all in one place. SecLists helps make security testing more efficient and productive by conveniently providing all the lists a security tester might need. List types include usernames, passwords, URLs, fuzzing payloads, sensitive data patterns, web shells, and more. The tester can simply pull this repository onto a new test machine and he will have access to every type of list he needs.

Dreamweaver CS6

Visual web development tools

Atom editor mac version download

The most popular open source editor

SublimeText3 Mac version

God-level code editing software (SublimeText3)