ByteDance's new video generation model puts VR glasses on the Hulk, with results better than Gen-2!

- WBOY (reposted)

- 2024-01-15 21:12:11

With a single sentence, you can put VR glasses on the Hulk.

4K quality.

Panda's Fantasy Life~

This is MagicVideo-V2, ByteDance's latest AI video generation model, which can bring all kinds of fantastical ideas to life. It not only supports 4K and 8K ultra-high resolutions but also handles a variety of visual styles with ease.

△From left to right: oil painting style, cyber style, design style

In evaluations, it outperforms existing AI video generation tools, including Gen-2 and Pika.

As a result, it attracted a lot of attention within 24 hours of going online. One tweet about it, for example, had nearly 200,000 views.

Many netizens were surprised by the results, and some said bluntly that it is better than Runway and Pika.

"Better than Runway and Pika"

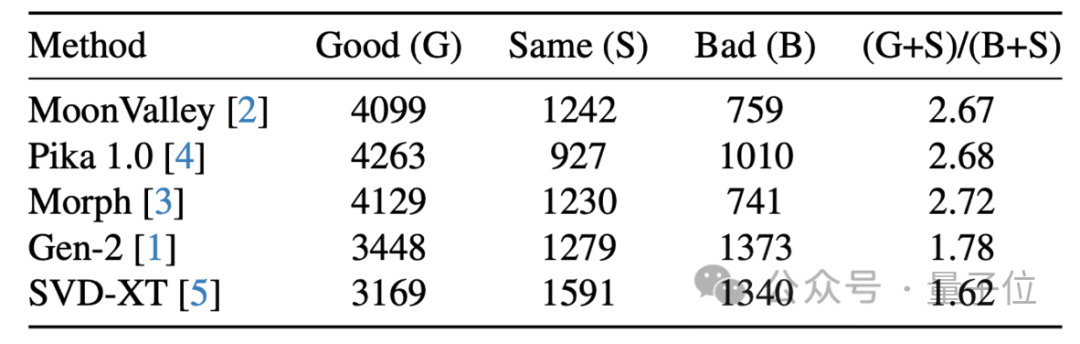

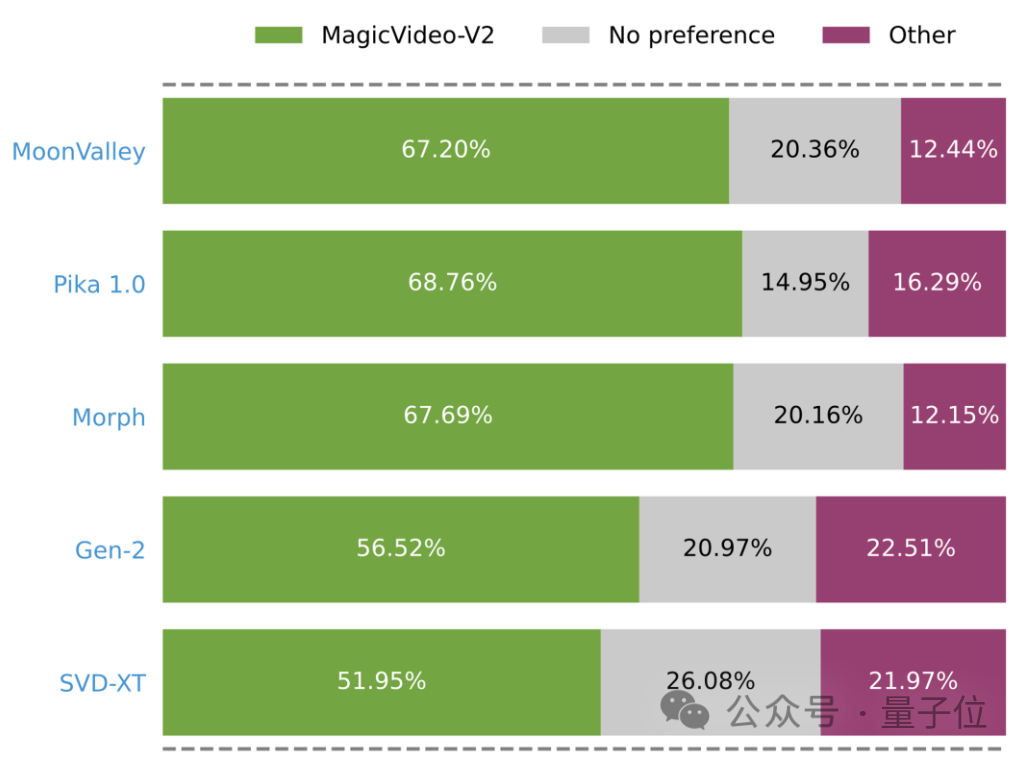

The researchers did run side-by-side comparisons. The contenders are MagicVideo-V2, Stability AI's SVD-XT, the promising newcomer Pika 1.0, and Runway's Gen-2.

Round 1: Light and shadow effects.

As the sun sets, the traveler walks alone in the misty forest.

(From left to right: MagicVideo-V2, SVD-XT, then Pika at the upper right and Gen-2 at the lower right; the same layout applies below.)

As you can see, MagicVideo-V2, Gen-2, and Pika all show clear light and shadow. However, in Pika's result you cannot tell that the figure is a traveler, while MagicVideo-V2 has richer tones.

Round 2: Conveying a scene and storyline.

A sitcom set in the 1910s, telling the story of daily life and trivial matters in society.

In this round, MagicVideo-V2 and Gen-2 are clearly better. The medium-shot composition produced by SVD-XT does convey the era, but it is not expressive enough.

Round 3: Realism.

The little boy was riding a bicycle on the path in the park, the wheels making a crunching sound on the gravel.

(The green, gray and pink bars represent the experimental results in which MagicVideo-V2 was evaluated as better, equivalent or worse respectively.)

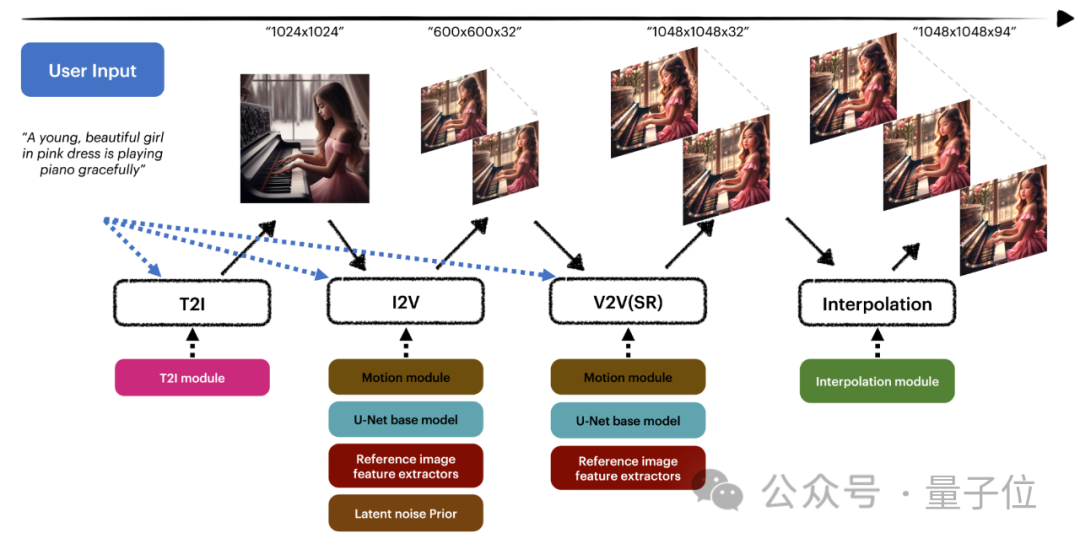

First, the T2I (text-to-image) module generates a 1024×1024 image from the text. The I2V (image-to-video) module then animates this static image into a 600×600×32 frame sequence, the V2V (video-to-video) module enhances and refines the video content, and finally the interpolation module extends the sequence to 94 frames.

In this way, high fidelity and temporal continuity are ensured.
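The four stages can be sketched as a simple chain that tracks only the shape of the data at each step. This is a minimal illustration, not the model's actual code: the `Clip` class and all function names are hypothetical, the V2V stage is assumed to leave the shape unchanged because the article gives no output size for it, and inserting two frames between each adjacent pair is just one assumed way of getting from 32 frames to 94.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Clip:
    """Shape-only stand-in for image/video data (hypothetical helper, no pixels)."""
    frames: int
    height: int
    width: int


def t2i(prompt: str) -> Clip:
    # Text-to-image: one 1024x1024 reference image (size taken from the article).
    return Clip(frames=1, height=1024, width=1024)


def i2v(image: Clip) -> Clip:
    # Image-to-video: animate the still image into 32 frames at 600x600.
    return Clip(frames=32, height=600, width=600)


def v2v(video: Clip) -> Clip:
    # Video-to-video: refines the content. The article gives no output size,
    # so this sketch leaves the shape unchanged (assumption).
    return video


def interpolate(video: Clip, inserted_per_gap: int = 2) -> Clip:
    # ASSUMPTION: a fixed number of new frames is inserted between each
    # adjacent pair; 2 per gap is one choice that yields 94 from 32.
    gaps = video.frames - 1
    return Clip(video.frames + gaps * inserted_per_gap, video.height, video.width)


def run_pipeline(prompt: str) -> list[tuple[str, Clip]]:
    """Run the four stages in order and record the shape after each one."""
    image = t2i(prompt)
    rough = i2v(image)
    refined = v2v(rough)
    final = interpolate(refined)
    return [("T2I", image), ("I2V", rough), ("V2V", refined), ("interpolation", final)]


if __name__ == "__main__":
    for stage, clip in run_pipeline("the Hulk wearing VR glasses"):
        print(f"{stage}: {clip.frames} frame(s) at {clip.width}x{clip.height}")
```

Under that assumption the arithmetic works out: 32 frames have 31 gaps, and 32 + 31 × 2 = 94, which matches the final frame count reported above.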

ByteDance had in fact already released MagicVideo V1 back in November 2022.

At that time, however, the emphasis was on efficiency: it could generate 256×256 video on a single GPU card.

Reference link:

https://twitter.com/arankomatsuzaki/status/1744918551415443768?s=20

Project link:

https://magicvideov2.github.io/

Paper link:

https://arxiv.org/abs/2401.04468

https://arxiv.org/abs/2211.11018
