Nine lessons from 25 years of Linux kernel development

Original text: 9 lessons from 25 years of Linux kernel development

Author: Greg Kroah-Hartman

Translation: Yan Jinghan

The Linux kernel community celebrated its twenty-fifth anniversary in 2016, and many people have asked us for the secret of the project's longevity and success. I usually laugh and joke that I had no idea 25 years had passed. The project has always faced disagreements and challenges. But, seriously, our ability to keep going has a lot to do with the community's ability to reflect and change.

About 16 years ago, most kernel developers had never met one another; we communicated only by email. So Ted Ts'o came up with the idea of the Kernel Summit. Now kernel developers get together every year to solve technical problems and, more importantly, to review what we did right and what we got wrong over the past year. Developers can openly discuss how they communicate with each other and how the development process works. Then we improve the process, develop new tools such as Git, and keep changing the way we collaborate.

Although we do not fully understand all the key reasons for the success of the Linux kernel, some lessons are still worth sharing.

1. Short release cycles are important

In the early days of the Linux project, a major version of the kernel was released only every few years. Users had to wait a long time for new features, which was frustrating for users and distributors alike. More importantly, such a long cycle meant that a huge amount of code had to be integrated at once, and consolidating that much code into a single release was stressful.

Shorter cycles solve all of these problems. New code reaches a stable release in less time. Integrating new code onto a nearly stable baseline allows even fundamental changes to be introduced with minimal impact on the system. Developers know that if they miss one release cycle, another will come in about two months, so they have little reason to merge code prematurely.

2. Scaling the process requires a distributed, hierarchical development model

A long time ago, all change requests went directly to Linus Torvalds, but this soon proved unworkable because no one person can fully grasp a project as complex as an operating system kernel. Very early on, the idea emerged of assigning parts of the kernel to maintainers familiar with each area: for example, networking, wireless, driver subsystems such as PCI or USB, or file systems such as ext2 or vfat. This model has since scaled to hundreds of maintainers responsible for code review and integration, allowing thousands of changes to be included in each release without sacrificing quality.

3. The importance of tools

Kernel development struggled to scale across its growing number of developers until the arrival of BitKeeper, a source code management system. The community's practices changed almost overnight, and the arrival of Git brought another leap. Without the right tools, a project like the kernel cannot function properly and will collapse under its own weight.

4. A strong consensus-oriented model is very important

Generally speaking, if a senior developer objects to a submitted change, the change will not be merged. It is very frustrating for a developer to find that code submitted months ago has been rejected on the mailing list. But this also ensures that the kernel remains suited to a wide range of users and problems: no user community can make changes at the expense of other groups. As a result, we have a single code base that supports everything from tiny systems to supercomputers and can be applied to many scenarios.

5. A strong “no regression” rule is also important

About a decade ago, the kernel development community promised that if a given kernel ran properly in a specific environment, then all subsequent kernel versions would also run properly in that environment. If the community discovers that a change has broken something else, it fixes the problem quickly. This rule assures users that an upgrade will not break their existing systems. As a result, maintainers are willing to keep carrying the kernel forward while new features are developed.

6. It is crucial for companies to participate in the development process, but no single company can dominate kernel development

Since the release of kernel version 3.18 in December 2014, approximately 5,062 individual developers from nearly 500 companies have contributed to the Linux kernel. Most developers are paid for their work, and the changes they make serve the companies they work for. However, although any company can improve the kernel to meet its own needs, no single company can steer development in a direction that harms others or limits the functionality of the kernel.

7. There should be no internal boundaries in the project

Kernel developers necessarily focus on specific parts of the kernel, but any developer can change any part of the kernel as long as the change is justified. As a result, problems are fixed where they arise rather than worked around. Developers hold many and varied views of the kernel as a whole, and even the most stubborn maintainer cannot indefinitely shelve necessary improvements in a given subsystem.

8. Important features start small

The original version 0.01 kernel had only 10,000 lines of code; now more than 10,000 lines are added every two days. Some basic, tiny piece of functionality that developers add today may grow into an important subsystem in the future.

9. To sum up, 25 years of kernel development show that sustained cooperation can produce a common resource that no single group could develop alone

Since 2005, approximately 14,000 individual developers from more than 1,300 companies have contributed to the kernel. The Linux kernel has thus grown into a large-scale public resource through the efforts of many companies that compete fiercely with one another.

The above is the detailed content of A brief discussion on nine experiences summarized from 25 years of Linux kernel development experience. For more information, please follow other related articles on the PHP Chinese website!

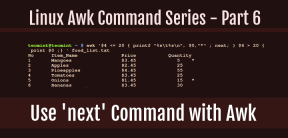

How to Use 'next' Command with Awk in Linux - Part 6May 15, 2025 am 10:43 AM

How to Use 'next' Command with Awk in Linux - Part 6May 15, 2025 am 10:43 AMIn this sixth installment of our Awk series, we will explore the next command, which is instrumental in enhancing the efficiency of your script executions by skipping redundant processing steps.What is the next Command?The next command in awk instruc

How to Efficiently Transfer Files in LinuxMay 15, 2025 am 10:42 AM

How to Efficiently Transfer Files in LinuxMay 15, 2025 am 10:42 AMTransferring files in Linux systems is a common task that every system administrator should master, especially when it comes to network transmission between local or remote systems. Linux provides two commonly used tools to accomplish this task: SCP (Secure Replication) and Rsync. Both provide a safe and convenient way to transfer files between local or remote machines. This article will explain in detail how to use SCP and Rsync commands to transfer files, including local and remote file transfers. Understand the scp (Secure Copy Protocol) in Linux scp command is a command line program used to securely copy files and directories between two hosts via SSH (Secure Shell), which means that when files are transferred over the Internet, the number of

10 Most Popular Linux Desktop Environments of All TimeMay 15, 2025 am 10:35 AM

10 Most Popular Linux Desktop Environments of All TimeMay 15, 2025 am 10:35 AMOne fascinating feature of Linux, in contrast to Windows and Mac OS X, is its support for a variety of desktop environments. This allows desktop users to select the most suitable and fitting desktop environment based on their computing requirements.A

How to Install LibreOffice 24.8 in Linux DesktopMay 15, 2025 am 10:15 AM

How to Install LibreOffice 24.8 in Linux DesktopMay 15, 2025 am 10:15 AMLibreOffice stands out as a robust and open-source office suite, tailored for Linux, Windows, and Mac platforms. It boasts an array of advanced features for handling word documents, spreadsheets, presentations, drawings, calculations, and mathematica

How to Work with PDF Files Using ONLYOFFICE Docs in LinuxMay 15, 2025 am 09:58 AM

How to Work with PDF Files Using ONLYOFFICE Docs in LinuxMay 15, 2025 am 09:58 AMLinux users who manage PDF files have a wide array of programs at their disposal. Specifically, there are numerous specialized PDF tools designed for various functions.For instance, you might opt to install a PDF viewer for reading files or a PDF edi

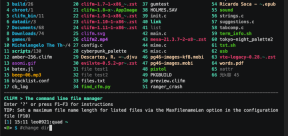

How to Filter Command Output Using Awk and STDINMay 15, 2025 am 09:53 AM

How to Filter Command Output Using Awk and STDINMay 15, 2025 am 09:53 AMIn the earlier segments of the Awk command series, our focus was primarily on reading input from files. However, what if you need to read input from STDIN?In Part 7 of the Awk series, we will explore several examples where you can use the output of o

Clifm - Lightning-Fast Terminal File Manager for LinuxMay 15, 2025 am 09:45 AM

Clifm - Lightning-Fast Terminal File Manager for LinuxMay 15, 2025 am 09:45 AMClifm stands out as a distinctive and incredibly swift command-line file manager, designed on the foundation of a shell-like interface. This means that users can engage with their file system using commands they are already familiar with.The choice o

How to Upgrade from Linux Mint 21.3 to Linux Mint 22May 15, 2025 am 09:44 AM

How to Upgrade from Linux Mint 21.3 to Linux Mint 22May 15, 2025 am 09:44 AMIf you prefer not to perform a new installation of Linux Mint 22 Wilma, you have the option to upgrade from a previous version.In this guide, we will detail the process to upgrade from Linux Mint 21.3 (the most recent minor release of the 21.x series

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Atom editor mac version download

The most popular open source editor

WebStorm Mac version

Useful JavaScript development tools

SublimeText3 English version

Recommended: Win version, supports code prompts!

Dreamweaver Mac version

Visual web development tools

Safe Exam Browser

Safe Exam Browser is a secure browser environment for taking online exams securely. This software turns any computer into a secure workstation. It controls access to any utility and prevents students from using unauthorized resources.