
Microsoft turned GPT-4 into a medical expert with just prompt engineering! It beat more than a dozen heavily fine-tuned models, and accuracy on a professional exam exceeded 90% for the first time

Microsoft's latest research once again demonstrates the power of prompt engineering:

Without additional fine-tuning or expert curation, GPT-4 can become an "expert" with prompts alone.

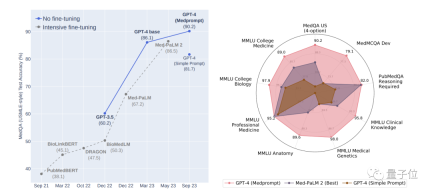

Using Medprompt, the latest prompting strategy the researchers proposed, GPT-4 achieved the best results on all nine test sets of MultiMedQA in the medical domain.

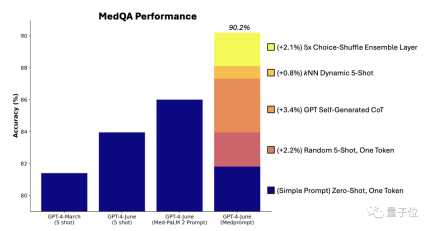

On the MedQA dataset (United States Medical Licensing Examination questions), Medprompt pushed GPT-4's accuracy above 90% for the first time, surpassing BioGPT, Med-PaLM, and a number of other fine-tuned approaches.

The researchers also stated that the Medprompt method is general-purpose: it is not limited to medicine and can be extended to electrical engineering, machine learning, law, and other fields.

As soon as this study was shared on X (formerly Twitter), it attracted the attention of many netizens.

Wharton School professor Ethan Mollick and Artificial Intuition author Carlos E. Perez, among others, reshared it.

Carlos E. Perez said that an excellent prompting strategy can save a lot of fine-tuning effort.

Some netizens said they had long suspected this, and that it is really cool to see the results now.

Other netizens called this really "radical":

GPT-4 is a technology that can change the industry, yet we are still far from hitting the limits of prompting, and the limits of fine-tuning have not been reached either.

Combining prompting strategies to "transform" into an expert

Medprompt is a combination of several prompting strategies, built on three key techniques:

- Dynamic few-shot selection

- Self-generated chain of thought

- Choice shuffling ensemble

Next, we will introduce them one by one.

Dynamic few-shot selection

Few-shot learning is an effective way to get a model to learn from context quickly. Simply put, you provide a few examples so that the model quickly adapts to a specific domain and learns to follow the format of the task.

The few-shot examples used in a task-specific prompt are usually fixed, which places high demands on how representative and broad those examples are.

The previous approach was to have domain experts write the examples by hand, but even then there is no guarantee that a fixed set of expert-curated few-shot examples is representative for every task.

Microsoft researchers therefore proposed dynamic few-shot example selection. The idea is that the task's training set can serve as a source of few-shot examples: if the training set is large enough, different few-shot examples can be selected for different task inputs.

Concretely, the researchers first used the text-embedding-ada-002 model to generate a vector representation of each training sample and each test sample. Then, for each test sample, the k most similar training samples are selected by comparing vector similarity.
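The selection step can be sketched as a nearest-neighbour lookup over embedding vectors. This is a minimal illustration, not the researchers' code: it assumes cosine similarity as the similarity measure, the helper names `cosine_similarity` and `select_few_shot` are hypothetical, and the tiny hand-written vectors stand in for real text-embedding-ada-002 embeddings.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def select_few_shot(test_vec, train_vecs, k):
    """Return the indices of the k training vectors most similar to test_vec."""
    scored = [(cosine_similarity(test_vec, v), i) for i, v in enumerate(train_vecs)]
    # Highest similarity first; ties are broken by the lower index.
    scored.sort(key=lambda s: (-s[0], s[1]))
    return [i for _, i in scored[:k]]

# Toy 3-dimensional vectors, made up for illustration only.
train_vecs = [
    [1.0, 0.0, 0.0],
    [0.9, 0.1, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
]
test_vec = [1.0, 0.05, 0.0]

nearest = select_few_shot(test_vec, train_vecs, k=2)
print(nearest)
```

In a real pipeline the training-set embeddings would be computed once and cached, and the selected training questions (with their answers) would be placed in the prompt ahead of the test question.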

Compared with fine-tuning, dynamic few-shot selection makes use of the training data but does not require extensive updates to model parameters.

Self-generated chain of thought

Chain of thought (CoT) is a method that lets the model think step by step and generate a series of intermediate reasoning steps.

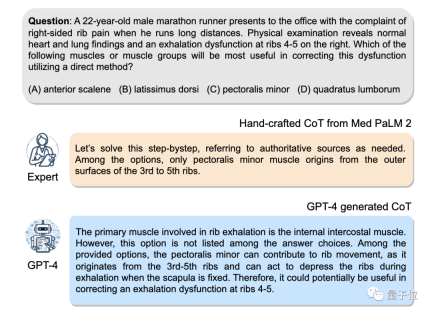

Previous methods relied on experts to manually write examples with chain-of-thought prompts.

Here, the researchers found that GPT-4 can simply be prompted to generate chains of thought for the training examples.

The researchers also pointed out that these automatically generated chains of thought may contain wrong reasoning steps, so they added a label-verification step as a filter: a generated chain is kept only if the answer it reaches matches the ground-truth label, which effectively reduces errors.
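A filter of this kind can be sketched in a few lines. The sketch below assumes the check compares the answer reached by each generated chain with the known training label; the record fields (`question`, `chain`, `predicted_answer`, `label`) and the function name are hypothetical, chosen only for illustration.

```python
def filter_verified_chains(candidates):
    """Keep only generated chains whose final answer equals the ground-truth label."""
    return [c for c in candidates if c["predicted_answer"] == c["label"]]

# Made-up records standing in for GPT-4 outputs on training questions.
candidates = [
    {"question": "Q1", "chain": "step-by-step reasoning ...", "predicted_answer": "B", "label": "B"},
    {"question": "Q2", "chain": "flawed reasoning ...", "predicted_answer": "A", "label": "C"},
    {"question": "Q3", "chain": "step-by-step reasoning ...", "predicted_answer": "D", "label": "D"},
]

kept = filter_verified_chains(candidates)
print([c["question"] for c in kept])
```

A matching final answer does not prove that every reasoning step is sound, so a filter like this reduces errors rather than eliminating them.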

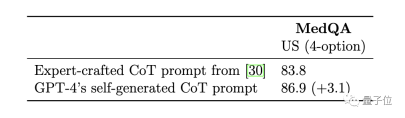

Compared with the chain-of-thought examples hand-crafted by experts for Med-PaLM 2, the rationales generated by GPT-4 are longer, and their step-by-step reasoning is more fine-grained.

Choice shuffling ensemble

GPT-4 may show a bias on multiple-choice questions: it may tend to always choose A, or always choose B, regardless of what the options actually say. This is known as position bias.

To address this, the researchers shuffle the order of the original options to reduce its impact. For example, the original order ABCD can be changed to BCDA, CDAB, and so on.

GPT-4 is then asked to make several rounds of predictions, with a different option order in each round. This "forces" GPT-4 to consider the content of the options.

Finally, a majority vote is taken over the predictions from all rounds, and the most consistent answer is chosen.
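The shuffle-and-vote procedure can be sketched as follows. This is an illustrative sketch under assumptions, not the paper's implementation: `ask_model` is a hypothetical callable that takes a question and a letter-to-text mapping and returns a letter, the number of rounds is arbitrary, and the mock model stands in for GPT-4.

```python
import random
from collections import Counter

def choice_shuffle_ensemble(question, options, ask_model, rounds=5, seed=0):
    """Query the model with shuffled option orders and majority-vote on option text."""
    rng = random.Random(seed)
    letters = "ABCDEFGH"[:len(options)]
    votes = Counter()
    for _ in range(rounds):
        shuffled = options[:]
        rng.shuffle(shuffled)
        picked_letter = ask_model(question, dict(zip(letters, shuffled)))
        # Map the letter back to the option text so votes are comparable across orderings.
        votes[shuffled[letters.index(picked_letter)]] += 1
    return votes.most_common(1)[0][0]

def content_aware_model(question, lettered_options):
    """Hypothetical stand-in for GPT-4: picks the letter whose text is 'aspirin'."""
    for letter, text in lettered_options.items():
        if text == "aspirin":
            return letter
    return "A"

options = ["ibuprofen", "aspirin", "paracetamol", "codeine"]
answer = choice_shuffle_ensemble("Which drug ...?", options, content_aware_model)
print(answer)
```

Voting on the option text rather than the letter is the important design choice: a model that always answers "A" spreads its votes across whichever options happen to land in the first position, while a model that reads the content keeps voting for the same option.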

The combination of the above prompt strategies is Medprompt. Let’s take a look at the test results.

Best results across multiple tests

In the tests, the researchers used the MultiMedQA evaluation benchmark.

GPT-4 with the Medprompt prompting strategy achieved the highest scores on all nine benchmark datasets of MultiMedQA, outperforming Flan-PaLM 540B and Med-PaLM 2.

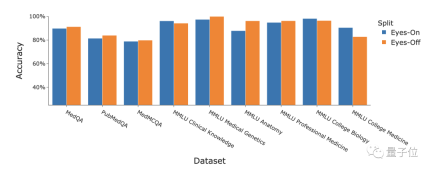

In addition, the researchers examined how the Medprompt strategy performs on "eyes-off" data. "Eyes-off" data is data that is never seen during training or optimization, and it is used to test whether the approach is overfitting the training data.

The results show that GPT-4 combined with the Medprompt strategy performed well on multiple medical benchmark datasets, with an average accuracy of 91.3%.

The researchers conducted ablation experiments on the MedQA dataset to explore the relative contribution of each of the three components to overall performance.

Of these, the automatically generated chain-of-thought step contributed the most to the performance gain.

The chains of thought automatically generated by GPT-4 also scored higher than the ones curated by experts for Med-PaLM 2, and they require no manual intervention.

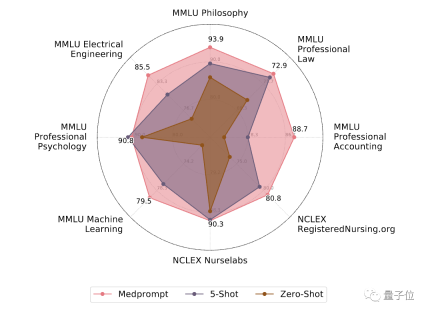

Finally, the researchers also explored Medprompt's cross-domain generalization, using six different datasets from the MMLU benchmark that cover electrical engineering, machine learning, philosophy, professional accounting, professional law, and professional psychology questions.

Two additional datasets containing NCLEX (National Council Licensure Examination, the US nursing licensing exam) questions were also added.

The results show that Medprompt's effect on these datasets is similar to its improvement on the MultiMedQA medical datasets, with average accuracy increasing by 7.3%.

Please click the following link to view the paper: https://arxiv.org/pdf/2311.16452.pdf
