Next-generation technology: Edge intelligence enables real-time data processing and intelligent decision-making

- 王林

- 2023-11-23 20:45:17

Labs Introduction

Edge intelligence is an emerging technology that combines artificial intelligence (AI) and edge computing. Traditional AI applications usually rely on cloud computing centers for data processing and decision-making, but this approach suffers from latency and network bandwidth constraints.

Part 01, What is edge intelligence

Edge intelligence is an emerging technology concept: deploying artificial intelligence (AI) algorithms and models on IoT devices and nearby network nodes close to the data source, so that data can be processed and analyzed in real time. Over the past few years, the rapid development of AI has produced many innovative applications and solutions. However, as AI models grow in scale and complexity, the traditional cloud computing architecture faces a series of challenges, such as high latency, network congestion, and data privacy issues. The combination of edge computing and AI emerged to overcome these challenges, forming the concept of edge intelligence. Edge intelligence moves the training and inference of AI models to edge devices closer to users, such as smartphones, sensors, routers, and surveillance cameras. Processing and analyzing data in real time on these devices and making decisions locally avoids the delays and security risks of sending all data to the cloud, and opens up many new opportunities for AI applications.

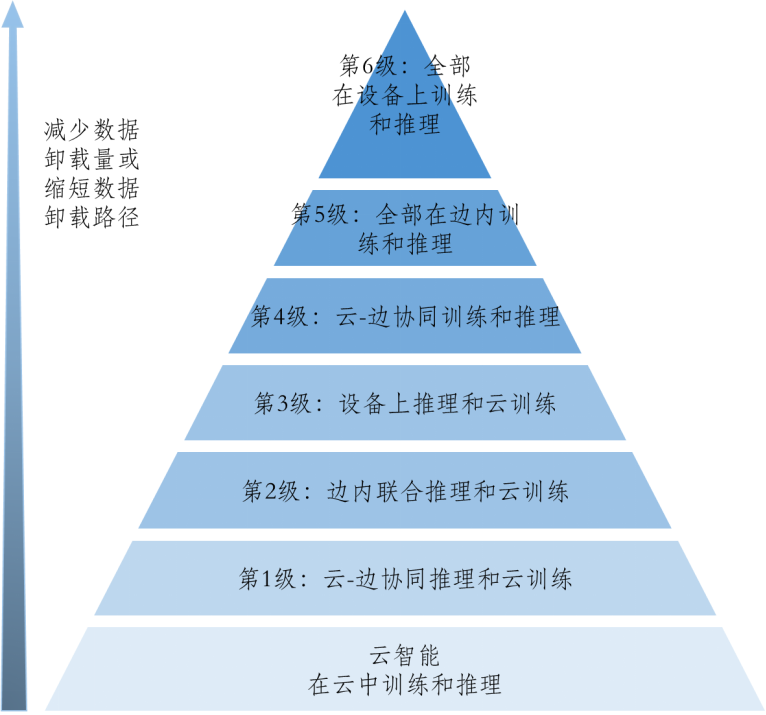

Regarding the scope and levels of edge intelligence, existing research holds that edge intelligence is the use of available data and resources across the hierarchy of end devices, edge nodes, and cloud data centers to optimize the overall training and inference performance of deep neural network (DNN) models. This means that training or inference does not necessarily have to run at the edge; cloud, edge, and device can instead work together through data offloading. Edge intelligence is divided into six levels based on the amount and path length of data offloading.

As the edge intelligence level increases, the amount and path length of data offloading decrease, which reduces the transmission delay of data offloading, improves data privacy, and lowers network bandwidth costs. This comes at the cost of increased computation latency and energy consumption.
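The trade-off between transmission delay and computation latency can be made concrete with a toy latency model. The sketch below is only illustrative: the `Placement` class, the helper functions, and every number in `PLACEMENTS` are assumptions made up for this example, not measurements or definitions from the research the article summarizes. Energy consumption is not modeled.

```python
from dataclasses import dataclass


@dataclass
class Placement:
    """Hypothetical description of where a workload runs and what it offloads."""
    name: str
    offloaded_mb: float  # data sent off the device per request, in megabytes
    hops: int            # path length of the offloading route, in network hops
    compute_ms: float    # time to run the model at that location, in milliseconds


def transmission_ms(p: Placement, bandwidth_mbps: float, per_hop_ms: float) -> float:
    """Transfer time for the offloaded data plus a fixed delay per hop."""
    transfer_ms = p.offloaded_mb * 8 / bandwidth_mbps * 1000  # MB -> Mbit -> s -> ms
    return transfer_ms + p.hops * per_hop_ms


def total_ms(p: Placement, bandwidth_mbps: float, per_hop_ms: float) -> float:
    """End-to-end latency: transmission delay plus computation latency."""
    return transmission_ms(p, bandwidth_mbps, per_hop_ms) + p.compute_ms


# Made-up numbers: less data and fewer hops as work moves toward the device,
# but slower computation on the more constrained hardware.
PLACEMENTS = [
    Placement("mostly cloud", offloaded_mb=2.0, hops=10, compute_ms=20.0),
    Placement("mostly edge", offloaded_mb=0.5, hops=2, compute_ms=60.0),
    Placement("all on device", offloaded_mb=0.0, hops=0, compute_ms=300.0),
]

if __name__ == "__main__":
    for p in PLACEMENTS:
        tx = transmission_ms(p, bandwidth_mbps=20.0, per_hop_ms=5.0)
        print(f"{p.name}: transmission {tx:.0f} ms, total {tx + p.compute_ms:.0f} ms")
```

In this toy model the transmission term shrinks to zero as offloading disappears, while the compute term grows. Which placement has the lowest total depends entirely on the assumed bandwidth and hardware figures.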

Part 02. Edge intelligent model training

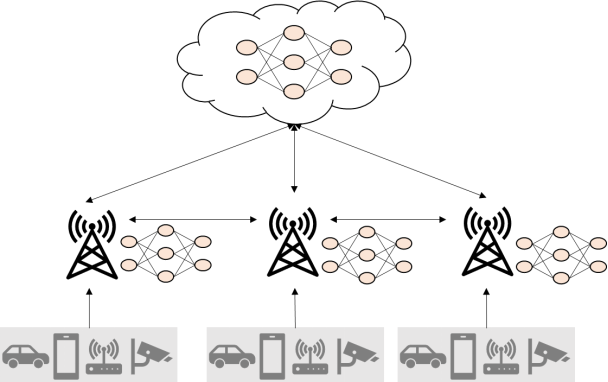

The distributed DNN training architecture at the edge can be divided into three modes: centralized, distributed, and hybrid (cloud-edge-device collaboration).

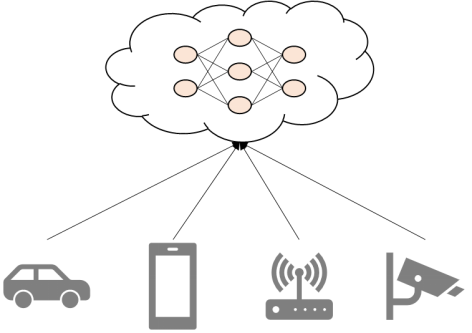

➪ Centralized: The DNN model is trained in the cloud data center. The training data is generated and collected by distributed end devices (such as mobile phones, cars, and surveillance cameras). Once the data arrives, the cloud data center uses it for DNN training. A system based on the centralized architecture corresponds to Level 1, Level 2, or Level 3 of edge intelligence, depending on the inference method the system adopts.

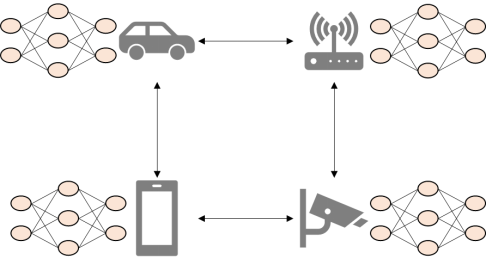

➪ Distributed: Each computing node trains its own DNN model locally on local data and keeps private information local. A global DNN model is obtained by sharing local training updates. In this mode, the global DNN model can be trained without the intervention of the cloud data center, corresponding to Level 5 of edge intelligence.

➪ Hybrid (cloud-edge-device collaboration): Combines the centralized and distributed modes. Edge servers can train DNN models through distributed updates, or use the cloud data center for centralized training. This corresponds to Level 4 and Level 5 of edge intelligence.
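To illustrate the distributed mode, where nodes train on private data and share only their training updates, here is a minimal sketch in the style of federated averaging. The article does not name a specific aggregation method, so the size-weighted averaging is an assumption. A tiny linear model stands in for a DNN, and all names (`local_update`, `aggregate`, `train`, `NODE_DATA`) and the noise-free toy data are hypothetical.

```python
from typing import List, Sequence, Tuple

Weights = Tuple[float, float]            # (slope w, intercept b) of y = w * x + b
Dataset = Sequence[Tuple[float, float]]  # private (x, y) samples held by one node


def local_update(weights: Weights, data: Dataset, lr: float = 0.1, epochs: int = 5) -> Weights:
    """Run a few full-batch gradient descent steps on one node's private data."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return (w, b)


def aggregate(updates: List[Weights], sizes: List[int]) -> Weights:
    """Average the nodes' weights, weighted by how many samples each node holds."""
    total = sum(sizes)
    w = sum(u[0] * n for u, n in zip(updates, sizes)) / total
    b = sum(u[1] * n for u, n in zip(updates, sizes)) / total
    return (w, b)


def train(node_datasets: List[Dataset], rounds: int = 30) -> Weights:
    """Alternate local training and aggregation; raw samples never leave a node."""
    global_weights: Weights = (0.0, 0.0)
    for _ in range(rounds):
        updates = [local_update(global_weights, d) for d in node_datasets]
        global_weights = aggregate(updates, [len(d) for d in node_datasets])
    return global_weights


# Toy private datasets, all drawn from y = 2x + 1 (an assumption for the demo).
NODE_DATA = [
    [(-1.0, -1.0), (1.0, 3.0)],
    [(-1.0, -1.0), (0.0, 1.0), (1.0, 3.0)],
    [(0.0, 1.0), (1.0, 3.0), (-0.5, 0.0), (0.5, 2.0)],
]

if __name__ == "__main__":
    print(train(NODE_DATA))
```

Only the `(w, b)` pairs cross node boundaries here. The aggregation step could run on any participating edge node, which fits the article's point that no cloud data center is needed.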

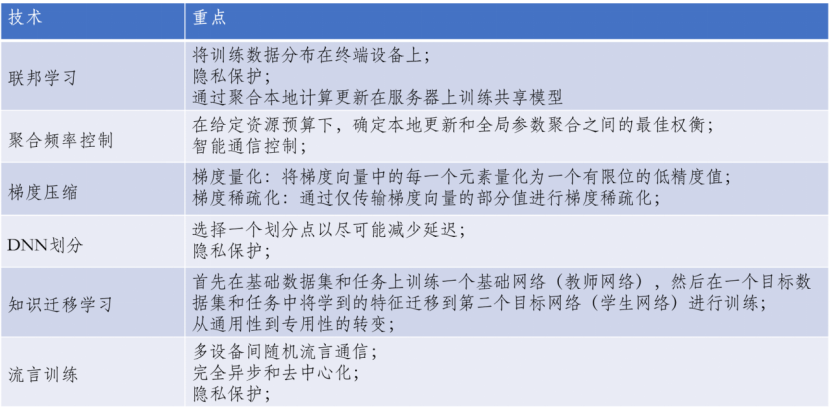

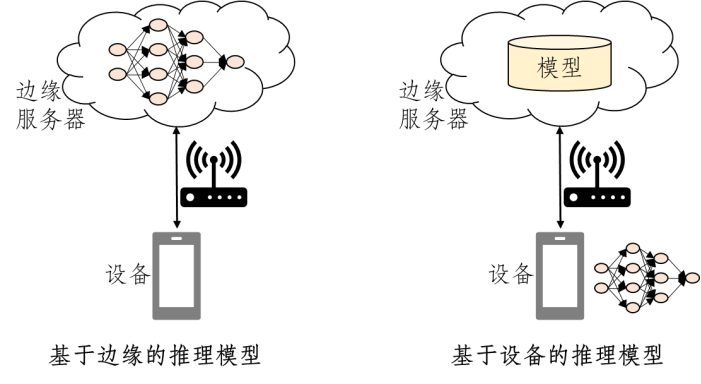

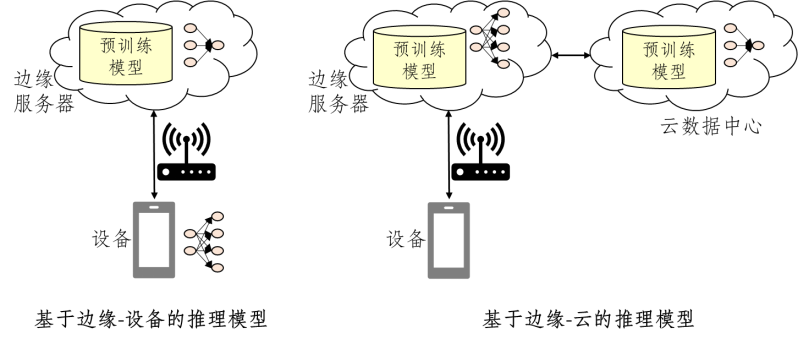

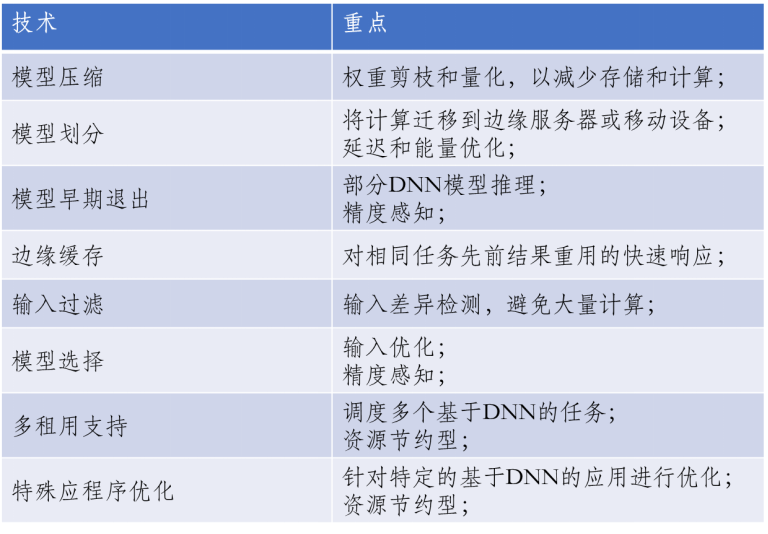

Currently, edge intelligence model training methods are evaluated mainly on six key performance indicators: training loss, convergence, privacy, communication cost, latency, and energy efficiency. The technologies supported by edge intelligence model training are as follows:

Part 03, Edge Intelligence Model Inference

Deploying high-quality edge intelligence services requires not only distributed training of deep learning models but also efficient model inference at the edge. Edge intelligence inference is divided into four modes: edge-based, device-based, edge-device, and edge-cloud.

➪ Edge-based inference: In edge mode, the device receives input data and sends it to the edge server. The edge server completes the DNN model inference and returns the prediction results to the device. Inference performance depends on the network bandwidth between the device and the edge server.

➪ Device-based inference: In device mode, the mobile device obtains the DNN model from the edge server and completes the model inference locally. During inference the mobile device does not communicate with the edge server, so the device itself must have sufficient resources such as CPU, GPU, and RAM.

➪ Edge-device inference: In edge-device mode, the device first divides the DNN model into multiple parts based on factors such as network bandwidth, device resources, and edge server load. It then executes the DNN model up to a specific layer and sends the intermediate data to the edge server. The edge server executes the remaining layers and sends the prediction results back to the device.

➪ Edge-cloud inference: In edge-cloud mode, the device is responsible for collecting input data, and the DNN model is executed through cloud-edge collaboration.

The performance of edge intelligence model inference is evaluated mainly on six indicators: latency, accuracy, energy efficiency, privacy, communication cost, and memory usage. The technologies supported by edge intelligence model inference are as follows:

Part 04. Research Direction of Edge Intelligence

As an emerging technology field, edge intelligence has broad research directions and development potential. Based on the technical characteristics and application scenarios of edge intelligence, future research can be conducted from the following aspects:
