Let large AI models ask questions autonomously: GPT-4 breaks down barriers to talking to humans and demonstrates higher levels of performance

Recent work in artificial intelligence shows that the quality of human-written prompts has a decisive impact on the response accuracy of large language models (LLMs). OpenAI advises that precise, detailed, and specific questions are critical to the performance of these models. However, can ordinary users ensure that their questions are clear enough for an LLM?

There is a significant difference between how humans naturally understand certain expressions and how a machine interprets them. For example, to a human the phrase "even months" obviously refers to even-numbered months such as February and April, but GPT-4 may interpret it as months with an even number of days. This reveals the limits of current models in understanding everyday context, and it prompts us to reflect on how to communicate with large language models more effectively. As the technology advances, bridging this gap in language understanding between humans and machines remains an important topic for future research.
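To make the ambiguity concrete, the following minimal sketch computes both readings of "even months". The function names are hypothetical and chosen only for illustration; the point is that the two interpretations produce different sets of months.

```python
import calendar


def even_numbered_months() -> list[int]:
    """Human reading: months whose number in the year is even (Feb, Apr, ...)."""
    return [month for month in range(1, 13) if month % 2 == 0]


def months_with_even_day_count(year: int) -> list[int]:
    """Alternative reading: months that contain an even number of days."""
    return [
        month
        for month in range(1, 13)
        # monthrange returns (weekday of first day, number of days in month)
        if calendar.monthrange(year, month)[1] % 2 == 0
    ]


if __name__ == "__main__":
    print(even_numbered_months())
    print(months_with_even_day_count(2023))
```

In a non-leap year the two readings overlap only partly: February, April, and June appear in both, while August, October, and December are even-numbered but have 31 days.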

To address this, the General Artificial Intelligence Laboratory at the University of California, Los Angeles (UCLA), led by Professor Gu Quanquan, released a research report proposing a solution to the ambiguity problem in how large language models (such as GPT-4) understand questions. The research was carried out by doctoral students Deng Yihe, Zhang Weitong and Chen Zixiang.

- Paper address: https://arxiv.org/pdf/2311.04205.pdf

- Project address: https://uclaml.github.io/Rephrase-and-Respond

The core of this solution is to let the large language model rephrase and expand the question it is asked before answering, which improves answer accuracy. The study found that questions reformulated by GPT-4 became more detailed and were stated in a clearer format. Experiments show that, on some tasks, a well-rephrased question raises answer accuracy from 50% to nearly 100%. This improvement not only demonstrates the potential of large language models to improve themselves, but also offers a new perspective on how AI can process and understand human language more effectively.

Method

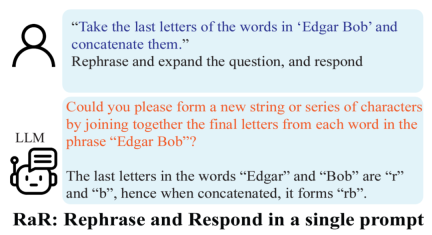

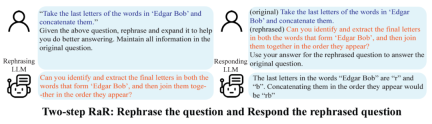

Based on the above findings, the researchers proposed a simple but effective prompt: "Rephrase and expand the question, and respond" (RaR for short). Appending this prompt to a question directly improves the quality of the LLM's answer.
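As a rough illustration, one-step RaR amounts to attaching the instruction to the user's question in a single prompt. The sketch below only builds the prompt string; the helper name `build_rar_prompt` and the exact layout (quoted question followed by the instruction on a new line) are assumptions, not the paper's reference implementation.

```python
# Instruction text as quoted in the article.
RAR_INSTRUCTION = "Rephrase and expand the question, and respond."


def build_rar_prompt(question: str) -> str:
    """Return a single prompt asking the model to rephrase, expand, then answer.

    The layout used here (quoted question, then the instruction) is an
    illustrative choice, not something the article specifies.
    """
    return f'"{question.strip()}"\n{RAR_INSTRUCTION}'


if __name__ == "__main__":
    # Hypothetical question used only to show the resulting prompt.
    print(build_rar_prompt("Was the person born in an even month?"))
```

The resulting string can be sent to any chat or completion endpoint as the user message; no model-specific API is assumed here.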

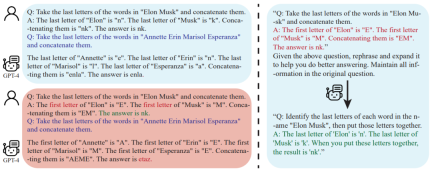

The research team also proposed a variant of RaR called "Two-step RaR" to take full advantage of the ability of large models such as GPT-4 to restate a question. This approach follows two steps: first, for a given question, a dedicated Rephrasing LLM generates a rephrased question; second, the original question and the rephrased question are combined and used to prompt a Responding LLM for an answer.
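The two steps can be sketched as follows. Here an "LLM" is modeled as any Python callable that maps a prompt string to a completion string, so the rephrasing and responding models can differ. The template wording and the names `two_step_rar`, `REPHRASE_TEMPLATE`, and `RESPOND_TEMPLATE` are illustrative assumptions and are not quoted from the paper.

```python
from typing import Callable

# Any function that takes a prompt and returns the model's text output.
LLM = Callable[[str], str]

# Illustrative wording only (assumption); adapt to the prompts you actually use.
REPHRASE_TEMPLATE = (
    '"{question}"\n'
    "Rephrase and expand the question above so it is easier to answer. "
    "Keep all information from the original question."
)
RESPOND_TEMPLATE = (
    "(original) {question}\n"
    "(rephrased) {rephrased}\n"
    "Use the rephrased question to help answer the original question."
)


def two_step_rar(question: str, rephraser: LLM, responder: LLM) -> str:
    """Step 1: ask the rephrasing model for a clearer question.
    Step 2: give both versions of the question to the responding model."""
    rephrased = rephraser(REPHRASE_TEMPLATE.format(question=question)).strip()
    return responder(RESPOND_TEMPLATE.format(question=question, rephrased=rephrased))


if __name__ == "__main__":
    # Stub models so the sketch runs without any API access.
    def stub_rephraser(prompt: str) -> str:
        return "Is the birth month an even-numbered month (Feb, Apr, Jun, ...)?"

    def stub_responder(prompt: str) -> str:
        return f"[responder received]\n{prompt}"

    print(two_step_rar("Was the person born in an even month?", stub_rephraser, stub_responder))
```

Passing the models in as callables keeps the sketch independent of any particular provider, and it allows a stronger model to rephrase on behalf of a weaker responder.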

Results

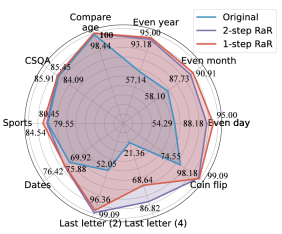

The researchers ran experiments on a range of tasks. The results show that both one-step RaR and Two-step RaR effectively improve the answer accuracy of GPT-4. Notably, RaR shows significant improvements on tasks that would otherwise be challenging for GPT-4, even approaching 100% accuracy in some cases. The research team summarized the following two key conclusions:

1. Rephrase and Respond (RaR) provides a plug-and-play, black-box prompting method that can effectively improve LLM performance on a variety of tasks.

2. When evaluating the performance of LLM on question-answering (QA) tasks, it is crucial to check the quality of the questions.

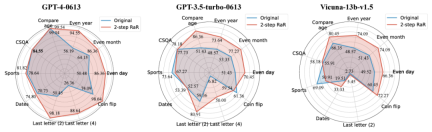

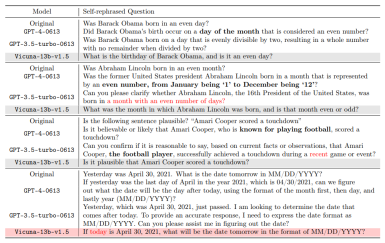

The researchers used the Two-step RaR method to compare the performance of different models, including GPT-4, GPT-3.5 and Vicuna-13b-v1.5. The results show that for more capable models, such as GPT-4, RaR significantly improves answer accuracy. For less capable models, such as Vicuna, the improvement is smaller, but it still demonstrates the effectiveness of the RaR strategy. The researchers then examined the quality of the questions as rephrased by the different models. Questions rephrased by smaller models can sometimes distort the intent of the original question, whereas advanced models like GPT-4 produce rephrased questions that match human intent and can improve the answers of other models.

The findings reveal an important phenomenon: the quality and effectiveness of rephrased questions differ across language models of different capability levels. For advanced models like GPT-4 in particular, the rephrased questions not only give the model itself a clearer understanding of the problem, but can also serve as effective input for improving the performance of other, smaller models.

Difference with Chain of Thought (CoT)

To clarify the difference between RaR and Chain of Thought (CoT), the researchers give a mathematical formulation of both methods, showing how RaR differs from CoT and how the two can easily be combined.

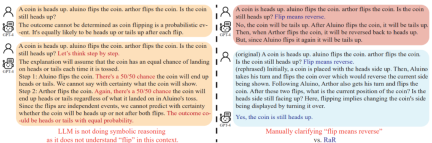
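At the prompt level, one plausible way to combine the two methods is to append both instructions to the same question. The sketch below is an assumption about how such a combination could look, not the paper's formulation; `build_prompt` and its flags are hypothetical names.

```python
# Both instruction strings appear in the article; how they are joined is an assumption.
RAR_SUFFIX = "Rephrase and expand the question, and respond."
COT_SUFFIX = "Let's think step by step."


def build_prompt(question: str, use_rar: bool = True, use_cot: bool = False) -> str:
    """Compose a zero-shot prompt with optional RaR and CoT instructions."""
    parts = [question.strip()]
    if use_rar:
        parts.append(RAR_SUFFIX)  # ask the model to clarify the question first
    if use_cot:
        parts.append(COT_SUFFIX)  # then ask for step-by-step reasoning
    return "\n".join(parts)


if __name__ == "__main__":
    # Hypothetical question used only for demonstration.
    print(build_prompt("A coin is heads up. Alice flips it. Is it still heads up?",
                       use_rar=True, use_cot=True))
```

Keeping the two instructions as independent switches reflects that RaR targets the question while CoT targets the reasoning, so either can be used alone.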

Before delving into how to enhance a model's reasoning capabilities, the study points out that the quality of the questions should be improved first, so that the model's reasoning capabilities can be assessed properly. For example, in the "coin flip" problem, GPT-4 was found to understand "flip" as a random toss, which differs from the human intention of turning the coin over. Even when "let's think step by step" is used to guide the model's reasoning, this misunderstanding persists throughout the reasoning process. Only after the question was clarified did the large language model answer the intended question.

Further, the researchers noticed that, in addition to the question text, the Q&A examples used for few-shot CoT are also written by humans. This raises the question: how do large language models react when these human-written examples are flawed? The study provides an interesting example and finds that poor few-shot CoT examples can have a negative impact on the LLM. Take the "Last Letter Concatenation" task: the examples used previously had a positive effect on model performance. However, when the logic of the prompt changed, for example from finding the last letter to finding the first letter, GPT-4 gave the wrong answer. This highlights how sensitive the model is to human-written examples.

The researchers found that with RaR, GPT-4 can fix logical flaws in the given examples, thereby improving the quality and robustness of few-shot CoT.

Conclusion

Communication between humans and large language models (LLMs) is prone to misunderstanding: a question that seems clear to humans may be understood by a large language model as a different question. The UCLA research team addresses this problem by proposing RaR, a novel method that prompts the LLM to restate and clarify the question before answering it.

The effectiveness of RaR is confirmed by experimental evaluations on several benchmark datasets. Further analysis shows that restating a question improves its quality, and that this improvement transfers between different models.

Looking ahead, methods like RaR are expected to keep improving, and their integration with other methods such as CoT should make interaction between humans and large language models more accurate and efficient, ultimately expanding the boundaries of AI's explanation and reasoning capabilities.
