Optimize learning efficiency: transfer old models to new tasks with 0.6% additional parameters

The goal of continual learning is to imitate the human ability to keep accumulating knowledge across a sequence of tasks. Its main challenge is to maintain performance on previously learned tasks after learning new ones, that is, to avoid catastrophic forgetting. Continual learning differs from multi-task learning in that the latter has access to all tasks at once, so the model can learn them simultaneously; in continual learning, tasks arrive one at a time, and the model can only learn from the current task while trying not to forget old knowledge as it acquires new knowledge.

Researchers from the University of Southern California and Google Research have proposed a new continual learning method, Channel-wise Lightweight Reprogramming (CLR). A trainable lightweight module is added to a fixed, task-invariant backbone, and the feature map of each channel in each layer is reprogrammed so that it suits the new task. The trainable module accounts for only about 0.6% of the backbone's parameters. Each new task gets its own lightweight module, so in theory an unlimited number of new tasks can be learned in sequence without catastrophic forgetting. The paper was published at ICCV 2023.

- Paper address: https://arxiv.org/pdf/2307.11386.pdf

- Project address: https://github.com/gyhandy/Channel-wise-Lightweight-Reprogramming

- Dataset address: http://ilab.usc.edu/andy/skill102

Methods for continual learning generally fall into three categories: regularization-based methods, dynamic network methods, and replay methods.

- Regularization-based methods constrain parameter updates while the model learns a new task, consolidating old knowledge as new knowledge is learned.

- Dynamic network methods add task-specific parameters and restrict changes to the weights of old tasks when learning a new task.

- Replay methods assume that part of the old tasks' data is available when learning a new task, and train on it together with the new task's data.

The CLR method proposed in the paper is a dynamic network method. The figure below shows the overall pipeline: the researchers use a fixed, task-independent backbone as the parameters shared across tasks, and add task-specific parameters to reprogram the channel features. To keep the number of reprogramming parameters trained for each task small, the researchers only adjust the output of the kernels in the model, learning a channel-wise linear mapping from the backbone to task-specific knowledge to implement the reprogramming. In continual learning, each new task is trained to obtain one lightweight module, which has very few trainable parameters. Even with many tasks, the total number of parameters that must be trained remains small compared with a large model, and each lightweight module achieves good results.
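The claim that the total stays small even with many tasks can be checked with some quick arithmetic. The sketch below is only an illustration: the backbone size of roughly 25.6 million parameters (about the size of a ResNet-50) is an assumption, the 0.6% figure is the rounded value quoted in this article, and the function names are hypothetical.

```python
# Assumption: ~25.6M parameters, roughly the size of a ResNet-50 backbone.
BACKBONE_PARAMS = 25_600_000
# From the article: each task's lightweight module is about 0.6% of the backbone.
# Integer arithmetic (6 per 1000) avoids floating-point rounding surprises.
PER_TASK_PARAMS = BACKBONE_PARAMS * 6 // 1000


def extra_params(num_tasks: int) -> int:
    """Total trainable parameters added after learning `num_tasks` tasks."""
    return PER_TASK_PARAMS * num_tasks


def full_copies(num_tasks: int) -> int:
    """Parameters needed if every task kept its own fine-tuned backbone copy."""
    return BACKBONE_PARAMS * num_tasks


if __name__ == "__main__":
    for n in (1, 10, 53):
        added = extra_params(n)
        print(
            f"{n:>2} tasks: +{added:,} params "
            f"({added / BACKBONE_PARAMS:.1%} of one backbone) "
            f"vs {full_copies(n):,} for separate backbone copies"
        )
```

Under these assumptions, even 53 task-specific modules together add less than one third of a single backbone, whereas keeping a separately fine-tuned backbone per task would need 53 full copies.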

Research motivation

Continual learning focuses on learning from a data stream: new tasks are learned in a specific order and the acquired knowledge keeps expanding, while previous tasks must not be forgotten. How to avoid catastrophic forgetting is therefore the main question in continual learning research. The researchers considered the following three aspects:

- Reuse rather than relearn: Adversarial Reprogramming [1] "reprograms" an already trained and frozen network to solve a new task by perturbing the input space, without relearning the network parameters. The researchers borrowed the idea of reprogramming and performed a more lightweight but more powerful reprogramming in the parameter space of the original model instead of the input space.

- Channel-wise transformations can connect two different kernels: the authors of GhostNet [2] found that conventional networks produce some similar feature maps after training, so they proposed a new architecture, GhostNet, which reduces memory by applying relatively cheap operations (such as linear transformations) to existing feature maps to generate more feature maps. Inspired by this, CLR also uses linear transformations to generate feature maps that augment the network, so that it can be tailored to each new task at relatively low cost.

- Lightweight parameters can shift the model distribution: BPN [3] shifts the network parameter distribution from one task to another by adding beneficial perturbation biases to the fully connected layers. However, BPN can only handle fully connected layers, with a single scalar bias per neuron, so its ability to change the network is limited. Instead, the researchers designed a more powerful scheme for convolutional neural networks (CNNs), adding reprogramming parameters to the convolution kernels, to achieve better performance on each new task.

Method description

Channel-wise lightweight reprogramming first uses a fixed backbone as the structure shared across tasks. This can be a model pre-trained with supervised learning on a relatively diverse dataset (ImageNet-1k, Pascal VOC), or a self-supervised model trained on a proxy task without semantic labels (DINO, SwAV). Unlike other continual learning methods (for example, SUPSUP uses a randomly initialized fixed structure, while CCLL and EFTs use the model learned on the first task as the backbone), the pre-trained model used by CLR provides a variety of visual features, but these features need CLR layers to reprogram them for other tasks. Specifically, the researchers use a channel-wise linear transformation to reprogram the feature maps generated by the original convolution kernels.

The researchers first freeze the pre-trained backbone, and then add a channel-wise lightweight reprogramming layer (CLR layer) after the convolution layer in each fixed convolution block. The CLR layer applies a channel-wise linear transformation to the feature map produced by the fixed convolution kernels.
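A channel-wise layer of this kind can be sketched in plain Python. This is only an illustration under stated assumptions, not the authors' implementation (which is in the project repository linked above): the class name `CLRLayer`, the helper names, the 3×3 kernel size, the zero padding, and the dictionary keyed by task id are all hypothetical choices made for the example. Each channel gets its own small kernel, channels are never mixed, and each kernel starts as an identity kernel (center 1, all other entries 0) so that the frozen backbone's features pass through unchanged before any training.

```python
from typing import Dict, List

Channel = List[List[float]]  # one H x W feature-map channel
Kernel = List[List[float]]   # one 3 x 3 kernel


def identity_kernel() -> Kernel:
    """3x3 kernel whose center is 1 and all other entries are 0."""
    return [[0.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]


def conv3x3_same(channel: Channel, kernel: Kernel) -> Channel:
    """Apply one 3x3 kernel to one channel, zero-padded so the size is kept."""
    h, w = len(channel), len(channel[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            total = 0.0
            for di in (-1, 0, 1):
                for dj in (-1, 0, 1):
                    y, x = i + di, j + dj
                    if 0 <= y < h and 0 <= x < w:
                        total += kernel[di + 1][dj + 1] * channel[y][x]
            out[i][j] = total
    return out


class CLRLayer:
    """Hypothetical per-task store of channel-wise kernels for one backbone layer."""

    def __init__(self, num_channels: int) -> None:
        self.num_channels = num_channels
        self.task_kernels: Dict[str, List[Kernel]] = {}

    def add_task(self, task_id: str) -> None:
        # Every task starts from identity kernels and owns its own copy.
        self.task_kernels[task_id] = [
            identity_kernel() for _ in range(self.num_channels)
        ]

    def forward(self, feature_map: List[Channel], task_id: str) -> List[Channel]:
        kernels = self.task_kernels[task_id]
        # Channel k is transformed only by kernel k (no mixing across channels).
        return [conv3x3_same(ch, k) for ch, k in zip(feature_map, kernels)]


if __name__ == "__main__":
    features = [[[1.0, 2.0], [3.0, 4.0]], [[5.0, 6.0], [7.0, 8.0]]]  # 2 channels
    layer = CLRLayer(num_channels=2)
    layer.add_task("task_a")
    layer.add_task("task_b")
    assert layer.forward(features, "task_a") == features  # identity at start
    layer.task_kernels["task_b"][0][1][1] = 2.0  # pretend training changed task_b
    print(layer.forward(features, "task_b")[0])  # [[2.0, 4.0], [6.0, 8.0]]
    print(layer.forward(features, "task_a")[0])  # [[1.0, 2.0], [3.0, 4.0]]
```

Because each task owns its kernels and the backbone is never updated, changing the kernels of `task_b` leaves the output for `task_a` untouched. In a deep learning framework the same idea would normally be expressed as a depthwise convolution rather than explicit loops.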

Given an image $X$, each convolution kernel $f_k$ produces one channel $x'_k = f_k \otimes X$ of the feature map $X'$. A 2D convolution kernel is then used to linearly transform each channel $x'_k$ of $X'$: if $\mathrm{CLR}_k$ denotes the linear-transformation kernel associated with the convolution kernel $f_k$, the reprogrammed channel is $\hat{x}'_k = \mathrm{CLR}_k \otimes x'_k$, and together these channels form the reprogrammed feature map. The researchers initialize each CLR kernel as an identity kernel (for a 2D kernel, only the center entry is 1 and all others are 0), so that at the start of training the features produced by the model with CLR layers are identical to those produced by the fixed backbone. To save parameters and prevent overfitting, the researchers do not add a CLR layer after 1×1 convolution kernels; the CLR layer acts only after 3×3 convolution kernels. For ResNet50 with CLR, the added trainable parameters amount to only 0.59% of the fixed ResNet50 backbone.

For continual learning, the model, which consists of trainable CLR parameters and an untrainable backbone, learns each task in turn. At test time, the researchers assume a task predictor that tells the model which task a test image belongs to; the fixed backbone and the corresponding task-specific CLR parameters then make the final prediction. Because CLR has absolute parameter isolation (each task has its own CLR layer parameters, while the shared backbone never changes), CLR is not affected by the number of tasks.

Experimental results

Dataset: The researchers used image classification as the main task. The laboratory collected 53 image classification datasets, with approximately 1.8 million images and 1,584 categories. These 53 datasets cover 5 different classification goals: object recognition, style classification, scene classification, counting, and medical diagnosis.

The researchers selected 13 baselines, which can be roughly divided into the 3 categories of continual learning methods. There are also some baselines that are not continual learning methods, such as SGD and SGD-LL. SGD learns each task by fine-tuning the entire network; SGD-LL is a variant that uses a fixed backbone for all tasks and a learnable shared layer whose length equals the maximum number of categories over all tasks.

Experiment 1: Accuracy on the first task

To evaluate how well each method overcomes catastrophic forgetting, the researchers tracked the accuracy on each task after new tasks were learned. If a method suffers from catastrophic forgetting, its accuracy on a given task drops quickly after new tasks are learned. A good continual learning algorithm maintains its original performance after learning new tasks, which means that old tasks should be minimally affected by new ones. The figure below shows the accuracy on the first task after learning the first through the 53rd task. Overall, CLR maintains the highest accuracy. More importantly, it avoids catastrophic forgetting and keeps the same accuracy as when the task was first trained, no matter how many tasks are learned afterwards.

Experiment 2: Average accuracy after learning all tasks

The figure below shows the average accuracy of all methods after all tasks have been learned. The average accuracy reflects the overall performance of a continual learning method. Because tasks differ in difficulty, the average accuracy across all tasks may rise or fall when a new task is added, depending on whether the added task is easy or hard.

Analysis of parameters and computational cost

For continual learning, achieving a higher average accuracy is important, but a good algorithm should also minimize the additional network parameters and computational cost it requires. The "extra parameters added for a new task" are reported as a percentage of the original backbone's parameter count. The computational cost of SGD is used as the unit, and the costs of the other methods are normalized by the cost of SGD.

Analysis of the impact of different backbone networks

CLR takes a model pre-trained with supervised or self-supervised learning on a relatively diverse dataset and uses it as the fixed, task-independent parameters. To explore the impact of different pre-training methods, the paper selected four task-independent pre-trained models trained with different datasets and tasks. For supervised learning, the researchers used models pre-trained for image classification on ImageNet-1k and Pascal-VOC; for self-supervised learning, they used models pre-trained with two different methods, DINO and SwAV. The following table shows the average accuracy obtained with the four pre-trained models. The final results are high with every backbone (note: Pascal-VOC is a relatively small dataset, so its accuracy is somewhat lower), which indicates that the method is robust to different pre-trained backbones.
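The two measures used in these experiments, accuracy on the first task as more tasks are learned and average accuracy after the last task, can both be read off a log of per-task accuracies. The sketch below is a minimal illustration, not the paper's evaluation code: the function names are hypothetical and the accuracy values are made up purely to contrast a method with perfect parameter isolation against one that forgets.

```python
from typing import List

# acc_log[i][j] = accuracy (%) on task j, measured after training on task i.
AccuracyLog = List[List[float]]


def first_task_curve(acc_log: AccuracyLog) -> List[float]:
    """Accuracy on the first task after each successive task is learned."""
    return [row[0] for row in acc_log]


def average_accuracy(acc_log: AccuracyLog) -> float:
    """Mean accuracy over all tasks once the last task has been learned."""
    final_row = acc_log[-1]
    return sum(final_row) / len(final_row)


def first_task_forgetting(acc_log: AccuracyLog) -> float:
    """Drop in first-task accuracy between first training and the end."""
    curve = first_task_curve(acc_log)
    return curve[0] - curve[-1]


if __name__ == "__main__":
    # Made-up numbers for three tasks, for illustration only.
    isolated = [[90.0], [90.0, 80.0], [90.0, 80.0, 70.0]]
    forgetful = [[90.0], [60.0, 80.0], [30.0, 50.0, 70.0]]
    for name, log in (("isolated", isolated), ("forgetful", forgetful)):
        print(
            name,
            "first-task curve:", first_task_curve(log),
            "average:", average_accuracy(log),
            "forgetting:", first_task_forgetting(log),
        )
```

A flat first-task curve (zero forgetting) is what parameter isolation is expected to give, while the average accuracy still moves up or down with the difficulty of each added task.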

The above is the detailed content of Optimize learning efficiency: transfer old models to new tasks with 0.6% additional parameters. For more information, please follow other related articles on the PHP Chinese website!

Exploring the Capabilities of Google's Gemma 2 ModelsApr 22, 2025 am 11:26 AM

Exploring the Capabilities of Google's Gemma 2 ModelsApr 22, 2025 am 11:26 AMGoogle's Gemma 2: A Powerful, Efficient Language Model Google's Gemma family of language models, celebrated for efficiency and performance, has expanded with the arrival of Gemma 2. This latest release comprises two models: a 27-billion parameter ver

The Next Wave of GenAI: Perspectives with Dr. Kirk Borne - Analytics VidhyaApr 22, 2025 am 11:21 AM

The Next Wave of GenAI: Perspectives with Dr. Kirk Borne - Analytics VidhyaApr 22, 2025 am 11:21 AMThis Leading with Data episode features Dr. Kirk Borne, a leading data scientist, astrophysicist, and TEDx speaker. A renowned expert in big data, AI, and machine learning, Dr. Borne offers invaluable insights into the current state and future traje

AI For Runners And Athletes: We're Making Excellent ProgressApr 22, 2025 am 11:12 AM

AI For Runners And Athletes: We're Making Excellent ProgressApr 22, 2025 am 11:12 AMThere were some very insightful perspectives in this speech—background information about engineering that showed us why artificial intelligence is so good at supporting people’s physical exercise. I will outline a core idea from each contributor’s perspective to demonstrate three design aspects that are an important part of our exploration of the application of artificial intelligence in sports. Edge devices and raw personal data This idea about artificial intelligence actually contains two components—one related to where we place large language models and the other is related to the differences between our human language and the language that our vital signs “express” when measured in real time. Alexander Amini knows a lot about running and tennis, but he still

Jamie Engstrom On Technology, Talent And Transformation At CaterpillarApr 22, 2025 am 11:10 AM

Jamie Engstrom On Technology, Talent And Transformation At CaterpillarApr 22, 2025 am 11:10 AMCaterpillar's Chief Information Officer and Senior Vice President of IT, Jamie Engstrom, leads a global team of over 2,200 IT professionals across 28 countries. With 26 years at Caterpillar, including four and a half years in her current role, Engst

New Google Photos Update Makes Any Photo Pop With Ultra HDR QualityApr 22, 2025 am 11:09 AM

New Google Photos Update Makes Any Photo Pop With Ultra HDR QualityApr 22, 2025 am 11:09 AMGoogle Photos' New Ultra HDR Tool: A Quick Guide Enhance your photos with Google Photos' new Ultra HDR tool, transforming standard images into vibrant, high-dynamic-range masterpieces. Ideal for social media, this tool boosts the impact of any photo,

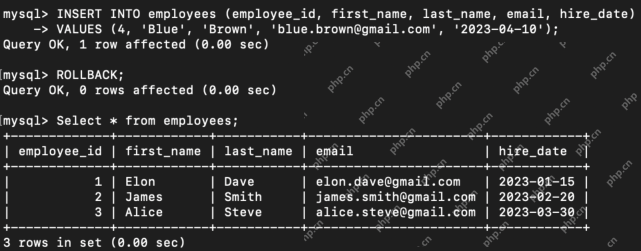

What are the TCL Commands in SQL? - Analytics VidhyaApr 22, 2025 am 11:07 AM

What are the TCL Commands in SQL? - Analytics VidhyaApr 22, 2025 am 11:07 AMIntroduction Transaction Control Language (TCL) commands are essential in SQL for managing changes made by Data Manipulation Language (DML) statements. These commands allow database administrators and users to control transaction processes, thereby

How to Make Custom ChatGPT? - Analytics VidhyaApr 22, 2025 am 11:06 AM

How to Make Custom ChatGPT? - Analytics VidhyaApr 22, 2025 am 11:06 AMHarness the power of ChatGPT to create personalized AI assistants! This tutorial shows you how to build your own custom GPTs in five simple steps, even without coding skills. Key Features of Custom GPTs: Create personalized AI models for specific t

Difference Between Method Overloading and OverridingApr 22, 2025 am 10:55 AM

Difference Between Method Overloading and OverridingApr 22, 2025 am 10:55 AMIntroduction Method overloading and overriding are core object-oriented programming (OOP) concepts crucial for writing flexible and efficient code, particularly in data-intensive fields like data science and AI. While similar in name, their mechanis

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

SublimeText3 English version

Recommended: Win version, supports code prompts!

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),

SublimeText3 Mac version

God-level code editing software (SublimeText3)

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

Atom editor mac version download

The most popular open source editor