The impact of adversarial attacks on model stability


Abstract: With the rapid development of artificial intelligence, deep learning models are widely used in many fields. However, these models are often surprisingly vulnerable to adversarial attacks. An adversarial attack applies small perturbations to a model's inputs so that the model produces incorrect outputs. This article discusses the impact of adversarial attacks on model stability and demonstrates through example code how to combat such attacks.

- Introduction

As deep learning models have achieved great success in fields such as computer vision and natural language processing, people have paid increasing attention to their stability. Adversarial attacks are a security threat to deep learning models. Attackers can deceive the model through small perturbations, causing the model to output incorrect results. Adversarial attacks pose serious threats to the credibility and reliability of models, so it becomes crucial to study how to deal with adversarial attacks.

- Types of adversarial attacks

Adversarial attacks can be divided into two categories: white-box attacks and black-box attacks. In a white-box attack, the attacker has complete knowledge of the model, including its structure and parameters. In a black-box attack, the attacker can only query the model and use its outputs to craft the attack.

- The impact of adversarial attacks
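To make the two threat models concrete before looking at their consequences, here is a minimal sketch on a toy logistic classifier. The weights `W`, bias `B`, the input `x`, and the budget `eps=0.2` are hypothetical values chosen for illustration; they do not come from the article. The white-box attacker reads the weights to compute the loss gradient (the fast gradient sign step), while the black-box attacker is only allowed to call `predict_label` and falls back on random search.

```python
import math
import random

# Hypothetical toy classifier: fixed weights chosen for illustration only.
W = [2.0, -1.0, 0.5]
B = -0.25

def predict_proba(x):
    """Probability of class 1 under a logistic model."""
    z = sum(w * xi for w, xi in zip(W, x)) + B
    return 1.0 / (1.0 + math.exp(-z))

def predict_label(x):
    return 1 if predict_proba(x) >= 0.5 else 0

def sign(v):
    return (v > 0) - (v < 0)

def white_box_fgsm(x, y, eps):
    """White-box: uses the weights. For logistic loss, d(loss)/dx = (p - y) * W."""
    p = predict_proba(x)
    grad = [(p - y) * w for w in W]
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]

def black_box_random_search(x, y, eps, max_queries=200, seed=0):
    """Black-box: uses only predict_label. Tries random +/-eps perturbations
    until the predicted label changes or the query budget runs out."""
    rng = random.Random(seed)
    for queries in range(1, max_queries + 1):
        candidate = [xi + eps * rng.choice((-1, 1)) for xi in x]
        if predict_label(candidate) != y:
            return candidate, queries
    return None, max_queries

x = [0.4, 0.3, 0.2]
y = predict_label(x)

x_wb = white_box_fgsm(x, y, eps=0.2)
x_bb, queries = black_box_random_search(x, y, eps=0.2)

print("clean label:", y)
print("white-box adversarial label:", predict_label(x_wb))
print("black-box result:", x_bb, "after", queries, "queries")
```

Both attacks stay within the same per-feature budget; the difference is that the white-box attacker needs a single gradient computation, whereas the black-box attacker pays for its lack of knowledge with repeated queries.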

The impact of adversarial attacks on model stability is mainly reflected in the following aspects:

a. Invalidation of training data: Adversarial samples can deceive the model, causing it to fail in the real world.

b. Introducing vulnerabilities: Adversarial attacks can cause the model to output incorrect results through small perturbations, which may create security vulnerabilities.

c. Easy deception: Adversarial samples usually look the same as the original samples to the human eye, yet they can easily deceive the model.

d. Loss of generalization: By making small perturbations to samples in the training set, an attacker can leave the model unable to generalize to other samples.

- Defense methods against adversarial attacks
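Before turning to specific defenses, it helps to have a baseline measurement of the damage described above. The sketch below is an illustration under stated assumptions, not the article's experiment: it trains a small logistic regression on two synthetic 2-D blobs (the blob centres, spread, learning rate, and `eps` values are all hypothetical) and reports accuracy as the FGSM perturbation budget grows. It uses only the Python standard library so it runs without TensorFlow.

```python
import math
import random

def sigmoid(z):
    # Numerically safe logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def make_blobs(n_per_class, rng):
    """Two 2-D Gaussian blobs centred at (-1, -1) (label 0) and (+1, +1) (label 1)."""
    data = []
    for label, centre in ((0, -1.0), (1, 1.0)):
        for _ in range(n_per_class):
            data.append(([rng.gauss(centre, 0.7), rng.gauss(centre, 0.7)], label))
    return data

def train(data, epochs=200, lr=0.1):
    """Full-batch gradient descent on the logistic loss."""
    w, b = [0.0, 0.0], 0.0
    n = len(data)
    for _ in range(epochs):
        gw, gb = [0.0, 0.0], 0.0
        for x, y in data:
            err = sigmoid(w[0] * x[0] + w[1] * x[1] + b) - y
            gw[0] += err * x[0]
            gw[1] += err * x[1]
            gb += err
        w = [w[0] - lr * gw[0] / n, w[1] - lr * gw[1] / n]
        b -= lr * gb / n
    return w, b

def fgsm(x, y, w, b, eps):
    """Move each feature by eps in the direction that increases the loss."""
    err = sigmoid(w[0] * x[0] + w[1] * x[1] + b) - y
    return [xi + eps * ((err * wi > 0) - (err * wi < 0)) for xi, wi in zip(x, w)]

def accuracy(data, w, b, eps=0.0):
    correct = 0
    for x, y in data:
        xa = fgsm(x, y, w, b, eps) if eps > 0 else x
        pred = 1 if w[0] * xa[0] + w[1] * xa[1] + b >= 0 else 0
        correct += int(pred == y)
    return correct / len(data)

rng = random.Random(0)
data = make_blobs(100, rng)
w, b = train(data)

eps_values = (0.0, 0.25, 0.5, 1.0)
results = [accuracy(data, w, b, eps) for eps in eps_values]
for eps, acc in zip(eps_values, results):
    print(f"eps={eps:.2f}  accuracy={acc:.2f}")
```

For a linear model, each FGSM step can only shrink a sample's margin, so accuracy cannot rise as `eps` grows. The same kind of table, produced before and after applying a defense, gives a simple way to compare the methods listed next.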

For adversarial attacks, some common defense methods include:

a. Adversarial training: Improve the robustness of the model by adding adversarial samples to the training set.

b. Volatility defense: Detect abnormal behavior in the input. If the perturbation in an input is judged too large, the input is treated as an adversarial sample and discarded.

c. Sample preprocessing: Process the input samples to purify them, removing possible perturbations, before they enter the model.

d. Parameter adjustment: Adjust the parameters of the model to improve its robustness.

- Code Example
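As a first example, adversarial training (item a above) can be sketched end to end on a toy problem. Everything in this block is an assumption made for illustration: the dataset is a hypothetical construction with one large but noisy feature and five small features that an attacker with budget `EPS` can flip, the model is a bias-free logistic regression, and the constants `EPS`, `WEAK`, `N_WEAK`, the epoch count, and the learning rate are arbitrary. Each epoch, the adversarial variant augments the clean batch with FGSM examples crafted against the current weights.

```python
import math

EPS = 0.6      # L-infinity attack budget (illustrative)
WEAK = 0.3     # magnitude of each weak feature; smaller than EPS, so an attacker can flip it
N_WEAK = 5     # number of weak features

def make_data(n=100):
    """Hypothetical toy set: feature 0 is large but wrong for 10% of points;
    features 1..N_WEAK are small but always agree with the label."""
    data = []
    for i in range(n):
        y = i % 2
        s = 1.0 if y == 1 else -1.0
        strong = -s if i % 20 < 2 else s
        data.append(([strong] + [WEAK * s] * N_WEAK, y))
    return data

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def score(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))

def fgsm(w, x, y, eps):
    """FGSM for a bias-free logistic model: d(loss)/dx = (p - y) * w."""
    err = sigmoid(score(w, x)) - y
    return [xi + eps * ((err * wi > 0) - (err * wi < 0)) for xi, wi in zip(x, w)]

def train(data, adversarial, epochs=300, lr=0.1):
    w = [0.0] * (1 + N_WEAK)
    for _ in range(epochs):
        batch = list(data)
        if adversarial:
            # Add FGSM examples crafted against the current weights to the clean batch.
            batch += [(fgsm(w, x, y, EPS), y) for x, y in data]
        grad = [0.0] * len(w)
        for x, y in batch:
            err = sigmoid(score(w, x)) - y
            for j, xj in enumerate(x):
                grad[j] += err * xj
        w = [wj - lr * gj / len(batch) for wj, gj in zip(w, grad)]
    return w

def accuracy(w, data, eps=0.0):
    hits = 0
    for x, y in data:
        if eps > 0:
            x = fgsm(w, x, y, eps)
        hits += int((score(w, x) >= 0) == (y == 1))
    return hits / len(data)

data = make_data()
w_std = train(data, adversarial=False)
w_adv = train(data, adversarial=True)
for name, w in (("standard", w_std), ("adversarial", w_adv)):
    print(f"{name:12s} clean={accuracy(w, data):.2f}  under attack={accuracy(w, data, EPS):.2f}")
```

On this construction the standard model leans on the weak features and loses its accuracy under attack, while the adversarially trained model learns to rely on the large feature and keeps most of its accuracy. Real datasets are rarely this clean, so the size of the benefit should be measured rather than assumed.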

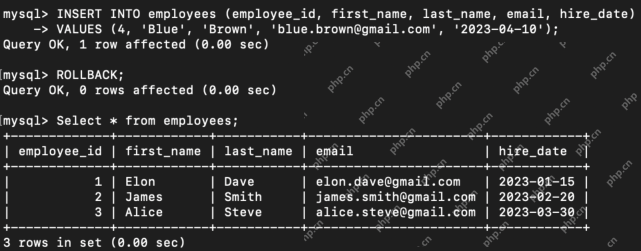

To better understand the impact of adversarial attacks, consider the following code example, which attacks a pre-trained model and measures the effect:

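    import numpy as np
    import tensorflow as tf
    from cleverhans.attacks import FastGradientMethod
    from cleverhans.utils_keras import KerasModelWrapper

    # NOTE: written against the TensorFlow 1.x / CleverHans 3.x APIs.
    # x_test and y_test are assumed to be defined elsewhere: preprocessed images
    # of shape (N, 224, 224, 3) and one-hot ImageNet labels of shape (N, 1000).

    # Create the session first and register it with Keras so the model weights live in it
    sess = tf.Session()
    tf.keras.backend.set_session(sess)

    # Load the pre-trained model
    model = tf.keras.applications.VGG16(weights='imagenet')
    model.compile(optimizer='adam', loss='categorical_crossentropy')

    # Wrap the model so that the CleverHans library can attack it
    wrap = KerasModelWrapper(model)

    # Build the adversarial attack
    fgsm = FastGradientMethod(wrap, sess=sess)

    # Attack the test set; generate_np takes and returns NumPy arrays
    # (eps is the perturbation budget in input units; the value here is illustrative)
    adv_x = fgsm.generate_np(x_test, eps=2.0)

    # Evaluate the attack: accuracy on adversarial examples (lower means a stronger attack)
    adv_pred = model.predict(adv_x)
    accuracy = np.mean(np.argmax(adv_pred, axis=1) == np.argmax(y_test, axis=1))
    print('Accuracy on adversarial examples:', accuracy)

The above code example uses TensorFlow and the CleverHans library to mount an adversarial attack with the Fast Gradient Sign Method (FGSM). It first creates a session and loads the pre-trained model, then wraps the model with KerasModelWrapper so that the CleverHans library can attack it. It then builds the FGSM attack object, attacks the test set, and evaluates the attack by measuring the model's accuracy on the adversarial examples; the lower this accuracy, the more effective the attack. The code targets the TensorFlow 1.x and CleverHans 3.x APIs and assumes that x_test and y_test have already been loaded and preprocessed.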
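The attack above can be paired with a small defense sketch. The article does not specify a particular preprocessing step or detector, so the choices here are assumptions: bit-depth reduction stands in for sample preprocessing, and the detection rule (flag an input when squeezing it changes the model's output by more than a threshold, in the spirit of feature squeezing) stands in for the detection-based defense. `toy_predict` is a hypothetical, deliberately brittle two-class model used only so the example runs without TensorFlow; in practice any function that returns class probabilities could be passed instead.

```python
import math

def reduce_bit_depth(x, bits=3):
    """Quantise values in [0, 1] to 2**bits levels.
    Perturbations smaller than half a quantisation step are erased."""
    levels = 2 ** bits - 1
    return [round(v * levels) / levels for v in x]

def looks_adversarial(predict, x, bits=3, threshold=0.5):
    """Flag x when squeezing it moves the model's output by more than
    `threshold` in L1 distance. The threshold value is illustrative."""
    p_raw = predict(x)
    p_squeezed = predict(reduce_bit_depth(x, bits))
    return sum(abs(a - b) for a, b in zip(p_raw, p_squeezed)) > threshold

# Hypothetical brittle model: large weights make it sensitive to tiny input changes.
TOY_W = [30.0, -30.0, 30.0, -30.0]
TOY_B = -2.5

def toy_predict(x):
    """Returns [P(class 0), P(class 1)] for a logistic model."""
    z = sum(w * xi for w, xi in zip(TOY_W, x)) + TOY_B
    p1 = 1.0 / (1.0 + math.exp(-z))
    return [1.0 - p1, p1]

# A clean input whose values sit on the 3-bit grid, and an FGSM-style copy
# shifted by 0.03 per feature against the sign of each weight.
clean = [1 / 7, 3 / 7, 5 / 7, 2 / 7]
adv = [v - 0.03 * (1 if w > 0 else -1) for v, w in zip(clean, TOY_W)]

print("clean prediction:   ", toy_predict(clean))
print("attacked prediction:", toy_predict(adv))
print("after preprocessing:", toy_predict(reduce_bit_depth(adv)))
print("clean flagged:", looks_adversarial(toy_predict, clean))
print("attacked flagged:", looks_adversarial(toy_predict, adv))
```

Here the perturbation is smaller than half a quantisation step, so preprocessing restores the clean input exactly. A stronger attack, or an attacker who knows about the squeezing step, may get past both checks, which is why such defenses are usually combined with adversarial training.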

- Conclusion

Adversarial attacks pose a huge threat to the stability of deep learning models, but methods such as adversarial training, volatility defense, sample preprocessing, and parameter adjustment can improve a model's robustness. This article provides a code example to help readers better understand the impact of adversarial attacks and how to combat them. Readers can also extend the code and try other adversarial attack methods to test and strengthen the security of their models.
