Home >Technology peripherals >AI >Baidu business multi-modal understanding and AIGC innovation practice

Baidu business multi-modal understanding and AIGC innovation practice

- 王林forward

- 2023-09-18 17:33:051232browse

1. Rich media multi-modal understanding

First, let’s introduce our perception of multi-modal content.

1. Multi-modal understanding

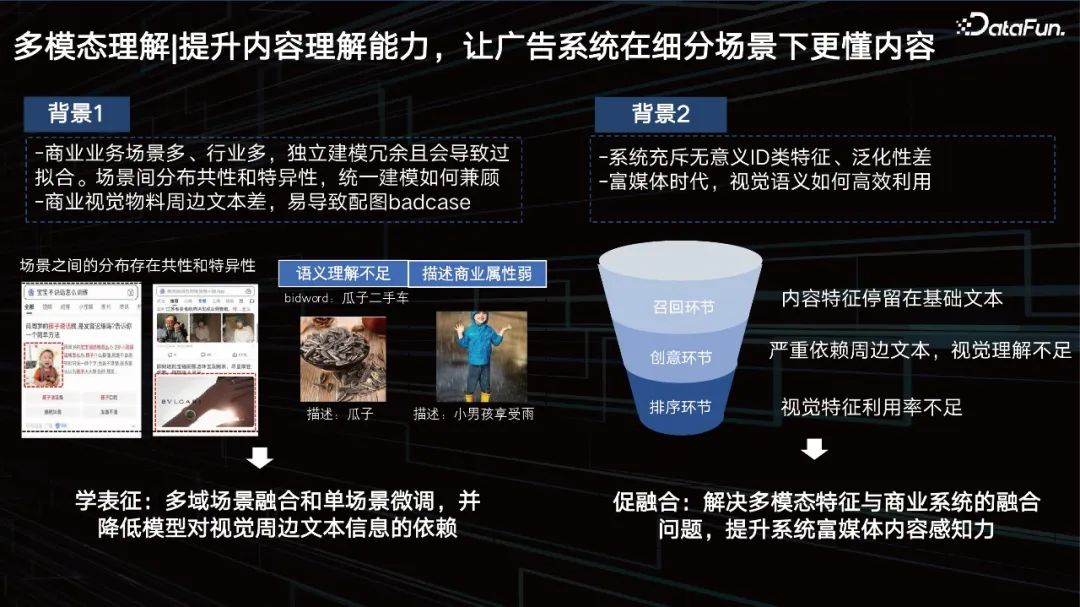

Improve content understanding capabilities, allowing the advertising system to better understand content in segmented scenarios.

When improving content understanding ability, you will encounter many practical problems:

- There are many commercial business scenarios and industries. Independent modeling is redundant and will lead to over-fitting. How to balance the commonality and specificity of distribution between scenarios. How to take into account unified modeling.

- #Poor text around commercial visual materials can easily lead to bad illustrations.

- #The system is full of meaningless ID features and has poor generalization.

- In the rich media era, how to effectively utilize visual semantics and how to integrate these content features, video features and other features are what we need to solve to improve The perceived intensity of rich media content within the system.

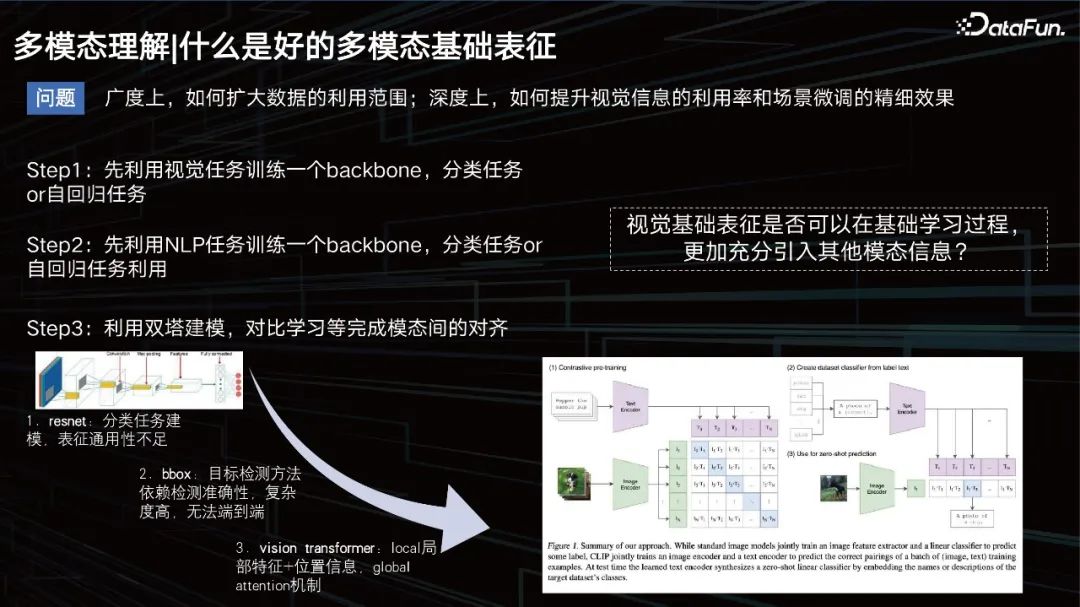

What is a good multimodal base representation.

#What is a good multimodal representation?

It is necessary to expand the scope of data application in terms of breadth, improve the visual effects in terms of depth, and ensure the fine-tuning of data in the scene.

Before, the conventional idea was to train a model to learn the image modality, an autoregressive task, and then do the text task, and then apply some twin tower models to Close the modal relationship between the two. At that time, text modeling was relatively simple, and everyone was more studying how to model vision. It started with CNN, and later included some methods based on target detection to improve visual representation, such as the bbox method. However, this method has limited detection capabilities and is too heavy, which is not conducive to large-scale data training.

Around 2020 and 2021, the VIT method has become mainstream. One of the more famous models that I have to mention here is CLIP, a model released by OpenAI in 2020, which is based on the twin-tower architecture for text and visual representation. Then use cosine to close the distance between the two. This model is very good at retrieval, but it is slightly less capable in some tasks that require logical reasoning such as VQA tasks.

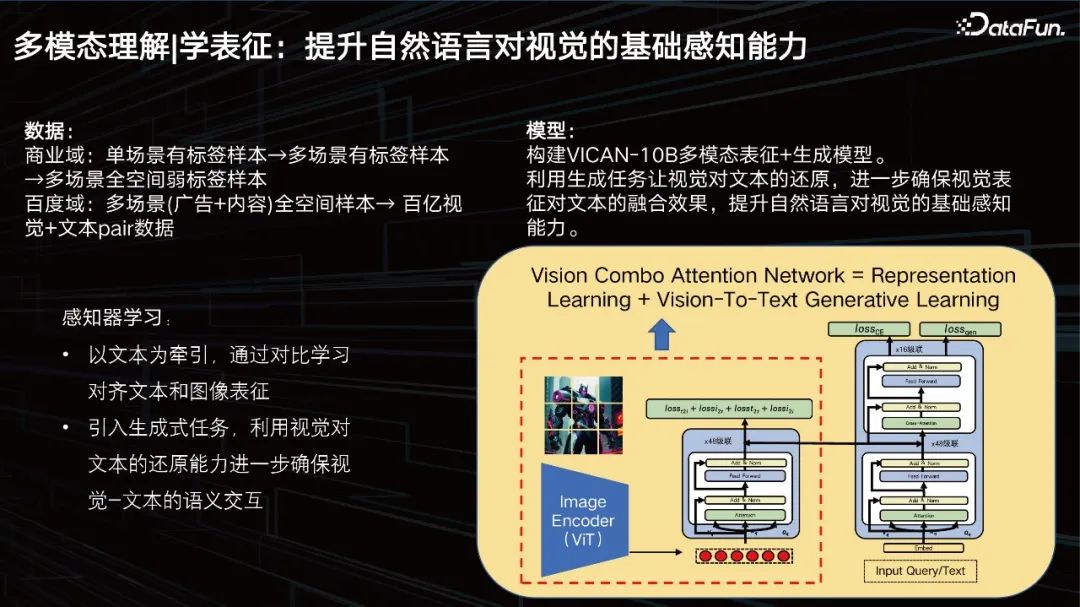

Learning representation: Improve the basic perception ability of natural language to vision.

# Our goal is to improve the basic visual perception of natural language. In terms of data, our business domain has billions of data, but it is still not enough. We need to further expand, introduce past data from the business domain, and clean and sort it out. A tens of billions-level training set was constructed.

We built the VICAN-12B multi-modal representation generation model, using the generation task to allow visual restoration of text, further ensuring the fusion effect of visual representation on text, and improving the visual effects of natural language. basic perception ability. The picture above shows the overall structure of the model. You can see that it is a composite structure of two towers and a single tower. Because the first thing to be solved is a large-scale image retrieval task. The part in the box on the left is what we call the visual perceptron, which is a ViT structure with a scale of 2 billion parameters. The right side can be viewed in two layers. The lower part is a stack of text transformers for retrieval, and the upper part is for generation. The model is divided into three tasks, one is a generation task, one is a classification task, and the other is a picture comparison task. The model is trained based on these three different goals, so it has achieved relatively good results, but we will further optimize it.

A set of efficient, unified, and transferable multi-scenario global representation scheme.

Combined with business scenario data, the LLM model is introduced to improve model understanding capabilities. The CV model is the perceptron and the LLM model is the understander. Our approach is to transfer the visual features accordingly, because as mentioned just now, the representation is multi-modal and the large model is based on text. We only need to adapt it to the large model of our Wenxin LLM, so we need to use Combo attention to perform corresponding feature fusion. We need to retain the logical reasoning capabilities of the large model, so we try not to leave the large model alone and only add business scenario feedback data to promote the integration of visual features into the large model. We can use few shots to support the task. The main tasks include:

- Description of the picture. In fact, it is not just a description, but a Prompt reverse engineering. High-quality graphic data can be used as our text later. A better data source for graphs.

- Image and text correlation control, because business needs configuration and understanding of image information, the search terms and image semantics of our advertising images are actually It needs to be controlled. Of course, this is a very general way to make relevant judgments on pictures and prompts.

- Picture risk & experience control, we have been able to describe the content of the picture relatively well, then we only need to simply use the small sample data of risk control Migration will make it clear whether it involves any risk issues.

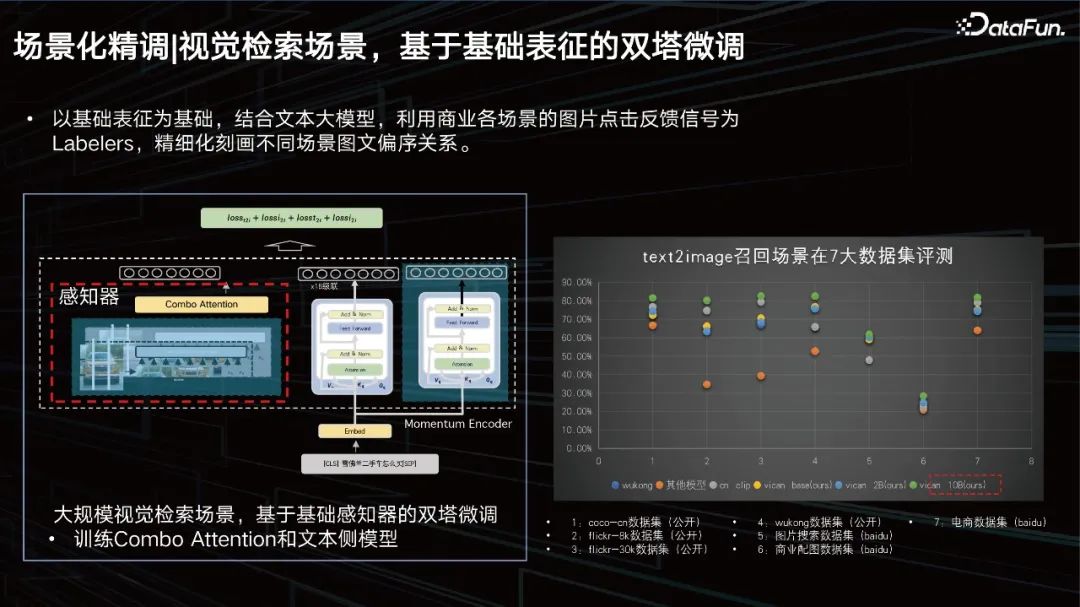

# Now, let’s focus on the scene-based fine-tuning.

2. Scenario-based fine-tuning

Visual retrieval scenario, twin-tower fine-tuning based on basic representation.

Based on the basic representation, combined with the large text model, the picture click feedback signals of various business scenes are used as Labelers to refine the partial order of pictures and texts in different scenes. relation. We have conducted evaluations on 7 major data sets, and all of them can achieve SOTA results.

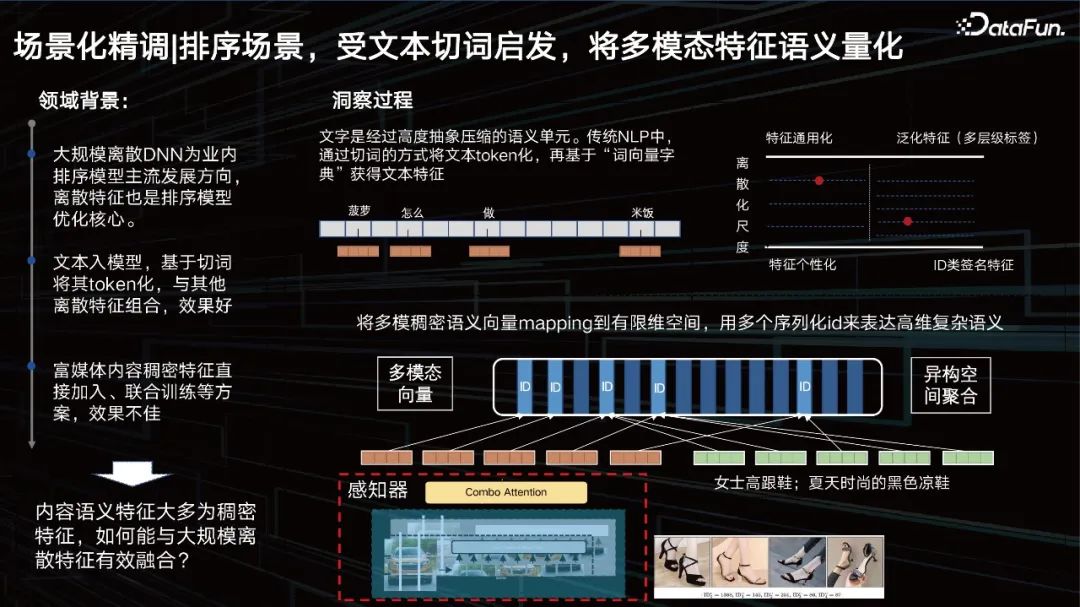

Sorting scenario, inspired by text segmentation, quantifies the semantics of multi-modal features.

#In addition to representation, another issue is how to improve the visual effect in the sorting scene. Let’s first look at the field background. Large-scale discrete DNN is the mainstream development direction of ranking models in the industry, and discrete features are also the core of ranking model optimization. The text is entered into the model, tokenized based on word segmentation, and combined with other discrete features to achieve good results. As for vision, we hope to tokenize it as well.

ID type feature is actually a very personalized feature, but as the generalized feature becomes more versatile, its characterization accuracy may become worse. We need to dynamically adjust this balance point through data and tasks. That is to say, we hope to find a scale that is most relevant to the data, to "segment" the features into an ID accordingly, and to segment multi-modal features like text. Therefore, we proposed a multi-scale, multi-level content quantification learning method to solve this problem.

Sorting scenarios, fusion of multi-modal features and models MmDict.

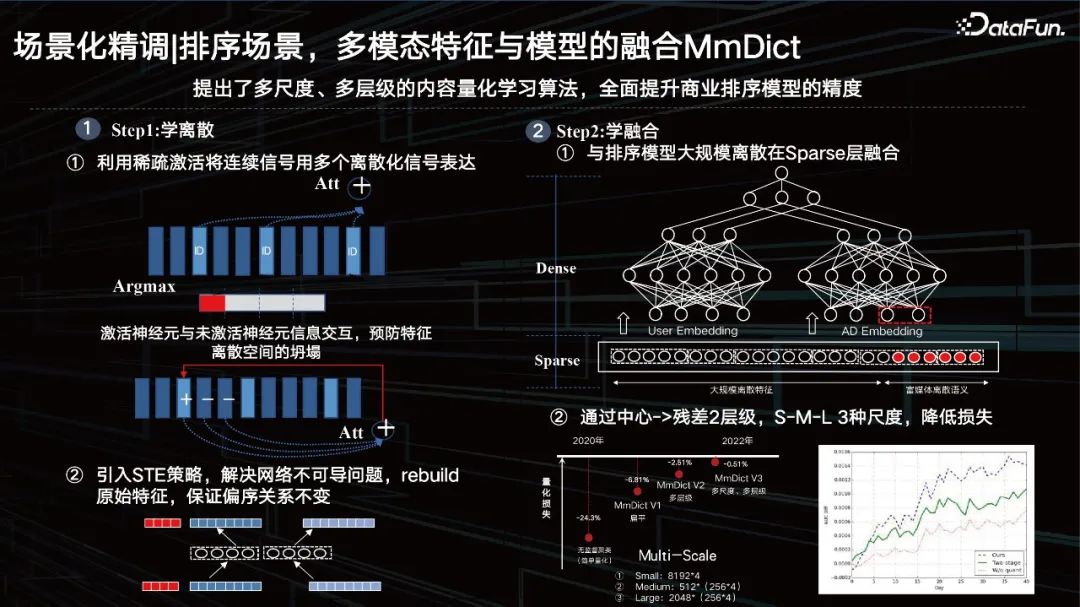

It is mainly divided into two steps. The first step is to learn discreteness, and the second step is to learn integration.

Step1: Learn Discrete

① Use sparse activation to express continuous signals with multiple discretized signals; that is, use sparse activation to segment dense features , and then activate the ID in the corresponding multi-modal codebook, but there is actually only argmax operation, which will lead to non-differentiable problems. At the same time, in order to prevent the collapse of the feature space, information interaction between activated neurons and inactive neurons is added. .

② Introduce the STE strategy to solve the problem of network non-differentiability, rebuild the original features, and ensure that the partial order relationship remains unchanged.

Through the encoder-decoder method, the dense features are serially quantized, and then the quantized features are restored in the correct way. It is necessary to ensure that its partial order relationship remains unchanged before and after restoration, and it can almost control the quantitative loss of features on specific tasks to less than 1%. Such an ID can not only personalize the current data distribution, but also have generalization properties.

Step2: Learning Fusion

① Fusion with the sorting model at the Sparse layer on a large scale.

Then the hidden layer reuse just mentioned is placed directly on top, but the effect is actually average. If you ID it, quantize it, and fuse it with the sparse feature layer and other types of features, it will have a better effect.

② Reduce the loss through center -> residual 2 levels and S-M-L 3 scales.

Of course we also use some residuals and multi-scale methods. Starting in 2020, we have gradually lowered the quantification loss, reaching below a point last year, so that after the large model extracts features, we can use this learnable quantification method to characterize the visual content, with semantic association ID The characteristics are actually very suitable for our current business systems, including such an exploratory research method on the ID of the recommendation system.

2. Qingduo

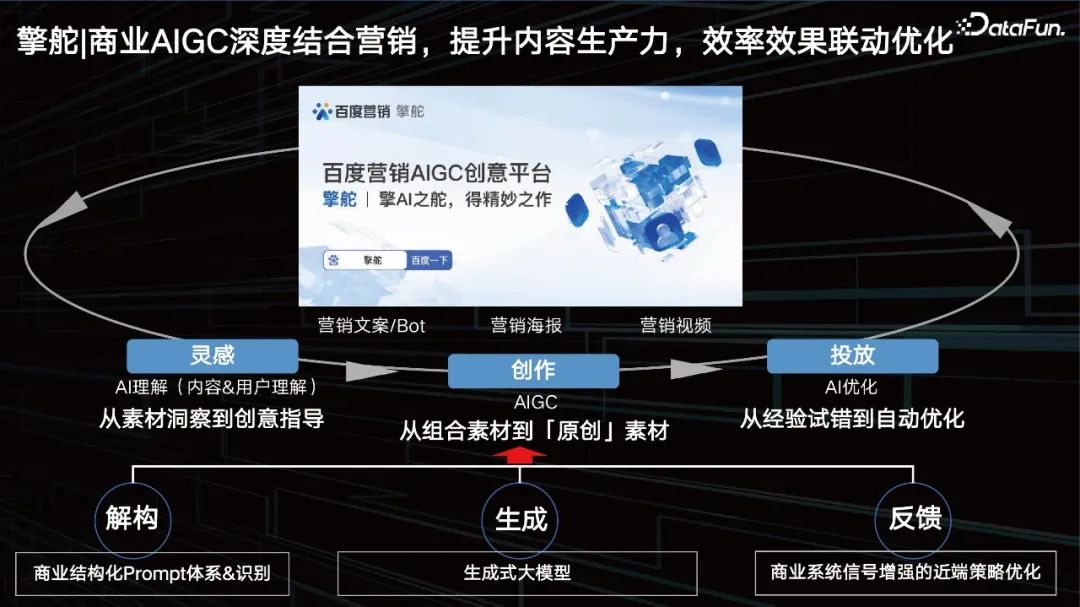

1. Commercial AIGC deeply integrates marketing to improve content productivity and optimize efficiency and effect linkage

##Baidu Marketing AIGC creative platform forms a perfect closed loop from inspiration to creation to delivery. From deconstruction, generation, and feedback, we are promoting and optimizing our AIGC.

- Inspiration: AI understanding (content & user understanding). Can AI help us find what kind of prompt is good? From material insight to creative direction.

- Creation: AIGC, such as text generation, image generation, digital people, video generation, etc.

- Delivery: AI optimization. From empirical trial and error to automatic optimization.

A good business Prompt has The following elements:

- Knowledge map, for example, selling a car, what business elements does the car need to contain? Only the brand is not enough, advertisers would like to have more A complete knowledge system;

- style, such as the current propaganda body of "Literary Style", actually needs to be abstracted into some labels to help us judge Mainly what kind of marketing title or some description of marketing.

- Selling point, selling point is actually a characteristic of product attributes, which is the most powerful reason for consumption.

- User portraits are divided into different types based on differences in the target’s behavioral views, quickly organized together, and then the newly derived types are refined to form A type of user persona.

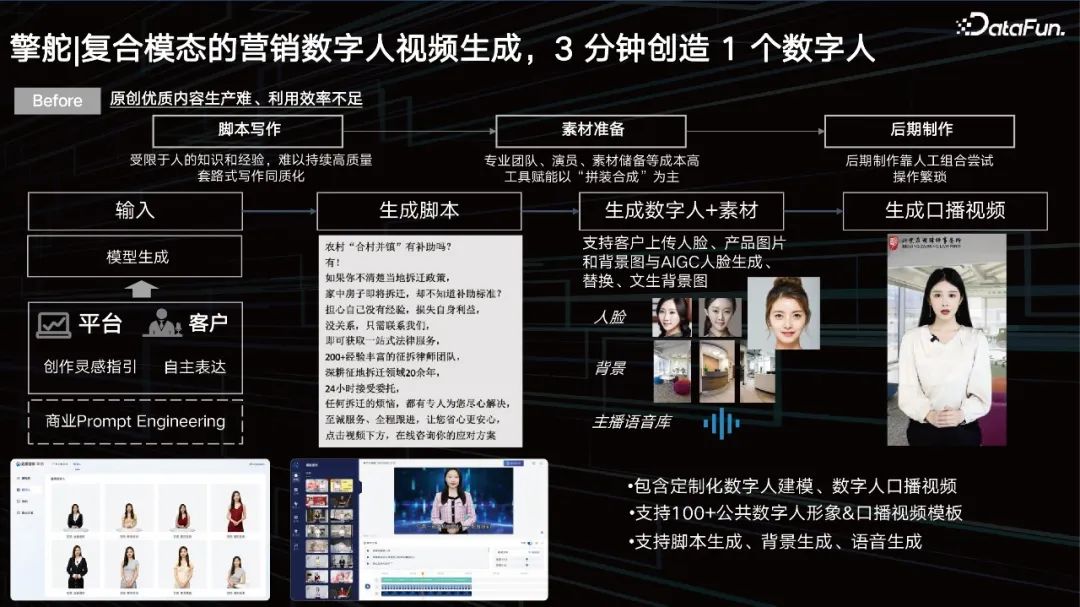

Video generation is now relatively mature. But it actually still has some problems:

- Script writing: limited by people’s knowledge and experience, it is difficult to sustain high-quality writing, and homogeneity is serious.

- Material preparation: Professional teams, actors, material reserves and other high-cost tools are empowered, focusing on "assembly and synthesis".

- Post-production: Post-production relies on manual trial and error, and the operation is cumbersome.

In the early stage, input through prompt. What kind of video you want to generate, what kind of person you want to choose, and what kind of person you want to say, all input through prompt, and then We can accurately control our large model to generate corresponding scripts based on its demands.

Next we can recall the corresponding digital people through our digital human library, but we may use AI technology to further enhance the diversity of digital people, such as face replacement, background replacement, Accent and voice replacement are used to adapt to our prompts. Finally, script, digital lip shape replacement, background replacement, face replacement, and video suppression will give you a spoken video. Customers can use digital humans to introduce some marketing selling points corresponding to the product. In this way, you can be a digital person in 3 minutes, which greatly improves advertisers' ability to be a digital person.

4. Marketing poster image generation, combining multi-modal representation of marketing image generation

The large model can also help businesses realize the generation of marketing posters and products Background replacement. We already have a tens of billions of multi-modal representations. The middle layer is a diffusion we learned. We learn unet based on good dynamic representations. After training with big data, customers also want something particularly personalized, so we also need to add some fine-tuning methods.

We provide a solution to help customers fine-tune, a solution for dynamically loading small parameters for large models. This is also a common solution in the industry.

First of all, we provide customers with the ability to generate pictures. Customers can change the background behind the picture through editing or prompting.

The above is the detailed content of Baidu business multi-modal understanding and AIGC innovation practice. For more information, please follow other related articles on the PHP Chinese website!

Related articles

See more- How has the controversial AIGC become a top player?

- Google scientists speak personally: How to implement embodied reasoning? Let the large model 'speak' the language of the robot

- Discovery of three major Python models and top ten commonly used algorithm examples

- Kingsoft Office WPS AI will embed large models into tables, text, presentations, and PDFs

- 0 code fine-tuning of large models is popular, only 5 steps are needed, and the cost is as low as 150 yuan