Google scientist speaks in person: How can embodied reasoning be achieved? Let the large model 'speak' the robot's language

As large language models develop, can their capabilities be used to guide robots in understanding complex instructions and completing more advanced tasks? And what challenges arise along the way? Recently, the Zhiyuan Community invited Dr. Xia Fei, a research scientist at Google, to give a talk titled "Embodied Reasoning Based on Language and Vision," in which he described his team's cutting-edge work in this emerging field.

About the author: Xia Fei is a research scientist on the robotics team at Google Brain. His main research direction is applying robots in unstructured, complex environments, and his representative work includes GibsonEnv, iGibson, and SayCan. His research has been covered by WIRED, the Washington Post, the New York Times, and other media. Dr. Xia Fei graduated from Stanford University, where he studied under Silvio Savarese and Leonidas Guibas. He has published many papers in conferences and journals such as CVPR, CoRL, IROS, ICRA, NeurIPS, RA-L, and Nature Communications. His recent research focuses on using foundation models in the decision-making process of intelligent agents, and his team recently proposed the PaLM-SayCan model.

01 Background

Machine learning for robotics has made great progress in recent years, but significant problems remain. Machine learning needs large amounts of training data, yet data collected on robots is very expensive, and the robots themselves wear out with use.

When humans are children, they interact with the physical world through play and learn many physical laws along the way. Inspired by this, can a robot likewise interact with its environment to acquire this kind of physical knowledge and use it to complete various tasks? In practice, applying machine learning to robots relies heavily on simulation environments.

To this end, Dr. Xia Fei and his colleagues proposed Gibson Env (Environment) and iGibson. The former focuses on reconstructing the visual environment, while the latter focuses on physics simulation. By scanning and reconstructing the real world in 3D and rendering visual signals with neural networks, they created simulation environments in which a variety of robots can run physical simulations and learn control from sensors to actuators. In iGibson, robots can learn richer interactions with the environment, such as how to use a dishwasher.

Dr. Xia Fei sees this work as part of the shift from Internet AI to embodied AI. In the past, AI was trained mainly on datasets such as ImageNet and MS COCO, which are Internet tasks. Embodied AI requires perception and action to form a closed loop: the AI must decide its next action based on what it perceives. Xia Fei's doctoral thesis, "large scale simulation for embodied perception and robot learning," covers large-scale robot simulation for learning, perception, and reasoning.

In recent years, foundation models have developed rapidly in artificial intelligence. Some researchers believe that, instead of relying on simulation environments, information can be extracted from foundation models to help robots make decisions. Dr. Xia Fei calls this new direction "Foundation Model for Decision Making," and he and his team proposed work such as PaLM-SayCan.

02 PaLM-SayCan: Let the language model guide the robot

1. Why do robots struggle with complex, long-horizon tasks?

The PaLM-SayCan work has 45 authors. It is a collaboration between the Google Robotics team and Everyday Robots, aimed at exploring how machine learning can change the field of robotics, and how robots can in turn provide data that improves machine learning. The research focuses on two issues: unstructured, complex environments, and making robots more useful in daily life.

Although people already have personal assistants like Siri and Alexa, robotics has nothing comparable. Dr. Xia gave two examples: when a drink is spilled, we would like to explain the situation to a robot and ask it for help; or, when we are tired after exercising, we would like to ask it to bring drinks and snacks. The research aims to make robots understand and carry out tasks like these.

The current difficulty is that robots still struggle with long-horizon tasks and cannot handle tasks that require complex planning, common sense, and reasoning. There are two reasons. The first is that robotics lacks a good user interface. When traditional robots perform pick-and-place tasks, they are usually instructed through goal conditioning or one-hot conditioning. Goal conditioning tells the robot what the goal is and has it transform the initial conditions into the goal conditions, which requires first showing the robot what the scene should look like once the task is complete.

One-hot conditioning uses one-hot encoding: every task the robot can perform (say, 100 tasks) is numbered from 0 to 99, and each time a task must be executed, the robot is given the corresponding number and knows which task to complete. The problem is that the user has to remember the code for each task, and one-hot encoding captures no dependency information between tasks (for example, the sequence of task codes needed to reach a goal).
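To make the limitation concrete, here is a minimal Python sketch of one-hot task conditioning. The task names and the `one_hot` and `hamming` helpers are hypothetical illustrations, not code from the SayCan project. It shows that the user must know each task's index, and that every task code is exactly as far from every other code, so the encoding cannot express that one task depends on another.

```python
# Hypothetical task list; a real robot might have around 100 tasks numbered 0..99.
TASKS = ["pick up the apple", "pick up the sponge", "place object on table", "open drawer"]


def one_hot(task_id: int, num_tasks: int = len(TASKS)) -> list[int]:
    """Return a one-hot vector that selects task `task_id` out of `num_tasks`."""
    if not 0 <= task_id < num_tasks:
        raise ValueError(f"task_id must be in [0, {num_tasks})")
    vec = [0] * num_tasks
    vec[task_id] = 1
    return vec


def hamming(a: list[int], b: list[int]) -> int:
    """Count the positions where two codes differ."""
    return sum(x != y for x, y in zip(a, b))


# The user has to remember that "open drawer" is task 3.
print(one_hot(3))  # [0, 0, 0, 1]

# Any two distinct tasks are the same distance apart, so the codes carry no
# information about which tasks are related or must happen in sequence.
n = len(TASKS)
distances = {hamming(one_hot(i), one_hot(j)) for i in range(n) for j in range(n) if i != j}
print(distances)  # {2}
```

A natural-language instruction, by contrast, can name the task directly and describe the order of its steps.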

As a result, current robots can only complete short-horizon tasks, usually grasping and placing, and the robots themselves are static rather than mobile. In addition, the environments are limited to settings such as laboratories, often with no humans present.

2. Using language models for robots: how can they "speak" the robot's language?

To solve these problems, the team turned to foundation models. Language can replace goal conditioning, describing tasks clearly and unambiguously. Language also encodes dependencies between task steps, such as the first and second steps in a recipe, which helps robot learning. In addition, language can specify long-horizon tasks, addressing the limitations of imitation learning methods.

Using large models on robots poses several challenges. The most important is making the model's output fit the robot: the large model is trained on human natural language, so the tasks it outputs may not be feasible for a robot, and because it was never trained on robot data, it does not know the scope of the robot's capabilities. The second is the grounding problem: the large model has never experienced the physical world firsthand and lacks embodied information. The third is the safety and interpretability of a robot guided by a large model: biases in language models may be amplified when they are connected to physical systems, with real-world consequences.

One example concerns trustworthiness. When a user chatting with Google's LaMDA model asked for its "favorite island," the model answered Crete, Greece, and could even give some reasons. But this answer is not trustworthy, because what the AI should say is "I don't know which island I like best, because I have never been to any island." The problem is that the language model has never interacted with the real world; it simply outputs the most likely next sentence based on statistical patterns.

When language models are used on robots, different models give different answers, and some of them are no use for getting the robot to perform a task. For example, if a user asks the robot to "clean up a spilled drink," GPT-3 might say "You can use a vacuum cleaner." This is not entirely correct, because vacuum cleaners cannot clean up liquids.

LaMDA might reply "Do you want me to help you find a cleaner?" This answer sounds reasonable but is not actually useful, because LaMDA is fine-tuned on dialogue data and its objective is to prolong the conversation as much as possible, not to help complete the task. FLAN might reply "Sorry, I didn't mean it," which shows it does not understand the user's intent: is this a conversation, or a problem to be solved? Using large language models on robots therefore raises a whole series of problems.

PaLM-SayCan aims to solve these challenges. First, it gets the large model to speak the robot's language through few-shot prompting. For example, the prompt contains tasks such as "get the coffee to the cupboard" and "give me an orange," each followed by its numbered steps (steps 1-5 and 1-3, respectively). The user then gives the model a new instruction: "Put an apple on the table." Guided by these example step sequences, the model finds and combines appropriate steps on its own and generates a plan that completes the task step by step.
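A few-shot prompt of this kind is just structured text. The sketch below is a minimal Python illustration; the step wording, the "Human:"/"Robot:" layout, and the `build_prompt` helper are assumptions for illustration, not the actual prompt used in PaLM-SayCan. The resulting string would be sent to the language model, which continues the plan after the final "Robot: 1." line.

```python
# Hypothetical few-shot examples: each task is paired with its numbered steps.
# The step wording is illustrative, not the prompt used in the paper.
EXAMPLES = [
    ("get the coffee to the cupboard",
     ["find a coffee", "pick up the coffee", "go to the cupboard",
      "open the cupboard", "put the coffee in the cupboard"]),
    ("give me an orange",
     ["find an orange", "pick up the orange", "bring it to you"]),
]


def build_prompt(examples: list[tuple[str, list[str]]], instruction: str) -> str:
    """Concatenate the worked examples, then the new instruction with an open plan."""
    lines = []
    for task, steps in examples:
        lines.append(f"Human: {task}")
        numbered = ", ".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
        lines.append(f"Robot: {numbered}")
        lines.append("")  # blank line between examples
    lines.append(f"Human: {instruction}")
    lines.append("Robot: 1.")  # the model continues the plan from here
    return "\n".join(lines)


prompt = build_prompt(EXAMPLES, "put an apple on the table")
print(prompt)
```

Keeping the examples in a data structure makes it easy to swap in other demonstrations without touching the prompt format.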

Note that there are two main ways to interact with a large model. One is the generative interface, which generates the next token given the input; the other is the scoring interface, which computes the likelihood of a given sequence of tokens. PaLM-SayCan uses the scoring interface, which makes the language model more stable and more likely to produce the desired output. In the apple-placing task, the model scores each candidate step and selects the most suitable one.
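With a scoring interface, the model does not write free text; it only rates a fixed set of candidate steps. Below is a minimal Python sketch of that idea, assuming a hypothetical `toy_token_prob` function in place of a real language model (a real system would ask the model for the log-likelihood of each candidate's tokens given the prompt). Each candidate's score is the sum of its token log-probabilities, and the highest-scoring candidate is chosen.

```python
import math

# Hypothetical stand-in for a language model. It ignores the context entirely and
# returns a made-up probability for each token; a real model would condition on
# the prompt and on the preceding tokens of the candidate.
TOY_PROBS = {
    "find": 0.5, "pick": 0.3, "go": 0.15,
    "an": 0.6, "up": 0.7, "to": 0.8, "the": 0.5,
    "apple": 0.6, "table": 0.4, "sponge": 0.1,
}


def toy_token_prob(context: list[str], token: str) -> float:
    return TOY_PROBS.get(token, 0.01)


def score(prompt: str, candidate: str) -> float:
    """Log-likelihood of `candidate` as a continuation of `prompt`, summed over tokens."""
    context = prompt.split()
    total = 0.0
    for token in candidate.split():  # whitespace split stands in for a real tokenizer
        total += math.log(toy_token_prob(context, token))
        context.append(token)
    return total


candidates = ["find an apple", "pick up the sponge", "go to the table"]
prompt = "Human: put an apple on the table\nRobot: 1."
best = max(candidates, key=lambda c: score(prompt, c))
print(best)  # find an apple
```

Because the output can only ever be one of the listed candidates, this fits the stability benefit described above. Note that summed log-probabilities favor shorter candidates; this sketch does not normalize for length.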

3. Bridging the gap between the language model and the real world: Let the robot explore the affordances of the environment

Another problem remains: when the language model generates task steps, it does not know what the robot can currently do. If there is no apple in front of the robot, it cannot complete the task of placing the apple. The language model therefore needs to know which tasks the robot can perform in the current environment and state. This calls for a new concept, robotic affordances, which is the core of this work.

Affordance is a concept proposed by the American psychologist James J. Gibson around 1977. It is defined as what an agent can do in an environment given its current state. Affordances can be obtained through supervised learning, but that requires a large amount of data and labeling. Instead, the team used reinforcement learning and approximated affordances with the policy's value function. For example, the robot is trained to grasp various objects in the environment. After training, the robot explores the room, and when it sees an object in front of it, the value function for picking up that object becomes very high, so the value function can stand in for an affordance prediction.

Combining affordances with the language model yields the PaLM-SayCan algorithm. As shown in the figure above, the left side is the language model, which scores the tasks the robot can complete according to the user's instruction, giving the probability that completing each subtask helps complete the overall task. The right side is the value function, which represents the probability of successfully completing each task in the current state. The product of the two represents the probability that the robot successfully completes a subtask that contributes to the overall task. In the apple example, there is no apple in front of the robot in the current state, so the first step is to find an apple: the affordance score for finding the apple is relatively high, while the score for grasping the apple is low. After the apple is found, the affordance score for grasping it increases, and the robot grasps it. This process repeats until the overall task is complete.

In addition to PaLM-SayCan, Dr. Xia and his colleagues have completed other related work.
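The PaLM-SayCan selection rule described above, choosing the skill that maximizes (language-model score) × (value-function affordance score) and repeating until done, can be sketched in Python. Everything here is a toy: the skill list, the hand-written `lm_score` and `affordance` functions, and the `execute` simulator are hypothetical stand-ins for the real language model, learned value functions, and robot. The sketch shows why the product matters: the language model alone would try to pick up the apple first, but that skill's affordance stays low until the apple has been found.

```python
# Hypothetical skill library for the "put an apple on the table" example.
SKILLS = ["find an apple", "pick up the apple", "go to the table",
          "put down the apple", "done"]

# Toy language-model relevance scores for the instruction (made up for illustration).
LM_BASE = {"find an apple": 0.3, "pick up the apple": 0.4, "go to the table": 0.2,
           "put down the apple": 0.25, "done": 0.05}


def lm_score(skill: str, history: list[str]) -> float:
    """Stand-in for the language model: skills already executed become unlikely."""
    return 0.01 if skill in history else LM_BASE[skill]


def affordance(skill: str, state: dict[str, bool]) -> float:
    """Stand-in for the value function: estimated chance the skill succeeds now."""
    if skill == "find an apple":
        return 0.1 if state["apple_visible"] else 0.9
    if skill == "pick up the apple":
        return 0.9 if state["apple_visible"] and not state["holding"] else 0.05
    if skill == "go to the table":
        return 0.9 if state["holding"] and not state["at_table"] else 0.1
    if skill == "put down the apple":
        return 0.9 if state["holding"] and state["at_table"] else 0.05
    return 0.9 if state["apple_on_table"] else 0.05  # "done"


def execute(skill: str, state: dict[str, bool]) -> dict[str, bool]:
    """Toy simulator: every chosen skill succeeds and updates the world state."""
    new_state = dict(state)
    if skill == "find an apple":
        new_state["apple_visible"] = True
    elif skill == "pick up the apple":
        new_state["holding"] = True
    elif skill == "go to the table":
        new_state["at_table"] = True
    elif skill == "put down the apple":
        new_state["holding"] = False
        new_state["apple_on_table"] = True
    return new_state


def saycan_plan(max_steps: int = 10) -> list[str]:
    state = {"apple_visible": False, "holding": False,
             "at_table": False, "apple_on_table": False}
    history: list[str] = []
    for _ in range(max_steps):
        # Combined score = usefulness for the instruction x probability of success now.
        skill = max(SKILLS, key=lambda s: lm_score(s, history) * affordance(s, state))
        history.append(skill)
        if skill == "done":
            break
        state = execute(skill, state)
    return history


print(max(SKILLS, key=lambda s: lm_score(s, [])))  # pick up the apple (LM alone)
print(saycan_plan())
# ['find an apple', 'pick up the apple', 'go to the table', 'put down the apple', 'done']
```

In the real system, both factors come from learned models and the state comes from the robot's observations; only the argmax-of-product structure is meant to carry over.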
On the prompting side, the team proposed Chain of Thought Prompting (which can be understood as spelling out the problem-solving reasoning) to give language models stronger reasoning abilities. In standard prompting, one designs question templates with answers, and the model outputs an answer at inference time, but sometimes that answer is wrong. Chain of Thought Prompting therefore supplies an explanation alongside each example problem, which can significantly improve the model's results and even surpass human performance on some tasks.

Models are error-prone when handling negation. For example, when a user says "Give me a fruit, but not an apple," models tend to offer an apple, because apples appear both in the question and among the executable options. With Chain of Thought Prompting, explanations can be provided; for example, the model would output "The user wants a fruit, but not an apple. A banana is a fruit, not an apple. I can give the user a banana."

Chain of Thought Prompting can also handle subtler negative requirements. For example, a user says they are allergic to caffeine and asks the robot to get a drink. An allergy is another, subtler form of negation. With traditional methods, the robot might grab a caffeinated drink, because it does not understand the negation that the allergy implies. Chain of Thought Prompting can explain things like allergies and improve reasoning.

Combining large-model decision making with interaction with the environment is another important research direction. The team proposed Inner Monologue, which lets the language model review past decisions as the environment changes and recover from incorrect instructions or from accidents caused by the environment. For example, when a person gets home and finds that the key they picked does not open the door, they try another key or turn it the other way.
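The closed loop that Inner Monologue adds (act, check the result, and feed the result back into the next decision as text) can be sketched as follows. This is a minimal Python illustration under stated assumptions: `toy_planner` is a hypothetical stand-in for the language model (a real system would place the transcript in the prompt), and the scripted executor, which drops the cola on the first grasp, stands in for the robot plus a success detector.

```python
from typing import Callable

# Hypothetical plan for "bring me a cola"; the wording is illustrative.
PLAN = ["find a cola", "pick up the cola", "bring it to you", "done"]


def toy_planner(transcript: list[str]) -> str:
    """Stand-in for the language model: reads the textual feedback and picks the
    next action. Each success advances the plan; after a failure the count does
    not change, so the same step is issued again."""
    num_succeeded = transcript.count("Feedback: success")
    return PLAN[num_succeeded]


def make_executor(failures: dict[str, int]) -> Callable[[str], bool]:
    """Toy robot plus success detector: listed actions fail a set number of times."""
    remaining = dict(failures)

    def execute_and_detect(action: str) -> bool:
        if remaining.get(action, 0) > 0:
            remaining[action] -= 1
            return False  # e.g. the cola slipped out of the gripper
        return True

    return execute_and_detect


def inner_monologue(execute_and_detect: Callable[[str], bool], max_steps: int = 10) -> list[str]:
    transcript: list[str] = []
    for _ in range(max_steps):
        action = toy_planner(transcript)
        transcript.append(f"Robot: {action}")
        if action == "done":
            break
        succeeded = execute_and_detect(action)
        # The detector's verdict is written back as text for the next decision.
        transcript.append("Feedback: success" if succeeded else "Feedback: failure")
    return transcript


for line in inner_monologue(make_executor({"pick up the cola": 1})):
    print(line)
```

In this toy, the planner only ever retries the failed step. The point of putting a language model in that role, as in the key example, is that after reading the failure it could also choose a different action.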
The key example is about correcting mistakes and choosing new actions based on feedback from the environment, and Inner Monologue works the same way. For example, if the robot drops a cola while grasping it, the subsequent tasks cannot be completed. Inner Monologue detects whether each task succeeded, feeds that feedback into the decision-making process, and makes new decisions based on it.

As shown in the figure, Inner Monologue includes active scene description and a task success detector. When a human gives an instruction, the model executes it and activates scene descriptions to assist the robot's decision-making. Training still uses few-shot prompting, so the model can generalize from a few examples. For example, when the robot is instructed to get a drink, it asks the human whether to get a Coke or a soda.

04 Q&A

Q: When working on Inner Monologue, does the agent also take the initiative to ask questions? How is this incorporated?

Q: How does the robot know where an item is (for example, that potato chips are in the drawer)? If robots become more capable in the future, will the search space become too large during exploration?

A: The robot's knowledge of where items are stored is currently hard-coded, not an automatic process. However, large language models also contain some semantic knowledge, such as where items are usually kept, and this semantic knowledge can reduce the search space. Exploration can also be guided by the probability of finding an item in each location. Xia Fei's team has recently published new work addressing this problem; its core idea is to build a scene representation indexed by natural language. Reference website: nlmap-saycan.github.io

Q: Has the hierarchical reinforcement learning that has emerged in recent years provided any inspiration for complex task planning?
A: PaLM-SayCan is similar to hierarchical reinforcement learning in that it has low-level skills and high-level task planning, so it can be called a hierarchical method, but it is not hierarchical reinforcement learning. I personally prefer this layered approach, because task planning does not necessarily need to work out every detailed step, which would waste time. Task planning can be trained on massive Internet data, but low-level skills require physical data, so they must be learned by interacting with the environment.

Q: When PaLM-SayCan is actually deployed on robots, are there any fundamental issues that remain unresolved? If it were to serve as an everyday household helper, how long would it take to get there?

A: Some fundamental issues remain unresolved, and they are not simple engineering issues. In principle, the robot's low-level motion control and grasping are a major challenge: we still cannot achieve 100% grasping success, which is a big problem.

Of course, it can already provide some value to people with limited mobility. But it is not yet ready to be a true commercial product: the task success rate is about 90%, which does not meet commercial requirements.

Q: Is the success rate of robot planning limited by the training dataset?

A: The robot's planning ability is limited by the training corpus. Instructions such as "throw away the garbage" are easy to find in the corpus, but there is almost nothing like "move the robot's two-finger gripper 10 centimeters to the right," because people do not write such things on the Internet. This is a question of granularity: limited by the corpus, robots can currently only complete coarse-grained tasks.
On the other hand, fine-grained planning should not be done by the language model in the first place, because it involves too much physical information that may not be describable in human language. One idea is to implement fine-grained operations with imitation learning (see the BC-Z work) or code generation (see the team's latest work, https://code-as-policies.github.io/). The larger role of the large model is to serve as the user's interface: interpreting the instructions humans give the robot and decomposing them into steps the machine can execute.

In addition, language can handle high-level semantic planning without needing more physical planning. Fine-grained planning tasks still have to rely on imitation learning or reinforcement learning.

More embodied intelligence work: improving the model's reasoning ability and using environmental feedback to form a closed loop

1. Chain of Thought Prompting: understanding complex common sense

2. Inner Monologue: correcting errors and returning to the correct execution track

Q: Was the large language model in PaLM-SayCan trained from scratch, or was an existing model used as-is?

The above is the detailed content of Google scientists speak personally: How to implement embodied reasoning? Let the large model 'speak' the language of the robot. For more information, please follow other related articles on the PHP Chinese website!

A Comprehensive Guide to Selenium with PythonApr 15, 2025 am 09:57 AM

A Comprehensive Guide to Selenium with PythonApr 15, 2025 am 09:57 AMIntroduction This guide explores the powerful combination of Selenium and Python for web automation and testing. Selenium automates browser interactions, significantly improving testing efficiency for large web applications. This tutorial focuses o

A Guide to Understanding Interaction TermsApr 15, 2025 am 09:56 AM

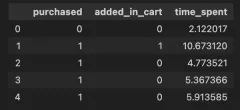

A Guide to Understanding Interaction TermsApr 15, 2025 am 09:56 AMIntroduction Interaction terms are incorporated in regression modelling to capture the effect of two or more independent variables in the dependent variable. At times, it is not just the simple relationship between the control

Swiggy's Hermes: AI Solution for Seamless Data-Driven DecisionsApr 15, 2025 am 09:50 AM

Swiggy's Hermes: AI Solution for Seamless Data-Driven DecisionsApr 15, 2025 am 09:50 AMSwiggy's Hermes: Revolutionizing Data Access with Generative AI In today's data-driven landscape, Swiggy, a leading Indian food delivery service, is leveraging the power of generative AI through its innovative tool, Hermes. Designed to accelerate da

Gaurav Agarwal's Blueprint for Success with RagaAI - Analytics VidhyaApr 15, 2025 am 09:46 AM

Gaurav Agarwal's Blueprint for Success with RagaAI - Analytics VidhyaApr 15, 2025 am 09:46 AMThis episode of "Leading with Data" features Gaurav Agarwal, CEO and founder of RagaAI, a company focused on ensuring the reliability of generative AI. Gaurav discusses his journey in AI, the challenges of building dependable AI systems, a

Grok 2 Image Generator: Shown Angry Elon Musk Holding AR15Apr 15, 2025 am 09:45 AM

Grok 2 Image Generator: Shown Angry Elon Musk Holding AR15Apr 15, 2025 am 09:45 AMGrok-2: Unfiltered AI Image Generation Sparks Ethical Debate Elon Musk's xAI has launched Grok-2, a powerful AI model boasting enhanced chat, coding, and reasoning capabilities, alongside a controversial unfiltered image generator. This release has

Top 10 GitHub Repositories to Master Statistics - Analytics VidhyaApr 15, 2025 am 09:44 AM

Top 10 GitHub Repositories to Master Statistics - Analytics VidhyaApr 15, 2025 am 09:44 AMStatistical Mastery: Top 10 GitHub Repositories for Data Science Statistics is fundamental to data science and machine learning. This article explores ten leading GitHub repositories that provide excellent resources for mastering statistical concept

How to Become Robotics Engineer?Apr 15, 2025 am 09:41 AM

How to Become Robotics Engineer?Apr 15, 2025 am 09:41 AMRobotics: A Rewarding Career Path in a Rapidly Expanding Field The field of robotics is experiencing explosive growth, driving innovation across numerous sectors and daily life. From automated manufacturing to medical robots and autonomous vehicles,

How to Remove Duplicates in Excel? - Analytics VidhyaApr 15, 2025 am 09:20 AM

How to Remove Duplicates in Excel? - Analytics VidhyaApr 15, 2025 am 09:20 AMData Integrity: Removing Duplicates in Excel for Accurate Analysis Clean data is crucial for effective decision-making. Duplicate entries in Excel spreadsheets can lead to errors and unreliable analysis. This guide shows you how to easily remove dup

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Dreamweaver CS6

Visual web development tools

Safe Exam Browser

Safe Exam Browser is a secure browser environment for taking online exams securely. This software turns any computer into a secure workstation. It controls access to any utility and prevents students from using unauthorized resources.

SublimeText3 Linux new version

SublimeText3 Linux latest version

MantisBT

Mantis is an easy-to-deploy web-based defect tracking tool designed to aid in product defect tracking. It requires PHP, MySQL and a web server. Check out our demo and hosting services.

WebStorm Mac version

Useful JavaScript development tools