A large model development toolset has been created!

Author | Richard MacManus

Planning | Yan Zheng

Web3 failed to subvert Web2, but the emerging large model development stack is letting developers move from the "cloud native" era toward a new AI technology stack.

Prompt engineering alone may not be what drives developers to rush toward large models. But questions from a product manager or team lead, such as "Can we build an agent?", "Can we implement a chain?", and "Which vector database should we use?", have become the hurdles that engineers at mainstream large model application companies must clear when building generative AI.

What are the layers of this emerging technology stack, and which part is the hardest? This article walks through them.

1. The technology stack needs an update: developers are entering the era of the AI engineer

In the past year, tools such as LangChain and LlamaIndex have emerged, and the developer ecosystem for AI applications has begun to mature. There is even a term for developers who focus on artificial intelligence: "AI engineer". According to Shawn @swyx Wang, this is the next step up from "prompt engineer". He also created a chart showing where AI engineers fit into the broader artificial intelligence ecosystem.

Source: swyx

Large language models (LLMs) are the core technology of the AI engineer. It is no coincidence that both LangChain and LlamaIndex are tools that extend and complement LLMs. But what other tools are available to this new breed of developer?

So far, the best diagram I’ve seen of the LLM stack comes from venture capital firm Andreessen Horowitz (a16z). The following is its view on the "LLM app stack":

Source: a16z

2. Yes, the top layer is still data

In the LLM technology stack, data is clearly the most important component, and in a16z's diagram the data layer sits at the top. Within that layer, the "embedding model" is a key area: you can choose from OpenAI, Cohere, Hugging Face, or dozens of other LLM options, including the increasingly popular open source LLMs.

Before using an LLM, you need to set up a "data pipeline". Databricks and Airflow are given as two examples, or the data can be handled "unstructured". This step also fits into the data lifecycle: it helps a company "clean" or simply organize its data before feeding it into a custom LLM. "Data intelligence" companies like Alation offer this type of service, which sounds a bit like the better-known "business intelligence" tools in the IT technology stack.
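
To make the "clean or simply organize" step concrete, here is a minimal sketch in Python. It is not how Databricks, Airflow, or Alation work; the function name `clean_records` and the sample records are hypothetical, and the cleaning rules (whitespace normalization, dropping empty entries, case-insensitive de-duplication) are assumptions chosen only for illustration.

```python
import re


def clean_records(records):
    """Normalize whitespace, drop empty entries, remove case-insensitive duplicates."""
    seen = set()
    cleaned = []
    for text in records:
        # Collapse runs of whitespace (including newlines) into single spaces.
        normalized = re.sub(r"\s+", " ", text).strip()
        if not normalized:
            continue  # skip records that are empty after normalization
        key = normalized.lower()
        if key in seen:
            continue  # skip records already seen, ignoring case
        seen.add(key)
        cleaned.append(normalized)
    return cleaned


# Hypothetical raw records, as they might arrive from an upstream source.
raw = ["  Reset your   password\n", "", "reset your password", "Contact support"]
print(clean_records(raw))
```

A real pipeline would run a step like this on a schedule and write the result to wherever the embedding step reads from.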

The last part of the data layer is the vector database, which is very popular these days and is used to store and process LLM data. According to Microsoft's definition, this is a database that stores data as high-dimensional vectors, which are mathematical representations of features or attributes. Data is converted into vectors using embedding techniques. In a media chat, leading vector database vendor Pinecone noted that its tools are often used together with data pipeline tools such as Databricks. In this case, the data typically lives elsewhere (such as a data lake) and is transformed into embeddings by a machine learning model. After processing and chunking, the resulting vectors are sent to Pinecone.
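
The chunk, embed, store, and query flow described above can be sketched in a few lines of Python. Everything here is an assumption made for illustration: `embed` is a toy word-count stand-in for a real embedding model, `chunk` splits on sentence boundaries, and `InMemoryVectorStore` is a hypothetical class that does not reflect the API of Pinecone or any other product.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: a sparse word-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def chunk(text):
    """Split text into sentence-sized chunks."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


class InMemoryVectorStore:
    """Hypothetical minimal store, for illustration only."""

    def __init__(self):
        self.items = []  # list of (vector, original chunk text)

    def upsert(self, text):
        self.items.append((embed(text), text))

    def query(self, text, top_k=1):
        q = embed(text)
        scored = sorted(self.items, key=lambda item: cosine(q, item[0]), reverse=True)
        return [t for _, t in scored[:top_k]]


store = InMemoryVectorStore()
document = (
    "Vector databases store embeddings for similarity search. "
    "Data pipelines clean and load records before indexing."
)
for piece in chunk(document):
    store.upsert(piece)

print(store.query("embeddings similarity search"))
```

In a production stack the `embed` call would go to an embedding model and the store would be a hosted vector database, but the shape of the flow stays the same.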

3. Prompts and Queries

The next two layers can be summarized as prompts and queries. This is the point where an AI application interfaces with the LLM and (optionally) other data tools. a16z positions LangChain and LlamaIndex as "orchestration frameworks," meaning that once developers know which LLM they are using, they can leverage these tools.

According to a16z, orchestration frameworks like LangChain and LlamaIndex "abstract away many of the details of prompt chaining," which means querying and managing data between the application and the LLM. This orchestration process includes interfacing with external APIs, retrieving context data from the vector database, and maintaining memory across multiple LLM calls. The most interesting box in a16z’s diagram is “Playground,” which includes OpenAI, nat.dev, and Humanloop.
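
As a rough picture of what such a framework abstracts away, the sketch below chains retrieval, prompt assembly, an LLM call, and memory in plain Python. It does not use LangChain or LlamaIndex and makes no claim about their APIs: `fake_llm`, `retrieve_context`, and `SimpleChain` are hypothetical names, and the "LLM" is a stub that only reports how many lines the prompt had.

```python
def fake_llm(prompt):
    """Hypothetical stand-in for a real LLM API call."""
    return f"[answer based on {len(prompt.splitlines())} prompt lines]"


def retrieve_context(question, documents):
    """Naive retrieval: keep documents sharing at least one word with the question."""
    q_words = set(question.lower().split())
    return [d for d in documents if q_words & set(d.lower().split())]


class SimpleChain:
    """Builds a prompt from retrieved context plus memory, then calls the LLM."""

    def __init__(self, llm, documents):
        self.llm = llm
        self.documents = documents
        self.memory = []  # (question, answer) pairs from earlier calls

    def run(self, question):
        context = retrieve_context(question, self.documents)
        lines = ["Answer using the context and the conversation so far."]
        lines += [f"Context: {c}" for c in context]
        lines += [f"Earlier Q: {q} / A: {a}" for q, a in self.memory]
        lines.append(f"Question: {question}")
        answer = self.llm("\n".join(lines))
        self.memory.append((question, answer))  # remembered for the next call
        return answer


docs = ["pinecone stores vectors", "airflow schedules pipelines"]
chain = SimpleChain(fake_llm, docs)
print(chain.run("what stores vectors"))
print(chain.run("what schedules pipelines"))
```

The second call's prompt is one line longer than the first because it carries the earlier question and answer, which is the "memory across multiple LLM calls" idea in miniature.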

a16z doesn't define the term exactly in its blog post, but we can infer that "Playground" tools help developers perform what a16z calls "prompt jiu-jitsu." These are places where developers can experiment with various prompting techniques.

Humanloop is a British company whose platform features a “collaborative prompt workspace.” It further describes itself as a "complete development toolkit for production LLM functionality." So basically it lets you try things out with an LLM and then deploy them into your application if they work.

4. Assembly line operations: LLMOps

The layout of the large model production pipeline is gradually becoming clear. To the right of the orchestration box are a number of operations boxes, including LLM caching and validation. There is also a series of LLM-related cloud and API services, including open API repositories such as Hugging Face and proprietary API providers such as OpenAI.
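
Caching and validation are easy to picture with a small sketch. The code below is an assumption-laden illustration, not the behavior of any specific LLMOps product: `CachedLLM` is a hypothetical exact-match cache keyed on a hash of the prompt, `validate_json_reply` is a hypothetical check that a reply is a JSON object with the expected keys, and `fake_llm` stands in for a real model call.

```python
import hashlib
import json


class CachedLLM:
    """Wraps any prompt -> text callable with an exact-match cache."""

    def __init__(self, llm):
        self.llm = llm
        self.cache = {}
        self.calls = 0  # number of times the underlying model was invoked

    def __call__(self, prompt):
        key = hashlib.sha256(prompt.encode("utf-8")).hexdigest()
        if key not in self.cache:
            self.calls += 1
            self.cache[key] = self.llm(prompt)
        return self.cache[key]


def validate_json_reply(reply, required_keys):
    """Return the parsed reply if it is a JSON object with the required keys, else None."""
    try:
        data = json.loads(reply)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict) or not set(required_keys).issubset(data):
        return None
    return data


def fake_llm(prompt):
    """Hypothetical stand-in for a real model call."""
    return json.dumps({"sentiment": "positive", "prompt_length": len(prompt)})


llm = CachedLLM(fake_llm)
first = llm("Classify: great product")
second = llm("Classify: great product")  # identical prompt, served from the cache
print(llm.calls)
print(validate_json_reply(first, ["sentiment"]))
print(validate_json_reply("not json", ["sentiment"]))
```

Exact-match caching only helps when prompts repeat verbatim; caches that match on similar rather than identical prompts are a separate design choice not shown here.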

This is perhaps the part of the stack most similar to what developers are used to from the "cloud native" era, and it is no coincidence that many DevOps companies have added artificial intelligence to their product lists. In May, I spoke with Harness CEO Jyoti Bansal. Harness runs a "software delivery platform" that focuses on the "CD" part of the CI/CD process.

Bansal told me that AI can alleviate the tedious and repetitive tasks involved in the software delivery life cycle, from generating specifications based on existing functionality to writing code. Additionally, he said AI can automate code reviews, vulnerability testing, and bug fixes, and even create CI/CD pipelines for builds and deployments. According to another conversation I had in May, AI is also changing developer productivity. Trisha Gee from the build automation tool Gradle told me that AI can speed up development by reducing time spent on repetitive tasks, like writing boilerplate code, and allowing developers to focus on the big picture, like making sure the code meets business needs.

5. Web3 is out, and the large model development stack is coming

In the emerging LLM development stack, we can see a series of new product types, such as orchestration frameworks (LangChain and LlamaIndex), vector databases, and "playground" platforms such as Humanloop. All of these products extend and/or complement the core technology of the current era, large language models, much as tools such as Spring Cloud and Kubernetes rose in the cloud-native era. At the same time, almost every company from the cloud-native era, large or small, is working hard to adapt its tools to AI engineering, which will greatly benefit the future development of the LLM technology stack.

Yes, this time the big model seems to be "standing on the shoulders of giants." The best innovations in computer technology are always based on the past. Maybe that's why the "Web3" revolution failed - it wasn't so much building on the previous generation as trying to usurp it.

The LLM technology stack seems to have pulled this off: it has become a bridge from the cloud development era to a newer, artificial intelligence-based developer ecosystem.

Reference link:

https://www.php.cn/link/c589c3a8f99401b24b9380e86d939842

Explaining the reasons why you cannot use your credit card with ChatGPT's paid plan and how to deal with itMay 14, 2025 am 03:32 AM

Explaining the reasons why you cannot use your credit card with ChatGPT's paid plan and how to deal with itMay 14, 2025 am 03:32 AMTroubleshooting Guide for Credit Card Payment with ChatGPT Paid Subscriptions Credit card payments may be problematic when using ChatGPT paid subscription. This article will discuss the reasons for credit card rejection and the corresponding solutions, from problems solved by users themselves to the situation where they need to contact a credit card company, and provide detailed guides to help you successfully use ChatGPT paid subscription. OpenAI's latest AI agent, please click ⬇️ for details of "OpenAI Deep Research" 【ChatGPT】Detailed explanation of OpenAI Deep Research: How to use and charging standards Table of contents Causes of failure in ChatGPT credit card payment Reason 1: Incorrect input of credit card information Original

An easy-to-understand explanation of how to create a VBA macro in ChatGPT!May 14, 2025 am 02:40 AM

An easy-to-understand explanation of how to create a VBA macro in ChatGPT!May 14, 2025 am 02:40 AMFor beginners and those interested in business automation, writing VBA scripts, an extension to Microsoft Office, may find it difficult. However, ChatGPT makes it easy to streamline and automate business processes. This article explains in an easy-to-understand manner how to develop VBA scripts using ChatGPT. We will introduce in detail specific examples, from the basics of VBA to script implementation using ChatGPT integration, testing and debugging, and benefits and points to note. With the aim of improving programming skills and improving business efficiency,

I can't use the ChatGPT plugin function! Explaining what to do in case of an errorMay 14, 2025 am 01:56 AM

I can't use the ChatGPT plugin function! Explaining what to do in case of an errorMay 14, 2025 am 01:56 AMChatGPT plugin cannot be used? This guide will help you solve your problem! Have you ever encountered a situation where the ChatGPT plugin is unavailable or suddenly fails? The ChatGPT plugin is a powerful tool to enhance the user experience, but sometimes it can fail. This article will analyze in detail the reasons why the ChatGPT plug-in cannot work properly and provide corresponding solutions. From user setup checks to server troubleshooting, we cover a variety of troubleshooting solutions to help you efficiently use plug-ins to complete daily tasks. OpenAI Deep Research, the latest AI agent released by OpenAI. For details, please click ⬇️ [ChatGPT] OpenAI Deep Research Detailed explanation:

Does ChatGPT not follow the character count specification? A thorough explanation of how to deal with this!May 14, 2025 am 01:54 AM

Does ChatGPT not follow the character count specification? A thorough explanation of how to deal with this!May 14, 2025 am 01:54 AMWhen writing a sentence using ChatGPT, there are times when you want to specify the number of characters. However, it is difficult to accurately predict the length of sentences generated by AI, and it is not easy to match the specified number of characters. In this article, we will explain how to create a sentence with the number of characters in ChatGPT. We will introduce effective prompt writing, techniques for getting answers that suit your purpose, and teach you tips for dealing with character limits. In addition, we will explain why ChatGPT is not good at specifying the number of characters and how it works, as well as points to be careful about and countermeasures. This article

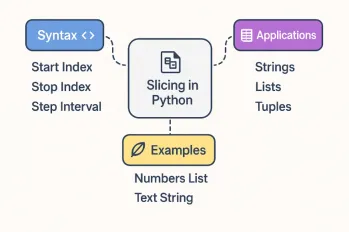

All About Slicing Operations in PythonMay 14, 2025 am 01:48 AM

All About Slicing Operations in PythonMay 14, 2025 am 01:48 AMFor every Python programmer, whether in the domain of data science and machine learning or software development, Python slicing operations are one of the most efficient, versatile, and powerful operations. Python slicing syntax a

An easy-to-understand explanation of how to use ChatGPT to create quotes!May 14, 2025 am 01:44 AM

An easy-to-understand explanation of how to use ChatGPT to create quotes!May 14, 2025 am 01:44 AMThe evolution of AI technology has accelerated business efficiency. What's particularly attracting attention is the creation of estimates using AI. OpenAI's AI assistant, ChatGPT, contributes to improving the estimate creation process and improving accuracy. This article explains how to create a quote using ChatGPT. We will introduce efficiency improvements through collaboration with Excel VBA, specific examples of application to system development projects, benefits of AI implementation, and future prospects. Learn how to improve operational efficiency and productivity with ChatGPT. Op

What is ChatGPT Pro (o1 Pro)? Explaining what you can do, the prices, and the differences between them from other plans!May 14, 2025 am 01:40 AM

What is ChatGPT Pro (o1 Pro)? Explaining what you can do, the prices, and the differences between them from other plans!May 14, 2025 am 01:40 AMOpenAI's latest subscription plan, ChatGPT Pro, provides advanced AI problem resolution! In December 2024, OpenAI announced its top-of-the-line plan, the ChatGPT Pro, which costs $200 a month. In this article, we will explain its features, particularly the performance of the "o1 pro mode" and new initiatives from OpenAI. This is a must-read for researchers, engineers, and professionals aiming to utilize advanced AI. ChatGPT Pro: Unleash advanced AI power ChatGPT Pro is the latest and most advanced product from OpenAI.

We explain how to create and correct your motivation for applying using ChatGPT! Also introduce the promptMay 14, 2025 am 01:29 AM

We explain how to create and correct your motivation for applying using ChatGPT! Also introduce the promptMay 14, 2025 am 01:29 AMIt is well known that the importance of motivation for applying when looking for a job is well known, but I'm sure there are many job seekers who struggle to create it. In this article, we will introduce effective ways to create a motivation statement using the latest AI technology, ChatGPT. We will carefully explain the specific steps to complete your motivation, including the importance of self-analysis and corporate research, points to note when using AI, and how to match your experience and skills with company needs. Through this article, learn the skills to create compelling motivation and aim for successful job hunting! OpenAI's latest AI agent, "Open

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),

SublimeText3 Chinese version

Chinese version, very easy to use

WebStorm Mac version

Useful JavaScript development tools

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver Mac version

Visual web development tools