VMware and NVIDIA today announced the expansion of their strategic partnership to help thousands of companies using VMware cloud infrastructure prepare for the AI era.

VMware Private AI Foundation with NVIDIA will enable enterprises to customize models and run a variety of generative AI applications such as intelligent chatbots, assistants, search and summarization, and more. The platform will be a fully integrated solution using generative AI software and accelerated computing from NVIDIA, built on VMware Cloud Foundation and optimized for AI.

Raghu Raghuram, CEO of VMware, said: “Generative AI and multi-cloud are a perfect match. Customers’ data is everywhere, in their data centers, at the edge, in the cloud, and more. Together with NVIDIA, we will help enterprises run generative AI workloads next to their data with confidence while addressing their concerns around enterprise data privacy, security, and control.”

NVIDIA founder and CEO Jensen Huang said: “Enterprises around the world are racing to integrate generative AI into their businesses. By expanding our cooperation with VMware, we will be able to provide thousands of customers across financial services, healthcare, manufacturing and other fields with the full-stack software and computing they need to realize the full potential of generative AI with applications customized for their own data.”

Full stack computing greatly improves the performance of generative AI

To realize business benefits faster, enterprises want to simplify and increase the efficiency of developing, testing and deploying generative AI applications. According to McKinsey, generative AI could add as much as $4.4 trillion to the global economy annually(1).

VMware Private AI Foundation with NVIDIA will help enterprises take full advantage of this capability to customize large language models, create more secure private models for internal use, provide generative AI as a service to users, and more securely run inference workloads at scale.

The platform plans to provide a variety of integrated AI tools that will help enterprises cost-effectively run mature models trained on their private data. The platform, built on VMware Cloud Foundation and NVIDIA AI Enterprise software, is expected to deliver the following benefits:

• Privacy: Customers will be able to easily run AI services wherever their data resides with an architecture that protects data privacy and secures access.

• Choice: From NVIDIA NeMo™ to Llama 2 and beyond, enterprises will have a wide range of choices in where to build and run their models, including leading OEM hardware configurations and future public cloud and service provider solutions.

• Performance: Recent industry benchmarks show that certain use cases running on NVIDIA-accelerated infrastructure match or exceed bare metal performance.

• Datacenter Scale: Optimized GPU scaling in virtualized environments enables AI workloads to scale up to 16 vGPUs/GPUs in a single VM and across multiple nodes, accelerating the fine-tuning and deployment of generative AI models.

• Lower Cost: Utilization of all computing resources across GPUs, DPUs, and CPUs will be maximized to reduce overall costs and create a pooled resource environment that can be shared efficiently across teams.

• Accelerated Storage: VMware vSAN Express Storage Architecture delivers performance-optimized NVMe storage and supports GPUDirect® Storage over RDMA, enabling direct I/O transfers from storage to the GPU without CPU involvement.

• Accelerated Networking: Deep integration between vSphere and NVIDIA NVSwitch™ technology will further ensure that multi-GPU models can be executed without inter-GPU bottlenecks.

• Rapid Deployment and Time to Value: vSphere Deep Learning VM images and libraries will provide stable, turnkey solution images that come pre-installed with various frameworks and performance-optimized libraries for rapid prototyping.

The platform will be powered by NVIDIA NeMo, an end-to-end cloud-native framework included in NVIDIA AI Enterprise, the operating system of the NVIDIA AI platform, that enables enterprises to build, customize and deploy generative AI models virtually anywhere. NeMo combines customization frameworks, guardrail toolkits, data curation tools, and pre-trained models to give enterprises a simple, affordable, and fast way to adopt generative AI.

To deploy generative AI into production, NeMo uses TensorRT for Large Language Models (TRT-LLM) to accelerate and optimize the inference performance of the latest LLMs on NVIDIA GPUs. Through NeMo, VMware Private AI Foundation with NVIDIA will enable enterprises to import their own data and build and run custom generative AI models on VMware hybrid cloud infrastructure.

At the VMware Explore 2023 conference, NVIDIA and VMware will highlight how developers within the enterprise can use the new NVIDIA AI Workbench to pull community models (such as Llama 2, available on Hugging Face), customize them remotely, and deploy production-grade generative AI in VMware environments.

Extensive ecosystem support for VMware Private AI Foundation with NVIDIA

VMware Private AI Foundation with NVIDIA will be supported by Dell, HPE and Lenovo. The three companies will be the first to offer systems powered by NVIDIA L40S GPUs, NVIDIA BlueField®-3 DPUs, and NVIDIA ConnectX®-7 SmartNICs that will accelerate enterprise LLM customization and inference workloads.

Compared with the NVIDIA A100 Tensor Core GPU, NVIDIA L40S GPUs enable up to 1.2x more generative AI inference performance and up to 1.7x more training performance.

NVIDIA BlueField-3 DPUs accelerate, offload and isolate the massive compute load of virtualization, networking, storage, security, and other cloud-native AI services from the GPU or CPU.

NVIDIA ConnectX-7 SmartNICs provide intelligent, accelerated networking for data center infrastructure to host some of the world’s most demanding AI workloads.

VMware Private AI Foundation with NVIDIA builds on a decade-long collaboration between the two companies. Their joint engineering work has optimized VMware's cloud infrastructure so that it can run NVIDIA AI Enterprise with performance comparable to that of bare metal. Mutual customers will further benefit from the resource and infrastructure management and flexibility provided by VMware Cloud Foundation.

Availability

VMware plans to release VMware Private AI Foundation with NVIDIA in early 2024.

The above is the detailed content of VMware and NVIDIA usher in the era of generative AI for enterprises. For more information, please follow other related articles on the PHP Chinese website!

What is Model Context Protocol (MCP)?Mar 03, 2025 pm 07:09 PM

What is Model Context Protocol (MCP)?Mar 03, 2025 pm 07:09 PMThe Model Context Protocol (MCP): A Universal Connector for AI and Data We're all familiar with AI's role in daily coding. Replit, GitHub Copilot, Black Box AI, and Cursor IDE are just a few examples of how AI streamlines our workflows. But imagine

Building a Local Vision Agent using OmniParser V2 and OmniToolMar 03, 2025 pm 07:08 PM

Building a Local Vision Agent using OmniParser V2 and OmniToolMar 03, 2025 pm 07:08 PMMicrosoft's OmniParser V2 and OmniTool: Revolutionizing GUI Automation with AI Imagine AI that not only understands but also interacts with your Windows 11 interface like a seasoned professional. Microsoft's OmniParser V2 and OmniTool make this a re

Replit Agent: A Guide With Practical ExamplesMar 04, 2025 am 10:52 AM

Replit Agent: A Guide With Practical ExamplesMar 04, 2025 am 10:52 AMRevolutionizing App Development: A Deep Dive into Replit Agent Tired of wrestling with complex development environments and obscure configuration files? Replit Agent aims to simplify the process of transforming ideas into functional apps. This AI-p

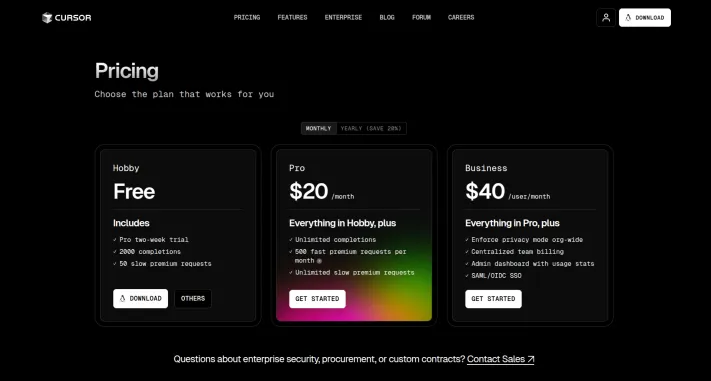

I Tried Vibe Coding with Cursor AI and It's Amazing!Mar 20, 2025 pm 03:34 PM

I Tried Vibe Coding with Cursor AI and It's Amazing!Mar 20, 2025 pm 03:34 PMVibe coding is reshaping the world of software development by letting us create applications using natural language instead of endless lines of code. Inspired by visionaries like Andrej Karpathy, this innovative approach lets dev

Runway Act-One Guide: I Filmed Myself to Test ItMar 03, 2025 am 09:42 AM

Runway Act-One Guide: I Filmed Myself to Test ItMar 03, 2025 am 09:42 AMThis blog post shares my experience testing Runway ML's new Act-One animation tool, covering both its web interface and Python API. While promising, my results were less impressive than expected. Want to explore Generative AI? Learn to use LLMs in P

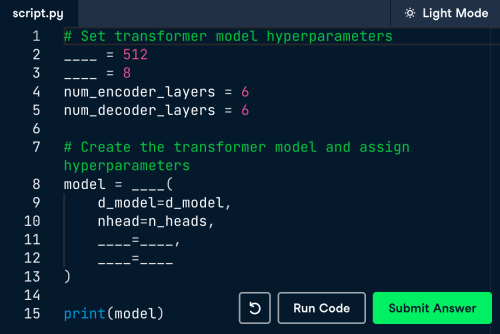

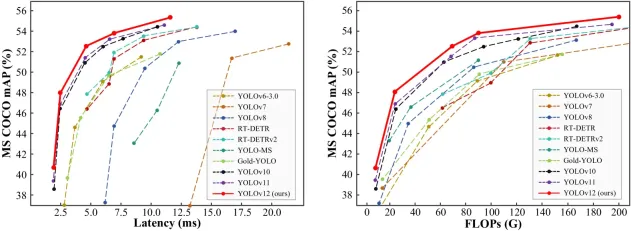

How to Use YOLO v12 for Object Detection?Mar 22, 2025 am 11:07 AM

How to Use YOLO v12 for Object Detection?Mar 22, 2025 am 11:07 AMYOLO (You Only Look Once) has been a leading real-time object detection framework, with each iteration improving upon the previous versions. The latest version YOLO v12 introduces advancements that significantly enhance accuracy

Top 5 GenAI Launches of February 2025: GPT-4.5, Grok-3 & More!Mar 22, 2025 am 10:58 AM

Top 5 GenAI Launches of February 2025: GPT-4.5, Grok-3 & More!Mar 22, 2025 am 10:58 AMFebruary 2025 has been yet another game-changing month for generative AI, bringing us some of the most anticipated model upgrades and groundbreaking new features. From xAI’s Grok 3 and Anthropic’s Claude 3.7 Sonnet, to OpenAI’s G

Elon Musk & Sam Altman Clash over $500 Billion Stargate ProjectMar 08, 2025 am 11:15 AM

Elon Musk & Sam Altman Clash over $500 Billion Stargate ProjectMar 08, 2025 am 11:15 AMThe $500 billion Stargate AI project, backed by tech giants like OpenAI, SoftBank, Oracle, and Nvidia, and supported by the U.S. government, aims to solidify American AI leadership. This ambitious undertaking promises a future shaped by AI advanceme

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

WebStorm Mac version

Useful JavaScript development tools

SublimeText3 Linux new version

SublimeText3 Linux latest version

ZendStudio 13.5.1 Mac

Powerful PHP integrated development environment

SublimeText3 Mac version

God-level code editing software (SublimeText3)

SublimeText3 English version

Recommended: Win version, supports code prompts!