
Cambridge, Tencent AI Lab and others proposed the large language model PandaGPT: one model unifies six modalities


Researchers from Cambridge, NAIST, and Tencent AI Lab recently released PandaGPT, a method that aligns and binds a large language model with different modalities to achieve cross-modal instruction-following capabilities. PandaGPT can accomplish complex tasks such as generating detailed image descriptions, writing stories from videos, and answering questions about audio. It can also receive multi-modal inputs simultaneously and combine their semantics naturally.

- Project homepage: https://panda-gpt.github.io/

- Code: https://github.com/yxuansu/PandaGPT

- Paper: http://arxiv.org/abs/2305.16355

- Online demo: https://huggingface.co/spaces/GMFTBY/PandaGPT

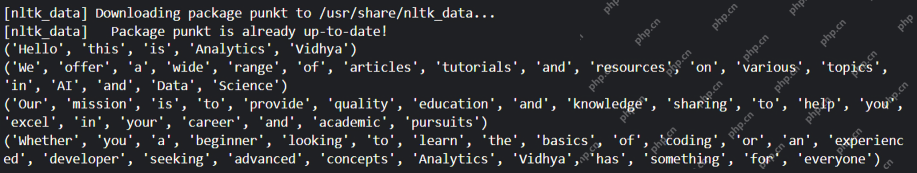

To achieve instruction-following capabilities across six modalities (image/video, text, audio, heat map, depth map, and IMU readings), PandaGPT combines ImageBind's multi-modal encoder with the Vicuna large language model (as shown in the figure above).
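The combination described above can be pictured with a minimal sketch. It assumes the encoder produces one embedding vector per input, that a linear layer maps it to the language model's embedding size, and that the result is placed between the embedded prompt tokens. All names, sizes, and values here are hypothetical toy stand-ins, not the project's actual code.

```python
import random


def linear_project(vec, weight, bias):
    """Map an encoder embedding (length d_in) to the LLM embedding size (length d_out)."""
    return [sum(w * x for w, x in zip(row, vec)) + b for row, b in zip(weight, bias)]


def build_input_sequence(prefix_embeds, modality_vec, suffix_embeds, weight, bias):
    """Place the projected modality embedding between the embedded text tokens."""
    projected = linear_project(modality_vec, weight, bias)
    return prefix_embeds + [projected] + suffix_embeds


random.seed(0)
D_ENCODER, D_LLM = 4, 6  # toy sizes, not the real model dimensions

# Hypothetical projection parameters (randomly initialised here, learned in practice).
weight = [[random.uniform(-1, 1) for _ in range(D_ENCODER)] for _ in range(D_LLM)]
bias = [0.0] * D_LLM

image_embedding = [0.1, -0.3, 0.7, 0.2]            # stand-in for an encoder output
prompt_prefix = [[0.0] * D_LLM for _ in range(2)]  # stand-in for embedded prompt tokens
prompt_suffix = [[1.0] * D_LLM for _ in range(3)]  # stand-in for embedded question tokens

sequence = build_input_sequence(prompt_prefix, image_embedding, prompt_suffix, weight, bias)
print(len(sequence), len(sequence[2]))  # sequence length and width of the inserted vector
```

Because the language model only ever sees a vector of its own embedding size, the same insertion step works no matter which modality the encoder was given.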

To align the feature spaces of ImageBind's multi-modal encoder and the Vicuna large language model, PandaGPT is trained on a total of 160k image-based language instruction-following examples, combining the data released by LLaVA and MiniGPT-4. Each training instance consists of an image and a corresponding set of dialogue rounds.

To avoid destroying ImageBind's own multi-modal alignment and to reduce training costs, PandaGPT updates only the following modules:

- A linear projection matrix added on top of ImageBind's encoding output, which converts the representation generated by ImageBind before it is inserted into Vicuna's input sequence;

- Additional LoRA weights added to Vicuna's attention modules.

Together, these two components account for about 0.4% of Vicuna's parameters. The training objective is the traditional language modeling objective. Notably, during training the loss is computed only on the model-output portion of each dialogue; the user-input portion is excluded. The entire training process takes about 7 hours on 8×A100 (40G) GPUs.
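The idea of training only on the model-output portion can be sketched as a masked language modeling loss. This is a minimal illustration, assuming per-token log-probabilities are already available and that a boolean mask marks which tokens belong to the model's response; the function name and the toy numbers are hypothetical.

```python
import math


def masked_lm_loss(token_log_probs, is_response_token):
    """Average negative log-likelihood over response tokens only.

    Tokens from the user input are skipped, so they contribute no gradient.
    """
    selected = [-lp for lp, keep in zip(token_log_probs, is_response_token) if keep]
    if not selected:
        raise ValueError("no response tokens to train on")
    return sum(selected) / len(selected)


# Toy probabilities the model assigns to each correct next token.
probs = [0.9, 0.8, 0.5, 0.25, 0.5]
log_probs = [math.log(p) for p in probs]

# First two tokens belong to the user input, the last three to the model's reply.
mask = [False, False, True, True, True]

loss = masked_lm_loss(log_probs, mask)
print(round(loss, 4))
```

Changing the probabilities of the two user-input tokens leaves the loss unchanged, which is the property the masking is meant to provide.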

It is worth emphasizing that the current version of PandaGPT is trained only on aligned image-text data, yet it inherits the ImageBind encoder's ability to understand six modalities (image/video, text, audio, depth, heat map, and IMU) and the alignment among them, which gives it cross-modal capabilities across all modalities.
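A toy sketch can suggest why a projection learned from images alone may carry over to other modalities. It assumes the encoder places inputs with similar meaning close together in one shared space regardless of modality. The embeddings and the projection weights below are invented for illustration and are not taken from the model.

```python
import math


def cosine(a, b):
    """Cosine similarity between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def project(vec, weight):
    """Apply a linear projection (no bias) to a shared-space embedding."""
    return [sum(w * x for w, x in zip(row, vec)) for row in weight]


# Hypothetical shared-space embeddings: the dog image and the dog bark are close.
dog_image = [0.9, 0.1, 0.0]
dog_bark_audio = [0.8, 0.2, 0.1]
car_image = [0.0, 0.1, 0.9]

# Stand-in for a projection that was learned from image-text pairs only.
weight = [[1.0, 0.5, 0.0],
          [0.0, 1.0, 0.5]]

same_concept = cosine(project(dog_image, weight), project(dog_bark_audio, weight))
different_concept = cosine(project(dog_image, weight), project(car_image, weight))
print(same_concept > different_concept)
```

In this toy setting the audio embedding lands near the image embedding of the same concept after projection, even though the projection never saw audio.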

In the experiments, the authors demonstrate PandaGPT's ability to understand different modalities, including image/video-based question answering, image/video-based creative writing, reasoning based on visual and auditory information, and more. Here are some examples:

Image:

Compared with other multi-modal language models, the most outstanding feature of PandaGPT is its ability to understand and naturally combine information from different modalities.

Video + audio:

Image + audio:

- PandaGPT could further improve its understanding of modalities other than images by training on alignment data for other modalities, such as ASR and TTS data for the audio-text modality, to achieve better understanding and instruction-following ability.

- Modalities other than text are each represented by only a single embedding vector, so the language model cannot capture fine-grained information from non-text modalities. More research on fine-grained feature extraction, such as cross-modal attention mechanisms, may help improve performance.

- PandaGPT currently accepts modalities other than text only as input. In the future, the model has the potential to unify all of AIGC in one model, so that a single model can simultaneously handle tasks such as image and video generation, speech synthesis, and text generation.

- New benchmarks are needed to evaluate the ability to combine multimodal inputs.

- PandaGPT may also exhibit some common pitfalls of existing language models, including hallucinations, toxicity, and stereotyping.
