Why are NVIDIA GPUs expensive?

Author: 江月

Editors: Tao Li, Lu Taoran

Picture source: Tuchong (图虫)

"The era of CPU scaling is over," Nvidia founder and CEO Jensen Huang (Huang Renxun) announced at the Taipei International Computer Show (Computex) on May 29. In a speech lasting two hours, he gave a thorough introduction to Nvidia's recent hardware, software, and new system products, organized around the "tipping point" brought about by generative AI.

Huang Renxun said that the "new computer" shaped by the GPU has arrived. The new computer built by Nvidia takes a different form from earlier machines, and a single unit sells for as much as US$200,000. Amid controversy over how "expensive" it is, Huang Renxun also said that the GPU is the only choice for any company with a limited power budget, and that it is the most "money-saving" infrastructure option.

Huang Renxun recently said in a public speech that, in the face of the AI era, people need to "run, not walk." In the garden of generative AI, Nvidia is clearly digging hard.

Decoding GPU data center costs

"Everyone always says that GPU data centers are expensive. Let me do the math for you." On May 29, at the Taipei International Computer Show, Nvidia founder and CEO Huang Renxun walked the public through GPU data center costs in detail.

NVIDIA brought its new AI computer, the DGX GH200, to its meeting with suppliers and customers. It is a supercomputer that integrates 256 of NVIDIA's highest-performance CPUs and GPUs and can meet the training needs of "very large models".

The DGX GH200 uses NVIDIA NVLink-C2C interconnect technology to link the Arm-based Grace architecture CPU with the Hopper architecture GPU, achieving a total bandwidth of up to 900GB/s, 7 times that of the standard PCIe Gen5 lanes used in traditional accelerated systems. This kind of computing power can meet the demands of today's most demanding generative AI and HPC applications.
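The "7 times" figure can be sanity-checked with rough arithmetic. The sketch below is only an illustration under stated assumptions that are not in the article: it compares against a 16-lane PCIe Gen5 link at 32 GT/s per lane with 128b/130b encoding, counts both directions, and ignores protocol overhead. All constant names are made up for this example.

```python
# Rough check of "900 GB/s is about 7x PCIe Gen5".
# Assumptions (not from the article): x16 link, 32 GT/s per lane,
# 128b/130b line encoding, both directions counted, no protocol overhead.
NVLINK_C2C_GB_PER_S = 900.0        # total bandwidth quoted in the article

PCIE_GEN5_GT_PER_LANE = 32.0       # giga-transfers per second per lane
PCIE_LANES = 16                    # assumed link width
ENCODING_EFFICIENCY = 128 / 130    # 128b/130b encoding

# One transfer carries one bit per lane; divide by 8 to get bytes.
pcie_one_way_gb_per_s = (
    PCIE_GEN5_GT_PER_LANE * PCIE_LANES * ENCODING_EFFICIENCY / 8
)
pcie_two_way_gb_per_s = 2 * pcie_one_way_gb_per_s

ratio = NVLINK_C2C_GB_PER_S / pcie_two_way_gb_per_s

print(f"PCIe Gen5 x16, both directions: {pcie_two_way_gb_per_s:.1f} GB/s")
print(f"NVLink-C2C / PCIe Gen5 x16:     {ratio:.2f}x")
```

Under these assumptions the ratio comes out slightly above 7, which is in line with the figure quoted in the speech.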

The picture shows the GH200 Grace Hopper super chip announced by NVIDIA on May 29

Photo courtesy of NVIDIA

As market demand for data center infrastructure grows, Nvidia now also needs to do some "selling" to the market. The most urgent task is to dispel the fear that its equipment is "expensive".

To put it simply, "Using a GPU data center, you can get 150 times the performance and save 2/3 of the cost," Huang Renxun said.

Huang Renxun explained that, taking a budget of US$10 million as an example, AIGC developers can build a data center of 960 CPU servers, which is enough to train one large language model and ultimately consumes 11 gigawatt-hours (GWh) of electricity, equivalent to 11 million kilowatt-hours.

If the same budget goes to GPUs instead, it buys a data center of only 48 GPU servers. That data center, however, can train 44 large language models while consuming a total of 3.2 GWh (equivalent to 3.2 million kilowatt-hours).

By this calculation, judged on single-chip price alone, a GPU chip costs 20 times as much as a CPU and so looks "more expensive". Judged by the data center's total cost of ownership (TCO), however, the GPU data center is the one that "saves money".
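The comparison above can be reproduced with a few lines of arithmetic. The sketch below is only an illustration: it uses the figures quoted in the speech, assumes the US$10 million is spent entirely on servers, and does not put a price on electricity. The names `per_model`, `cpu`, and `gpu` are made up for this example.

```python
# Figures quoted in the speech; both data centers cost the same budget.
BUDGET_USD = 10_000_000

cpu = {"servers": 960, "llms_trained": 1, "energy_gwh": 11.0}
gpu = {"servers": 48, "llms_trained": 44, "energy_gwh": 3.2}


def per_model(dc):
    """Return per-server and per-trained-model figures for one data center."""
    return {
        # Assumption: the whole budget goes to servers.
        "cost_per_server_usd": BUDGET_USD / dc["servers"],
        "cost_per_llm_usd": BUDGET_USD / dc["llms_trained"],
        "energy_per_llm_gwh": dc["energy_gwh"] / dc["llms_trained"],
    }


cpu_stats = per_model(cpu)
gpu_stats = per_model(gpu)

# How much more one GPU server costs than one CPU server.
server_price_ratio = (
    gpu_stats["cost_per_server_usd"] / cpu_stats["cost_per_server_usd"]
)
# How much more energy the CPU data center needs per trained model.
energy_ratio = (
    cpu_stats["energy_per_llm_gwh"] / gpu_stats["energy_per_llm_gwh"]
)

print(f"GPU server costs {server_price_ratio:.0f}x a CPU server")
print(f"Cost per LLM: CPU ${cpu_stats['cost_per_llm_usd']:,.0f}, "
      f"GPU ${gpu_stats['cost_per_llm_usd']:,.0f}")
print(f"CPU data center uses {energy_ratio:.2f}x the energy per LLM")
```

With these inputs, one GPU server costs 20 times as much as one CPU server, yet the cost per trained model falls by a factor of 44 and the energy per trained model by a factor of roughly 151. That is the sense in which the pricier hardware ends up cheaper at the data center level.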

Huang Renxun even put a formula directly on a presentation slide: data center cost of ownership = f{hardware cost (chips, systems, hardware ecosystem), throughput (GPU, algorithm software, network, system software, software ecosystem), utilization (algorithm libraries, software ecosystem), procurement operations, life cycle optimization, computing power}, using it to explain the data center's TCO.

"Why is this important? Because in real life most companies are power-limited," Huang Renxun emphasized again. Taking this constraint into account, enterprises investing in AIGC must choose data center products that are more efficient and consume less power.

Why are GPUs so powerful in data centers? According to Huang Renxun, this is mainly due to three major functions: ray tracing (simulating the characteristics of light), artificial intelligence with tensor computing as the core, and new algorithms.

Since 2017, NVIDIA has implemented all three functions on the GPU at once, and its use of GPUs to generate images took the market by surprise. At that time, "making" a picture from nothing (a process known professionally as "rendering") took several hours on a CPU server, but only 15 seconds on NVIDIA's GPU.

However, the so-called "cheapness" of the new GPU computers is not aimed at the consumer market. For now, CPU-based PCs and notebooks in the personal computer market cannot be replaced, because they are more affordable.

In his speech, Huang Renxun showed a new GPU computer built from 8 H100 chips. "It is the most expensive computer in the world," Huang Renxun said.

This new computer weighs 65 pounds (approximately 29.5 kilograms) and needs a robot's help to be installed smoothly and precisely. "This computer sells for US$200,000," Huang Renxun said.

Kicking off change in the AIGC industry

Using hardware to end the "CPU era" is NVIDIA's first step toward capturing leadership in AIGC. NVIDIA has also carefully laid out its plans for the software ecosystem. In addition to promoting the CUDA computing model to 4 million software developers, it has launched an AI model foundry service for games and has gone deep into manufacturing, supporting virtual factories, robot simulation, and automated inspection.

"Why have people been unable to create a new computing method for so many years?" Huang Renxun asked when talking about the CPU era. He pointed out that this is because of a "chicken-and-egg" relationship between hardware and software, the consumer market, and developers and suppliers. These parties constrain one another, which has allowed the CPU-based computing method to persist for so long.

Therefore, to break the shackles of the "CPU era", Nvidia not only designs chip hardware vigorously but also pays great attention to building a software ecosystem. The CUDA computing model is a key piece NVIDIA has put in place for this long-term strategy.

"Currently, more than 3,000 applications and 4 million developers use the NVIDIA CUDA computing model. In the last year alone, CUDA has been downloaded 25 million times, and its total downloads have reached 40 million," Huang Renxun said. He pointed out that only on the basis of software at this scale can the GPU replace the CPU.

Summarizing Huang Renxun’s two-hour speech, we can see Nvidia’s exploration of the AIGC field, covering core super chips, interconnect technology, algorithm engine optimization, and supporting software upgrades.

In fact, the text, images, 2D graphics, and 3D graphics involved in AIGC are being produced through a variety of large models and applications, including NVIDIA's open source conversational AI framework NeMo, Meta's large model LLaMA, the application ChatGPT built on the GPT model, and the text-to-image application Stable Diffusion.

Currently, the world's most influential AIGC technology leaders make heavy use of the tools NVIDIA provides, which has also pushed NVIDIA's market value on the U.S. stock market toward the "US$1 trillion" peak. It will soon join Apple, Microsoft, Google, and Amazon in the "trillion-dollar club".

The number of tools Nvidia brought to the AIGC industry this time surprised the market. In addition to the above products, NVIDIA's involvement in large-scale game creation and digital factories is also very eye-catching.

In terms of game creation, Huang Renxun showed a game clip in which, in addition to the realistic visuals, the dialogue between the player and NPCs was generated entirely by AIGC. In other words, future games can offer every player a different experience, and players will no longer face NPCs that only give scripted responses. NVIDIA ACE for Games is an AI model foundry service from NVIDIA that helps game developers use this capability easily.

NVIDIA also said that some leading electronics manufacturers are already using its AIGC tools and the Omniverse platform to "digitize" their factories.

In manufacturing, there are approximately 10 million factories around the world, and they are key areas for industrial digitalization.

Huang Renxun said: "Industrial manufacturing is all physical objects. If products can be manufactured digitally first, billions of dollars can be saved."

Currently, in the industrial field, NVIDIA mainly uses Omniverse and generative AI to help manufacturers design virtual factories. It has also launched Isaac Sim for simulating and testing robots and Metropolis for automating optical inspection.

It is understood that electronic equipment manufacturers such as Foxconn, Pegatron, and Quanta are already using the above-mentioned tools of NVIDIA to speed up the production and assembly of laptops and smartphones.

21Tech

Nancai Group Special Column

Previous recommendations

From Industrial Internet to AIGC: How does intelligent manufacturing make the transition?

05-30

Glory CEO Zhao Ming: Develop chip strategy on demand and maintain R&D ratio at 10% in 2023

05-30

Mobile phone manufacturers compete for imaging and folding screens. Huawei explains the technical secrets in detail

05-29

NVIDIA calls for "running instead of walking" to develop AI, develop supercomputing, and eliminate the CPU era

05-29

The above is the detailed content of In the garden of generative AI, how NVIDIA works as a 'digger”. For more information, please follow other related articles on the PHP Chinese website!

OpenAI's o1-preview vs o1-mini: A Step Forward to AGIApr 12, 2025 am 10:04 AM

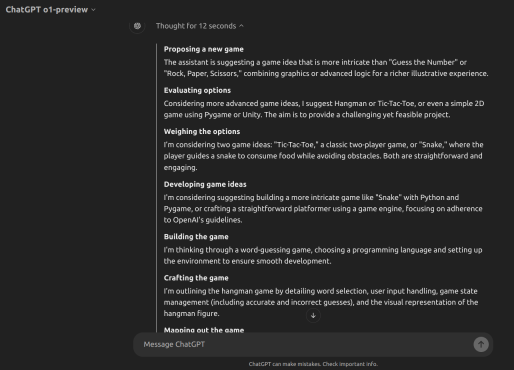

OpenAI's o1-preview vs o1-mini: A Step Forward to AGIApr 12, 2025 am 10:04 AMIntroduction On September 12th, OpenAI released an update titled “Learning to Reason with LLMs.” They introduced the o1 model, which is trained using reinforcement learning to tackle complex reasoning tasks. What sets this mod

How to Build Games with OpenAI o1? - Analytics VidhyaApr 12, 2025 am 10:03 AM

How to Build Games with OpenAI o1? - Analytics VidhyaApr 12, 2025 am 10:03 AMIntroduction The OpenAI o1 model family significantly advances reasoning power and economic performance, especially in science, coding, and problem-solving. OpenAI’s goal is to create ever-more-advanced AI, and o1 models

Popular LLM Agent Tools for Customer Query ManagementApr 12, 2025 am 10:01 AM

Popular LLM Agent Tools for Customer Query ManagementApr 12, 2025 am 10:01 AMIntroduction Today, the world of customer query management is moving at an unprecedented pace, with new tools making headlines every day. Large language model (LLM) agents are the latest innovation in this context, boosting cu

100 Day Generative AI Implementation Plan for EnterprisesApr 12, 2025 am 09:56 AM

100 Day Generative AI Implementation Plan for EnterprisesApr 12, 2025 am 09:56 AMIntroduction Adopting generative AI can be a transformative journey for any company. However, the process of GenAI implementation can often be cumbersome and confusing. Rajendra Singh Pawar, chairman and co-founder of NIIT Lim

Pixtral 12B vs Qwen2-VL-72BApr 12, 2025 am 09:52 AM

Pixtral 12B vs Qwen2-VL-72BApr 12, 2025 am 09:52 AMIntroduction The AI revolution has given rise to a new era of creativity, where text-to-image models are redefining the intersection of art, design, and technology. Pixtral 12B and Qwen2-VL-72B are two pioneering forces drivin

What is PaperQA and How Does it Assist in Scientific Research?Apr 12, 2025 am 09:51 AM

What is PaperQA and How Does it Assist in Scientific Research?Apr 12, 2025 am 09:51 AMIntroduction With the advancement of AI, scientific research has seen a massive transformation. Millions of papers are published annually on different technologies and sectors. But, navigating this ocean of information to retr

DataGemma: Grounding LLMs Against Hallucinations - Analytics VidhyaApr 12, 2025 am 09:46 AM

DataGemma: Grounding LLMs Against Hallucinations - Analytics VidhyaApr 12, 2025 am 09:46 AMIntroduction Large Language Models are rapidly transforming industries—today, they power everything from personalized customer service in banking to real-time language translation in global communication. They can answer quest

How to Build Multi-Agent System with CrewAI and Ollama?Apr 12, 2025 am 09:44 AM

How to Build Multi-Agent System with CrewAI and Ollama?Apr 12, 2025 am 09:44 AMIntroduction Don’t want to spend money on APIs, or are you concerned about privacy? Or do you just want to run LLMs locally? Don’t worry; this guide will help you build agents and multi-agent frameworks with local LLMs t

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Atom editor mac version download

The most popular open source editor

SecLists

SecLists is the ultimate security tester's companion. It is a collection of various types of lists that are frequently used during security assessments, all in one place. SecLists helps make security testing more efficient and productive by conveniently providing all the lists a security tester might need. List types include usernames, passwords, URLs, fuzzing payloads, sensitive data patterns, web shells, and more. The tester can simply pull this repository onto a new test machine and he will have access to every type of list he needs.

DVWA

Damn Vulnerable Web App (DVWA) is a PHP/MySQL web application that is very vulnerable. Its main goals are to be an aid for security professionals to test their skills and tools in a legal environment, to help web developers better understand the process of securing web applications, and to help teachers/students teach/learn in a classroom environment Web application security. The goal of DVWA is to practice some of the most common web vulnerabilities through a simple and straightforward interface, with varying degrees of difficulty. Please note that this software

SublimeText3 Linux new version

SublimeText3 Linux latest version

EditPlus Chinese cracked version

Small size, syntax highlighting, does not support code prompt function