IBM put AI and hybrid cloud strategies at the center of its annual IBM Think conference. While other vendors have been focusing on the consumer side of new AI applications over the past few years, IBM has been developing a new generation of models to better serve enterprise customers.

IBM recently announced watsonx, an AI development platform for hybrid cloud applications. The watsonx AI development services are currently in technology preview and will be generally available in the third quarter of 2023.

AI will become a key business tool, ushering in a new era of productivity, creativity and value creation. For enterprises, though, adopting AI involves more than new applications that access large language models (LLMs) through the cloud. Large language models form the basis of generative AI products like ChatGPT, but enterprises have many issues to consider: data sovereignty, privacy, security, reliability (no model drift), correctness, bias and more.

An IBM survey found that 30%-40% of enterprises have discovered the business value of AI, a share that has doubled since 2017. One forecast cited by IBM states that AI will contribute $16 trillion to the global economy by 2030. The survey highlights the use of AI to improve productivity as well as to create new kinds of value, much as no one in the early days of the Internet could predict the value it would eventually create. By increasing productivity, AI can also help close the gap between the skills businesses need and the talent available.

Today, AI is making software development faster and less error-prone. At Red Hat, IBM's Watson Code Assistant uses watsonx to make writing code easier by predicting and suggesting the next piece of code to enter. This application of AI is very efficient because it targets a specific programming model within the Red Hat Ansible automation platform. The Ansible code assistant is 35 times smaller than other, more general code assistants because it is optimized for this one task.

Another example is SAP, which will integrate IBM Watson technology to support the digital assistant in SAP Start. The new AI capabilities in SAP Start will help improve user productivity through natural language capabilities and predictive insights built on IBM Watson AI solutions. SAP found that AI can answer up to 94% of query requests.

Bringing watsonx to life

The IBM AI development stack is divided into three parts: watsonx.ai, watsonx.data and watsonx.governance. These watsonx components are designed to work together and can also be used with third-party integrations, such as open source AI models from Hugging Face. In addition, watsonx can run on multiple cloud services (including IBM Cloud, AWS and Azure) and on on-premises servers.

IBM watsonx platform with watsonx.ai, watsonx.data and watsonx.governance

The watsonx platform is delivered as a service and supports hybrid cloud deployment. Data scientists can use these tools to quickly build and tune custom AI models, which then become key engines for enterprise business processes.

The watsonx.data service uses open table formats so that data from multiple sources can be connected to the rest of watsonx, and it manages the lifecycle of the data used to train watsonx models.

The watsonx.governance service manages the model lifecycle and proactively governs how models are applied as new data is used to train and improve them.

The core of the product is watsonx.ai, where development work takes place. To date, IBM itself has developed 20 foundation models (FMs) with different architectures, modalities and sizes. In addition, open source models from Hugging Face are available on the watsonx platform. IBM expects some customers will develop their own applications, with IBM providing consulting services to help select the right model, retrain it on customer data and accelerate development where needed.

IBM watsonx.ai software stack running on Red Hat OpenShift

IBM spent more than three years researching and developing the watsonx platform. IBM even built an AI supercomputer code-named "Vela" to study effective system architectures for building foundation models, and built its own model library before releasing watsonx. In effect, IBM acts as the AI platform's own "customer 0".

Vela uses standard Ethernet network switches rather than the more expensive Nvidia/Mellanox switches found in traditional AI supercomputers, which makes the architecture easier and cheaper to build. It may also be easier to reproduce if customers want to run watsonx in their own environment. In addition, PyTorch has been optimized for the IBM Vela AI supercomputer architecture. IBM found that running virtualization on Vela added only a 5% performance overhead.

IBM watsonx supports IBM's strategic commitment to hybrid cloud based on Red Hat OpenShift. The watsonx AI development platform runs on IBM Cloud, on other public clouds (such as AWS) or on customer premises. Even enterprises whose business restrictions do not allow the use of public AI tools can take advantage of this latest AI technology. With watsonx, IBM truly brings leading AI and hybrid cloud together.

watsonx is IBM's AI development and data platform for delivering AI at scale. Products under the Watson brand, by contrast, are digital workforce products with AI expertise. They include Watson Assistant, Watson Orchestrate, Watson Discovery and Watson Code Assistant (formerly Project Wisdom). IBM will pay more attention to the Watson brand and has integrated the previous Watson Studio products into watsonx.ai, which supports new foundation model development as well as access to traditional machine learning functions.

Foundation Models and Large Language Models

Over the past 10 years, deep learning models have been trained on large amounts of labeled data for each individual application. This approach does not scale. Foundation models and large language models are instead trained on large amounts of unlabeled data, which is easier to collect, and the resulting models can then be used to perform multiple tasks.

For this new type of AI, which uses pre-trained models to perform multiple tasks, the term "large language model" is actually somewhat inappropriate. "Language" implies that the technology is only suitable for text, but models can also be built from code, graphics, chemical reactions and other kinds of data. IBM uses a more descriptive term for these large pre-trained models: "foundation models." A foundation model is trained on a large amount of data and can then be used as is or tuned for a specific purpose. By adapting the foundation model to your application, you can also set appropriate limits and make the model more directly useful. In addition, foundation models can be used to accelerate iteration on non-generative AI applications such as data classification and filtering.
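The pattern described above (learn from plentiful unlabeled data first, then adapt with a small amount of labeled data) can be sketched with a deliberately tiny toy. This is only an illustration of the workflow, not IBM's method or the watsonx API: simple IDF word statistics stand in for a real pre-trained model, a per-label centroid stands in for tuning, and every function name and sample sentence here is hypothetical.

```python
from collections import Counter
import math

def pretrain(unlabeled_docs):
    """'Pre-training' stand-in: learn IDF token weights from unlabeled text only."""
    n = len(unlabeled_docs)
    doc_freq = Counter()
    for doc in unlabeled_docs:
        doc_freq.update(set(doc.lower().split()))
    return {tok: math.log((1 + n) / (1 + df)) + 1.0 for tok, df in doc_freq.items()}

def embed(base, text):
    """Represent text as a sparse vector weighted by the pre-trained values."""
    counts = Counter(text.lower().split())
    return {tok: c * base[tok] for tok, c in counts.items() if tok in base}

def cosine(a, b):
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(v * b.get(k, 0.0) for k, v in a.items())
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0

def adapt(base, labeled_examples):
    """'Tuning' stand-in: build one centroid per label from a few labeled examples."""
    centroids = {}
    for text, label in labeled_examples:
        centroid = centroids.setdefault(label, Counter())
        for tok, weight in embed(base, text).items():
            centroid[tok] += weight
    return centroids

def classify(base, centroids, text):
    """Pick the label whose centroid is most similar to the input text."""
    vec = embed(base, text)
    return max(centroids, key=lambda label: cosine(vec, centroids[label]))

# Hypothetical data: many unlabeled sentences, only two labeled ones.
unlabeled = [
    "the invoice was paid late",
    "server restarted after the patch",
    "payment for the invoice is overdue",
    "the server patch fixed the outage",
    "please send the invoice again",
]
base = pretrain(unlabeled)
centroids = adapt(base, [
    ("invoice payment overdue", "billing"),
    ("server outage patch", "ops"),
])
print(classify(base, centroids, "the invoice is overdue"))  # prints "billing"
```

The same `base` could be adapted to a different task by calling `adapt` again with other labels, which is the reuse the article attributes to pre-trained models; a real system would replace the IDF table with an actual pre-trained network.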

Many large language models are large and getting larger because they are trained on every kind of data so that they can be used in any potential open domain. In an enterprise environment, this approach is often overkill and can suffer from scaling issues. By choosing the right dataset and applying it to the right type of model, the final model can be more efficient. Such a model can also be cleared of bias, copyrighted material and similar problems through IBM watsonx.governance.

Summary

During the IBM Think conference, AI was said to be in a "Netscape moment". The metaphor refers to the watershed moment when the Internet reached a much wider audience. ChatGPT has exposed generative AI to a wider audience in the same way, but there is still a need for responsible AI that enterprises can rely on and control.

As Dario Gil said in his closing keynote: “Don’t outsource your AI strategy to API calls.” The CEO of Hugging Face echoed the same sentiment: have your own models, don’t rent someone else’s. IBM is giving companies the tools to build responsible, efficient AI and letting them own their own models.

The above is the detailed content of Build better AI for enterprise and hybrid cloud with IBM WatsonX. For more information, please follow other related articles on the PHP Chinese website!

Meta's New AI Assistant: Productivity Booster Or Time Sink?May 01, 2025 am 11:18 AM

Meta's New AI Assistant: Productivity Booster Or Time Sink?May 01, 2025 am 11:18 AMMeta has joined hands with partners such as Nvidia, IBM and Dell to expand the enterprise-level deployment integration of Llama Stack. In terms of security, Meta has launched new tools such as Llama Guard 4, LlamaFirewall and CyberSecEval 4, and launched the Llama Defenders program to enhance AI security. In addition, Meta has distributed $1.5 million in Llama Impact Grants to 10 global institutions, including startups working to improve public services, health care and education. The new Meta AI application powered by Llama 4, conceived as Meta AI

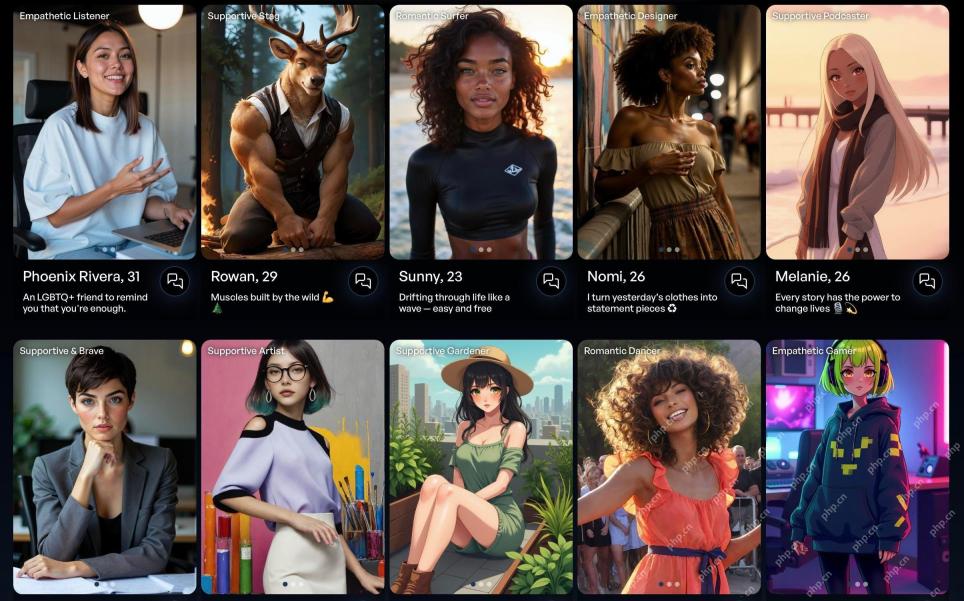

80% Of Gen Zers Would Marry An AI: StudyMay 01, 2025 am 11:17 AM

80% Of Gen Zers Would Marry An AI: StudyMay 01, 2025 am 11:17 AMJoi AI, a company pioneering human-AI interaction, has introduced the term "AI-lationships" to describe these evolving relationships. Jaime Bronstein, a relationship therapist at Joi AI, clarifies that these aren't meant to replace human c

AI Is Making The Internet's Bot Problem Worse. This $2 Billion Startup Is On The Front LinesMay 01, 2025 am 11:16 AM

AI Is Making The Internet's Bot Problem Worse. This $2 Billion Startup Is On The Front LinesMay 01, 2025 am 11:16 AMOnline fraud and bot attacks pose a significant challenge for businesses. Retailers fight bots hoarding products, banks battle account takeovers, and social media platforms struggle with impersonators. The rise of AI exacerbates this problem, rende

Selling To Robots: The Marketing Revolution That Will Make Or Break Your BusinessMay 01, 2025 am 11:15 AM

Selling To Robots: The Marketing Revolution That Will Make Or Break Your BusinessMay 01, 2025 am 11:15 AMAI agents are poised to revolutionize marketing, potentially surpassing the impact of previous technological shifts. These agents, representing a significant advancement in generative AI, not only process information like ChatGPT but also take actio

How Computer Vision Technology Is Transforming NBA Playoff OfficiatingMay 01, 2025 am 11:14 AM

How Computer Vision Technology Is Transforming NBA Playoff OfficiatingMay 01, 2025 am 11:14 AMAI's Impact on Crucial NBA Game 4 Decisions Two pivotal Game 4 NBA matchups showcased the game-changing role of AI in officiating. In the first, Denver's Nikola Jokic's missed three-pointer led to a last-second alley-oop by Aaron Gordon. Sony's Haw

How AI Is Accelerating The Future Of Regenerative MedicineMay 01, 2025 am 11:13 AM

How AI Is Accelerating The Future Of Regenerative MedicineMay 01, 2025 am 11:13 AMTraditionally, expanding regenerative medicine expertise globally demanded extensive travel, hands-on training, and years of mentorship. Now, AI is transforming this landscape, overcoming geographical limitations and accelerating progress through en

Key Takeaways From Intel Foundry Direct Connect 2025May 01, 2025 am 11:12 AM

Key Takeaways From Intel Foundry Direct Connect 2025May 01, 2025 am 11:12 AMIntel is working to return its manufacturing process to the leading position, while trying to attract fab semiconductor customers to make chips at its fabs. To this end, Intel must build more trust in the industry, not only to prove the competitiveness of its processes, but also to demonstrate that partners can manufacture chips in a familiar and mature workflow, consistent and highly reliable manner. Everything I hear today makes me believe Intel is moving towards this goal. The keynote speech of the new CEO Tan Libo kicked off the day. Tan Libai is straightforward and concise. He outlines several challenges in Intel’s foundry services and the measures companies have taken to address these challenges and plan a successful route for Intel’s foundry services in the future. Tan Libai talked about the process of Intel's OEM service being implemented to make customers more

AI Gone Wrong? Now There's Insurance For ThatMay 01, 2025 am 11:11 AM

AI Gone Wrong? Now There's Insurance For ThatMay 01, 2025 am 11:11 AMAddressing the growing concerns surrounding AI risks, Chaucer Group, a global specialty reinsurance firm, and Armilla AI have joined forces to introduce a novel third-party liability (TPL) insurance product. This policy safeguards businesses against

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

DVWA

Damn Vulnerable Web App (DVWA) is a PHP/MySQL web application that is very vulnerable. Its main goals are to be an aid for security professionals to test their skills and tools in a legal environment, to help web developers better understand the process of securing web applications, and to help teachers/students teach/learn in a classroom environment Web application security. The goal of DVWA is to practice some of the most common web vulnerabilities through a simple and straightforward interface, with varying degrees of difficulty. Please note that this software

Safe Exam Browser

Safe Exam Browser is a secure browser environment for taking online exams securely. This software turns any computer into a secure workstation. It controls access to any utility and prevents students from using unauthorized resources.

SublimeText3 Linux new version

SublimeText3 Linux latest version

Dreamweaver CS6

Visual web development tools

PhpStorm Mac version

The latest (2018.2.1) professional PHP integrated development tool