Java

Java javaTutorial

javaTutorial How Springboot microservice project integrates Kafka to implement article uploading and delisting functions

How Springboot microservice project integrates Kafka to implement article uploading and delisting functions1: Quick Start for Kafka Message Sending

1. Pass String Message

(1) Send Message

Create a Controller package and write a test class for Send message

package com.my.kafka.controller;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.kafka.core.KafkaTemplate;

import org.springframework.web.bind.annotation.GetMapping;

import org.springframework.web.bind.annotation.RestController;

@RestController

public class HelloController {

@Autowired

private KafkaTemplate<String,String> kafkaTemplate;

@GetMapping("hello")

public String helloProducer(){

kafkaTemplate.send("my-topic","Hello~");

return "ok";

}

}(2) Listen for messages

Write a test class to receive messages:

package com.my.kafka.listener;

import org.junit.platform.commons.util.StringUtils;

import org.springframework.kafka.annotation.KafkaListener;

import org.springframework.stereotype.Component;

@Component

public class HelloListener {

@KafkaListener(topics = "my-topic")

public void helloListener(String message) {

if(StringUtils.isNotBlank(message)) {

System.out.println(message);

}

}

}(3) Test results

Open browser input localhost:9991/hello, and then go to the console to view the message. You can see that the successful message has been monitored and consumed.

2. Passing object messages

Currently springboot integrated kafka, because the serializer is StringSerializer, if you need to pass objects at this time, there are two ways Method:

Method 1: You can customize the serializer with many object types. This method is not very versatile and will not be introduced here.

Method 2: You can convert the object to be transferred into a json string, and then convert it into an object after receiving the message. This method is used in this project.

(1) Modify the producer code

@GetMapping("hello")

public String helloProducer(){

User user = new User();

user.setName("赵四");

user.setAge(20);

kafkaTemplate.send("my-topic", JSON.toJSONString(user));

return "ok";

}(2) Result test

You can see that all object parameters are successfully received, later To use this object, you only need to convert it into a User object.

2: Function introduction

1. Requirements analysis

After publishing an article, there may be some errors or other reasons in the article. We will implement the article on the article management side. The upload and delist function (see the picture below), that is, when the management terminal removes an article from the shelves, the mobile terminal will no longer display the article. Only after the article is re-listed can the article information be seen on the mobile terminal.

2. Logical analysis

#After the back-end receives the parameters passed by the front-end, it must first do a verification , the parameter is not empty before the execution can continue. First, the article information of the self-media database should be queried based on the article id passed from the front end (self-media end article id) and judge whether the article has been published, because only if the review is successful and successful Only published articles can be uploaded or removed. After the self-media side microservice modifies the article uploading and delisting status, it can send a message to Kafka. The message is a Map object. The data stored in it is the article id of the mobile terminal and the uploading and delisting parameter enable passed from the front end. Of course, this message must be The Map object can be sent after it is converted into a JSON string.

The article microservice listens to the message sent by Kafka, converts the JSON string into a Map object, and then obtains the relevant parameters to modify the up and down status of the mobile article.

3: Early preparation

1.Introduce dependencies

<!-- kafkfa -->

<dependency>

<groupId>org.springframework.kafka</groupId>

<artifactId>spring-kafka</artifactId>

</dependency>

<dependency>

<groupId>org.apache.kafka</groupId>

<artifactId>kafka-clients</artifactId>

</dependency>2.Define constants

package com.my.common.constans;

public class WmNewsMessageConstants {

public static final String WM_NEWS_UP_OR_DOWN_TOPIC="wm.news.up.or.down.topic";

}3.Kafka configuration information

Because I use Nacos as the registration center, so the configuration information can be placed on Nacos.

(1) Self-media terminal configuration

spring:

kafka:

bootstrap-servers: 4.234.52.122:9092

producer:

retries: 10

key-serializer: org.apache.kafka.common.serialization.StringSerializer

value-serializer: org.apache.kafka.common.serialization.StringSerializer(2) Mobile terminal configuration

spring:

kafka:

bootstrap-servers: 4.234.52.122:9092

consumer:

group-id: ${spring.application.name}-test

key-deserializer: org.apache.kafka.common.serialization.StringDeserializer

value-deserializer: org.apache.kafka.common.serialization.StringDeserializerFour: Code implementation

1. Self-media terminal

@Autowired

private KafkaTemplate<String,String> kafkaTemplate;

/**

* 文章下架或上架

* @param id

* @param enable

* @return

*/

@Override

public ResponseResult downOrUp(Integer id,Integer enable) {

log.info("执行文章上下架操作...");

if(id == null || enable == null) {

return ResponseResult.errorResult(AppHttpCodeEnum.PARAM_INVALID);

}

//根据id获取文章

WmNews news = getById(id);

if(news == null) {

return ResponseResult.errorResult(AppHttpCodeEnum.DATA_NOT_EXIST,"文章信息不存在");

}

//获取当前文章状态

Short status = news.getStatus();

if(!status.equals(WmNews.Status.PUBLISHED.getCode())) {

return ResponseResult.errorResult(AppHttpCodeEnum.PARAM_INVALID,"文章非发布状态,不能上下架");

}

//更改文章状态

news.setEnable(enable.shortValue());

updateById(news);

log.info("更改文章上架状态{}-->{}",status,news.getEnable());

//发送消息到Kafka

Map<String, Object> map = new HashMap<>();

map.put("articleId",news.getArticleId());

map.put("enable",enable.shortValue());

kafkaTemplate.send(WmNewsMessageConstants.WM_NEWS_UP_OR_DOWN_TOPIC,JSON.toJSONString(map));

log.info("发送消息到Kafka...");

return ResponseResult.okResult(AppHttpCodeEnum.SUCCESS);

}2. Mobile terminal

(1) Set up the listener

package com.my.article.listener;

import com.baomidou.mybatisplus.core.toolkit.StringUtils;

import com.my.article.service.ApArticleService;

import com.my.common.constans.WmNewsMessageConstants;

import lombok.extern.slf4j.Slf4j;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.stereotype.Component;

import org.springframework.kafka.annotation.KafkaListener;

@Slf4j

@Component

public class EnableListener {

@Autowired

private ApArticleService apArticleService;

@KafkaListener(topics = WmNewsMessageConstants.WM_NEWS_UP_OR_DOWN_TOPIC)

public void downOrUp(String message) {

if(StringUtils.isNotBlank(message)) {

log.info("监听到消息{}",message);

apArticleService.downOrUp(message);

}

}

}(2) Get the message and modify the article status

/**

* 文章上下架

* @param message

* @return

*/

@Override

public ResponseResult downOrUp(String message) {

Map map = JSON.parseObject(message, Map.class);

//获取文章id

Long articleId = (Long) map.get("articleId");

//获取文章待修改状态

Integer enable = (Integer) map.get("enable");

//查询文章配置

ApArticleConfig apArticleConfig = apArticleConfigMapper.selectOne

(Wrappers.<ApArticleConfig>lambdaQuery().eq(ApArticleConfig::getArticleId, articleId));

if(apArticleConfig != null) {

//上架

if(enable == 1) {

log.info("文章重新上架");

apArticleConfig.setIsDown(false);

apArticleConfigMapper.updateById(apArticleConfig);

}

//下架

if(enable == 0) {

log.info("文章下架");

apArticleConfig.setIsDown(true);

apArticleConfigMapper.updateById(apArticleConfig);

}

}

else {

throw new RuntimeException("文章信息不存在");

}

return ResponseResult.okResult(AppHttpCodeEnum.SUCCESS);

}The above is the detailed content of How Springboot microservice project integrates Kafka to implement article uploading and delisting functions. For more information, please follow other related articles on the PHP Chinese website!

怎么使用SpringBoot+Canal实现数据库实时监控May 10, 2023 pm 06:25 PM

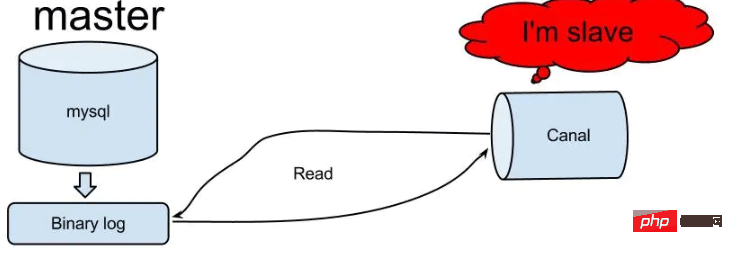

怎么使用SpringBoot+Canal实现数据库实时监控May 10, 2023 pm 06:25 PMCanal工作原理Canal模拟MySQLslave的交互协议,伪装自己为MySQLslave,向MySQLmaster发送dump协议MySQLmaster收到dump请求,开始推送binarylog给slave(也就是Canal)Canal解析binarylog对象(原始为byte流)MySQL打开binlog模式在MySQL配置文件my.cnf设置如下信息:[mysqld]#打开binloglog-bin=mysql-bin#选择ROW(行)模式binlog-format=ROW#配置My

Spring Boot怎么使用SSE方式向前端推送数据May 10, 2023 pm 05:31 PM

Spring Boot怎么使用SSE方式向前端推送数据May 10, 2023 pm 05:31 PM前言SSE简单的来说就是服务器主动向前端推送数据的一种技术,它是单向的,也就是说前端是不能向服务器发送数据的。SSE适用于消息推送,监控等只需要服务器推送数据的场景中,下面是使用SpringBoot来实现一个简单的模拟向前端推动进度数据,前端页面接受后展示进度条。服务端在SpringBoot中使用时需要注意,最好使用SpringWeb提供的SseEmitter这个类来进行操作,我在刚开始时使用网上说的将Content-Type设置为text-stream这种方式发现每次前端每次都会重新创建接。最

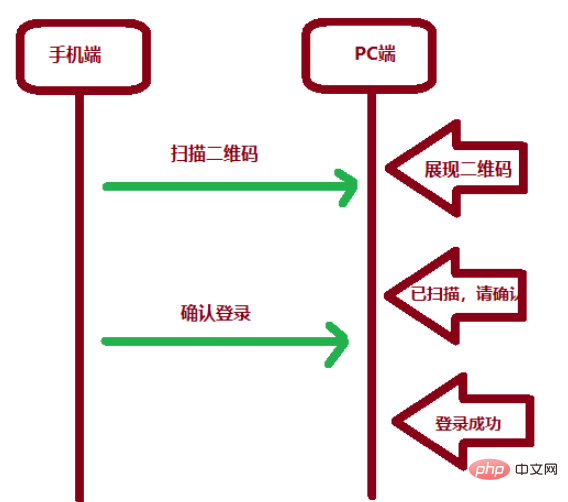

SpringBoot怎么实现二维码扫码登录May 10, 2023 pm 08:25 PM

SpringBoot怎么实现二维码扫码登录May 10, 2023 pm 08:25 PM一、手机扫二维码登录的原理二维码扫码登录是一种基于OAuth3.0协议的授权登录方式。在这种方式下,应用程序不需要获取用户的用户名和密码,只需要获取用户的授权即可。二维码扫码登录主要有以下几个步骤:应用程序生成一个二维码,并将该二维码展示给用户。用户使用扫码工具扫描该二维码,并在授权页面中授权。用户授权后,应用程序会获取一个授权码。应用程序使用该授权码向授权服务器请求访问令牌。授权服务器返回一个访问令牌给应用程序。应用程序使用该访问令牌访问资源服务器。通过以上步骤,二维码扫码登录可以实现用户的快

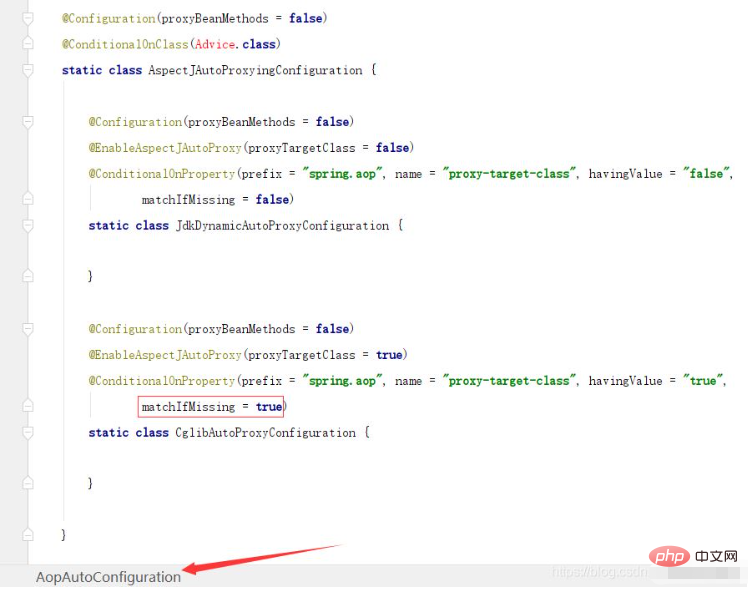

SpringBoot/Spring AOP默认动态代理方式是什么May 10, 2023 pm 03:52 PM

SpringBoot/Spring AOP默认动态代理方式是什么May 10, 2023 pm 03:52 PM1.springboot2.x及以上版本在SpringBoot2.xAOP中会默认使用Cglib来实现,但是Spring5中默认还是使用jdk动态代理。SpringAOP默认使用JDK动态代理,如果对象没有实现接口,则使用CGLIB代理。当然,也可以强制使用CGLIB代理。在SpringBoot中,通过AopAutoConfiguration来自动装配AOP.2.Springboot1.xSpringboot1.xAOP默认还是使用JDK动态代理的3.SpringBoot2.x为何默认使用Cgl

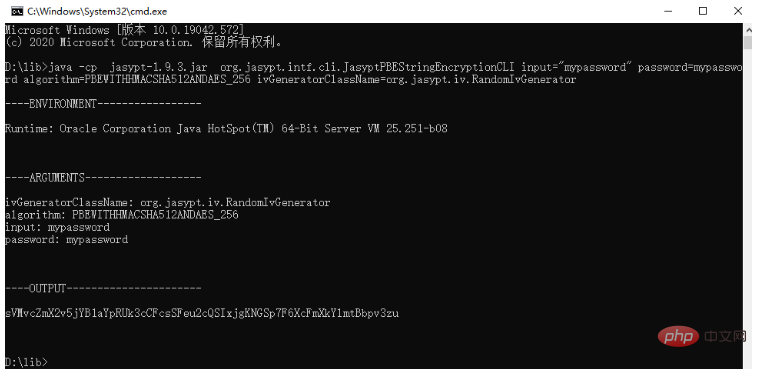

spring boot怎么对敏感信息进行加解密May 10, 2023 pm 02:46 PM

spring boot怎么对敏感信息进行加解密May 10, 2023 pm 02:46 PM我们使用jasypt最新版本对敏感信息进行加解密。1.在项目pom文件中加入如下依赖:com.github.ulisesbocchiojasypt-spring-boot-starter3.0.32.创建加解密公用类:packagecom.myproject.common.utils;importorg.jasypt.encryption.pbe.PooledPBEStringEncryptor;importorg.jasypt.encryption.pbe.config.SimpleStrin

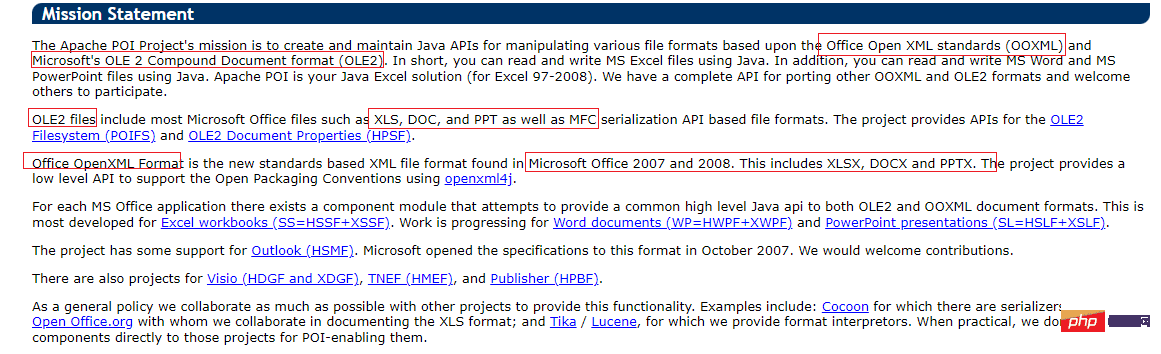

使用Java SpringBoot集成POI实现Word文档导出Apr 21, 2023 pm 12:19 PM

使用Java SpringBoot集成POI实现Word文档导出Apr 21, 2023 pm 12:19 PM知识准备需要理解ApachePOI遵循的标准(OfficeOpenXML(OOXML)标准和微软的OLE2复合文档格式(OLE2)),这将对应着API的依赖包。什么是POIApachePOI是用Java编写的免费开源的跨平台的JavaAPI,ApachePOI提供API给Java程序对MicrosoftOffice格式档案读和写的功能。POI为“PoorObfuscationImplementation”的首字母缩写,意为“简洁版的模糊实现”。ApachePOI是创建和维护操作各种符合Offic

springboot怎么整合shiro实现多验证登录功能May 10, 2023 pm 04:19 PM

springboot怎么整合shiro实现多验证登录功能May 10, 2023 pm 04:19 PM1.首先新建一个shiroConfigshiro的配置类,代码如下:@ConfigurationpublicclassSpringShiroConfig{/***@paramrealms这儿使用接口集合是为了实现多验证登录时使用的*@return*/@BeanpublicSecurityManagersecurityManager(Collectionrealms){DefaultWebSecurityManagersManager=newDefaultWebSecurityManager();

Springboot如何实现视频上传及压缩功能May 10, 2023 pm 05:16 PM

Springboot如何实现视频上传及压缩功能May 10, 2023 pm 05:16 PM一、定义视频上传请求接口publicAjaxResultvideoUploadFile(MultipartFilefile){try{if(null==file||file.isEmpty()){returnAjaxResult.error("文件为空");}StringossFilePrefix=StringUtils.genUUID();StringfileName=ossFilePrefix+"-"+file.getOriginalFilename(

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.

EditPlus Chinese cracked version

Small size, syntax highlighting, does not support code prompt function

Dreamweaver Mac version

Visual web development tools

Notepad++7.3.1

Easy-to-use and free code editor

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft