
A pig-butchering scam was tricked by a large AI model and lost 520 yuan, and the scammer lost his composure

Mention the "pig-butchering scam" (杀猪盘) and many people grit their teeth. It is a common form of online telecom fraud. Scammers prepare "pig feed" such as fake personas and dating scripts, treat social platforms as "pig pens" in which they look for targets they call "pigs", and build romantic relationships with them, which is "raising the pig". Finally they defraud the target of money, which is "killing the pig".

Because the fraudsters operate overseas, these cases are usually hard to crack. Victims lose hundreds of thousands or even millions of yuan and suffer serious psychological harm as well.

Bilibili uploader @Turing's Cat said that several of his friends had recently run into pig-butchering scams. So he decided to "fight magic with magic": let an AI trained on Bilibili comments chat with the scammers and see whether it could outwit them. In the end, not only did he lose no money, the AI also collected a 520-yuan red envelope from a scammer.

Video address: https://www.bilibili.com/video/BV1qD4y1h7io/

To get scammers to take the bait, the author registered accounts on several social platforms and, based on the victim profiles published by the Ministry of Public Security and the Anti-Fraud Center, gave the accounts the persona of a single, wealthy, well-educated, well-behaved young woman. This persona brought in a lot of private messages.

To identify scammers accurately and avoid dragging in ordinary users, only accounts that met a set of screening conditions were passed on to chat with the AI.

In the end, five suspects stood out. The author shows the conversation between the AI and one of them.

The first day's conversation was mainly about sounding out each other's background, with the suspect and the AI each showing off their wealth. The AI dropped memes frequently, including "Beijing Master Jixiang" and "Master, what do you do for a living?" Finally, the suspect played hard to get and ended the conversation on the pretext of "hosting a company meeting."

The next day, the suspect switched to getting closer, using clues found in the account's Moments (circle of friends) to find common topics. The topics ranged from raising cats to "The Great Gatsby" to philosophy.

The AI could keep up with most of these topics, but it occasionally slipped, for example by mechanically reciting encyclopedia-style information when talking about The Great Gatsby. The suspect, however, did not seem to dwell on these slips.

On the fourth day, the suspect steered the conversation toward love in an attempt to establish a relationship. This is where the highlight came: because the suspect's words triggered the keyword "just because", the AI fired off a well-known meme: "Little Heizi, are you showing your chicken feet?"

Even so, the suspect still suspected nothing...

After three weeks of chatting like this, the suspect made his big move: sending a red envelope. The AI happily accepted it and said a few polite words.

But when the suspect played the victim to get some sympathy from the AI, the AI's reply was somewhat nonsensical.

Even so, the suspect did not become suspicious and kept building up his persona, while the AI kept replying with memes.

Finally, the suspect pretended to be in trouble in an attempt to trick the AI into investing money.

While the AI insisted it had no money, the suspect tried every trick he could.

After realizing the scam had failed, the suspect also tried to get the AI to delete the chat history, which would make the case harder for the police to handle.

In the end, the suspect lost his composure and blocked the AI's messages, and the conversation ended.

Of course, this is not the first time magic has been fought with magic. Earlier, Xiao Ai (小爱) on Xiaomi phones trended for answering scam calls. MIUI has a built-in AI call function. Once anti-harassment is turned on in the call settings, if an incoming call is from a number recorded as harassing in the Yellow Pages, Xiao Ai automatically answers on the owner's behalf, and sometimes keeps the scammer talking for several minutes.

Using AI to answer harassing calls seemed to be the ultimate solution to this kind of problem, but things develop in an upward spiral. Later the harassing callers started using AI too, and it became AI against AI. Then large models arrived, and the exchanges between the two sides became even more entertaining. The AI that has been making headlines this time is also backed by a large model.

How does the scammer-fighting AI work?

This AI is based on Source 1.0 (源1.0, also known as Yuan 1.0), a massive Chinese language model launched by the Inspur Artificial Intelligence Research Institute. It has 245.7 billion parameters and was trained on what was described as the world's largest Chinese dataset, 5.02 TB. Source 1.0 can handle many tasks, including dialogue, story continuation, and news generation. Most importantly, it is open source.

Address: https://air.inspur.com/

With the model chosen, Turing's Cat faced a new problem. WeChat chats are multi-turn conversations, but the AI often cannot remember what it or the other party said in the previous message. When earlier and later replies contradict each other, the other party can easily start to doubt that they are talking to a real person.

To solve this, Turing's Cat said he borrowed the idea behind LSTM in sequence models and added a memory mechanism to the system, giving the AI simple long- and short-term conversational memory: it can refer to what was discussed yesterday and to what was said in the previous round.
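The article does not show how this memory is implemented. As a rough illustration only, the sketch below keeps a bounded buffer of recent turns (short-term) and one note per past day (long-term). It is a plain buffer, not an LSTM, and the class name `ChatMemory` and all of its methods are hypothetical, not taken from the author's code.

```python
from collections import deque


class ChatMemory:
    """Hypothetical two-tier chat memory: recent turns plus notes on past days."""

    def __init__(self, short_term_size=6):
        # Short-term memory: only the most recent turns are kept.
        self.short_term = deque(maxlen=short_term_size)
        # Long-term memory: one condensed note per finished day.
        self.long_term = []

    def add_turn(self, speaker, text):
        self.short_term.append(f"{speaker}: {text}")

    def end_day(self, day_label):
        """Fold the current short-term turns into a long-term note and reset."""
        if self.short_term:
            self.long_term.append(f"[{day_label}] " + " | ".join(self.short_term))
            self.short_term.clear()

    def context(self):
        """Build the text that would be prepended to the model's prompt."""
        parts = []
        if self.long_term:
            parts.append("Earlier conversations:\n" + "\n".join(self.long_term))
        if self.short_term:
            parts.append("Recent turns:\n" + "\n".join(self.short_term))
        return "\n\n".join(parts)
```

Calling `context()` before each reply gives the model both yesterday's topics and the last few messages, which is the behavior the paragraph above describes. A real system would likely summarize each day rather than concatenate raw turns.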

In addition, to make the AI sound more like a real person, Turing's Cat added prior rules based on prompt examples, guiding the AI to communicate in a targeted way, especially in the given task setting of a fraud scenario.

The example corpus is mainly drawn from popular comments on Bilibili and Tieba. This lets the AI pick up netizens' witty lines and memes, so that the dialogue is less stiff.

Finally, Turing's Cat used the open-source Wechaty framework to build a backend in the cloud, with local Python scripts calling the puppet service so that the AI connects to WeChat seamlessly. Once the pre-registered WeChat account is logged in, the AI can reply in any private or group chat.
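One common way to apply prompt examples like these is few-shot prompting: a persona description and a handful of example exchanges are placed ahead of the live conversation, and the model continues the text. The sketch below assumes that approach. The function name `build_prompt`, the "Them:/Me:" layout, and the sample texts are illustrative assumptions, not the author's actual prompt format.

```python
from __future__ import annotations


def build_prompt(persona: str,
                 examples: list[tuple[str, str]],
                 history: str,
                 incoming: str) -> str:
    """Assemble a few-shot prompt (hypothetical layout) for a text-completion model."""
    lines = [persona.strip(), ""]
    # Example exchanges, e.g. drawn from popular comments, set the tone.
    for their_line, reply in examples:
        lines.append(f"Them: {their_line}")
        lines.append(f"Me: {reply}")
        lines.append("")
    # Optional memory or context from earlier in the conversation.
    if history:
        lines.append(history.strip())
        lines.append("")
    # The live message; the model is expected to continue after "Me:".
    lines.append(f"Them: {incoming}")
    lines.append("Me:")
    return "\n".join(lines)


prompt = build_prompt(
    persona="You are chatting casually on WeChat and never send money.",
    examples=[("What do you do for work?", "Master, what do you do for a living?")],
    history="",
    incoming="I found a great investment opportunity for us.",
)
```

The resulting string ends with an open "Me:" line, so whatever the model generates next is used as the reply.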

To make the AI sufficiently human-like, Turing's Cat also put some effort into a number of tricks: having the AI post Moments that look real; setting an interval before replying to each message; simulating a real person's typing speed; replacing some text with chat emoji (sticker packs are not yet supported); and, when the other party sends many messages in one go, splicing the consecutive messages together, reading them as one, and then replying. After these optimizations, the AI took 520 yuan from the scammer.

Turing's Cat has since donated the money that the scammer transferred to the AI.

The code is open source: https://github.com/Turing-Project/AntiFraudChatBot
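Two of these tricks, splicing consecutive messages and pacing replies like a human typist, are easy to illustrate. The sketch below is an assumption about how they could be done, not the project's code. The function names, the 8-second gap threshold, and the typing speed of 3 characters per second are all made-up values for illustration.

```python
import random


def merge_burst(messages, max_gap_seconds=8.0):
    """Join messages sent in quick succession into single inputs.

    `messages` is a list of (timestamp_seconds, text) pairs sorted by time.
    """
    merged = []
    current = []
    last_ts = None
    for ts, text in messages:
        # A long pause closes the current burst and starts a new one.
        if last_ts is not None and ts - last_ts > max_gap_seconds:
            merged.append(" ".join(current))
            current = []
        current.append(text.strip())
        last_ts = ts
    if current:
        merged.append(" ".join(current))
    return merged


def reply_delay(reply, chars_per_second=3.0, think_range=(1.0, 4.0), rng=None):
    """Seconds to wait before sending: random 'thinking' time plus typing time."""
    rng = rng or random.Random()
    thinking = rng.uniform(*think_range)
    typing = len(reply) / chars_per_second
    return thinking + typing
```

In a bot loop, the delay would be passed to something like `time.sleep` (or `asyncio.sleep` in an async framework) before the reply is sent, so that longer replies take visibly longer to arrive.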

Netizen comments

Regarding this AI, netizens said: "I feel like I might not be able to react if I were on the other side."

Some netizens suggested that the AI should also learn from Xiaohongshu, Zhihu, and Weibo, because its Tieba flavor is too strong.

As for how the scammer was fooled, netizens who watched the chat said that an ordinary person would certainly have recognized this AI, but the scammer on the other side does not care that much as long as he meets his performance targets.

As Mr. Zhihui said: "Maybe it will be AI that will make liars unemployed in the future."

No matter how far AI develops, the same advice holds: scams come in many forms, so don't listen, don't believe, and don't transfer money!

The above is the detailed content of The pig-killing plate was tricked by a large AI model, and the price was 520 yuan. The scammer broke the defense. For more information, please follow other related articles on the PHP Chinese website!

AI For Runners And Athletes: We're Making Excellent ProgressApr 22, 2025 am 11:12 AM

AI For Runners And Athletes: We're Making Excellent ProgressApr 22, 2025 am 11:12 AMThere were some very insightful perspectives in this speech—background information about engineering that showed us why artificial intelligence is so good at supporting people’s physical exercise. I will outline a core idea from each contributor’s perspective to demonstrate three design aspects that are an important part of our exploration of the application of artificial intelligence in sports. Edge devices and raw personal data This idea about artificial intelligence actually contains two components—one related to where we place large language models and the other is related to the differences between our human language and the language that our vital signs “express” when measured in real time. Alexander Amini knows a lot about running and tennis, but he still

Jamie Engstrom On Technology, Talent And Transformation At CaterpillarApr 22, 2025 am 11:10 AM

Jamie Engstrom On Technology, Talent And Transformation At CaterpillarApr 22, 2025 am 11:10 AMCaterpillar's Chief Information Officer and Senior Vice President of IT, Jamie Engstrom, leads a global team of over 2,200 IT professionals across 28 countries. With 26 years at Caterpillar, including four and a half years in her current role, Engst

New Google Photos Update Makes Any Photo Pop With Ultra HDR QualityApr 22, 2025 am 11:09 AM

New Google Photos Update Makes Any Photo Pop With Ultra HDR QualityApr 22, 2025 am 11:09 AMGoogle Photos' New Ultra HDR Tool: A Quick Guide Enhance your photos with Google Photos' new Ultra HDR tool, transforming standard images into vibrant, high-dynamic-range masterpieces. Ideal for social media, this tool boosts the impact of any photo,

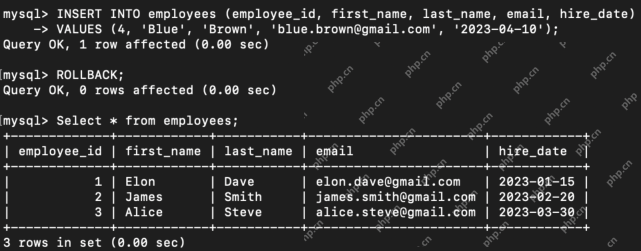

What are the TCL Commands in SQL? - Analytics VidhyaApr 22, 2025 am 11:07 AM

What are the TCL Commands in SQL? - Analytics VidhyaApr 22, 2025 am 11:07 AMIntroduction Transaction Control Language (TCL) commands are essential in SQL for managing changes made by Data Manipulation Language (DML) statements. These commands allow database administrators and users to control transaction processes, thereby

How to Make Custom ChatGPT? - Analytics VidhyaApr 22, 2025 am 11:06 AM

How to Make Custom ChatGPT? - Analytics VidhyaApr 22, 2025 am 11:06 AMHarness the power of ChatGPT to create personalized AI assistants! This tutorial shows you how to build your own custom GPTs in five simple steps, even without coding skills. Key Features of Custom GPTs: Create personalized AI models for specific t

Difference Between Method Overloading and OverridingApr 22, 2025 am 10:55 AM

Difference Between Method Overloading and OverridingApr 22, 2025 am 10:55 AMIntroduction Method overloading and overriding are core object-oriented programming (OOP) concepts crucial for writing flexible and efficient code, particularly in data-intensive fields like data science and AI. While similar in name, their mechanis

Difference Between SQL Commit and SQL RollbackApr 22, 2025 am 10:49 AM

Difference Between SQL Commit and SQL RollbackApr 22, 2025 am 10:49 AMIntroduction Efficient database management hinges on skillful transaction handling. Structured Query Language (SQL) provides powerful tools for this, offering commands to maintain data integrity and consistency. COMMIT and ROLLBACK are central to t

PySimpleGUI: Simplifying GUI Development in Python - Analytics VidhyaApr 22, 2025 am 10:46 AM

PySimpleGUI: Simplifying GUI Development in Python - Analytics VidhyaApr 22, 2025 am 10:46 AMPython GUI Development Simplified with PySimpleGUI Developing user-friendly graphical interfaces (GUIs) in Python can be challenging. However, PySimpleGUI offers a streamlined and accessible solution. This article explores PySimpleGUI's core functio

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

MantisBT

Mantis is an easy-to-deploy web-based defect tracking tool designed to aid in product defect tracking. It requires PHP, MySQL and a web server. Check out our demo and hosting services.

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),

Dreamweaver CS6

Visual web development tools

DVWA

Damn Vulnerable Web App (DVWA) is a PHP/MySQL web application that is very vulnerable. Its main goals are to be an aid for security professionals to test their skills and tools in a legal environment, to help web developers better understand the process of securing web applications, and to help teachers/students teach/learn in a classroom environment Web application security. The goal of DVWA is to practice some of the most common web vulnerabilities through a simple and straightforward interface, with varying degrees of difficulty. Please note that this software

ZendStudio 13.5.1 Mac

Powerful PHP integrated development environment