Commercial use of self-driving cars requires attention to algorithm safety regulations

Driven by the trends toward vehicle electrification, connectivity, and intelligence, and by rapid progress in autonomous vehicle technology, the automotive industry is approaching a fundamental change. In recent years, however, the safety challenges of autonomous vehicles have become increasingly prominent, and related accidents have to some extent weakened public confidence and trust in them. To respond effectively to the safety challenges posed by autonomous driving algorithms, a unified safety framework for those algorithms is needed, one that accelerates the transition of autonomous vehicles from research, development, and testing to commercial application.

## Main manifestations of safety issues in autonomous driving algorithms

China's current legislation on autonomous vehicles focuses mainly on road testing, demonstration applications, and vehicle data security. It has not yet formed a complete, unified safety regulatory framework for autonomous driving systems built around artificial intelligence algorithms. Future legislation needs to respond to three challenges.

First, general technical safety, which is the most important variable. Traditional cars are subject to an established regulatory framework (covering safety standards, testing and certification, product approval, and so on), and human drivers are subject to licensing and liability mechanisms. The safety standards for autonomous driving systems, by contrast, have yet to be established, so autonomous vehicles cannot yet demonstrate safety and compliance in the same way as traditional cars. At this stage, therefore, the core task is to establish the safety thresholds, safety standards, testing and certification methods, approval mechanisms, and other requirements for autonomous driving systems.

A key question is how safe autonomous driving systems need to be before policymakers and regulators allow the commercial deployment of autonomous vehicles. Society's bias against new technology can lead to an inappropriately high threshold for acceptable safety, for example the belief that self-driving cars must reach an absolute level of safety sufficient to achieve zero accidents. In the author's opinion, the safety threshold for autonomous driving algorithms should not be based on absolute goals (such as zero accidents or zero casualties) but should take the general level of human driving as the benchmark for a scientific and reasonable threshold. For example, the policy paper "Connected & Automated Mobility 2025: Realising the benefits of self-driving vehicles in the UK", released by the UK government in August 2022, sets out a safety threshold explicitly: self-driving vehicles should achieve the same level of safety as a "competent and careful human driver", which is higher than that of the average human driver.
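To make the contrast between an absolute threshold and a benchmark-based threshold concrete, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the `DrivingRecord` structure, the function names, the `required_ratio` parameter, and the sample figures are hypothetical and are not taken from the UK policy paper or from any regulation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DrivingRecord:
    """Aggregate incident count and distance driven (hypothetical structure)."""
    incidents: int
    kilometres: float

    def rate_per_million_km(self) -> float:
        """Incidents per million kilometres driven."""
        if self.kilometres <= 0:
            raise ValueError("kilometres must be positive")
        return self.incidents / self.kilometres * 1_000_000


def meets_absolute_threshold(av: DrivingRecord) -> bool:
    """Absolute goal: pass only with zero recorded incidents."""
    return av.incidents == 0


def meets_relative_threshold(
    av: DrivingRecord,
    human_benchmark: DrivingRecord,
    required_ratio: float = 1.0,
) -> bool:
    """Benchmark goal: the AV incident rate must not exceed
    required_ratio times the human benchmark rate.
    A required_ratio below 1.0 demands better-than-benchmark safety."""
    limit = required_ratio * human_benchmark.rate_per_million_km()
    return av.rate_per_million_km() <= limit


# Illustrative figures only; they are not real statistics.
av_fleet = DrivingRecord(incidents=3, kilometres=10_000_000)
human_drivers = DrivingRecord(incidents=50, kilometres=100_000_000)

passes_zero_accident_rule = meets_absolute_threshold(av_fleet)
passes_benchmark_rule = meets_relative_threshold(av_fleet, human_drivers)
```

With these made-up figures the same fleet fails the zero-accident rule but passes the benchmark rule, which is the distinction the paragraph above draws. A real assessment would also have to deal with statistical uncertainty, incident severity, and operating conditions, none of which this sketch attempts.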

Second, cybersecurity challenges. Cybersecurity is a key factor in the development and deployment of autonomous vehicles, and the associated risks will become the most complex and difficult threats to resolve. Overall, the cybersecurity challenges of autonomous vehicles have the following characteristics:

First, autonomous vehicles are more susceptible to cybersecurity risks than traditional vehicles. Self-driving cars are "robots on wheels". In addition to facing cybersecurity risks in the traditional sense, self-driving cars will also face new cybersecurity challenges, risks and threats brought by self-driving algorithms.

Second, the sources of cybersecurity risk for self-driving cars are more diverse. Across manufacturing, operation, maintenance, intelligent infrastructure, insurance, regulation, and other links in the chain, access to or control of autonomous vehicles by different entities may introduce cyber risks.

Third, autonomous vehicles face more diverse network intrusion methods. For example, hackers can target software vulnerabilities, launch physical attacks on autonomous vehicles by connecting malicious devices, or attack components of the autonomous vehicle ecosystem such as smart road infrastructure.

In addition, in terms of effects, hackers can use many types of attack, including failure attacks, operational attacks, data manipulation attacks, and data theft. The impact of these attacks can be large or small and should not be underestimated.

Fourth, the cybersecurity risks of autonomous vehicles have both breadth and depth, bringing harmful consequences on many fronts and at many levels. In terms of breadth, the software and hardware vulnerabilities of self-driving cars may be widespread, which means that cyber attacks will be amplified. In terms of depth, once a self-driving car has been compromised and taken over, the consequences can arise at different levels, including casualties, property damage, and data theft.

Third, ethical safety challenges. The most important ethical question for autonomous driving algorithms is how the algorithm should decide and act when faced with an unavoidable accident. In a moral dilemma in particular, what should it choose: to minimize casualties, or to protect the vehicle's occupants at all costs, even if that may mean sacrificing other road users such as pedestrians? The possibility of such dilemmas makes the interaction between technology and ethics unavoidable: the question is how to program complex human morality into the design of self-driving algorithms. Society has not yet reached a consensus on this issue.

## Building an algorithmic security framework for autonomous vehicles

The "Intelligent Vehicle Innovation and Development Strategy" sets the goal that, by 2025, the systems for technological innovation, industrial ecology, infrastructure, regulations and standards, product supervision, and network security for Chinese-standard intelligent vehicles will basically be formed, with large-scale production of intelligent vehicles capable of conditional autonomous driving and market application of highly autonomous intelligent vehicles in specific environments. To achieve this goal, it is necessary to speed up the revision and innovation of the legislative and regulatory framework designed for traditional cars and human drivers, and to establish new legal systems and regulatory frameworks that integrate autonomous vehicles into the current road traffic system. A core part of this work is to build a safety regulatory framework centered on autonomous driving algorithms, covering three dimensions: technical safety standards with approval and certification, cybersecurity certification, and ethical risk management.

First, establish new safety standards and certification mechanisms for autonomous driving systems. The country urgently needs new, unified safety standards for self-driving cars, shifting from standards that traditionally focus on vehicle hardware and human drivers to standards centered on self-driving algorithms. This means allowing innovative vehicle designs, such as a self-driving car with no cockpit, steering wheel, pedals, or rearview mirrors. In addition, to evaluate and verify the safety of autonomous driving systems more accurately and reliably, future legislation and policy need to set scientific and reasonable safety thresholds and benchmarks. Consideration can be given to requiring autonomous vehicles to achieve at least the same level of safety as a "competent and careful human driver", and to establishing a scientific and reasonable set of testing methods based on the technical level of on-road driving.

Second, establish a new cybersecurity framework for autonomous vehicles. Policymakers need to consider integrating traditional cybersecurity principles to ensure the cybersecurity of the autonomous vehicle as a whole. First, a cybersecurity certification mechanism should be established: only autonomous vehicles that have passed cybersecurity certification may be sold and used, and the mechanism needs to extend to the software and hardware supply chain. Second, the cybersecurity protection capabilities and requirements of autonomous vehicles, covering both technical and non-technical measures, need to be clarified. Third, industry and government need B2B, B2G, G2B, and other forms of data sharing, especially for data on safety-related events such as accidents, cybersecurity incidents, and disengagements of the autonomous driving system. An accident data reporting and sharing mechanism is of great significance for raising the level of the entire autonomous driving industry.

Third, establish ethical risk management mechanisms for autonomous driving algorithms. On the one hand, clear government supervision is needed to set standards for the ethical choices built into self-driving algorithms, so that those algorithms are ethically consistent with the general public interest and strike a balance between public acceptance and moral requirements. On the other hand, self-driving car companies need to strengthen the ethical governance of their technology: actively take primary responsibility for managing science and technology ethics, hold to the ethical bottom line, carry out ethical risk assessment and review of self-driving technology, establish mechanisms for monitoring and early warning of ethical risks, and strengthen ethics training for technical staff. For example, British regulators have proposed establishing a "Committee on AV Ethics and Safety" to better support the governance of autonomous vehicles.

In summary, widespread deployment and use of autonomous vehicles is a necessary condition for realizing many of their benefits, and widespread deployment in turn requires a suitable safety framework that accelerates the leap from testing to commercial use. But no reasonable law or policy can ignore public acceptance. In other words, if autonomous vehicles are to become the preferred mode of transportation, they must meet the expectations of their users and of society as a whole. These expectations include user satisfaction and safety as well as design values such as trust, responsibility, and transparency.

Safety regulations for autonomous vehicles must also take these expectations into account, and even temper those that are excessive. Based on these considerations, this article proposes a new safety regulatory framework for self-driving algorithms to address the algorithm safety challenges that must be faced before self-driving vehicles can be commercialized. In the long run, the commercial use of self-driving cars is only the starting point, not the end, for future traffic law. Changes in car design, traffic regulations, liability, insurance compensation, driving habits, and more will follow one after another.

AI For Runners And Athletes: We're Making Excellent ProgressApr 22, 2025 am 11:12 AM

AI For Runners And Athletes: We're Making Excellent ProgressApr 22, 2025 am 11:12 AMThere were some very insightful perspectives in this speech—background information about engineering that showed us why artificial intelligence is so good at supporting people’s physical exercise. I will outline a core idea from each contributor’s perspective to demonstrate three design aspects that are an important part of our exploration of the application of artificial intelligence in sports. Edge devices and raw personal data This idea about artificial intelligence actually contains two components—one related to where we place large language models and the other is related to the differences between our human language and the language that our vital signs “express” when measured in real time. Alexander Amini knows a lot about running and tennis, but he still

Jamie Engstrom On Technology, Talent And Transformation At CaterpillarApr 22, 2025 am 11:10 AM

Jamie Engstrom On Technology, Talent And Transformation At CaterpillarApr 22, 2025 am 11:10 AMCaterpillar's Chief Information Officer and Senior Vice President of IT, Jamie Engstrom, leads a global team of over 2,200 IT professionals across 28 countries. With 26 years at Caterpillar, including four and a half years in her current role, Engst

New Google Photos Update Makes Any Photo Pop With Ultra HDR QualityApr 22, 2025 am 11:09 AM

New Google Photos Update Makes Any Photo Pop With Ultra HDR QualityApr 22, 2025 am 11:09 AMGoogle Photos' New Ultra HDR Tool: A Quick Guide Enhance your photos with Google Photos' new Ultra HDR tool, transforming standard images into vibrant, high-dynamic-range masterpieces. Ideal for social media, this tool boosts the impact of any photo,

What are the TCL Commands in SQL? - Analytics VidhyaApr 22, 2025 am 11:07 AM

What are the TCL Commands in SQL? - Analytics VidhyaApr 22, 2025 am 11:07 AMIntroduction Transaction Control Language (TCL) commands are essential in SQL for managing changes made by Data Manipulation Language (DML) statements. These commands allow database administrators and users to control transaction processes, thereby

How to Make Custom ChatGPT? - Analytics VidhyaApr 22, 2025 am 11:06 AM

How to Make Custom ChatGPT? - Analytics VidhyaApr 22, 2025 am 11:06 AMHarness the power of ChatGPT to create personalized AI assistants! This tutorial shows you how to build your own custom GPTs in five simple steps, even without coding skills. Key Features of Custom GPTs: Create personalized AI models for specific t

Difference Between Method Overloading and OverridingApr 22, 2025 am 10:55 AM

Difference Between Method Overloading and OverridingApr 22, 2025 am 10:55 AMIntroduction Method overloading and overriding are core object-oriented programming (OOP) concepts crucial for writing flexible and efficient code, particularly in data-intensive fields like data science and AI. While similar in name, their mechanis

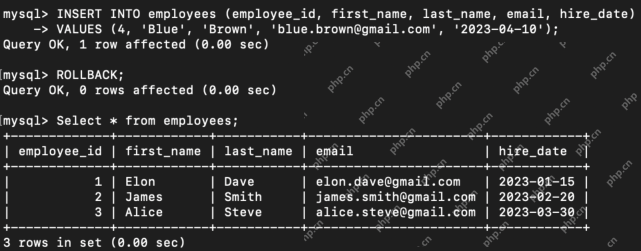

Difference Between SQL Commit and SQL RollbackApr 22, 2025 am 10:49 AM

Difference Between SQL Commit and SQL RollbackApr 22, 2025 am 10:49 AMIntroduction Efficient database management hinges on skillful transaction handling. Structured Query Language (SQL) provides powerful tools for this, offering commands to maintain data integrity and consistency. COMMIT and ROLLBACK are central to t

PySimpleGUI: Simplifying GUI Development in Python - Analytics VidhyaApr 22, 2025 am 10:46 AM

PySimpleGUI: Simplifying GUI Development in Python - Analytics VidhyaApr 22, 2025 am 10:46 AMPython GUI Development Simplified with PySimpleGUI Developing user-friendly graphical interfaces (GUIs) in Python can be challenging. However, PySimpleGUI offers a streamlined and accessible solution. This article explores PySimpleGUI's core functio
