After decades of sustained work by researchers and engineers, real-world artificial intelligence has finally reached a tipping point. The striking performance of AI models such as ChatGPT and DALL-E has convinced many people that increasingly capable AI systems are catching up with humans.

The capabilities of generative AI are so diverse and distinctive that it's hard to believe they come from a machine. But once the sense of wonder wears off, so will generative AI's star power. In some applications, AI has also revealed the limits of its contextual awareness and common sense, and many people are now paying closer attention to, or worrying about, the shortcomings of generative AI.

Here are 10 shortcomings or flaws of generative AI that people are worried about.

1. Plagiarized content

When researchers build generative AI models such as DALL-E and ChatGPT, what these models produce are essentially new patterns derived from the millions of examples in their training sets. The result is a composite of cut-and-paste extractions from many data sources, which, when a human does it, is called “plagiarism.”

Of course, humans also learn by imitation, but in some cases this kind of copying is undesirable or even illegal. Generative AI produces large volumes of text, and some of it is plagiarized to a greater or lesser degree. Sometimes, though, enough mixing or synthesis is involved that even a college professor would struggle to identify the original source. Either way, what the generated content lacks is originality. However powerful these models appear, they do not produce truly innovative work.

2. Copyright issues

Plagiarism is mainly a problem that schools try to stamp out, but copyright law governs the marketplace. Someone who takes another person's intellectual property or work can be sued or fined millions of dollars. But what about AI systems? Do the same rules apply to them? Copyright is a complex subject, and the legal status of generative AI will take years to settle. What is worth remembering is that once AI starts replacing some human jobs, there will be lawsuits filed under copyright law.

3. Obtaining human labor for free

The legal issues raised by generative AI go beyond plagiarism and copyright infringement; some lawyers have already filed lawsuits over the ethical questions AI raises. For example, may a company that makes a drawing program collect data on how its users draw and then use that data to train AI? Should people be compensated for the use of this creative labor? Much of AI's success stems from access to data. So what happens when the people who generated the data want to profit from it? What is fair? What is legal?

4. Exploiting information without creating knowledge

AI is good at imitating the kind of intelligence that takes humans years to develop. When a scholar brings an obscure 17th-century artist to light, or an artist composes new music in an almost forgotten Renaissance tonal style, people admire their deep knowledge and skill, because it takes years of study and practice. When an AI can do the same thing after only a few months of training, the results can be strikingly precise and correct, yet something always seems to be missing.

A well-trained AI system can absorb enough information to grasp a subject, and can even decipher Mayan hieroglyphs. AI appears to imitate the playful and unpredictable side of human creativity, but it cannot truly do so. Yet unpredictability is what drives creative innovation: an industry like fashion is not only obsessed with change but defined by it. In the end, AI and humans each have their own areas of strength.

5. Intelligence growth is limited

When it comes to intelligence, AI is essentially mechanical and rule-based. Once an AI system has been trained on a data set, the resulting model does not really change. Some engineers and data scientists envision gradually retraining AI models over time so that the AI learns to adapt.

In most cases, however, the idea is to build a complex set of neurons that encodes specific knowledge in a fixed form. That may suit some industries. The danger is that AI's growth in intelligence will always be constrained by the limits of its training data. What happens when humans become so dependent on generative AI that they stop producing new material for training models?

6. Privacy and security need to be improved

Training AI requires a great deal of data, and people are not always sure what a neural network will output. What if an AI leaks personal information from its training data? Worse, AI is much harder to control because it is designed to be highly flexible. A relational database can restrict access to specific tables that hold personal information, but an AI system can be queried in dozens of different ways.

Attackers will quickly learn how to ask the right questions in the right way to extract the sensitive data they want. Suppose the latitude and longitude of a particular facility are kept secret. An attacker might instead ask about the precise local timing of events at that location, and a dutiful AI system might answer, letting the attacker work backward to the coordinates. Training AI to protect private data is therefore a difficult problem.
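To make the relational-database contrast in this section concrete, here is a minimal sketch using Python's built-in `sqlite3` module, whose `Connection.set_authorizer` hook lets an application deny reads from chosen tables. The `products` and `customers` tables and the `try_query` helper are hypothetical, made up for illustration. SQLite itself has no user accounts; server databases such as PostgreSQL typically express the same idea with `GRANT` and `REVOKE`.

```python
import sqlite3

# Hypothetical schema for illustration: one public table and one table
# holding personal information.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, address TEXT);
    INSERT INTO products (name) VALUES ('widget');
    INSERT INTO customers (name, address) VALUES ('Alice', '1 Main St');
""")

PROTECTED_TABLES = {"customers"}

def authorizer(action, arg1, arg2, db_name, trigger_name):
    # For SQLITE_READ actions, arg1 is the table name and arg2 the column.
    if action == sqlite3.SQLITE_READ and arg1 in PROTECTED_TABLES:
        return sqlite3.SQLITE_DENY
    return sqlite3.SQLITE_OK

# Install the hook after setup so the inserts above are unaffected.
conn.set_authorizer(authorizer)

def try_query(sql):
    """Run a query, returning its rows or a 'denied: ...' message."""
    try:
        return conn.execute(sql).fetchall()
    except sqlite3.DatabaseError as exc:
        return f"denied: {exc}"

print(try_query("SELECT name FROM products"))      # [('widget',)]
print(try_query("SELECT address FROM customers"))  # a 'denied: ...' message
```

The rule only works because the sensitive data sits in a named table the database can lock down; as noted above, an AI system can be queried in many different ways, with no equivalent table to restrict.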

7. Generating bias

Even early mainframe programmers understood the heart of the problem and coined the phrase "garbage in, garbage out" (GIGO) to describe it. Many of AI's problems stem from poor training data: if a data set is inaccurate or biased, the model's output will reflect it.

The hardware at the core of generative AI runs on logic, but the humans who build and train these machines do not. Bias and errors have been shown to find their way into AI models. Someone may have used biased data to build the model, overridden the AI to stop it from answering particular hot-button questions, or fed in canned answers that skew the system.
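A toy sketch of "garbage in, garbage out", assuming a deliberately naive stand-in for a model that simply returns the most frequent label it saw for each input. The `train` function and the skewed data set are hypothetical; real generative models are far more complex, but the sketch shows in miniature how a skew in the training data resurfaces in the output.

```python
from collections import Counter, defaultdict

def train(examples):
    """Hypothetical naive 'model': map each input to its most frequent label."""
    counts = defaultdict(Counter)
    for feature, label in examples:
        counts[feature][label] += 1
    return {feature: c.most_common(1)[0][0] for feature, c in counts.items()}

# Hypothetical, deliberately skewed training data: every "nurse" example
# is labeled "she" and every "engineer" example is labeled "he".
skewed_data = [
    ("nurse", "she"), ("nurse", "she"), ("nurse", "she"),
    ("engineer", "he"), ("engineer", "he"), ("engineer", "he"),
]

model = train(skewed_data)
print(model)  # {'nurse': 'she', 'engineer': 'he'}
```

Nothing in `train` is biased on its own; the stereotype in the output comes entirely from the data it was given.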

8. AI makes mistakes too

It’s easy to forgive AI models their mistakes because they do so many other things well; the trouble is that many of those mistakes are hard to predict, because AI thinks differently from humans. For example, many users of text-to-image tools have found that the AI gets fairly simple things wrong, such as counting.

Humans learn basic arithmetic in elementary school and then apply it in many ways. Ask a 10-year-old to draw an octopus, for example, and they will usually give it eight legs. Current AI models tend to stumble when mathematical abstraction has to be applied in context. This particular error could easily be fixed if model builders paid some attention to it, but other errors would remain. Machine intelligence differs from human intelligence, so the mistakes machines make will differ too.

9. Deceiving humans

Humans are sometimes deceived by AI systems without noticing the error. For example, if an AI says that King Henry VIII of England had his wives executed, most people will believe it, because they don't know that history well either. People tend to assume that the answers AI provides are true and correct.

For users of generative AI, the toughest problem is knowing when an AI system has gone wrong. Machines are widely assumed to be less capable of lying than humans, which makes them all the more dangerous. An AI system can produce some perfectly accurate information and then drift into guesswork or outright falsehood, often without users noticing. Used car dealers and poker players usually know when they are lying, and most have a tell, but AI has none.

10. Infinite replicability

Digital content can be copied endlessly, which has already strained many economic models built around scarcity. Generative AI will break these models further: it will put some writers and artists out of work and upend many of the economic rules we live by.

Will ad-supported content still work when both ads and content can be endlessly remixed and regenerated? Will the free part of the internet descend into a world of "bots clicking on web ads," with all of it generated and endlessly replicated by generative AI?

Such limitless abundance could disrupt the digital economy. If non-fungible tokens could be copied, for example, would people keep paying for them? If creating art were this easy, would it still be respected? Would it still be unique? When everything is taken for granted, will everything lose its value?

Instead of trying to answer these questions yourself, look to generative AI for an interesting and strange answer.

The above is the full text of "ChatGPT is popular, but 10 generative AI flaws are worrisome," published on the PHP Chinese website.

Sam's Club Bets On AI To Eliminate Receipt Checks And Enhance RetailApr 22, 2025 am 11:29 AM

Sam's Club Bets On AI To Eliminate Receipt Checks And Enhance RetailApr 22, 2025 am 11:29 AMRevolutionizing the Checkout Experience Sam's Club's innovative "Just Go" system builds on its existing AI-powered "Scan & Go" technology, allowing members to scan purchases via the Sam's Club app during their shopping trip.

Nvidia's AI Omniverse Expands At GTC 2025Apr 22, 2025 am 11:28 AM

Nvidia's AI Omniverse Expands At GTC 2025Apr 22, 2025 am 11:28 AMNvidia's Enhanced Predictability and New Product Lineup at GTC 2025 Nvidia, a key player in AI infrastructure, is focusing on increased predictability for its clients. This involves consistent product delivery, meeting performance expectations, and

Exploring the Capabilities of Google's Gemma 2 ModelsApr 22, 2025 am 11:26 AM

Exploring the Capabilities of Google's Gemma 2 ModelsApr 22, 2025 am 11:26 AMGoogle's Gemma 2: A Powerful, Efficient Language Model Google's Gemma family of language models, celebrated for efficiency and performance, has expanded with the arrival of Gemma 2. This latest release comprises two models: a 27-billion parameter ver

The Next Wave of GenAI: Perspectives with Dr. Kirk Borne - Analytics VidhyaApr 22, 2025 am 11:21 AM

The Next Wave of GenAI: Perspectives with Dr. Kirk Borne - Analytics VidhyaApr 22, 2025 am 11:21 AMThis Leading with Data episode features Dr. Kirk Borne, a leading data scientist, astrophysicist, and TEDx speaker. A renowned expert in big data, AI, and machine learning, Dr. Borne offers invaluable insights into the current state and future traje

AI For Runners And Athletes: We're Making Excellent ProgressApr 22, 2025 am 11:12 AM

AI For Runners And Athletes: We're Making Excellent ProgressApr 22, 2025 am 11:12 AMThere were some very insightful perspectives in this speech—background information about engineering that showed us why artificial intelligence is so good at supporting people’s physical exercise. I will outline a core idea from each contributor’s perspective to demonstrate three design aspects that are an important part of our exploration of the application of artificial intelligence in sports. Edge devices and raw personal data This idea about artificial intelligence actually contains two components—one related to where we place large language models and the other is related to the differences between our human language and the language that our vital signs “express” when measured in real time. Alexander Amini knows a lot about running and tennis, but he still

Jamie Engstrom On Technology, Talent And Transformation At CaterpillarApr 22, 2025 am 11:10 AM

Jamie Engstrom On Technology, Talent And Transformation At CaterpillarApr 22, 2025 am 11:10 AMCaterpillar's Chief Information Officer and Senior Vice President of IT, Jamie Engstrom, leads a global team of over 2,200 IT professionals across 28 countries. With 26 years at Caterpillar, including four and a half years in her current role, Engst

New Google Photos Update Makes Any Photo Pop With Ultra HDR QualityApr 22, 2025 am 11:09 AM

New Google Photos Update Makes Any Photo Pop With Ultra HDR QualityApr 22, 2025 am 11:09 AMGoogle Photos' New Ultra HDR Tool: A Quick Guide Enhance your photos with Google Photos' new Ultra HDR tool, transforming standard images into vibrant, high-dynamic-range masterpieces. Ideal for social media, this tool boosts the impact of any photo,

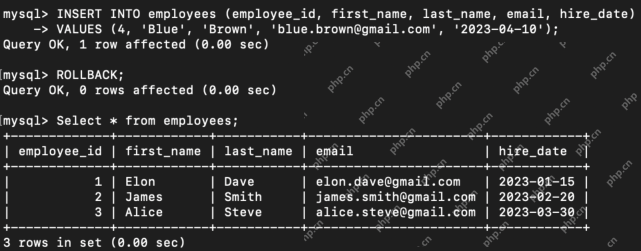

What are the TCL Commands in SQL? - Analytics VidhyaApr 22, 2025 am 11:07 AM

What are the TCL Commands in SQL? - Analytics VidhyaApr 22, 2025 am 11:07 AMIntroduction Transaction Control Language (TCL) commands are essential in SQL for managing changes made by Data Manipulation Language (DML) statements. These commands allow database administrators and users to control transaction processes, thereby

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

SublimeText3 English version

Recommended: Win version, supports code prompts!

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),

SublimeText3 Mac version

God-level code editing software (SublimeText3)

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

Atom editor mac version download

The most popular open source editor